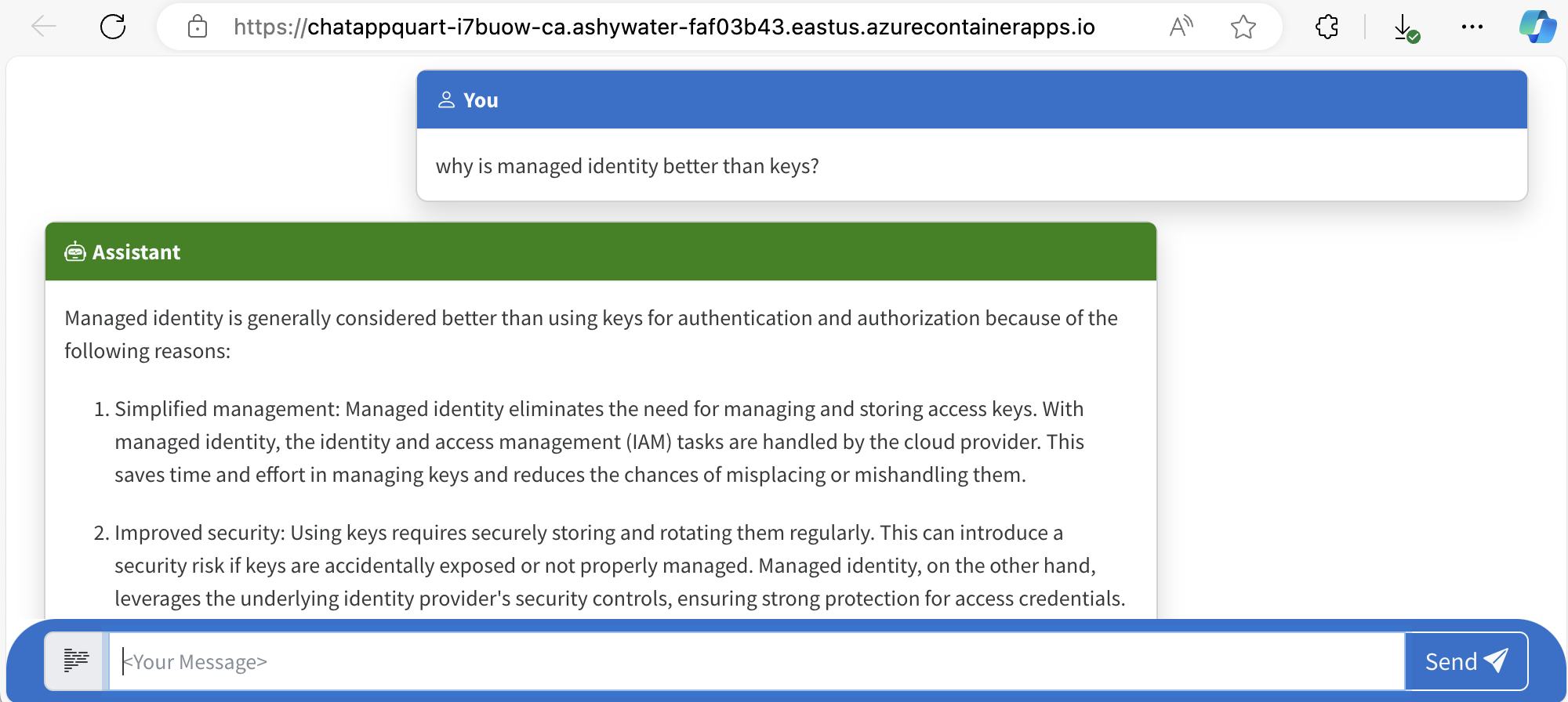

This repository includes a Python app that uses Azure OpenAI to generate responses to user messages.

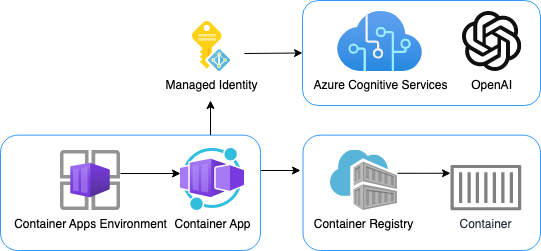

The project includes all the infrastructure and configuration needed to provision Azure OpenAI resources and deploy the app to Azure Container Apps using the Azure Developer CLI. By default, the app will use managed identity to authenticate with Azure OpenAI.

We recommend first going through the deploying steps before running this app locally, since the local app needs credentials for Azure OpenAI to work properly.

- Features

- Architecture diagram

- Opening the project

- Deploying

- Development server

- Costs

- Security Guidelines

- Resources

- A Python Quart app that uses the openai package to generate responses to user messages.

- A basic HTML/JS frontend that streams responses from the backend using JSON Lines over a ReadableStream.

- Bicep files for provisioning Azure resources, including Azure OpenAI, Azure Container Apps, Azure Container Registry, Azure Log Analytics, and RBAC roles.

- Support for using local LLMs during development.
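The JSON Lines streaming listed above can be illustrated with a small standard-library sketch. It is not the code from this repository: the function names and the `delta` payload key are hypothetical, and the real backend and frontend define their own message shape. It shows the core idea only: the sender writes one JSON document per line, and the receiver buffers incoming pieces until it has a complete line to decode.

```python
import json
from typing import Iterable, Iterator


def to_json_lines(chunks: Iterable[dict]) -> Iterator[str]:
    """Serialize each chunk as one JSON document followed by a newline."""
    for chunk in chunks:
        yield json.dumps(chunk, ensure_ascii=False) + "\n"


def parse_json_lines(pieces: Iterable[str]) -> Iterator[dict]:
    """Reassemble complete lines from arbitrarily split pieces and decode them."""
    buffer = ""
    for piece in pieces:
        buffer += piece
        # A line is only decoded once its terminating newline has arrived.
        while "\n" in buffer:
            line, buffer = buffer.split("\n", 1)
            if line.strip():
                yield json.loads(line)
    # Decode any trailing document that arrived without a final newline.
    if buffer.strip():
        yield json.loads(buffer)


# Hypothetical response chunks; the real payload shape is defined by the app.
chunks = [{"delta": "Hel"}, {"delta": "lo"}, {"delta": "!"}]
wire = "".join(to_json_lines(chunks))

# Simulate a network that splits the stream at arbitrary boundaries.
pieces = [wire[i:i + 7] for i in range(0, len(wire), 7)]
message = "".join(event["delta"] for event in parse_json_lines(pieces))
print(message)
```

Buffering matters because a stream reader can hand back a fragment that ends in the middle of a JSON document, so the receiver cannot decode each fragment on its own.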

You have a few options for getting started with this template. The quickest way to get started is GitHub Codespaces, since it will set up all the tools for you, but you can also set it up locally.

You can run this template virtually by using GitHub Codespaces. The button will open a web-based VS Code instance in your browser:

- Open the template (this may take several minutes):

- Open a terminal window.

- Continue with the deploying steps.

A related option is VS Code Dev Containers, which will open the project in your local VS Code using the Dev Containers extension:

- Start Docker Desktop (install it if not already installed).

- Open the project:

- In the VS Code window that opens, once the project files show up (this may take several minutes), open a terminal window.

- Continue with the deploying steps.

If you're not using one of the above options for opening the project, then you'll need to:

- Make sure the following tools are installed:

- Download the project code: `azd init -t openai-chat-app-entra-auth-local`

- Open the project folder.

- Create a Python virtual environment and activate it.

- Install required Python packages: `pip install -r requirements-dev.txt`

- Install the app as an editable package: `python3 -m pip install -e src`

- Continue with the deploying steps.

Once you've opened the project in Codespaces, in Dev Containers, or locally, you can deploy it to Azure.

- Sign up for a free Azure account and create an Azure Subscription.

- Request access to Azure OpenAI Service by completing the form at https://aka.ms/oai/access and awaiting approval.

- Check that you have the necessary permissions:

  - Your Azure account must have `Microsoft.Authorization/roleAssignments/write` permissions, which are included in roles such as Role Based Access Control Administrator, User Access Administrator, or Owner. If you don't have subscription-level permissions, you must be granted RBAC for an existing resource group and deploy to that existing group.

  - Your Azure account also needs `Microsoft.Resources/deployments/write` permissions on the subscription level.

- Log in to Azure: `azd auth login`

- Provision and deploy all the resources: `azd up`

  It will prompt you to provide an `azd` environment name (like "chat-app"), select a subscription from your Azure account, and select a location where OpenAI is available (like "francecentral"). Then it will provision the resources in your account and deploy the latest code. If you get an error or timeout with deployment, changing the location can help, as there may be availability constraints for the OpenAI resource.

- When `azd` has finished deploying, you'll see an endpoint URI in the command output. Visit that URI, and you should see the chat app! 🎉

- When you've made any changes to the app code, you can just run: `azd deploy`

This project includes a GitHub workflow for deploying the resources to Azure on every push to main. That workflow requires several Azure-related authentication secrets to be stored as GitHub Actions secrets. To set that up, run: `azd pipeline config`

Assuming you've run the steps in Opening the project and the steps in Deploying, you can now run the Quart app in your development environment: `python -m quart --app src.quartapp run --port 50505 --reload`

This will start the app on port 50505, and you can access it at http://localhost:50505.

To save costs during development, you may point the app at a local LLM server.
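One way such a switch could work is to read the target from environment variables and build different connection settings for each case. The sketch below is illustrative only: the variable names `LLM_HOST`, `LOCAL_LLM_ENDPOINT`, and `AZURE_OPENAI_ENDPOINT_URL` are hypothetical and are not taken from this repository, and the default local URL assumes an OpenAI-compatible server such as Ollama listening on its usual port.

```python
from typing import Mapping


def resolve_llm_settings(env: Mapping[str, str]) -> dict:
    """Pick connection settings for a local server or for Azure OpenAI.

    All environment variable names here are hypothetical placeholders.
    """
    if env.get("LLM_HOST", "azure") == "local":
        return {
            "kind": "local",
            # Assumed default: an OpenAI-compatible server on localhost.
            "base_url": env.get("LOCAL_LLM_ENDPOINT", "http://localhost:11434/v1"),
            # Local servers typically ignore the key, but clients require one.
            "api_key": "no-key-required",
        }
    return {
        "kind": "azure",
        "endpoint": env["AZURE_OPENAI_ENDPOINT_URL"],
        "auth": "managed-identity",
    }


local = resolve_llm_settings({"LLM_HOST": "local"})
azure = resolve_llm_settings({"AZURE_OPENAI_ENDPOINT_URL": "https://example.openai.azure.com"})
print(local["kind"], azure["kind"])
```

Keeping the decision in one function means the rest of the app does not need to know which backend is in use. Check the project's own configuration files for the variable names it actually reads.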

Pricing varies per region and usage, so it isn't possible to predict exact costs for your usage. The majority of the Azure resources used in this infrastructure are on usage-based pricing tiers. However, Azure Container Registry has a fixed cost per registry per day.

You can try the Azure pricing calculator for the resources:

- Azure OpenAI Service: S0 tier, ChatGPT model. Pricing is based on token count.

- Azure Container App: Consumption tier with 0.5 CPU, 1 GiB memory/storage. Pricing is based on resource allocation, and each month allows for a certain amount of free usage.

- Azure Container Registry: Basic tier.

- Log analytics: Pay-as-you-go tier. Costs based on data ingested.
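Because Azure OpenAI is billed by token count, a rough per-request estimate is simple arithmetic once you know the rates for your model and region. The sketch below uses made-up placeholder prices, not real Azure rates, and the function name is hypothetical. Look up the current figures in the pricing calculator before relying on any number.

```python
def estimate_token_cost(
    prompt_tokens: int,
    completion_tokens: int,
    input_price_per_1k: float,
    output_price_per_1k: float,
) -> float:
    """Estimate the cost of one request from token counts and per-1,000-token prices."""
    input_cost = prompt_tokens / 1000 * input_price_per_1k
    output_cost = completion_tokens / 1000 * output_price_per_1k
    return input_cost + output_cost


# Placeholder prices in arbitrary currency units; these are NOT real Azure rates.
cost = estimate_token_cost(
    prompt_tokens=1200,
    completion_tokens=300,
    input_price_per_1k=0.5,
    output_price_per_1k=1.5,
)
print(f"Estimated cost: {cost:.2f}")
```

Multiplying the per-request estimate by the expected number of requests gives a first approximation of the Azure OpenAI portion of the bill. The other resources listed above are priced separately.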

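To avoid unnecessary costs, remove the deployed resources when the app is no longer in use by running `azd down`.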

This template uses Managed Identity for authenticating to the Azure OpenAI service.
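As an illustration of what keyless authentication looks like in code, the sketch below builds an Azure OpenAI client from a managed identity credential using the `azure-identity` and `openai` packages. It is not the code from this repository: the function name and parameters are hypothetical, it uses the synchronous client for brevity (an async app would more likely use the async equivalents), and it only works where a managed identity is available, such as inside the deployed container app.

```python
from typing import Optional

# Token scope used when requesting Microsoft Entra tokens for Azure OpenAI.
COGNITIVE_SERVICES_SCOPE = "https://cognitiveservices.azure.com/.default"


def create_azure_openai_client(endpoint: str, api_version: str, client_id: Optional[str] = None):
    """Build an Azure OpenAI client that authenticates with a managed identity.

    Hypothetical helper: endpoint, api_version, and client_id are supplied by the caller.
    """
    # Imported lazily so this module loads even where the SDKs are not installed.
    from azure.identity import ManagedIdentityCredential, get_bearer_token_provider
    from openai import AzureOpenAI

    # client_id selects a user-assigned identity; None uses the system-assigned one.
    credential = ManagedIdentityCredential(client_id=client_id)
    # The provider fetches and refreshes tokens on demand, so no API key is stored.
    token_provider = get_bearer_token_provider(credential, COGNITIVE_SERVICES_SCOPE)
    return AzureOpenAI(
        azure_endpoint=endpoint,
        azure_ad_token_provider=token_provider,
        api_version=api_version,
    )
```

The identity also needs an RBAC role assignment on the Azure OpenAI resource before tokens are accepted, which is why the provisioning step requires permission to write role assignments.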

Additionally, we have added a GitHub Action that scans the infrastructure-as-code files and generates a report containing any detected issues. To ensure continued best practices in your own repository, we recommend that anyone creating solutions based on our templates ensure that the GitHub secret scanning setting is enabled.

You may want to consider additional security measures, such as:

- Protecting the Azure Container Apps instance with a firewall and/or Virtual Network.

- OpenAI Chat Application with Microsoft Entra Authentication - MSAL SDK: Similar to this project, but adds user authentication with Microsoft Entra using the Microsoft Graph SDK and MSAL SDK.

- OpenAI Chat Application with Microsoft Entra Authentication - Built-in Auth: Similar to this project, but adds user authentication with Microsoft Entra using the Microsoft Graph SDK and built-in authentication feature of Azure Container Apps.

- RAG chat with Azure AI Search + Python: A more advanced chat app that uses Azure AI Search to ground responses in domain knowledge. Includes user authentication with Microsoft Entra as well as data access controls.

- Develop Python apps that use Azure AI services