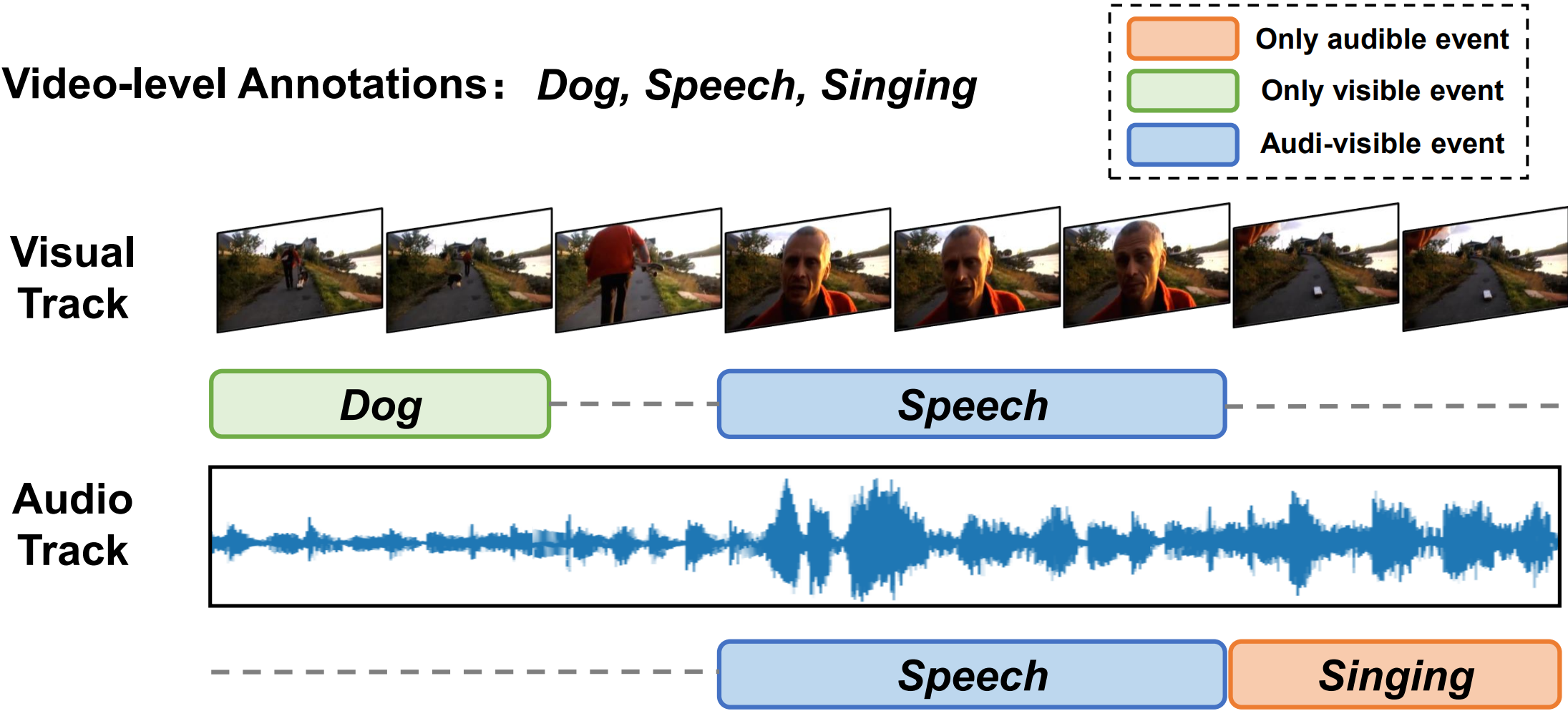

Collecting Cross-Modal Presence-Absence Evidence for Weakly-Supervised Audio-Visual Event Perception

Junyu Gao, Mengyuan Chen, Changsheng Xu

Code for the CVPR 2023 paper "Collecting Cross-Modal Presence-Absence Evidence for Weakly-Supervised Audio-Visual Event Perception".

**Typo**: In the framework figure of the paper, we incorrectly labeled the "Absence/Presence Evidence Collector". Here's the correct version. We are sorry for the typo.

The requirements and dependencies we used are listed below.

- GPU: GeForce RTX 3090

- Python: 3.8.6

- PyTorch: 1.12.1

- Other: Pandas, Openpyxl, Wandb (optional)

- Please download the preprocessed audio and visual features from https://github.com/YapengTian/AVVP-ECCV20.

- Put the downloaded features into data/feats/, and put the annotation files into data/annotations/.
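Before starting a run, it can help to confirm that the listed packages are installed and that the two data directories are populated. The following is a minimal sketch, not part of the repository: the function names are hypothetical, and the import names `torch`, `pandas`, `openpyxl`, and `wandb` are assumed to correspond to the packages listed above.

```python
import importlib.util
import sys
from pathlib import Path

# Assumed import names for the packages listed above; wandb is optional.
REQUIRED = ["torch", "pandas", "openpyxl"]
OPTIONAL = ["wandb"]
# Directories named in the data-preparation steps, relative to the repo root.
DATA_DIRS = ["data/feats", "data/annotations"]


def missing_packages(names):
    """Return the top-level packages that cannot be found (nothing is imported)."""
    return [n for n in names if importlib.util.find_spec(n) is None]


def empty_or_missing_dirs(root, rel_dirs):
    """Return the directories that do not exist or contain no entries."""
    root = Path(root)
    bad = []
    for rel in rel_dirs:
        d = root / rel
        if not d.is_dir() or not any(d.iterdir()):
            bad.append(rel)
    return bad


def preflight(root="."):
    """Collect readable problem descriptions; an empty list means nothing was flagged."""
    problems = [f"missing package: {n}" for n in missing_packages(REQUIRED)]
    problems += [
        f"missing or empty directory: {d}"
        for d in empty_or_missing_dirs(root, DATA_DIRS)
    ]
    return problems


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    for name in missing_packages(OPTIONAL):
        print(f"optional package not installed: {name}")
    for issue in preflight():
        print(issue)
```

Run it from the repository root. The check only verifies that the directories are non-empty; it does not validate the contents of the feature or annotation files.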

Run ./train.sh.

Download the checkpoint file from Google Drive, and put it into save/pretrained/.

Then run ./test.sh.
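If ./test.sh cannot find the checkpoint, a quick look at save/pretrained/ usually settles it. The sketch below is an illustration only: `list_checkpoints` is a hypothetical helper, and no particular checkpoint file name or extension is assumed.

```python
from pathlib import Path


def list_checkpoints(pretrained_dir="save/pretrained"):
    """Return the regular files in the checkpoint directory, sorted by name."""
    d = Path(pretrained_dir)
    if not d.is_dir():
        return []
    return sorted(p for p in d.iterdir() if p.is_file())


if __name__ == "__main__":
    found = list_checkpoints()
    if found:
        for p in found:
            # Report the size so a truncated download is easy to spot.
            print(f"{p.name}: {p.stat().st_size / 1e6:.1f} MB")
    else:
        print("no checkpoint found in save/pretrained/")
```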

If you find the code useful in your research, please consider citing it:

@inproceedings{junyu2023CVPR_CMPAE,
  author = {Gao, Junyu and Chen, Mengyuan and Xu, Changsheng},
  title = {Collecting Cross-Modal Presence-Absence Evidence for Weakly-Supervised Audio-Visual Event Perception},
  booktitle = {IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2023}
}

See MIT License

This repo contains modified code from:

- JoMoLD: for implementation of the backbone JoMoLD (ECCV-2022).

We sincerely thank the owners of the great repos!