VizNet: Data, System, and Analysis

This repository includes scripts to retrieve the four corpora (Plotly, ManyEyes, Webtables, Open Data Portal) included in VizNet, characterize those datasets with statistical features, sample high-quality datasets within constraints to replicate Kim and Heer (EuroVis 2018), and run the experimental system for crowdsourced evaluation.

What is VizNet?

Collecting, curating, and cleaning data is a laborious and expensive process. Visualization researchers have relied on running studies with ad hoc, sometimes synthetically generated, datasets. However, such datasets do not display the same characteristics as data found in the wild. VizNet is a centralized and large-scale repository of data as used in practice, compiled from the web, open data repositories, and online visualization platforms.

VizNet enables data scientists and visualization researchers to aggregate data, enumerate visual encodings, and crowdsource effectiveness evaluations.

What is VizNet useful for?

Researchers can use the VizNet repository to conduct studies with real-world data. For example, we have used VizNet to replicate a study assessing the influence of task and data distribution on the effectiveness of visual encodings. We then trained a machine learning model to predict the perceptual effectiveness of (data, visualization, task) triplets. Such learned models could be used to power visualization recommendation systems. We also provide scripts for sampling high-quality data subject to experimental constraints and for verifying the data quality of samples, as well as a system for crowdsourcing effectiveness evaluations. We will provide anonymized experimental results and associated analysis scripts shortly.

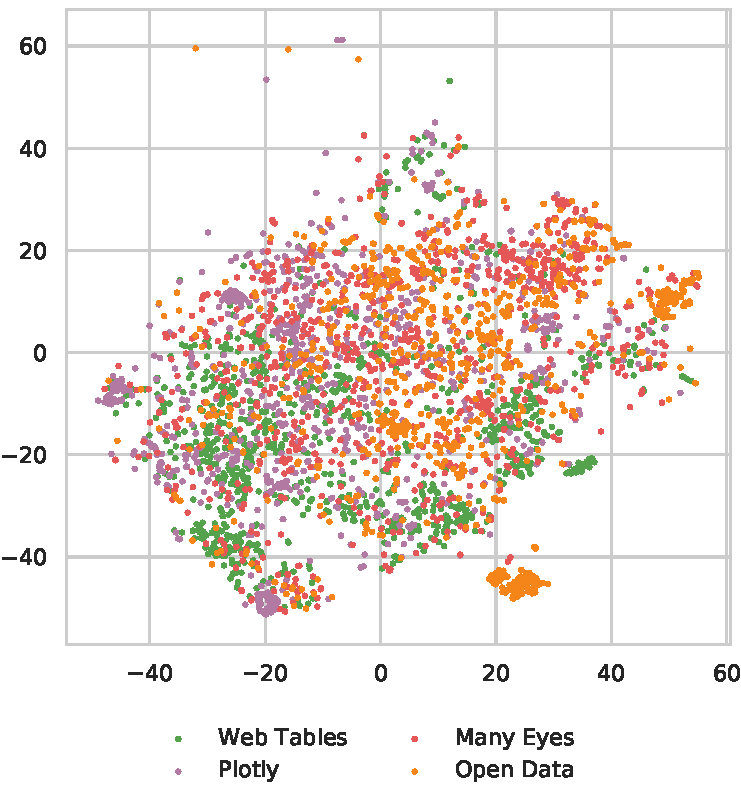

If experiment design demands the use of synthetically generated data, researchers can assess the ecological validity of synthetic data by comparing it against real distributions. We provide an overview of statistical properties of datasets within VizNet, a script for extracting statistical features from the corpora, a notebook for characterizing the features, and a notebook for clustering datasets embedded in a two-dimensional latent space, as shown in the figure above. Each point in the figure is a table from one of the four data sources included in VizNet; color encodes the source.
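As a rough illustration of comparing a synthetic column against a real one, the sketch below computes a few summary features and a two-sample Kolmogorov-Smirnov statistic using only the Python standard library. It is not the code in characterization/extract_features.py; the function names `summarize` and `ks_statistic` are hypothetical, and the two samples are randomly generated stand-ins rather than VizNet data.

```python
import random
import statistics


def summarize(values):
    """Return a few simple statistical features of a numeric column."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    # Population skewness (third standardized moment); 0.0 for a constant column.
    skew = (
        sum((v - mean) ** 3 for v in values) / (len(values) * std ** 3)
        if std > 0
        else 0.0
    )
    return {"mean": mean, "std": std, "skewness": skew}


def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between
    the empirical cumulative distribution functions of the two samples."""
    a, b = sorted(sample_a), sorted(sample_b)
    i = j = 0
    max_gap = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # Advance past every value equal to x in both samples.
        while i < len(a) and a[i] == x:
            i += 1
        while j < len(b) and b[j] == x:
            j += 1
        max_gap = max(max_gap, abs(i / len(a) - j / len(b)))
    return max_gap


if __name__ == "__main__":
    random.seed(0)
    # Stand-in for a skewed real-world column (assumption, not VizNet data).
    real = [random.lognormvariate(0.0, 1.0) for _ in range(1000)]
    # Stand-in for a naively generated synthetic column.
    synthetic = [random.uniform(0.0, 5.0) for _ in range(1000)]
    print("real:", summarize(real))
    print("synthetic:", summarize(synthetic))
    print("KS statistic:", ks_statistic(real, synthetic))
```

A statistic near 0 suggests the synthetic column follows a distribution similar to the real one, while a value near 1 indicates that the two barely overlap. With SciPy installed, `scipy.stats.ks_2samp` provides the same statistic along with a p-value.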

By using data from the VizNet repository, or data characterized along similar dimensions, researchers can compare design techniques against a common baseline.

Lastly, VizNet can be used for more than visualization research. For example, we are currently developing a machine learning approach to semantic type detection using the VizNet repository. We also anticipate researchers using VizNet to study and develop measures of data quality, which is an important feature of large-scale repositories.

What's in this repository?

raw/

└───retrieve_corpora.sh: Shell script to download and unzip raw corpora

characterization/

└───extract_features.py: Entry point for extracting features from a specific corpus

└───feature_extraction/: Modules for extracting and aggregating features from datasets

└───Descriptive Statistics.ipynb: Notebook for visualizing and characterizing dataset features

experiment/

└───Data Quality Assessment.ipynb: Code to assess measures of data quality for experiment datasets

└───sample_CQQ_specs_with_data.py: Script to sample CQQ specifications from corpora subject to experimental constraints

└───Cluster CQQ Features.ipynb: Notebook to cluster CQQ features

└───Question Generation.ipynb: Notebook to generate experimental questions from CQQ specifications

└───questions.json: Questions presented to users in experiment

└───data/: 12,000 datasets used in experiment

└───system/: front-end and back-end code used to run experiment

└───README.md: instructions to run the experimental system

└───screenshots/: screenshots from deployed experiment

helpers/

└───read_raw_data.py: Functions for reading original raw data files as raw Pandas data frames

└───preprocessing/: Utility functions for extracting features from tables for predictive modeling
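To give a sense of what sampling CQQ specifications involves, here is a minimal sketch that enumerates candidate column triples from a single table. It assumes CQQ denotes one categorical and two quantitative fields, as in the Kim and Heer study design. It is not the logic of sample_CQQ_specs_with_data.py: the names `infer_type` and `enumerate_cqq`, the type-inference rule, and the `max_categories` and `min_rows` thresholds are all hypothetical.

```python
from itertools import combinations


def infer_type(values):
    """Label a column 'Q' (quantitative) if every value is a number, else 'C'."""
    is_number = all(
        isinstance(v, (int, float)) and not isinstance(v, bool) for v in values
    )
    return "Q" if is_number else "C"


def enumerate_cqq(table, max_categories=10, min_rows=3):
    """Yield (categorical, quantitative, quantitative) column-name triples.

    `table` maps column names to equal-length lists of values. The two
    thresholds are hypothetical example constraints, not the ones used
    in the experiment.
    """
    n_rows = len(next(iter(table.values()), []))
    if n_rows < min_rows:
        return
    types = {name: infer_type(column) for name, column in table.items()}
    categorical = [
        name
        for name, kind in types.items()
        if kind == "C" and len(set(table[name])) <= max_categories
    ]
    quantitative = [name for name, kind in types.items() if kind == "Q"]
    for c in categorical:
        for q1, q2 in combinations(quantitative, 2):
            yield (c, q1, q2)


if __name__ == "__main__":
    # Toy table for illustration only.
    table = {
        "region": ["north", "south", "north"],
        "sales": [1.0, 2.0, 3.0],
        "profit": [0.1, 0.2, 0.3],
    }
    for spec in enumerate_cqq(table):
        print(spec)
```

A real sampler would apply further constraints before drawing specifications from the enumerated candidates; the sketch only shows the shape of the enumeration step.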

How do I use this repository?

This repository supports multiple use cases. For users who want to access the raw data, we store the four corpora in an Amazon S3 bucket. To download and unzip the data, run sh ./retrieve_corpora.sh in the raw/ directory. Note that the uncompressed datasets take up ~657 GB. We will provide samples of the dataset shortly.

If you want to run the code, note that all processing scripts and notebooks are written in Python 3. To get started:

- In the base directory, initialize and activate a virtual environment:

virtualenv -p python3 venv && source venv/bin/activate

- Install Python dependencies:

pip install -r requirements.txt

- Start Jupyter Notebook or JupyterLab with one of:

jupyter notebook

jupyter lab

To run the crowdsourced experiment, please follow the instructions provided in experiment/README.md. The experiment frontend is implemented in React/Redux. The backend is implemented in Python, and uses Flask as an API layer with PostgreSQL as a database. To customize this system for your own experiment, you can modify the list of questions, referenced datasets, and visual specifications.

Screenshot: Pre-experiment screening questions

Screenshot: Main portion of the VizNet crowdsourced replication experiment