In this workshop we will use Azure Data Factory to copy, prepare and enrich data.

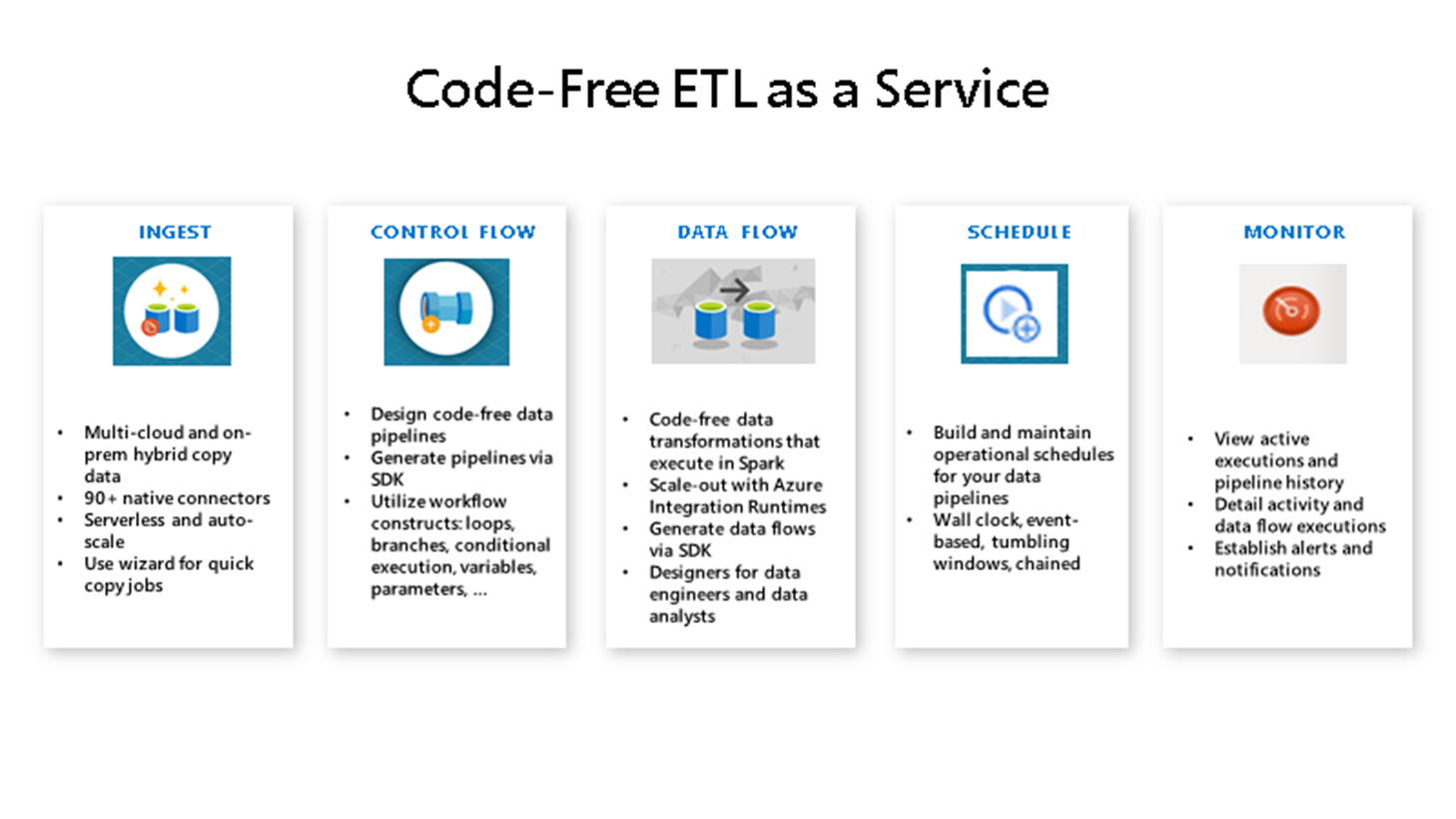

Azure Data Factory is a cloud-based ETL and data integration service for orchestrating data movement and transforming data at scale. With it, you create and schedule data-driven workflows, called pipelines, that ingest data from disparate data stores.
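Under the hood, a pipeline is stored as a JSON definition that lists its activities. The sketch below builds, as a Python dict, roughly what a pipeline with a single Copy activity looks like. The pipeline and dataset names are hypothetical, and the exact source/sink types depend on your stores, so treat this as an illustration of the shape rather than a ready-to-deploy definition.

```python
import json

# Hypothetical names: "CopySalesPipeline", "SalesCsvDataset", "SalesSqlDataset".
# The shape (properties -> activities, DatasetReference inputs/outputs,
# typeProperties.source/sink) mirrors a Data Factory pipeline with one
# Copy activity; check the service documentation before deploying.


def copy_pipeline(name: str, input_dataset: str, output_dataset: str) -> dict:
    """Return a pipeline definition containing a single Copy activity."""
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": f"Copy_{input_dataset}_to_{output_dataset}",
                    "type": "Copy",
                    "inputs": [
                        {"referenceName": input_dataset, "type": "DatasetReference"}
                    ],
                    "outputs": [
                        {"referenceName": output_dataset, "type": "DatasetReference"}
                    ],
                    "typeProperties": {
                        # Read delimited text (e.g. CSV) and write to Azure SQL.
                        "source": {"type": "DelimitedTextSource"},
                        "sink": {"type": "AzureSqlSink"},
                    },
                }
            ]
        },
    }


pipeline = copy_pipeline("CopySalesPipeline", "SalesCsvDataset", "SalesSqlDataset")
print(json.dumps(pipeline, indent=2))
```

In the workshop you build the same structure visually in the Data Factory authoring UI; the JSON view of a pipeline shows this kind of document.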

You will need access to an Azure subscription. During the workshop you will:

- Use Azure Data Factory Mapping Data Flows to:
  - Develop data transformation logic without writing code
  - Create ETL (extract-transform-load) patterns integrated with Data Factory pipelines
  - Perform in-memory transformations such as:
    - Derived columns
    - Joins
    - Lookups
    - Conditional splits
    - Alter rows
    - And a lot more!

- Use Azure Data Factory to:
  - Handle API integrations that become too complex to implement with pipeline activities alone
  - Process complex API connections and authentication flows
  - Simplify or break down complex files into simpler units
  - Execute recursive calls to APIs
  - Reuse existing code
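Mapping Data Flows are designed visually, so you do not write code for them; Data Factory runs them on managed Spark clusters. Purely to make the transformation names above concrete, the plain-Python sketch below mimics what a derived column, lookup, join, conditional split and alter row each do to a handful of in-memory rows. The sample data, column names and the delete/upsert policy are hypothetical, and this is an analogy, not how Data Factory executes a data flow.

```python
# Hypothetical sample data: orders plus a small customer reference table.
orders = [
    {"order_id": 1, "customer_id": "C1", "qty": 3, "unit_price": 10.0},
    {"order_id": 2, "customer_id": "C2", "qty": 1, "unit_price": 250.0},
    {"order_id": 3, "customer_id": "C9", "qty": 5, "unit_price": 4.0},
]
customers = {"C1": "Contoso", "C2": "Fabrikam"}

# Derived column: compute a new column from existing ones.
derived = [{**row, "total": row["qty"] * row["unit_price"]} for row in orders]

# Lookup: enrich every row from reference data; unmatched rows get None (null).
looked_up = [
    {**row, "customer_name": customers.get(row["customer_id"])} for row in derived
]

# Join (inner): keep only rows whose key exists on both sides.
joined = [row for row in looked_up if row["customer_id"] in customers]


def conditional_split(rows, conditions):
    """Route each row to the first stream whose condition matches, else 'default'."""
    streams = {name: [] for name in conditions}
    streams["default"] = []
    for row in rows:
        for name, predicate in conditions.items():
            if predicate(row):
                streams[name].append(row)
                break
        else:  # No condition matched.
            streams["default"].append(row)
    return streams


split = conditional_split(looked_up, {"large": lambda r: r["total"] >= 100})


def alter_row(rows):
    """Tag each row with the action a database sink would apply (hypothetical policy)."""
    return [
        {**row, "_action": "delete" if row["customer_name"] is None else "upsert"}
        for row in rows
    ]


tagged = alter_row(looked_up)
```

In a real data flow each of these steps is a transformation node, and the alter row tags only take effect when the sink is a database that supports inserts, updates and deletes.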
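Two of the API scenarios listed above, recursive calls to an API and breaking a complex response into simpler units, can be sketched together. A common case is a paginated REST endpoint where each response points to the next page. The sketch below follows those links recursively, then flattens the nested items into simple rows. `fake_api`, the `PAGES` data and the `next` field are hypothetical stand-ins for a real HTTP call so the example runs offline; a real client would also handle authentication, retries and errors.

```python
from typing import Optional

# Hypothetical paginated API: each page holds items and the key of the next page.
PAGES = {
    "page-1": {"items": [{"id": 1, "tags": ["a", "b"]}], "next": "page-2"},
    "page-2": {"items": [{"id": 2, "tags": []}, {"id": 3, "tags": ["c"]}], "next": "page-3"},
    "page-3": {"items": [{"id": 4, "tags": ["d"]}], "next": None},
}


def fake_api(page_key: str) -> dict:
    """Stand-in for an HTTP GET against the paginated endpoint."""
    return PAGES[page_key]


def fetch_all(page_key: Optional[str], collected: Optional[list] = None) -> list:
    """Recursively follow 'next' links, collecting every item in page order."""
    if collected is None:
        collected = []
    if page_key is None:  # No more pages: stop recursing.
        return collected
    page = fake_api(page_key)
    collected.extend(page["items"])
    return fetch_all(page["next"], collected)


def flatten(items: list) -> list:
    """Break nested records into simple (id, tag) rows, one row per tag."""
    return [(item["id"], tag) for item in items for tag in item["tags"]]


items = fetch_all("page-1")
rows = flatten(items)
print(rows)  # [(1, 'a'), (1, 'b'), (3, 'c'), (4, 'd')]
```

Recursion keeps the logic short, but Python limits recursion depth (about 1000 frames by default), so for APIs with very long page chains an iterative `while` loop over the `next` links is the safer choice.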