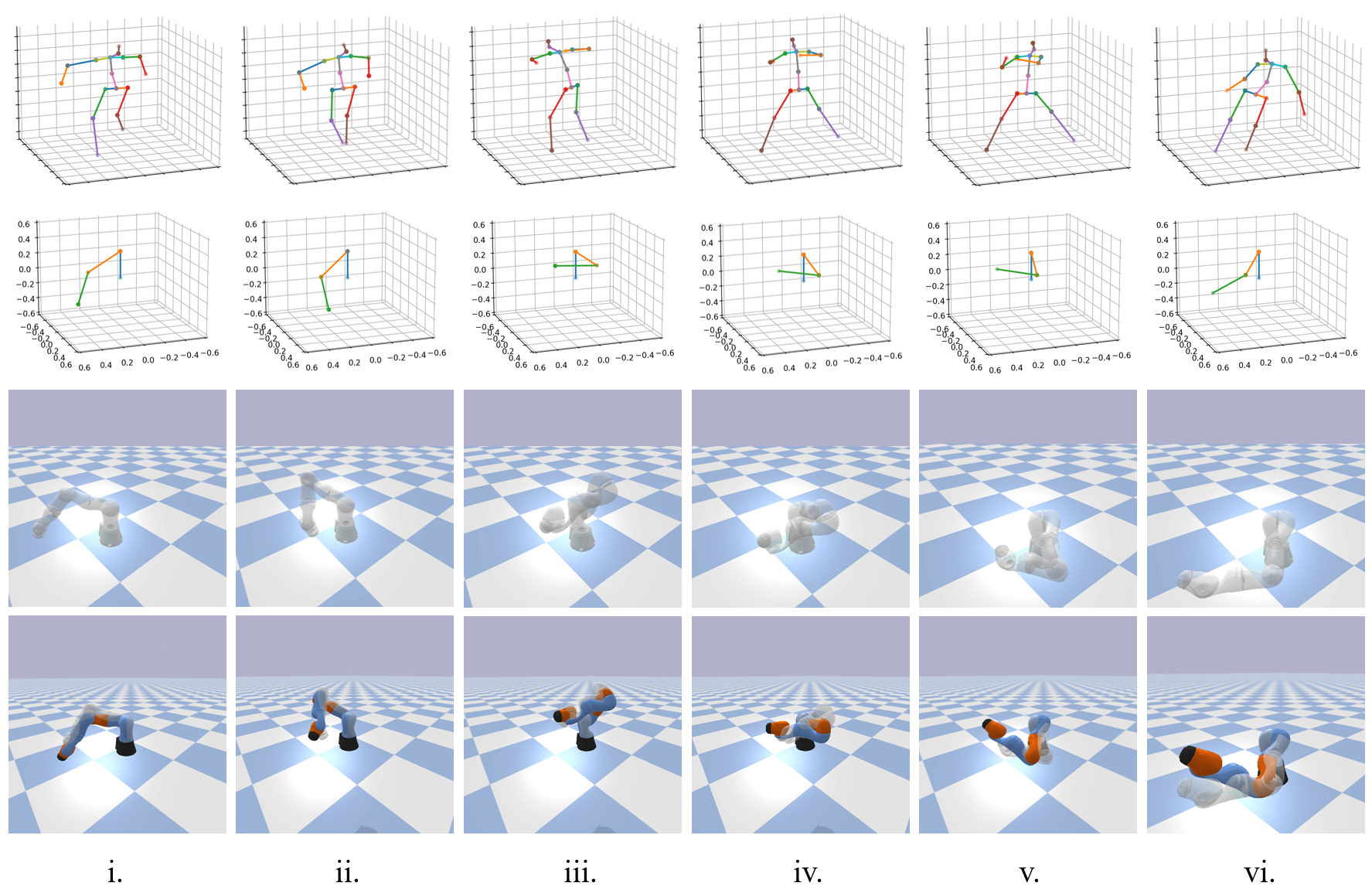

Motion imitation is a challenging task in robotics and computer vision, typically requiring expert data obtained through MoCap devices. However, acquiring such data is costly in money, manpower, and time. Our project addresses this challenge by proposing a novel model that simplifies motion imitation into a reinforcement learning problem of predicting joint angle values.


The model first extracts motion information represented by skeletal structure from the target human arm motion videos. Then, it retargets the arm motions morphologically to match those of a robotic manipulator, making them essentially equivalent. This facilitates the subsequent generation of reference motions for the robotic manipulator to imitate. The generated reference motions are then utilized to formulate a reinforcement learning problem, enabling the model to learn a policy to imitate human arm motions or apply a learned policy to imitate unfamiliar motions.
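To make the idea concrete, here is a minimal sketch of two pieces of such a pipeline: turning three skeletal keypoints into a joint angle, and scoring how closely a policy's joint angles track a reference. This is not the code used in this repository. The function names, the keypoint values, the exponential reward shape, and the `scale` parameter are all assumptions chosen for illustration.

```python
import math

def joint_angle(parent, joint, child):
    """Interior angle (radians) at `joint` between segments joint->parent and joint->child."""
    u = [p - j for p, j in zip(parent, joint)]
    v = [c - j for c, j in zip(child, joint)]
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("degenerate segment: two keypoints coincide")
    cos_theta = sum(a * b for a, b in zip(u, v)) / (norm_u * norm_v)
    # Clamp to guard against floating-point drift outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, cos_theta)))

def imitation_reward(q, q_ref, scale=2.0):
    """Tracking reward in (0, 1]; equals 1.0 when q matches q_ref exactly.

    The exponential form and `scale` are illustrative assumptions.
    """
    sq_err = sum((a - b) ** 2 for a, b in zip(q, q_ref))
    return math.exp(-scale * sq_err)

# Hypothetical 3D keypoints (metres) for one video frame: a right-angle elbow.
shoulder, elbow, wrist = (0.0, 0.0, 0.0), (0.3, 0.0, 0.0), (0.3, 0.25, 0.0)
elbow_ref = joint_angle(shoulder, elbow, wrist)

print(round(math.degrees(elbow_ref), 1))           # 90.0
print(imitation_reward([elbow_ref], [elbow_ref]))  # 1.0
```

Note that the interior angle computed here is π for a fully extended arm, so mapping it onto a particular manipulator joint would still need that robot's zero position and joint limits.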

Clone the repository and cd into its directory (named `Imitation` after a plain `git clone`, or `Imitation-main` if you downloaded the ZIP archive).

git clone https://github.com/iQiyuan/Imitation.git

cd Imitation-main

Install conda and create the provided conda virtual environment.

conda env create -f environment.yaml

conda activate MoIm

Download the pre-trained model for 3D Human Pose Estimation following the instructions in "Dataset Setup" and "Demo" from this repository.

Make sure the required pre-trained weights are downloaded and placed at the paths listed below.

./pose_est/lib/checkpoint/pose_hrnet_w48_384x288.pth

./pose_est/lib/checkpoint/yolov3.weights
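If you want to confirm the weights are in place before running anything, a small check like the following may help. It is only a sketch: the helper name `missing_weights` is made up for this example, and it assumes you run it from the repository root.

```python
from pathlib import Path

# Paths copied from the list above, relative to the repository root.
REQUIRED_WEIGHTS = [
    "pose_est/lib/checkpoint/pose_hrnet_w48_384x288.pth",
    "pose_est/lib/checkpoint/yolov3.weights",
]

def missing_weights(repo_root="."):
    """Return the required weight files that are absent under repo_root."""
    root = Path(repo_root)
    return [p for p in REQUIRED_WEIGHTS if not (root / p).is_file()]

if __name__ == "__main__":
    missing = missing_weights()
    if missing:
        print("Missing pre-trained weights:")
        for path in missing:
            print("  ./" + path)
    else:
        print("All pre-trained weights found.")
```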

Put the target video under this directory.

./pose_est/video/target_video_name.mp4

Run the commands below to extract the skeletal motions and retarget them.

python extract.py --video target_video_name.mp4

python retarget.py --video target_video_name

Expected outputs are under this directory.

./pose_est/output/target_video_name

./demo_data/target_video_name
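The two commands in this step can also be chained from Python. The sketch below builds them from a single file name, passing the name with its extension to `extract.py` and without it to `retarget.py`, exactly as in the commands shown above. The helper names `pipeline_commands` and `run_pipeline` are hypothetical and not part of this repository.

```python
import subprocess
import sys
from pathlib import Path

def pipeline_commands(video_file):
    """Build the extract and retarget commands for a file in ./pose_est/video/."""
    video = Path(video_file)
    return [
        # extract.py is given the file name with its extension.
        [sys.executable, "extract.py", "--video", video.name],
        # retarget.py is given the name without the extension.
        [sys.executable, "retarget.py", "--video", video.stem],
    ]

def run_pipeline(video_file):
    """Run both steps in order, stopping at the first failure."""
    for cmd in pipeline_commands(video_file):
        subprocess.run(cmd, check=True)

# Show the commands without running them.
for cmd in pipeline_commands("target_video_name.mp4"):
    print(" ".join(cmd[1:]))
```

Calling `run_pipeline("target_video_name.mp4")` from the repository root, with the conda environment active, would then run both steps.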

Although the pre-trained weights are provided, you can always train a new imitation policy using the reference motion extracted in the previous step.

python train.py --GUI True --video target_video_name

The extracted reference motions can also be used to test the learned policy, i.e. to let the robotic manipulator imitate whatever arm motions are demonstrated in the videos.

python test.py --GUI True --video target_video_name

The learned policy can be quantitatively evaluated.

python performance.py

python evaluate.py --GUI True --video target_video_name

We are currently preparing a research paper that will provide a detailed explanation of the proposed model, its implementation, experimental results, and future directions. Stay tuned for updates!

The code for this project is based on modifications made to the following repositories. Thanks to the authors of these repositories for open-sourcing their work.

OpenAi_baselines

PPO_PyTorch

Motion_Imitation

StridedTransformer

PyBullet_official_e.g.

This project is licensed under the MIT License - see the LICENSE file for details.