cuXfilter is inspired by the Crossfilter library, a fast, browser-based mechanism for filtering across multiple dimensions that also supports groupby operations on those dimensions. A major limitation of Crossfilter is that it keeps data in memory in the client-side browser, which makes it inefficient for processing large datasets. cuXfilter uses cuDF on the server side and keeps the dataframe on the GPU throughout the session. This results in sub-second response times for histogram calculations, groupby operations, and queries on datasets in the range of 10M to 200M rows (multiple columns).

To build using Docker:

- Edit the `config.env` file to reflect accurate IP, dataset name, and mapbox token values.
  - add your server ip address to the `server_ip` property in the format: `http://server.ip.addr`
  - add `demo_mapbox_token` for running the GTC demo
  - download the dataset `146M_predictions_v2.arrow` from here
- `docker build -t user_name/viz .`
- `docker run --runtime=nvidia -d --env-file ./config.env -p 80:80 --name rapids_viz -v /folder/with/data:/usr/src/app/node_server/uploads user_name/viz`

Config.env Parameters:

- `server_ip`: IP address of the server machine. Needs to be set before building the docker container.
- `cuXfilter_port`: internal port at which the cuXfilter server runs. No need to change this. Do not publish this port.
- `demos_serve_port`: internal port at which the demos run. No need to change this. Do not publish this port.
- `gtc_demo_port`: internal port at which the GTC demo server runs. No need to change this. Do not publish this port.
- `sanic_server_port_cudf`: sanic_server (cudf) runs on this port, which is internal to the container and can only be accessed by the node_server. Do not publish this port.
- `sanic_server_port_pandas`: sanic_server (pandas) runs on this port, which is internal to the container and can only be accessed by the node_server. Do not publish this port.
- `whitelisted_urls_for_clients`: list of whitelisted urls for clients to access node_server. Before building the container, users can add the urls they plan to develop on as origins, to avoid CORS issues.
- `jupyter_port`: internal port at which the jupyter notebook server runs. No need to change this. Do not publish this port.
- `demo_mapbox_token`: mapbox token for the mortgage demo. Can be created for free here
- `demo_dataset_name`: dataset name for the example and mortgage demo. Default value: '146M_predictions_v2'. Can be downloaded from here
- `rmm`: using the experimental memory pool allocator (https://github.com/rapidsai/rmm) gives better performance, but may throw out-of-memory errors.
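Since `server_ip` has to be correct before the container is built, it can help to sanity-check `config.env` first. The following is a minimal sketch, not part of cuXfilter: it assumes the file uses the plain `KEY=VALUE` lines that `docker run --env-file` reads, and the function names and sample values are hypothetical.

```python
from urllib.parse import urlparse


def parse_env_file(text):
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    config = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        config[key.strip()] = value.strip()
    return config


def check_config(config):
    """Return a list of readable problems; an empty list means none were found."""
    problems = []
    parsed = urlparse(config.get("server_ip", ""))
    # The README asks for the form http://server.ip.addr (scheme plus host).
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        problems.append("server_ip should look like http://server.ip.addr")
    if not config.get("demo_mapbox_token"):
        problems.append("demo_mapbox_token is empty (needed for the GTC demo)")
    return problems


# Hypothetical file contents, for illustration only.
sample = """
# edit before building the container
server_ip=http://10.0.0.5
demo_mapbox_token=
demo_dataset_name=146M_predictions_v2
"""
config = parse_env_file(sample)
problems = check_config(config)
print(problems)
```

To check a real file, pass `open("config.env").read()` to `parse_env_file` instead of the sample string.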

With the default settings:

Access the crossfilter demos at http://server.ip.addr/demos/examples/

Access the GTC demos at http://server.ip.addr/demos/gtc_demo

Access the Jupyter integration demo at http://server.ip.addr/jupyter

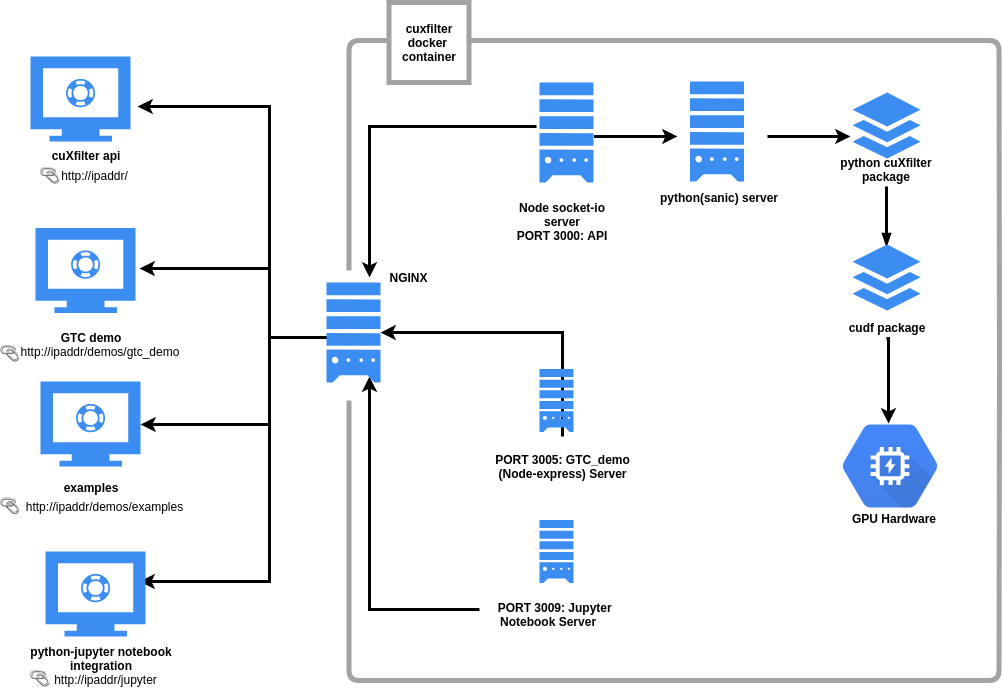

Docker container(python_sanic <--> node) SERVER <<<===(socket.io)===>>> browser(client-side JS)

The sanic server interacts with the node_server and maintains dataframe objects in memory throughout the user session. Two instances of the sanic_server run at all times: one at port 3002 (handling all cudf dataframe queries) and the other at port 3003 (handling all pandas dataframe queries, in case anyone wants to compare performance). This server is not exposed to the cuXfilter-client.js library and is accessible only to the node_server, which acts as a load balancer between the cuXfilter-client.js library and the sanic server.

Files:

- `app/views.py` -> handles all routes, and appends each response with calculation time
- `app/utilities/cuXfilter_utils.py` -> all cudf crossfilter functions
- `app/utilities/numbaHistinMem.py` -> histogram calculations using numba for a cudf.Series(ndarray)
- `app/utilities/pandas_utils.py` -> all pandas crossfilter functions
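The idea of attaching the calculation time to every response can be sketched with a small decorator. This is an illustration only and not the code in `app/views.py`: the key name `calculation_time` is hypothetical, and the histogram is a plain-Python stand-in for the numba/cudf routine.

```python
import time
from functools import wraps


def with_calculation_time(handler):
    """Wrap a handler so its dict response also carries the elapsed time."""
    @wraps(handler)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        response = handler(*args, **kwargs)
        elapsed = time.perf_counter() - start
        # 'calculation_time' is a hypothetical key name, not taken from the project.
        return {**response, "calculation_time": elapsed}
    return wrapper


@with_calculation_time
def histogram(values, bins, low, high):
    """Count values into equal-width bins over [low, high]."""
    counts = [0] * bins
    width = (high - low) / bins
    for v in values:
        if low <= v <= high:
            # Clamp so that v == high lands in the last bin.
            index = min(int((v - low) / width), bins - 1)
            counts[index] += 1
    return {"counts": counts}


result = histogram([1, 2, 2, 3, 9, 10], bins=3, low=0, high=9)
print(result["counts"])
```

Timing in one wrapper keeps the measurement consistent across routes, which matters when the cudf and pandas backends are being compared.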

The Node server is exposed to the cuXfilter-client.js library and handles incoming socket.io requests and responses. It manages user sessions and also gives the client side the option to perform cross-browser/cross-tab crossfiltering.

Files:

- `routes/cuXfilter.js` -> handles all socket-io routes, and appends each response with the amount of time spent by the node_server for each request
- `routes/utilities/cuXfilter_utils.js` -> utility functions for communicating with the sanic_server and handling the responses

A JavaScript (ES6) client library that provides crossfilter functionality for creating interactive visualizations right from the browser.

Currently, there are a few memory limitations for running cuXfilter.

- Dataset size should be at most half the size of the total GPU memory available. This is because GPU memory usage spikes to around 2X during groupby operations.

This will not be an issue once the dask_gdf engine is implemented (assuming the user has access to multiple GPUs).
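The half-of-GPU-memory rule can be written as a quick check before choosing a dataset. This is a rough sketch under stated assumptions: the function names are hypothetical, the 2X factor comes from the groupby spike described above, and the size of the arrow file on disk is treated as an approximation of its size in GPU memory.

```python
GIB = 1024 ** 3


def max_dataset_bytes(gpu_total_bytes, spike_factor=2.0):
    """Largest dataset size that leaves room for the groupby memory spike."""
    return gpu_total_bytes / spike_factor


def fits_in_gpu_memory(dataset_bytes, gpu_total_bytes, spike_factor=2.0):
    """True if the dataset stays within total GPU memory even at peak usage."""
    return dataset_bytes * spike_factor <= gpu_total_bytes


# Example figures for a 16 GiB GPU (illustrative, not measured).
gpu_total = 16 * GIB
print(fits_in_gpu_memory(7 * GIB, gpu_total))
print(fits_in_gpu_memory(9 * GIB, gpu_total))
```

For a file on disk, `os.path.getsize(path)` gives a starting value for `dataset_bytes`.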

In case the server becomes unresponsive, here are the steps you can take to resolve it:

- Check if the GPU memory is full, using the `nvidia-smi` command. If the GPU memory usage seems full and frozen, this may be due to a cudf out-of-memory error, which can happen if the dataset is too large to fit into GPU memory. Please refer to Memory limitations while choosing datasets.

A docker container restart might solve the issue temporarily.
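The GPU memory check can also be scripted. The sketch below is not part of cuXfilter; it relies on the `--query-gpu` and `--format` options of `nvidia-smi`, and the 95% threshold and the sample readings are arbitrary values chosen for illustration.

```python
import subprocess

QUERY = [
    "nvidia-smi",
    "--query-gpu=memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_memory_csv(output):
    """Turn 'used, total' lines (MiB) into (used, total) tuples, one per GPU."""
    usage = []
    for line in output.strip().splitlines():
        used, total = (int(field.strip()) for field in line.split(","))
        usage.append((used, total))
    return usage


def query_gpu_memory():
    """Run nvidia-smi and return per-GPU (used_mib, total_mib); needs an NVIDIA driver."""
    completed = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_memory_csv(completed.stdout)


def nearly_full(usage, threshold=0.95):
    """Indices of GPUs whose memory use is at or above the threshold fraction."""
    return [i for i, (used, total) in enumerate(usage) if used / total >= threshold]


# Made-up readings for two GPUs, in the format nvidia-smi prints for the query above.
sample_output = "15890, 16160\n312, 16160\n"
usage = parse_memory_csv(sample_output)
print(nearly_full(usage))
```

On the server itself, `nearly_full(query_gpu_memory())` would report which GPUs are close to full before deciding whether to restart the container.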

Currently, cuXfilter supports only the arrow file format as input. The python_scripts folder in the root directory provides a helper script to convert a csv file to an arrow file. For more information, follow this link