Required downloads:

- ./model_interfaces/cifar_models/model_defended/checkpoint-70000.data-00000-of-00001

- ./model_interfaces/cifar_models/model_undefended/checkpoint-79000.data-00000-of-00001

- ./audios/

The clone size is about 200 MB. (If the files are not available, please contact me.)

Other dependencies:

matplotlib, tqdm, librosa, pytorch, torchvision, tensorflow=1.14

Using conda, run the following commands:

conda create -n DeeperSearch

conda activate DeeperSearch

conda install python=3.6

conda install matplotlib tqdm librosa

conda install pytorch torchvision -c pytorch

conda install tensorflow=1.14 -c conda-forge

conda clean -a

After you finish experimenting, you may delete the environment:

conda activate base

conda env remove -n DeeperSearch

In this project, we try to replicate and extend a method by Zhang et al., a simple way to elicit adversarial outputs from a blackbox classifier model.

As improvements, we experimented with:

- The attack is required to output a certain class specified by the user.

- The grouping method by Zhang et al. produces very distinctive visual artifacts in the perturbed image. We experimented with a simple alternative grouping method to obtain more natural-looking images.

-

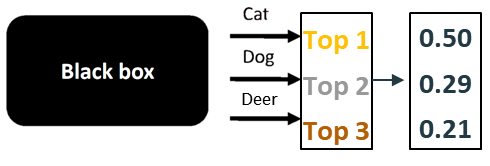

Classifier API are not always expected to give away all the probabilities for each classes. In this experiment setting, the classifier only outputs categories of a few most probable classes. The category output is then convert to statistical probability for the cost of proportionally more query counts.

As an extension, we applied the same concept to audio classification.


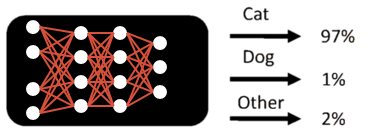

A neural network can classify an input into a category, and is therefore used as a classifier. A classifier is trained on a pre-labeled dataset and then applied to new data to determine which class it belongs to.

It is called a neural network because its internal structure is modeled on biological neural networks.


Since the classifier (neural network) is self-taught based on a mathematical model, its reasoning for a classification is not the same as how we understand the data. Because of this difference in perception, the classifier will sometimes produce odd classification results. This is called an adversarial output.

In short, an adversarial attack deliberately fools the classifier.


DeepSearch is an algorithm that Zhang et al. devised for simple blackbox adversarial attacks.

To learn more about how the algorithm works, watch this video from ACM, or watch our project proposal video.

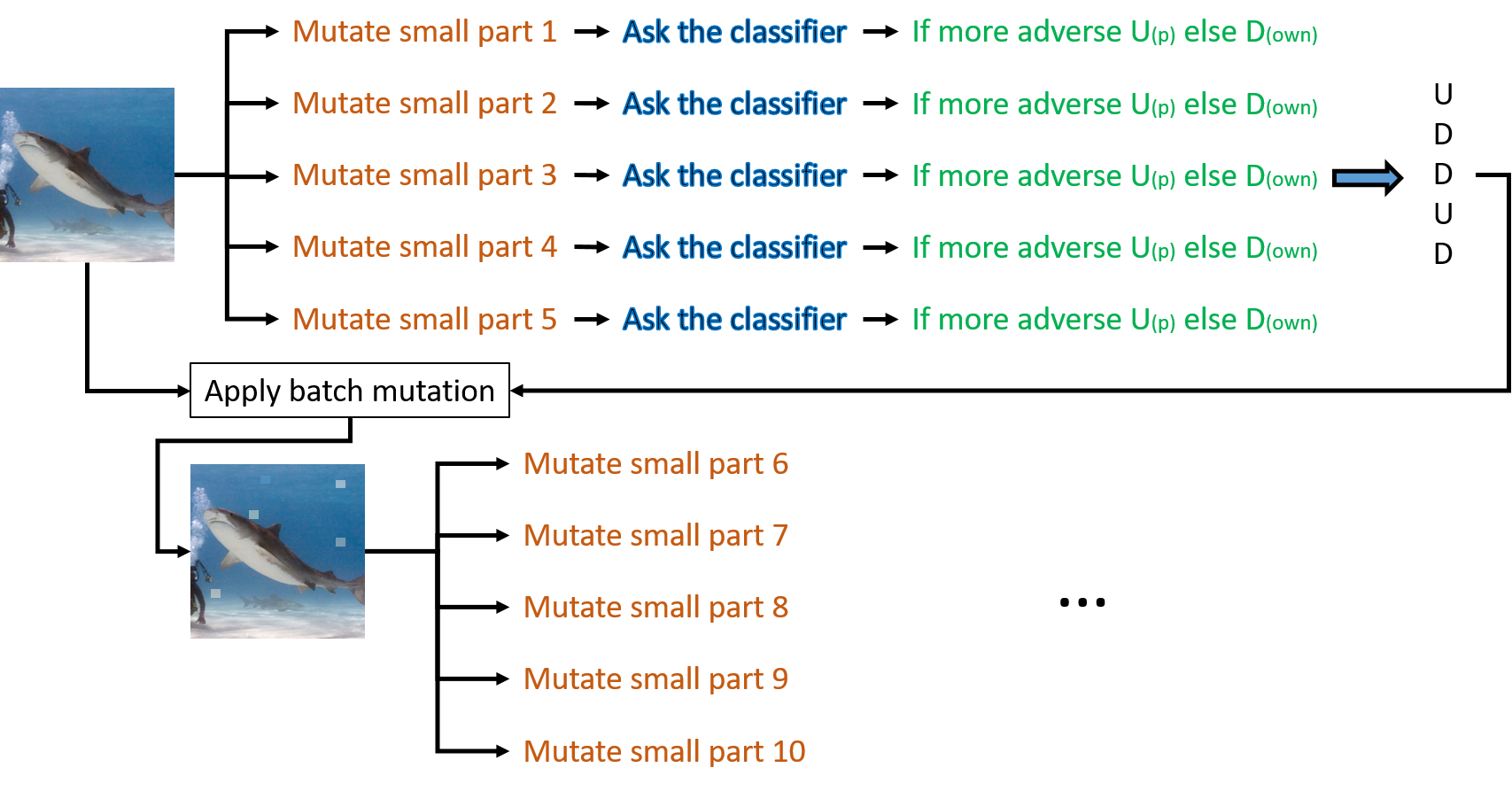

The basic idea is to query the classifier again and again with a slightly modified image, building up step by step until it is fooled. Below is a simple graphical representation of the algorithm. Although not shown in the illustration, if any evaluation yields an adversarial image, the algorithm returns that image and terminates.
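The query loop described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the code in this repository: the toy two-class `classify` function, the helper `apply_signs`, the function name `deep_search_sketch`, and all numeric values are hypothetical. The names `group_size` and `max_calls` only echo the parameters mentioned elsewhere in this README.

```python
import math

def classify(image):
    """Hypothetical toy blackbox classifier returning [p_class0, p_class1]."""
    weights = [0.9, -0.4, 0.7, -0.8, 0.5, -0.3, 0.6, -0.2]
    logit = sum(w * v for w, v in zip(weights, image)) - 0.7
    p1 = 1.0 / (1.0 + math.exp(-10.0 * logit))
    return [1.0 - p1, p1]

def apply_signs(original, signs, cap):
    """Move each pixel down (-1) or up (+1) by the distortion cap, clipped to [0, 1]."""
    return [min(1.0, max(0.0, v + s * cap)) for v, s in zip(original, signs)]

def deep_search_sketch(original, cap=0.1, group_size=2, max_calls=100):
    """Return (adversarial_image or None, number_of_queries)."""
    probs = classify(original)
    label = probs.index(max(probs))          # class we want to move away from
    signs = [-1] * len(original)             # start with every pixel moved down
    start_image = apply_signs(original, signs, cap)
    probs = classify(start_image)
    calls = 2
    if probs.index(max(probs)) != label:
        return start_image, calls
    best = probs[label]
    while group_size >= 1:
        for start in range(0, len(original), group_size):
            if calls >= max_calls:
                return None, calls           # query budget exhausted
            trial = list(signs)
            for i in range(start, min(start + group_size, len(original))):
                trial[i] = -trial[i]         # flip the whole group up <-> down
            candidate = apply_signs(original, trial, cap)
            probs = classify(candidate)
            calls += 1
            if probs.index(max(probs)) != label:
                return candidate, calls      # fooled: return immediately
            if probs[label] < best:          # keep flips that lower the confidence
                signs, best = trial, probs[label]
        group_size //= 2                     # refine the groups and try again
    return None, calls

image = [0.6, 0.4] * 4
adversarial, queries = deep_search_sketch(image)
print(adversarial, queries)
```

Every candidate costs one query, which is why the group size and the query budget matter so much for running time.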

From your command line, run the program with Python:

python testDeepSearch.py

This runs the ImageNet attack by default. More options include:

python testDeepSearch.py --cifar : Runs the algorithm on a defended ResNet classifier.

python testDeepSearch.py --cifar --undef : Runs the fastest attack, on an undefended ResNet classifier.

python testDeepSearch.py --targeted 1 --target [targetnumber] : Runs the targeted attack. The target number is the index of the class you want the classifier to output. The CIFAR dataset has classes 0 to 9, and ImageNet has classes 0 to 999.

python testDeepSearch.py --proba 0 : Runs the categorical attack.

python testDeepSearch.py --spectro : Runs the algorithm on audio.
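To make the relationship between these options easier to see, here is a hypothetical `argparse` sketch. It uses only the flag names listed above. The argument types, the default values, the `validate` helper, and the assumption that the flags can be freely combined are guesses for illustration and may not match how `testDeepSearch.py` actually parses its arguments.

```python
import argparse

def build_parser():
    """Hypothetical parser mirroring the documented flags (defaults are assumptions)."""
    parser = argparse.ArgumentParser(description="Sketch of the testDeepSearch.py options")
    parser.add_argument("--cifar", action="store_true",
                        help="attack the CIFAR classifier instead of ImageNet")
    parser.add_argument("--undef", action="store_true",
                        help="with --cifar, use the undefended ResNet")
    parser.add_argument("--targeted", type=int, default=0,
                        help="1 enables the targeted attack")
    parser.add_argument("--target", type=int, default=None,
                        help="index of the class to target")
    parser.add_argument("--proba", type=int, default=1,
                        help="0 switches to the categorical attack")
    parser.add_argument("--spectro", action="store_true",
                        help="run the algorithm on audio")
    return parser

def validate(args):
    """Check that a targeted run names a class index that exists in the dataset."""
    num_classes = 10 if args.cifar else 1000
    if args.targeted == 1:
        if args.target is None or not 0 <= args.target < num_classes:
            raise ValueError("--target must be between 0 and %d" % (num_classes - 1))
    return args

args = validate(build_parser().parse_args(
    ["--cifar", "--undef", "--targeted", "1", "--target", "3"]))
print(args)
```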

There are other parameters inside `testDeepSearch.py` that you may want to change. For example:

- In line 95, uncommenting the line writes every `print()` output to a log file.

- In line 103, you can decide how many data samples to run the attack on.

- In line 111, you can set the maximum query count by adjusting `max_calls`, and control how much information is printed while the program runs by setting `verbose`. `distortion_cap`, `group_size`, and `batch_size` have a significant impact on performance and results. You may want to experiment with these parameters once you understand how the code works.


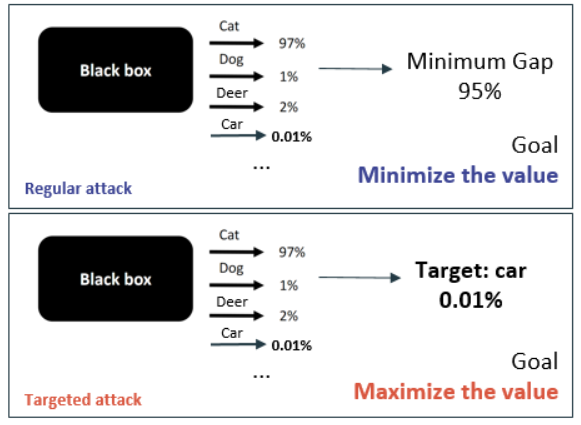

The algorithm uses a score to decide in which direction each modification should be made. For the regular (non-targeted) attack, the score looks for the smallest probability gap against the original class, meaning the effective target can change on every loop for the fastest result.

For the targeted attack, this was replaced by the probability of our fixed target class.
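The difference between the two scoring schemes can be illustrated with a short sketch. The function names and the sign convention (lower is better) are assumptions made for this example. The actual implementation lives in `evaluation.py` and may differ.

```python
def untargeted_score(probs, original_class):
    """Gap between the original class and its strongest rival (lower is better)."""
    rival = max(p for i, p in enumerate(probs) if i != original_class)
    return probs[original_class] - rival

def targeted_score(probs, target_class):
    """Negated probability of the fixed target class (lower is better)."""
    return -probs[target_class]

before = [0.50, 0.30, 0.20]   # classifier output for the current image
after = [0.45, 0.20, 0.35]    # output after a candidate modification

# Non-targeted: the gap shrinks, and the rival switches from class 1 to class 2.
print(untargeted_score(before, 0), untargeted_score(after, 0))

# Targeted at class 1: the same modification makes the score worse.
print(targeted_score(before, 1), targeted_score(after, 1))
```

The same modification can therefore be accepted by the non-targeted attack and rejected by the targeted one, which is consistent with targeted attacks needing more queries.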

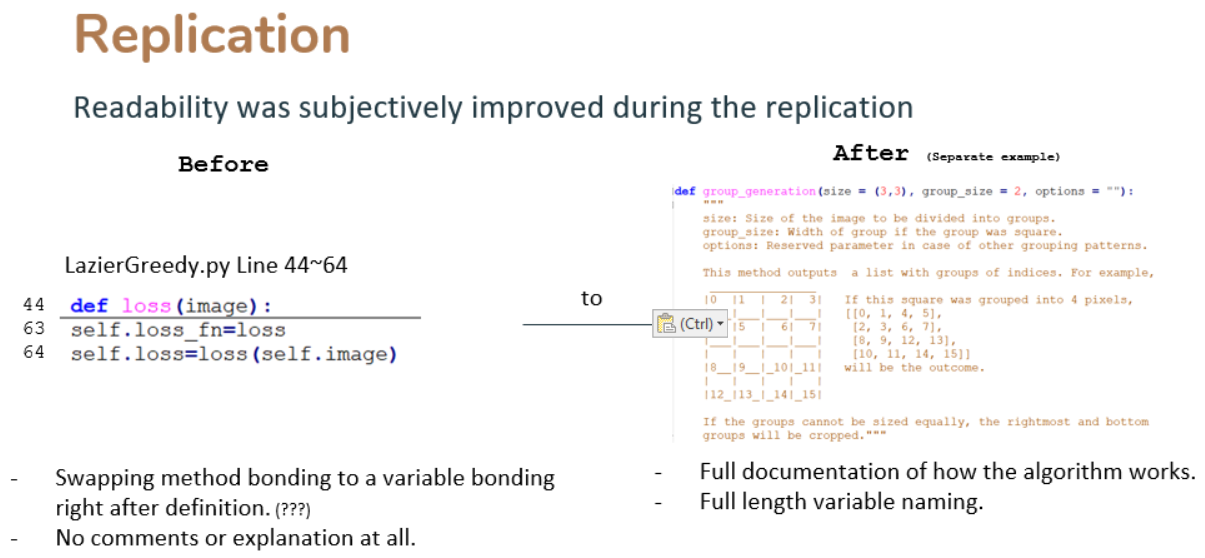

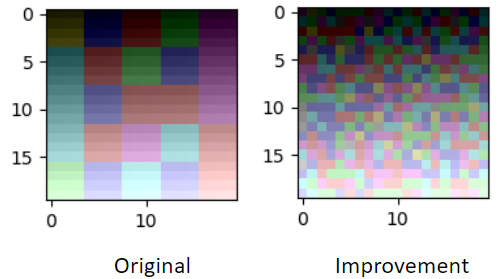

Zhang et al. used a square grouping pattern in order to exploit image locality for better performance. However, this choice causes very unnatural patterns to appear in the image. We changed the pattern to random so that the modifications can be disguised as white noise.
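As a rough illustration of the two grouping patterns, the sketch below partitions pixel coordinates either into square blocks or into randomly chosen sets of the same size. The function names and the fixed seed are hypothetical. The project's grouping code is in `mutation.py` and may be organized differently.

```python
import random

def square_groups(height, width, block):
    """Partition pixel coordinates into block x block squares (locality-based)."""
    groups = {}
    for r in range(height):
        for c in range(width):
            groups.setdefault((r // block, c // block), []).append((r, c))
    return list(groups.values())

def random_groups(height, width, group_size, seed=0):
    """Partition pixel coordinates into random groups of equal size."""
    pixels = [(r, c) for r in range(height) for c in range(width)]
    random.Random(seed).shuffle(pixels)
    return [pixels[i:i + group_size] for i in range(0, len(pixels), group_size)]

squares = square_groups(4, 4, 2)
scattered = random_groups(4, 4, 4)
print(squares[0])     # pixels of the top-left 2 x 2 block
print(scattered[0])   # four pixels scattered over the image
```

Both functions produce the same number of groups, so the query budget per pass is unchanged. Only the spatial layout of each group differs.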

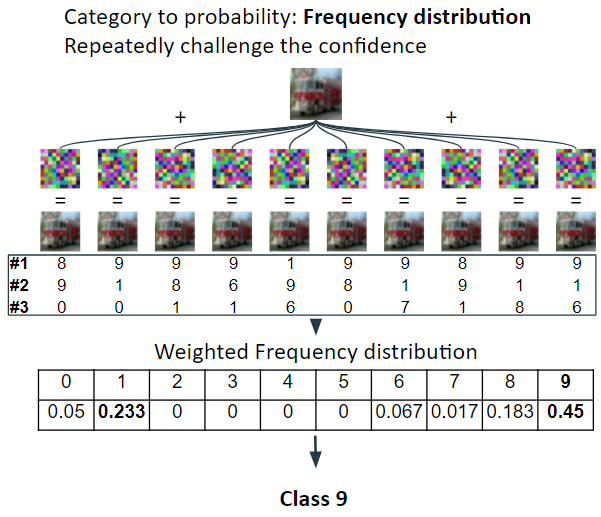

In the categorical feedback attack, a new way to assess classification confidence was needed. The basic idea is to use statistics. Many small random noise patterns are added to the original image to challenge the confidence of the classification. Because of the disturbance, this gives us varying outputs, and the statistics of those outputs can be processed into a confidence estimate.

This greatly increases the query count and reduces accuracy, but it can still be a useful setting for a realistic blackbox attack.
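The statistical idea can be sketched as follows. The label-only toy classifier `top_labels`, the function `estimate_confidence`, the noise level, and the sample count are all assumptions chosen for illustration. The sketch counts how often each class comes out on top across noisy copies, which is one plausible way to turn category output into a probability estimate and is not necessarily the exact procedure used in this project.

```python
import random
from collections import Counter

def top_labels(image, k=2):
    """Hypothetical label-only API: returns the k most probable class indices."""
    mean = sum(image) / len(image)
    scores = [mean, 1.0 - mean, 0.3]   # toy scores for three classes
    return sorted(range(len(scores)), key=lambda i: -scores[i])[:k]

def estimate_confidence(image, n_samples=200, noise=0.05, seed=0):
    """Estimate class probabilities from how often each class ranks first under noise."""
    rng = random.Random(seed)
    counts = Counter()
    for _ in range(n_samples):
        noisy = [min(1.0, max(0.0, v + rng.uniform(-noise, noise))) for v in image]
        counts[top_labels(noisy)[0]] += 1   # one classifier query per noisy copy
    return {label: count / n_samples for label, count in counts.items()}

print(estimate_confidence([0.8] * 4))    # far from the decision boundary
print(estimate_confidence([0.51] * 4))   # close to the decision boundary
```

Each confidence estimate costs `n_samples` queries instead of one, which is where the proportional increase in query count comes from.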

For audio, we converted the audio into a 2-dimensional array with a Fourier transform, performed DeepSearch on the 2-D array, and inverse-transformed the result back to audio.

It should also be possible to run the mutation directly on audio PCM data, which is a 1-dimensional array of air pressure changes. However, this was not implemented in this project.
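The transform, perturb, inverse-transform round trip can be sketched in plain Python. This is a deliberately simplified stand-in: it uses a naive DFT over non-overlapping frames with no window function, whereas a real spectrogram pipeline (for example one built on librosa, which is listed in the dependencies) would normally use overlapping windowed frames. All function names here are hypothetical.

```python
import cmath
import math

def dft(frame):
    """Naive discrete Fourier transform of one frame."""
    n = len(frame)
    return [sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(spectrum):
    """Inverse DFT, keeping the real part as the audio samples."""
    n = len(spectrum)
    return [(sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]

def to_spectrogram(samples, frame_len):
    """Split audio into non-overlapping frames and transform each (frames x bins)."""
    return [dft(samples[i:i + frame_len])
            for i in range(0, len(samples) - frame_len + 1, frame_len)]

def to_audio(spectrogram):
    """Inverse-transform every frame and concatenate the samples."""
    return [s for frame in spectrogram for s in idft(frame)]

def perturb(spectrogram, frame_idx, bin_idx, scale):
    """Scale one bin and its mirror bin so the inverse transform stays real-valued."""
    out = [list(frame) for frame in spectrogram]
    n = len(out[frame_idx])
    for k in {bin_idx, (n - bin_idx) % n}:
        out[frame_idx][k] *= scale
    return out

samples = [math.sin(2 * math.pi * t / 8) for t in range(32)]
spec = to_spectrogram(samples, 8)
modified_audio = to_audio(perturb(spec, 0, 1, 0.5))
print(modified_audio[:8])
```

In the attack itself, the single scaled bin would be replaced by the group-wise modifications that DeepSearch applies to the 2-D array.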

The code can be broken down into 4 parts: the interfaces (for the classifier and for the user), mutation, evaluation, and deepSearch.

- The interfaces (`testDeepSearch.py`, `***Wrappers.py`) are used to run the algorithm with the desired parameters or to access the classifier models.

- `mutation.py` is where the image is divided into groups and modified.

- `evaluation.py` implements the different scoring schemes for modified images. The score determines in which direction (U/D) the image should be modified to get closer to an adversarial result.

- `deepSearch.py` is essentially the outer conditional loop that uses `mutation.py` and `evaluation.py` until an adversarial result is reached.

For more detail on how the code works, please read the documentation within the code.

The resulting images for each experiment can be seen in the ./Results/ directory. The experiments are coded as follows:

T - Targeted | NT - Non-targeted | C - Categorical | Pr - Probabilistic | D - Defended | UD - Undefended

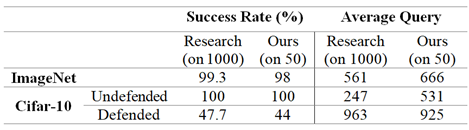

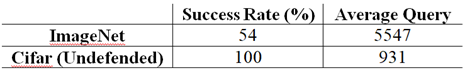

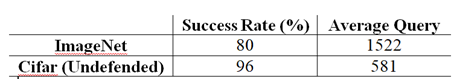

Here are some statistics from the experiments:

- Replication of Zhang et al.:

- Targeted Attack:

- Improved Grouping:

- Categorical Feedback Attack: 3 out of 50 CIFAR images succeeded, with an average query count of 5,617.

In this work, we proposed several ways to improve the baseline DeepSearch algorithm for adversarial blackbox attacks. We found that the algorithm can be applied effectively to targeted attacks and to the audio domain. We also observed that categorical attacks may not be query-efficient. Finally, random grouping is effective in reducing artifacts, but the quality of the images was reduced.

The main limiting factors in this project were the massive time complexity and limited computational power. More efficient classifier models and more computational power could yield more accurate results.