Original Paper: GNN4EEG: A Benchmark and Toolkit for Electroencephalography Classification with Graph Neural Network

GNN4EEG is a benchmark and toolkit for Electroencephalography (EEG) classification tasks via Graph Neural Networks (GNNs), aiming to facilitate research in this direction. Researchers can freely choose their preferred GNN models, hyper-parameters, and experimental protocols. The dataset for training and evaluation can be either the default FACED dataset (described in the "Models and Dataset" chapter) or any self-built dataset. The characteristics of our toolkit can be summarized as follows:

- Large Benchmark: We introduce a large benchmark of 4 EEG classification tasks built on EEG data from the FACED dataset, which consists of 123 subjects.

- Multiple SOTA Models: We implement 4 state-of-the-art GNN-based EEG classification models, i.e., DGCNN, RGNN, SparseDGCNN and HetEmotionNet.

- Various Experimental Protocols: We provide comprehensive experimental settings and evaluation protocols, e.g., 2 data splitting protocols and 3 cross-validation protocols.

- Easy to Use: Our toolkit covers the whole process of training and tuning an EEG classification model for real-time applications in just a few lines of code.

- Flexible Framework: Researchers can freely select their experimental settings and datasets.

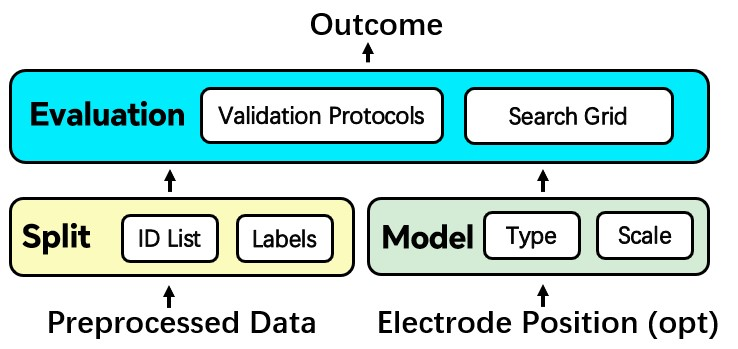

Generally, GNN4EEG decomposes the whole training and evaluation process into three modules:

- Data Splitting: First, choose the data splitting protocol, i.e., intra-subject or cross-subject. A list giving the subject of each sample should be provided to guide the splitting.

- Model Selection: To instantiate a specific model, parameters such as the number of classification categories, graph nodes, hidden layer dimension, and GNN layers should be provided. Electrode positions, frequency values, and other options are also required by certain GNN models.

- Validation Protocols and Other Training Configurations: The final step is to declare the validation protocol and other configurations. GNN4EEG provides three validation protocols, i.e., CV, FCV, and NCV. For detailed training configurations, the user can set the learning rate, dropout rate, number of epochs, 𝐿1 and 𝐿2 regularization coefficients, batch size, optimizer, and training device.
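To illustrate the difference between the two data splitting protocols, here is a minimal NumPy sketch. It is not the toolkit's implementation: the function names `cross_subject_folds` and `intra_subject_folds` are hypothetical, and the round-robin and contiguous-block assignments are assumptions chosen for simplicity. The only input is the per-sample subject list described above.

```python
import numpy as np

def cross_subject_folds(subjects, n_folds):
    """Assign whole subjects to folds: all samples of a subject share one fold."""
    subjects = np.asarray(subjects)
    # inverse[i] is the position of subjects[i] in the sorted list of unique subjects.
    _, inverse = np.unique(subjects, return_inverse=True)
    # Assumption: subjects are distributed round-robin over the folds.
    return inverse % n_folds

def intra_subject_folds(subjects, n_folds):
    """Spread every subject's samples over all folds, in contiguous blocks."""
    subjects = np.asarray(subjects)
    folds = np.empty(len(subjects), dtype=int)
    for s in np.unique(subjects):
        idx = np.flatnonzero(subjects == s)
        # Split this subject's sample indices into n_folds contiguous chunks.
        for f, chunk in enumerate(np.array_split(idx, n_folds)):
            folds[chunk] = f
    return folds

# Toy subject list: 4 subjects with 6 samples each.
subjects = np.repeat(np.arange(4), 6)
cross = cross_subject_folds(subjects, n_folds=2)
intra = intra_subject_folds(subjects, n_folds=2)
print(cross)
print(intra)
```

With a cross-subject split, a test fold only contains subjects that never appear in training; with an intra-subject split, every subject contributes samples to every fold.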

A data flow diagram is illustrated as follows:

- Install Anaconda with Python >= 3.5

- Clone the repository

      git clone https://github.com/Miracle-2001/GNN4EEG.git

- Install requirements and step into the src folder

      cd GNN4EEG
      cd src
      pip install -r requirements.txt

- (optional) To download the FACED dataset, please refer to the DOI link. Detailed steps are discussed here.

UPDATE on 2024.3.15: For convenience, we have uploaded preprocessed versions of the FACED dataset for 2-class and 9-class classification. Each sample in both files contains 5 frequency bands. Specifically, the shape of 'FACED_dataset_2_labels.mat' is (123, 720, 150) and the shape of 'FACED_dataset_9_labels.mat' is (123, 840, 150). Here, 123 is the number of subjects; 720 = 24×30, i.e., 24 videos of 30 seconds each (likewise, 840 = 28×30 corresponds to 28 videos); and 150 = 30×5, i.e., each sample is an EEG topological graph of 30 channels with 5 frequency bands.
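To make the shape arithmetic concrete, the sketch below works on a zero-filled stand-in array with the documented 2-class shape rather than the real file. It flattens the subject and sample axes and builds one subject index per sample. The final reshape assumes the 150 features are stored channel-major (30 channels, each with 5 bands); that ordering is an assumption and should be checked against the actual data.

```python
import numpy as np

n_subjects, n_videos, n_seconds = 123, 24, 30
n_channels, n_bands = 30, 5

# Stand-in with the documented 2-class shape (the real .mat file is not loaded here).
data = np.zeros((n_subjects, n_videos * n_seconds, n_channels * n_bands),
                dtype=np.float32)
print(data.shape)  # (123, 720, 150)

# Merge the subject axis and the sample axis into a single sample axis.
samples = data.reshape(-1, data.shape[-1])

# One subject index per flattened sample, aligned row by row with `samples`.
subject_of_sample = np.repeat(np.arange(n_subjects), data.shape[1])

# ASSUMPTION: features are laid out channel-major, i.e. (channel, band).
graphs = samples.reshape(-1, n_channels, n_bands)
print(samples.shape, subject_of_sample.shape, graphs.shape)
```

The `subject_of_sample` array is the kind of per-sample subject list that a subject-aware data split needs.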

Download link: FACED_dataset_2_labels.mat, FACED_dataset_9_labels.mat

After downloading, you can use the code below to load the dataset.

    import hdf5storage as hdf5

    data = hdf5.loadmat('FACED_dataset_2_labels.mat')['de_lds']
    print(data.shape)  # (123, 720, 150)

Models:

We have implemented the following methods:

- EEG Emotion Recognition Using Dynamical Graph Convolutional Neural Networks (DGCNN [IEEE Trans'20])

- EEG-Based Emotion Recognition Using Regularized Graph Neural Networks (RGNN [IEEE Trans'20])

- SparseDGCNN: Recognizing Emotion from Multichannel EEG Signals (SparseDGCNN [IEEE Trans'21])

- HetEmotionNet: Two-Stream Heterogeneous Graph Recurrent Neural Network for Multi-modal Emotion Recognition (HetEmotionNet [ACM MM'21])

Dataset:

GNN4EEG builds its large-scale benchmark on the Finer-grained Affective Computing EEG Dataset (FACED). As far as we know, FACED is the largest affective computing dataset. It was constructed by recording 32-channel EEG signals from a large cohort of 123 subjects watching 28 emotion-elicitation video clips.

Experiments:

We present the experimental setup and the evaluation results obtained with the proposed GNN4EEG toolkit on the FACED dataset. Analyses of overall performance are elaborated here. The experiments were run on an NVIDIA GeForce RTX 3090.

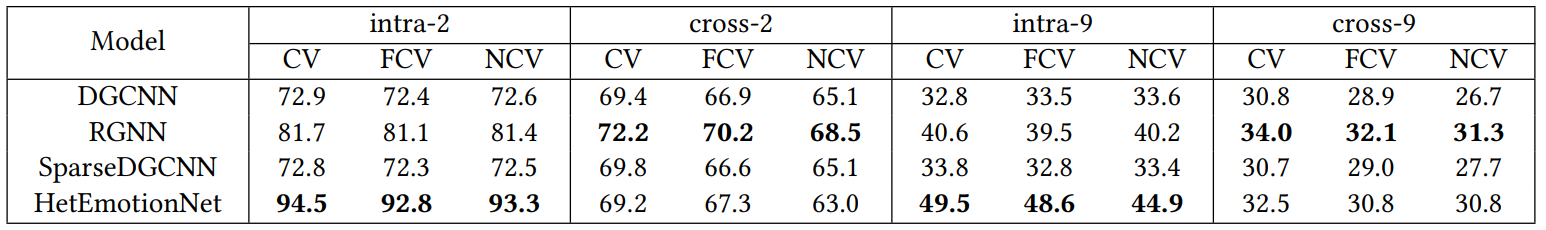

(Here, "intra-2" means the binary intra-subject classification task and "cross-9" means the 9-class cross-subject classification task. The other names follow the same pattern.)

In the experiments, we set the fold number 𝐾 = 10 for all validation protocols and the “inner” fold number 𝐾′ = 3 for NCV. In intra-subject tasks, the 30-second EEG signals of every video clip and subject are split equally into 𝐾 folds. In cross-subject tasks, the 123 subjects are split into 𝐾 folds, with each of the first nine folds containing 12 subjects and the last fold containing 15. We tune the hidden dimension over {20, 40, 80} and the learning rate over {0.0001, 0.001, 0.01} for all tasks and models. Moreover, the dropout rate is 0.5, the number of GNN layers is 2, the batch size is 256, and the maximum number of epochs is 100. To address potential overfitting, we use different weights for the 𝐿1 and 𝐿2 norms in different tasks. Specifically, both weights are set to 0.001 for intra-2, 0.005 for cross-9, and 0.003 for cross-2 and intra-9.
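The fold sizes described above can be reproduced with a few lines of NumPy. This is a sketch under stated assumptions, not the toolkit's code: the function names are hypothetical, subjects are assigned to folds in index order without shuffling, and each 30-second clip is assumed to be cut into consecutive blocks of equal length.

```python
import numpy as np

N_SUBJECTS, K, CLIP_SECONDS = 123, 10, 30

def cross_subject_fold_ids(n_subjects=N_SUBJECTS, k=K):
    """Fold index per subject: k-1 folds of n_subjects // k, the rest in the last fold."""
    base = n_subjects // k                     # 12 subjects per regular fold
    fold_ids = np.arange(n_subjects) // base   # runs from 0 to 10 for 123 subjects
    return np.minimum(fold_ids, k - 1)         # merge the overflow into the last fold

def intra_subject_fold_ids(clip_seconds=CLIP_SECONDS, k=K):
    """Fold index per second of one clip: consecutive blocks of equal length."""
    return np.arange(clip_seconds) // (clip_seconds // k)

print(np.bincount(cross_subject_fold_ids()))   # subjects per fold
print(np.bincount(intra_subject_fold_ids()))   # seconds of each clip per fold
```

Under these assumptions, the cross-subject split yields nine folds of 12 subjects and one fold of 15, and each clip contributes 3 seconds to every intra-subject fold.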

GNN4EEG implements 4 EEG classification tasks on FACED as the benchmark, 2 data splitting protocols, 3 validation protocols, and 4 GNN models. Many optional parameters are provided for convenience and flexibility.

In total, GNN4EEG implements these functions:

- protocols.data_split

- protocols.data_FACED

- protocols.evaluation

- models.DGCNN

- models.RGNN

- models.SparseDGCNN

- models.HetEmotionNet

Each model is equipped with train, predict, save, and load functions.

Detailed arguments and usage are discussed further here.

Generally, to train and evaluate a model on a certain dataset, users can follow the steps below (according to the "Structure" chapter):

- Data Splitting: Use protocols.data_split or protocols.data_FACED to load data and define the data splitting protocol.

- Model Selection: Use models.* to select your model and set its basic hyper-parameters.

- Validation Protocols and Other Training Configurations: Use protocols.evaluation to define the validation protocol and put the other training configurations into the parameter "grid". The training and evaluation process is then launched. (Hint: if you do not need cross-validation to find suitable hyper-parameters in this step, simply using *.train and *.predict is enough. Here, * represents the model declared in step 2.)
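As an illustration of what a hyper-parameter search covers, the sketch below enumerates the candidate values reported in the "Experiments" section. The dictionary layout and key names (`hidden_dim`, `lr`, `dropout`, and so on) are hypothetical and are not the format expected by the "grid" parameter of protocols.evaluation; consult the detailed usage documentation for the real argument names.

```python
from itertools import product

# Candidate values from the "Experiments" section; key names are hypothetical.
search_space = {
    "hidden_dim": [20, 40, 80],
    "lr": [0.0001, 0.001, 0.01],
}

# Settings that were kept fixed in the reported experiments.
fixed = {"dropout": 0.5, "num_layers": 2, "batch_size": 256, "max_epochs": 100}

def expand(search_space, fixed):
    """Yield one full configuration per combination of candidate values."""
    keys = list(search_space)
    for values in product(*(search_space[k] for k in keys)):
        yield {**fixed, **dict(zip(keys, values))}

configs = list(expand(search_space, fixed))
print(len(configs))
print(configs[0])
```

Each configuration would be trained and scored under the chosen validation protocol, and the best-scoring one kept.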

The complete code and other examples can be found here or here.

    @article{zhang2023gnn4eeg,
      title={GNN4EEG: A Benchmark and Toolkit for Electroencephalography Classification with Graph Neural Network},
      author={Zhang, Kaiyuan and Ye, Ziyi and Ai, Qingyao and Xie, Xiaohui and Liu, Yiqun},
      journal={arXiv preprint arXiv:2309.15515},
      year={2023}
    }

Kaiyuan Zhang (kaiyuanzhang2001@gmail.com,1292202748@qq.com)

Ziyi Ye (yeziyi1998@gmail.com)