Implementation of two word2vec algorithms from scratch: skip-gram (with negative sampling) and CBOW (continuous bag of words).
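To make the two models concrete, here is a minimal NumPy sketch of the negative-sampling objective, J = -log σ(u_o·v) - Σ_k log σ(-u_k·v), and of how skip-gram and CBOW feed it. It is not this repository's code. The function names, the matrices `V` (center vectors) and `U` (outside vectors), the uniform negative sampler, and the choice to sum rather than average CBOW's context vectors are all illustrative assumptions. Reference word2vec samples negatives from the unigram distribution raised to the 3/4 power. The sketch avoids Python-3-only syntax such as `@`.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def neg_sampling_loss_and_grad(v_hat, target, negatives, U):
    """Negative-sampling loss for one (input vector, target word) pair.

    v_hat:     (d,) input vector: a center vector (skip-gram) or the
               sum of the context vectors (CBOW).
    target:    index of the word to predict.
    negatives: (K,) integer array of sampled negative word indices.
    U:         (V, d) matrix of output ("outside") vectors.
    Returns (loss, dJ/dv_hat, dJ/dU).
    """
    u_o = U[target]
    u_k = U[negatives]                            # (K, d)
    s_pos = sigmoid(np.dot(u_o, v_hat))           # sigma(u_o . v)
    s_neg = sigmoid(-np.dot(u_k, v_hat))          # sigma(-u_k . v), shape (K,)
    loss = -np.log(s_pos) - np.sum(np.log(s_neg))

    grad_v = (s_pos - 1.0) * u_o + np.dot(1.0 - s_neg, u_k)
    grad_U = np.zeros_like(U)
    grad_U[target] += (s_pos - 1.0) * v_hat
    # np.add.at accumulates correctly when a negative index is drawn twice
    np.add.at(grad_U, negatives, np.outer(1.0 - s_neg, v_hat))
    return loss, grad_v, grad_U


def skipgram(center, contexts, V, U, rng, K=10):
    """Skip-gram: predict every context word from the center word's vector."""
    loss, grad_V, grad_U = 0.0, np.zeros_like(V), np.zeros_like(U)
    for o in contexts:
        # Assumption: uniform negative sampling, for brevity only
        negatives = rng.randint(0, U.shape[0], size=K)
        l, g_v, g_U = neg_sampling_loss_and_grad(V[center], o, negatives, U)
        loss += l
        grad_V[center] += g_v
        grad_U += g_U
    return loss, grad_V, grad_U


def cbow(center, contexts, V, U, rng, K=10):
    """CBOW: predict the center word from the summed context vectors."""
    v_hat = V[contexts].sum(axis=0)
    # Assumption: uniform negative sampling, for brevity only
    negatives = rng.randint(0, U.shape[0], size=K)
    loss, g_v, grad_U = neg_sampling_loss_and_grad(v_hat, center, negatives, U)
    grad_V = np.zeros_like(V)
    # v_hat is a sum, so each context vector receives the full dJ/dv_hat
    np.add.at(grad_V, contexts, g_v)
    return loss, grad_V, grad_U


rng = np.random.RandomState(0)
V = 0.1 * rng.randn(5, 3)   # toy vocabulary of 5 words, 3-dim vectors
U = 0.1 * rng.randn(5, 3)
print(skipgram(2, [0, 1, 3], V, U, rng, K=4)[0])
print(cbow(2, [0, 1, 3], V, U, rng, K=4)[0])
```

Because CBOW sums its context vectors, every context word receives the same gradient dJ/dv_hat. Skip-gram instead adds one loss term per context word, and all of their gradients land on the single center vector.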

No machine learning libraries were used to implement the models: all gradients and cost functions are written from scratch. "Sanity-check" tests are provided for all the main functions.
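Gradient sanity checks usually compare analytic gradients against numerical ones. Below is a minimal sketch of such a check using centered differences, (f(x + h) - f(x - h)) / 2h. The name `gradcheck`, its step size, its tolerance, and the relative-error formula are assumptions for illustration. They are not necessarily what this repository's tests use.

```python
import numpy as np


def gradcheck(f, x, h=1e-4, tol=1e-5):
    """Compare the analytic gradient of f with centered finite differences.

    f: function taking an array and returning (cost, grad).
    x: point to check; a float64 copy is perturbed, the caller's x is untouched.
    Returns True if every coordinate's relative error is below tol.
    """
    x = np.array(x, dtype=np.float64)
    _, grad = f(x)
    grad = np.array(grad, dtype=np.float64)  # copy, in case f returns a view of x
    it = np.nditer(x, flags=["multi_index"])
    while not it.finished:
        ix = it.multi_index
        old = x[ix]
        x[ix] = old + h
        f_plus, _ = f(x)
        x[ix] = old - h
        f_minus, _ = f(x)
        x[ix] = old  # restore before moving on
        numgrad = (f_plus - f_minus) / (2 * h)
        rel = abs(numgrad - grad[ix]) / max(1.0, abs(numgrad), abs(grad[ix]))
        if rel > tol:
            print("Gradient check failed at %s: analytic %g, numerical %g"
                  % (str(ix), grad[ix], numgrad))
            return False
        it.iternext()
    return True


quad = lambda x: (np.sum(x ** 2), 2 * x)  # f(x) = sum(x^2), gradient 2x
print(gradcheck(quad, np.random.randn(4, 5)))  # True
```

Centered differences have O(h²) truncation error, compared with O(h) for one-sided differences. That is why they are the usual choice for this kind of test.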

Important: the entire project and implementation are inspired by the first assignment in Stanford's course "Deep Learning for NLP" (2017). The tasks can be found at this address.

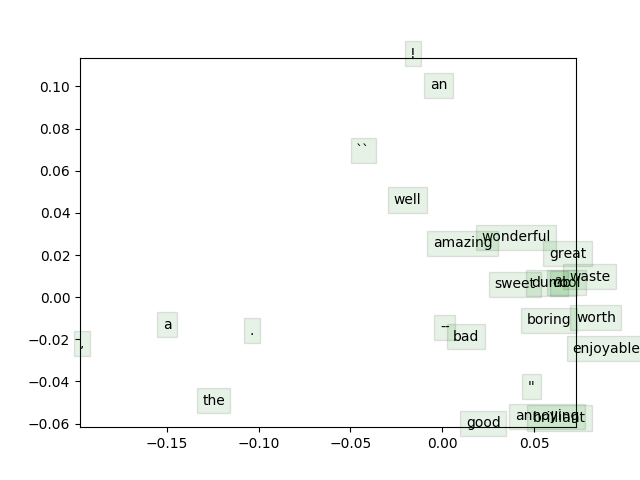

The word vectors are trained on the Stanford Sentiment Treebank (SST) using stochastic gradient descent (SGD). The full training run (roughly 40,000 iterations) takes about 3 hours on a standard machine without a GPU. The resulting word vectors can be used for a (very simple) sentiment analysis task; alternatively, pretrained vectors can be used. More details about the various parts of the implementation are in the assignment description (attached as a PDF, assignment1_description).
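The SGD update itself is short. The sketch below assumes `f` returns the cost and gradient on a freshly sampled minibatch. For word2vec, `x` would hold the stacked center and outside vectors and `f` would sample context windows from SST; that wiring is omitted here. The function name `sgd` and the step-halving schedule are hypothetical choices, not settings taken from this project.

```python
import numpy as np


def sgd(f, x0, step=0.1, iterations=1000, anneal_every=None, print_every=0):
    """Plain stochastic gradient descent: x <- x - step * grad.

    f:            returns (cost, grad) on a freshly sampled minibatch at x.
    anneal_every: if set, halve the step size every `anneal_every` iterations
                  (a hypothetical schedule chosen for illustration).
    print_every:  if > 0, print the cost returned by f every that many iterations.
    """
    x = np.array(x0, dtype=np.float64)  # work on a copy of the initial point
    for it in range(1, iterations + 1):
        cost, grad = f(x)
        x -= step * grad
        if anneal_every and it % anneal_every == 0:
            step *= 0.5
        if print_every and it % print_every == 0:
            print("iter %d: cost %.5f" % (it, cost))
    return x


# Toy check: a quadratic whose gradient is corrupted by Gaussian noise,
# standing in for the minibatch noise of real training.
rng = np.random.RandomState(0)
target = np.array([1.0, -2.0])
noisy = lambda x: (np.sum((x - target) ** 2),
                   2 * (x - target) + 0.1 * rng.randn(2))
print(sgd(noisy, np.zeros(2), iterations=2000, anneal_every=500))
```

Halving the step size periodically trades some early progress for less jitter near the end. With a fixed step, SGD keeps bouncing around the optimum at a scale set by the gradient noise.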

Requirements:

- Python (tested with 2.7.12)
- NumPy (tested with 1.15.4)
- scikit-learn (tested with 0.19.1)
- SciPy (tested with 1.0.0)
- Matplotlib (tested with 2.1.0)

Usage:

1. To download the datasets:

   ```sh
   chmod +x get_datasets.sh
   ./get_datasets.sh
   ```

2. To train word embeddings:

   ```sh
   python word2vec.py
   ```

3. To perform sentiment analysis with your own word vectors:

   ```sh
   python sentiment_analysis.py --yourvectors
   ```

4. To perform sentiment analysis with pretrained word vectors (GloVe):

   ```sh
   python sentiment_analysis.py --pretrained
   ```

All my source code is licensed under the MIT license. Consider citing the Stanford Sentiment Treebank if you use the dataset. If you use this code for non-educational purposes, please acknowledge Stanford's course: it initiated the project and provided many core parts of the current implementation.