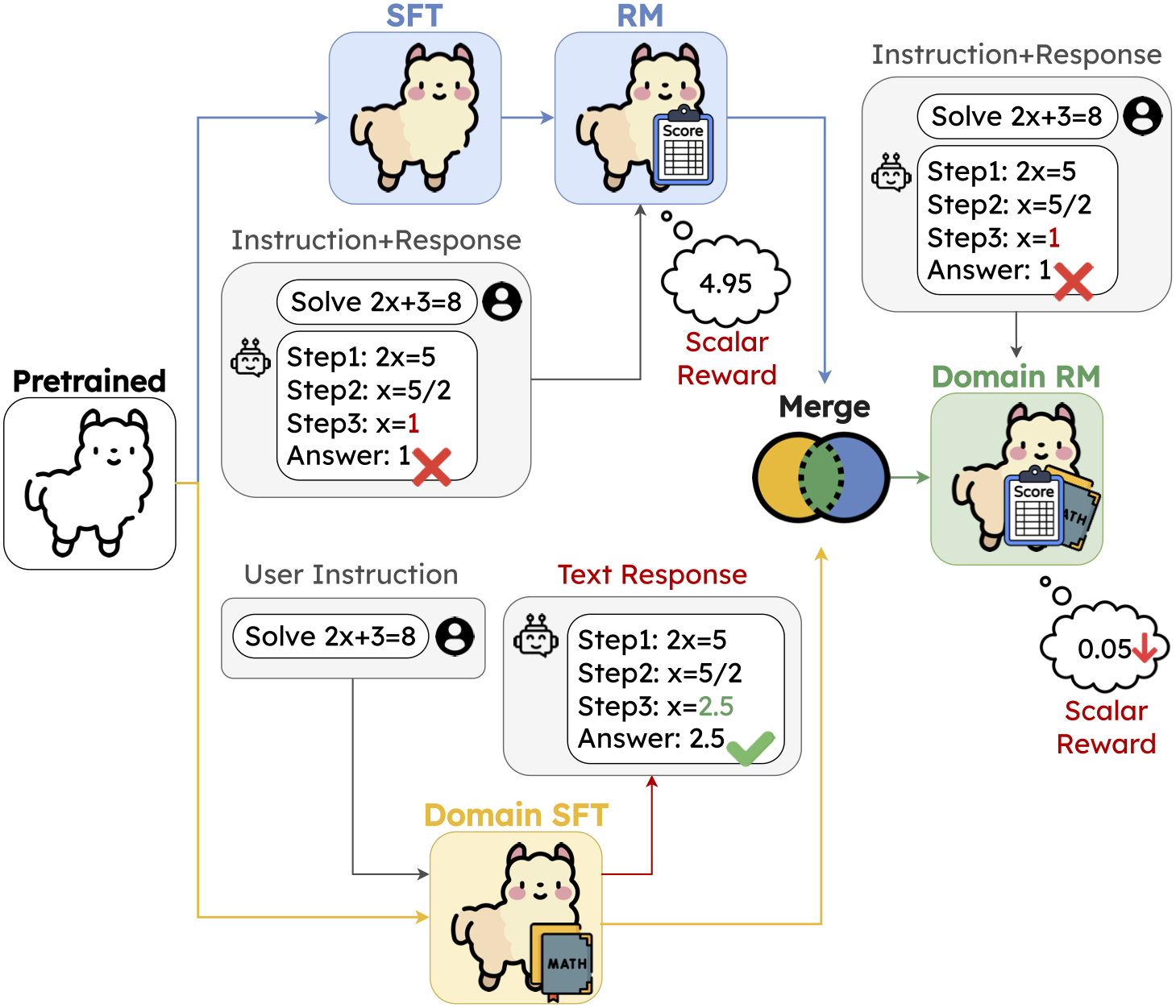

Overview of our DogeRM framework. We merge the general RM with a domain-specific LM to create the domain-specific RM. All icons used in this figure are sourced from https://www.flaticon.com/
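The merging step in the figure can be pictured as a parameter-wise weighted sum of the two models. The sketch below is a minimal illustration under our own assumptions, not the repository's `src/merge_linear.py`. Parameters are matched by name. `seq_weight` and `lm_weight` are assumed to play the roles of that script's `--seq_weight` and `--lm_weight` arguments. Parameters found only in the RM (such as the reward head) are copied unchanged. Plain Python lists stand in for tensors.

```python
from typing import Dict, List

# Toy stand-in for a model state dict: parameter name -> flattened weights.
# A real implementation would operate on tensors; this is an illustrative assumption.
StateDict = Dict[str, List[float]]


def merge_linear(rm: StateDict, lm: StateDict, seq_weight: float, lm_weight: float) -> StateDict:
    """Hypothetical linear merge: seq_weight * RM + lm_weight * LM for shared parameters.

    Assumes parameters are matched by name. RM-only parameters (e.g. the reward head)
    are copied unchanged; LM-only parameters (e.g. the LM output head) are dropped,
    so the result keeps the RM's architecture.
    """
    merged: StateDict = {}
    for name, rm_param in rm.items():
        lm_param = lm.get(name)
        if lm_param is None:
            # Parameter exists only in the RM: keep it as-is.
            merged[name] = list(rm_param)
            continue
        if len(lm_param) != len(rm_param):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        # Element-wise weighted sum of the two models' weights.
        merged[name] = [seq_weight * rm_w + lm_weight * lm_w for rm_w, lm_w in zip(rm_param, lm_param)]
    return merged


if __name__ == "__main__":
    rm = {"layer.weight": [1.0, 2.0], "score.weight": [0.5]}
    lm = {"layer.weight": [3.0, 4.0], "lm_head.weight": [9.0]}
    print(merge_linear(rm, lm, seq_weight=0.5, lm_weight=0.5))
    # {'layer.weight': [2.0, 3.0], 'score.weight': [0.5]}
```

Dropping LM-only parameters is a design choice of this sketch: the merged model is meant to remain a reward model, so it keeps the RM's set of parameters.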

- [2024.10.08] 🚧🚧🚧 We are currently integrating features such as the system prompt into reward-bench. As a result, evaluations on reward-bench are temporarily unavailable. 🚧🚧🚧

- [2024.10.06] We release our experimental code and model collections on 🤗 Hugging Face.

- [2024.09.20] 🎉🎉🎉 Our paper has been accepted to the EMNLP 2024 main conference (short paper). 🎉🎉🎉

Please clone our repo first:

```bash
git clone https://github.com/MiuLab/DogeRM.git
```

Additionally, install the following repo for evaluation:

Also clone the Auto-J Eval repo to access the Auto-J Eval pairwise testing data, which can be found at `data/testing/testdata_pairwise.jsonl`.

After installing conda, create and activate the environment:

```bash
cd DogeRM/
conda env create -n {your_env_name} -f environment.yaml
conda activate {your_env_name}
```

🚧🚧🚧

Note: We are in the process of integrating new features, including the system prompt functionality, into reward-bench. During this update, evaluations on reward-bench are temporarily unavailable. Thank you for your patience.

🚧🚧🚧

We include our modified RewardBench here. The modifications are:

- using a system prompt for our LLaMA-2 based RM, which is not included in the original implementation
- loading the RM with `float16` precision
- printing the result of each subset to `stdout`
- reporting the results to `wandb`

Since the Python version required by reward-bench differs from the one we used for RM training, we create a separate environment for reward-bench.

First create a new environment with python>=3.10, then install the required packages:

```bash
conda create -n {env_name_for_reward_bench} python=3.10 # or a later version of python
conda activate {env_name_for_reward_bench}

# install the required packages
cd reward-bench
pip install -e .
```

Then, run the following code, which contains the whole pipeline for merging and evaluation:

```bash
reward_model="miulab/llama2-7b-ultrafeedback-rm"
language_model="miulab/llama2-7b-magicoder-evol" # or other language models
merged_model_output_path="../models/merged_model"
proj_name="{your_wandb_project_name}"
run_name_prefix="{wandb_run_name_prefix}"

# the run name on wandb will be $run_name_prefix-seq-$seq_ratio-lm-$lm_ratio
# where $seq_ratio is the weight for RM parameters
# and $lm_ratio is the weight for language model parameters
# in the merging process

bash run_merge.sh \
    $reward_model \
    $language_model \
    $merged_model_output_path \
    $proj_name
```

The evaluation results can be found on wandb.
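The run-naming convention described in the comments of the reward-bench script can be captured by a pair of small helpers, for example to recover the merging weights when filtering runs on wandb. This is a hypothetical illustration: `wandb_run_name` and `parse_run_name` are our own names, and we assume the ratios appear in the run name as plain decimal numbers.

```python
from typing import Tuple


def wandb_run_name(prefix: str, seq_ratio: float, lm_ratio: float) -> str:
    """Hypothetical helper: build `{prefix}-seq-{seq_ratio}-lm-{lm_ratio}`."""
    return f"{prefix}-seq-{seq_ratio}-lm-{lm_ratio}"


def parse_run_name(name: str) -> Tuple[str, float, float]:
    """Hypothetical helper: recover (prefix, seq_ratio, lm_ratio) from a run name.

    Splits on the *last* "-lm-" and "-seq-" markers, so prefixes that contain
    hyphens still parse correctly.
    """
    if "-lm-" not in name:
        raise ValueError(f"no '-lm-' marker in {name!r}")
    head, lm_part = name.rsplit("-lm-", 1)
    if "-seq-" not in head:
        raise ValueError(f"no '-seq-' marker in {name!r}")
    prefix, seq_part = head.rsplit("-seq-", 1)
    return prefix, float(seq_part), float(lm_part)
```

For example, `wandb_run_name("dogerm-code", 0.7, 0.3)` gives `"dogerm-code-seq-0.7-lm-0.3"`, and `parse_run_name` turns that string back into `("dogerm-code", 0.7, 0.3)`.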

For Auto-J Eval, you can run the whole pipeline, including merging and evaluation, using:

```bash
reward_model="miulab/llama2-7b-ultrafeedback-rm"
language_model="miulab/llama2-7b-magicoder-evol-instruct"
merged_model_output_path="../models/merged_model"
autoj_data_path="{path_to_autoj_pairwise_testing_data}"

cd scripts/
bash run_autoj_merge.sh \
    $reward_model \
    $language_model \
    $merged_model_output_path \
    $autoj_data_path
```

The evaluation results will be printed to stdout.
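A natural metric for pairwise testing data is agreement accuracy: the RM counts as correct when it assigns the preferred response the higher reward. The sketch below illustrates that metric under our own assumptions. The field names and label encoding are hypothetical and not taken from the Auto-J data format or `run_autoj_merge.sh`, only strict preferences are handled, and reward ties are counted as incorrect.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PairwiseExample:
    # Hypothetical fields: RM scores for the two responses and the human label.
    reward_a: float
    reward_b: float
    label: int  # assumed encoding: 0 -> response A preferred, 1 -> response B preferred


def predicted_label(ex: PairwiseExample) -> Optional[int]:
    """Return the response the RM prefers, or None when the rewards tie."""
    if ex.reward_a > ex.reward_b:
        return 0
    if ex.reward_b > ex.reward_a:
        return 1
    return None


def pairwise_accuracy(examples: List[PairwiseExample]) -> float:
    """Fraction of pairs where the RM's preference matches the label (ties count as wrong)."""
    if not examples:
        raise ValueError("no examples to evaluate")
    correct = sum(predicted_label(ex) == ex.label for ex in examples)
    return correct / len(examples)
```

Counting ties as errors is a conservative choice: an RM that outputs the same score for every response then gets zero accuracy instead of a spurious 50%.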

For GSM8K, you can run the whole pipeline, including merging and evaluation, using:

```bash
reward_model="miulab/llama2-7b-ultrafeedback-rm"
language_model="miulab/llama2-7b-magicoder-evol-instruct"
merged_model_output_path="../models/merged_model"
bon_output_root="../bon_output/llama2/gsm8k"
lm_output_file_name="llama2-chat.json"
log_file_name="log_file.txt"
rm_output_file_prefix="ultrafeedback-code"

cd scripts/
bash merge_gsm8k_bon.sh \
    $reward_model \
    $language_model \
    $merged_model_output_path \
    $bon_output_root \
    $lm_output_file_name \
    $log_file_name \
    $rm_output_file_prefix
```

The evaluation results can be found at `DogeRM/bon_output/llama2/gsm8k/log_file/{log_file_name}`.
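We read `bon` in the script and path names as best-of-N: N sampled answers per question are reranked by the (merged) RM, and only the top-ranked answer is scored. The sketch below illustrates that idea for GSM8K-style numeric answers. It is a hypothetical illustration, not the logic of `merge_gsm8k_bon.sh`: the data layout is assumed, and taking the last number in a completion as its final answer is a simplification of our own.

```python
import re
from typing import List, Optional, Sequence


def best_of_n(candidates: Sequence[str], rewards: Sequence[float]) -> str:
    """Return the candidate with the highest reward (the first one on ties)."""
    if not candidates or len(candidates) != len(rewards):
        raise ValueError("need one reward per candidate")
    best_index = max(range(len(candidates)), key=lambda i: rewards[i])
    return candidates[best_index]


def extract_final_number(text: str) -> Optional[float]:
    """Simplified, hypothetical answer extraction: the last number in the text."""
    numbers = re.findall(r"-?\d+(?:\.\d+)?", text.replace(",", ""))
    return float(numbers[-1]) if numbers else None


def bon_accuracy(
    all_candidates: List[List[str]],
    all_rewards: List[List[float]],
    references: List[float],
) -> float:
    """Accuracy of the RM-selected answer over a set of questions."""
    if not references or not (len(all_candidates) == len(all_rewards) == len(references)):
        raise ValueError("inputs must be non-empty and aligned per question")
    correct = 0
    for candidates, rewards, reference in zip(all_candidates, all_rewards, references):
        # Keep only the answer the RM ranks highest, then check it against the reference.
        prediction = extract_final_number(best_of_n(candidates, rewards))
        correct += int(prediction is not None and prediction == reference)
    return correct / len(references)
```

Because only the top-ranked sample is scored, a better RM translates directly into higher accuracy even though the sampled answers themselves stay fixed.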

For MBPP, you can run the whole pipeline, including merging and evaluation, using:

```bash
reward_model="miulab/llama2-7b-ultrafeedback-rm"
language_model="miulab/llama2-7b-magicoder-evol-instruct"
merged_model_output_path="../models/merged_model"
bon_output_root="../bon_output/llama2/mbpp"
lm_output_file_name="llama2-chat.json"
log_file_name="log_file.txt"
rm_output_file_prefix="ultrafeedback-code"
path_to_bigcode_eval="{path_to_bigcode_evaluation_harness_repo}"

cd scripts/
bash merge_mbpp_bon.sh \
    $reward_model \
    $language_model \
    $merged_model_output_path \
    $bon_output_root \
    $lm_output_file_name \
    $log_file_name \
    $rm_output_file_prefix \
    $path_to_bigcode_eval
```

The evaluation results can be found at `DogeRM/bon_output/execution_result/`.
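For code tasks, the RM-selected completion presumably has to be executed against test cases rather than compared as a string, which is where the bigcode-evaluation-harness repo comes in. The sketch below shows one way to hand best-of-N selections to such a harness by serialising one selected completion per task as a JSON list of lists. This layout is our assumption about what the harness accepts for pre-computed generations (for example through its `--load_generations_path` option); please check it against the harness version you install. `select_best` and `to_generations_json` are hypothetical names, not functions from `merge_mbpp_bon.sh`.

```python
import json
from typing import List, Sequence


def select_best(candidates: Sequence[str], rewards: Sequence[float]) -> str:
    """Pick the completion with the highest RM reward (the first one on ties)."""
    if not candidates or len(candidates) != len(rewards):
        raise ValueError("need one reward per candidate")
    return max(zip(candidates, rewards), key=lambda pair: pair[1])[0]


def to_generations_json(
    all_candidates: List[List[str]], all_rewards: List[List[float]]
) -> str:
    """Serialise one best-of-N completion per task as a JSON list of lists.

    Assumed layout: outer list indexed by task, inner list holding that task's
    generations (a single one here, since best-of-N keeps only the top-ranked sample).
    """
    if len(all_candidates) != len(all_rewards):
        raise ValueError("candidates and rewards must be aligned per task")
    selected = [[select_best(c, r)] for c, r in zip(all_candidates, all_rewards)]
    return json.dumps(selected)
```

Writing the returned string to a file (e.g. a hypothetical `bon_generations.json`) produces a file in that layout, which the harness can then execute and score.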

To train the reward model on UltraFeedback preference data yourself, run:

```bash
deepspeed --num_gpus {num_gpus_on_your_device} src/train_rm.py \
    --model_name_or_path miulab/llama2-7b-alpaca-sft-10k \
    --output_dir models/llama2-7b-ultrafeedback \
    --report_to wandb \
    --run_name {wandb_run_name} \
    --per_device_train_batch_size 4 \
    --num_train_epochs 1 \
    --fp16 True \
    --gradient_accumulation_steps 1 \
    --torch_dtype auto \
    --learning_rate 1e-5 \
    --warmup_ratio 0.05 \
    --remove_unused_columns False \
    --optim adamw_torch \
    --logging_first_step True \
    --logging_steps 10 \
    --evaluation_strategy steps \
    --eval_steps 0.1 \
    --save_strategy steps \
    --save_steps 100000000 \
    --save_total_limit 1 \
    --max_length 2048 \
    --test_size 0.1 \
    --dataset_shuffle_seed 42 \
    --dataset_name argilla/ultrafeedback-binarized-preferences-cleaned \
    --dataset_config_path config/dataset.yaml \
    --gradient_checkpointing True \
    --deepspeed config/ds_config.json
```

To run only the merging step, use:

```bash
python src/merge_linear.py \
    --seq_model {your_reward_model} \
    --seq_weight {weight_for_reward_model_parameters} \
    --lm_model {your_language_model} \
    --lm_weight {weight_for_language_model_parameters} \
    --output_path {output_path_for_merged_model}
```

If you find our code and models useful, please cite our paper using the following bibtex:

```bibtex
@article{lin2024dogerm,
  title={DogeRM: Equipping Reward Models with Domain Knowledge through Model Merging},
  author={Lin, Tzu-Han and Li, Chen-An and Lee, Hung-yi and Chen, Yun-Nung},
  journal={arXiv preprint arXiv:2407.01470},
  year={2024}
}
```

We thank the reviewers for their insightful comments. This work was financially supported by the National Science and Technology Council (NSTC) in Taiwan, under Grants 111-2222-E-002-013-MY3 and 112-2223-E002-012-MY5. We thank the National Center for High-performance Computing (NCHC) of National Applied Research Laboratories (NARLabs) in Taiwan for providing computational and storage resources. We are also grateful to Yen-Ting Lin, Wei-Lin Chen, Chao-Wei Huang and Wan-Xuan Zhou from National Taiwan University for their insightful discussions and valuable advice on the overview figure.