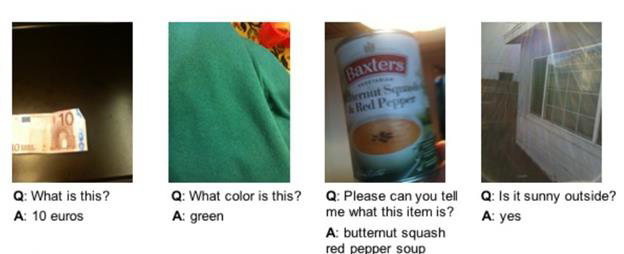

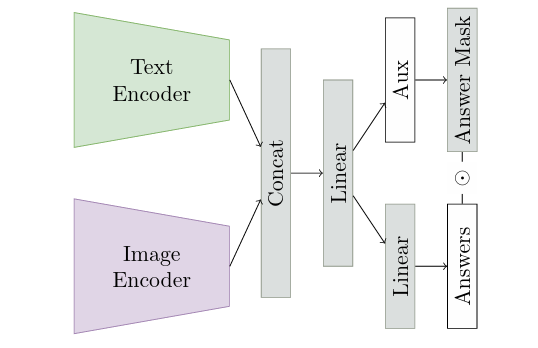

This project is a replication of the paper "Less Is More: Linear Layers on CLIP Features as Powerful VizWiz Model", which uses OpenAI's CLIP model (without fine-tuning) as a feature extractor, followed by a linear layer, to tackle the VizWiz Visual Question Answering and Answerability Challenges.
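As a rough illustration of the idea, here is a minimal PyTorch sketch of linear heads on top of frozen CLIP features. It is not the notebook's exact architecture: the class name `LinearVQAHead`, the feature size of 512, the answer vocabulary size of 100, and the choice to concatenate image and question features are all assumptions made for this example, and random tensors stand in for the precomputed CLIP embeddings.

```python
import torch
from torch import nn


class LinearVQAHead(nn.Module):
    """Hypothetical linear heads over frozen, precomputed CLIP features."""

    def __init__(self, image_dim: int, text_dim: int, num_answers: int):
        super().__init__()
        fused_dim = image_dim + text_dim
        # One linear layer scores every candidate answer in the vocabulary.
        self.answer_head = nn.Linear(fused_dim, num_answers)
        # A second linear layer produces a single answerability logit.
        self.answerability_head = nn.Linear(fused_dim, 1)

    def forward(self, image_features, text_features):
        # Concatenate image and question embeddings (an assumed fusion choice).
        fused = torch.cat([image_features, text_features], dim=-1)
        answer_logits = self.answer_head(fused)
        answerability_logit = self.answerability_head(fused).squeeze(-1)
        return answer_logits, answerability_logit


# Random stand-ins for CLIP embeddings of 4 image/question pairs.
torch.manual_seed(0)
image_features = torch.randn(4, 512)
text_features = torch.randn(4, 512)

model = LinearVQAHead(image_dim=512, text_dim=512, num_answers=100)
answer_logits, answerability_logit = model(image_features, text_features)
print(answer_logits.shape, answerability_logit.shape)
```

Because CLIP stays frozen, only these small linear layers would need training, which is what keeps the approach cheap.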

The dataset can be found here: VizWiz, along with the Kaggle notebook used in the attempt.

This project was developed as part of the course Pattern Recognition in the Spring 2023 semester at the Faculty of Engineering, Alexandria University, under the Computer and Communications Engineering department, supervised by Dr. Marwan Torki.

1- Examine the VizWiz dataset.

2- Build the model.

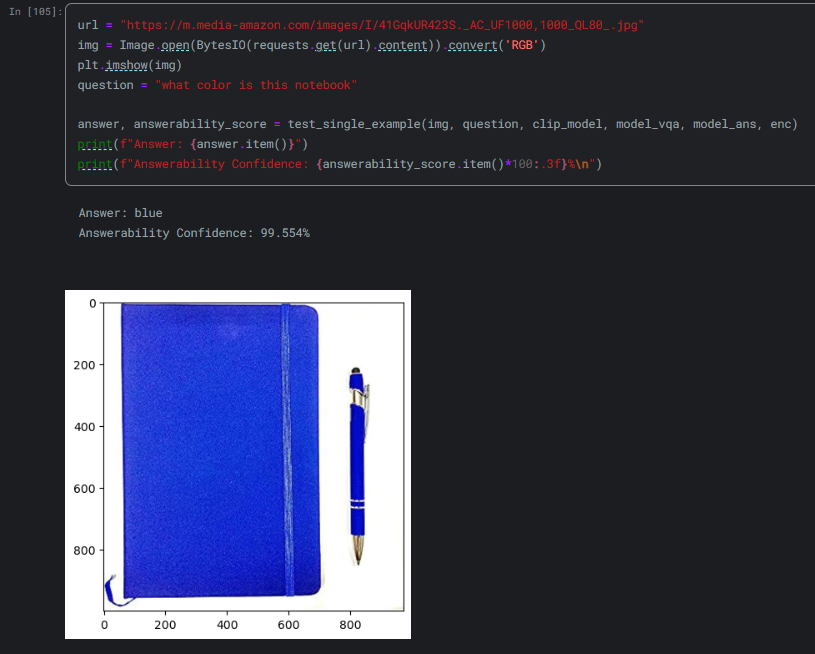

3- Evaluate the accuracy of the model according to the metrics defined by the competition.

4- Try your own example.
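For step 3, the VizWiz VQA challenge scores a predicted answer as min(1, number of human annotators who gave that answer / 3). The sketch below shows that formula in plain Python. The function name `vqa_accuracy` is hypothetical, and the normalization here (lowercasing and trimming whitespace) is a simplifying assumption; the official evaluation code applies more extensive answer normalization.

```python
def vqa_accuracy(predicted: str, human_answers: list[str]) -> float:
    """Score one prediction as min(1, matching human answers / 3)."""
    # Simplified normalization (assumption): lowercase and strip whitespace.
    normalized = predicted.strip().lower()
    matches = sum(
        1 for answer in human_answers if answer.strip().lower() == normalized
    )
    return min(1.0, matches / 3)


# Example with ten crowd-sourced answers for a single question.
answers = ["red"] * 2 + ["blue"] * 5 + ["unanswerable"] * 3
print(vqa_accuracy("Red", answers))    # two matches
print(vqa_accuracy("blue", answers))   # five matches, capped at 1.0
print(vqa_accuracy("green", answers))  # no matches
```

Averaging this score over all questions gives the overall accuracy for a set of predictions.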

This project was developed in the following environment:

- Jupyter Notebook

- Miniconda

- Python 3.11.5

- PyTorch

1- Clone the repository to your local machine:

git clone https://github.com/MohEsmail143/vizwiz-visual-question-answering.git

2- Open Jupyter notebook.

3- Check out the Jupyter notebook visual-question-answering.ipynb.

This project is licensed under the MIT License - see the LICENSE.md file for details.