fcmaes - a Python 3 gradient-free optimization library

fcmaes complements scipy optimize by providing additional optimization methods, faster C++/Eigen based implementations and a coordinated parallel retry mechanism. It supports the multi threaded application of different gradient free optimization algorithms.

fcmaes started as a fast CMA-ES implementation combined with a new smart parallel retry mechanism aimed to solve hard optimization problems from the space flight planning domain. It evolved to a general library of state-of-the-art gradient free optimization algorithms applicable to all kind of real world problems covering multi-objective and constrained problems. Its main algorithms are implemented both in Python and C++ and support parallel fitness function evaluation.

Documentation

Features

-

fcmaes is focused on optimization problems hard to solve utilizing modern many-core CPUs.

-

Parallel fitness function evaluation and different parallel retry mechanisms.

-

Minimized algorithm overhead - relative to the objective function evaluation time - even for high dimensions.

-

New multi-objective/constrained optimization algorithm combining features from Differential evolution and NSGA-II, supporting parallel function evaluation. Features enhanced multiple constraint ranking improving its performance in handling constraints for engineering design optimization.

-

BiteOpt algorithm from Aleksey Vaneev.

-

New DE (differential evolution) variant optimized for usage with parallel retry.

-

GCL-DE (differential evolution) variant from Mingcheng Zuo.

Performance

Engineering Design Optimization

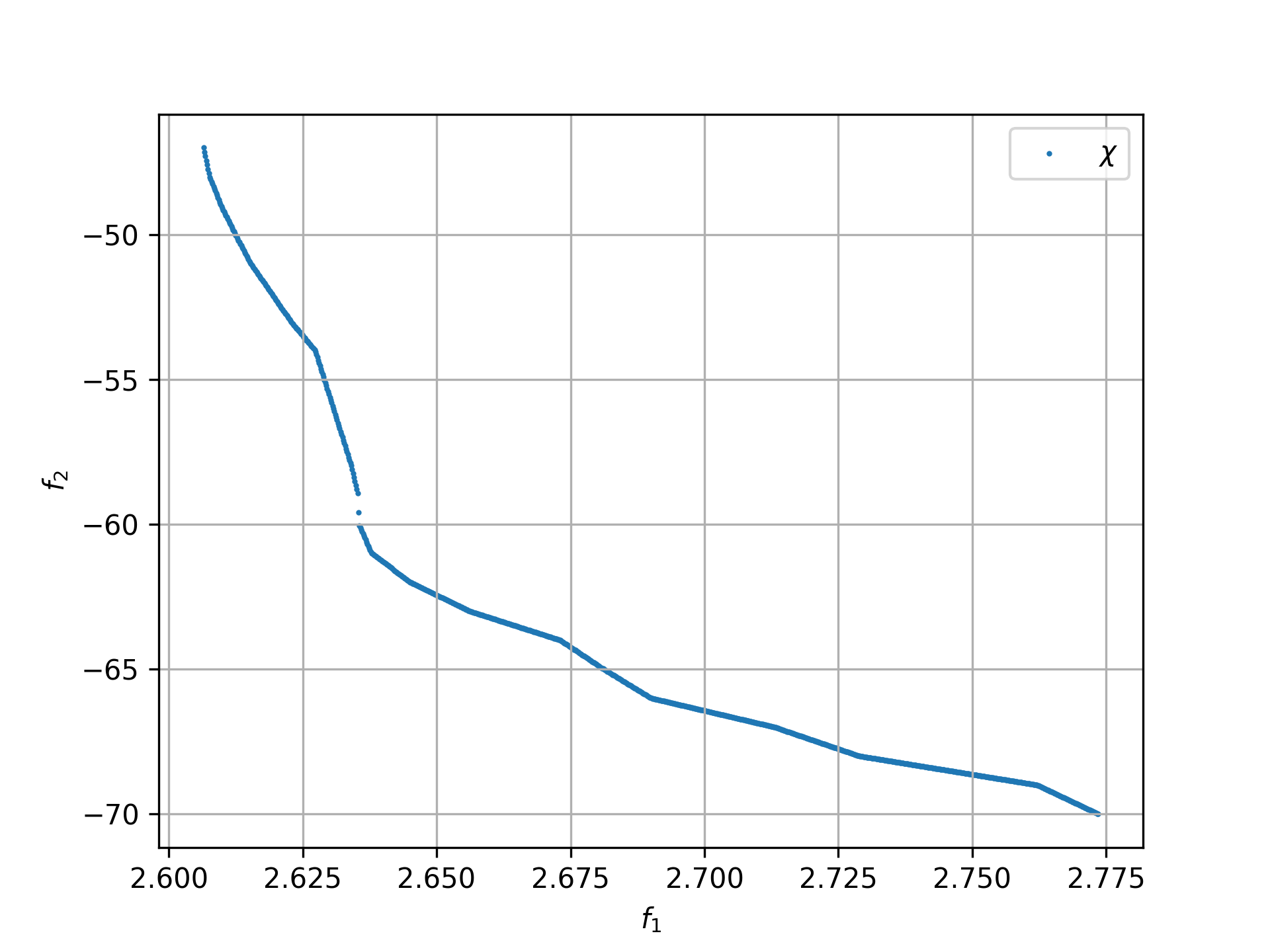

In this domain we often have multiple competing objectives and a lot of constraints. We present results for the Mazda real-world car structure design benchmark, the simultaneous optimization of three car models minimizing their weight, increasing the number of shared thicknesses of structural parts thereby fulfilling 54 constraints. 2017 there was a competition related to this problem Report of Evolutionary Computation Competition 2017, but until now not many of the ideas produced there have found their way into open source optimization libraries.

We applied modecpp.py for about 1 hour runtime using one AMD 5950x CPU on Linux, de/rand/1 strategy (nsga_update=False, pareto_update=False), population size = 512. We choose the best run out of two executed in parallel, each utilizing 16 threads (workers=16). This way about 8200 function evaluations are performed per second for both runs combined.

The resulting pareto front with hypervolume 0.3844 is:

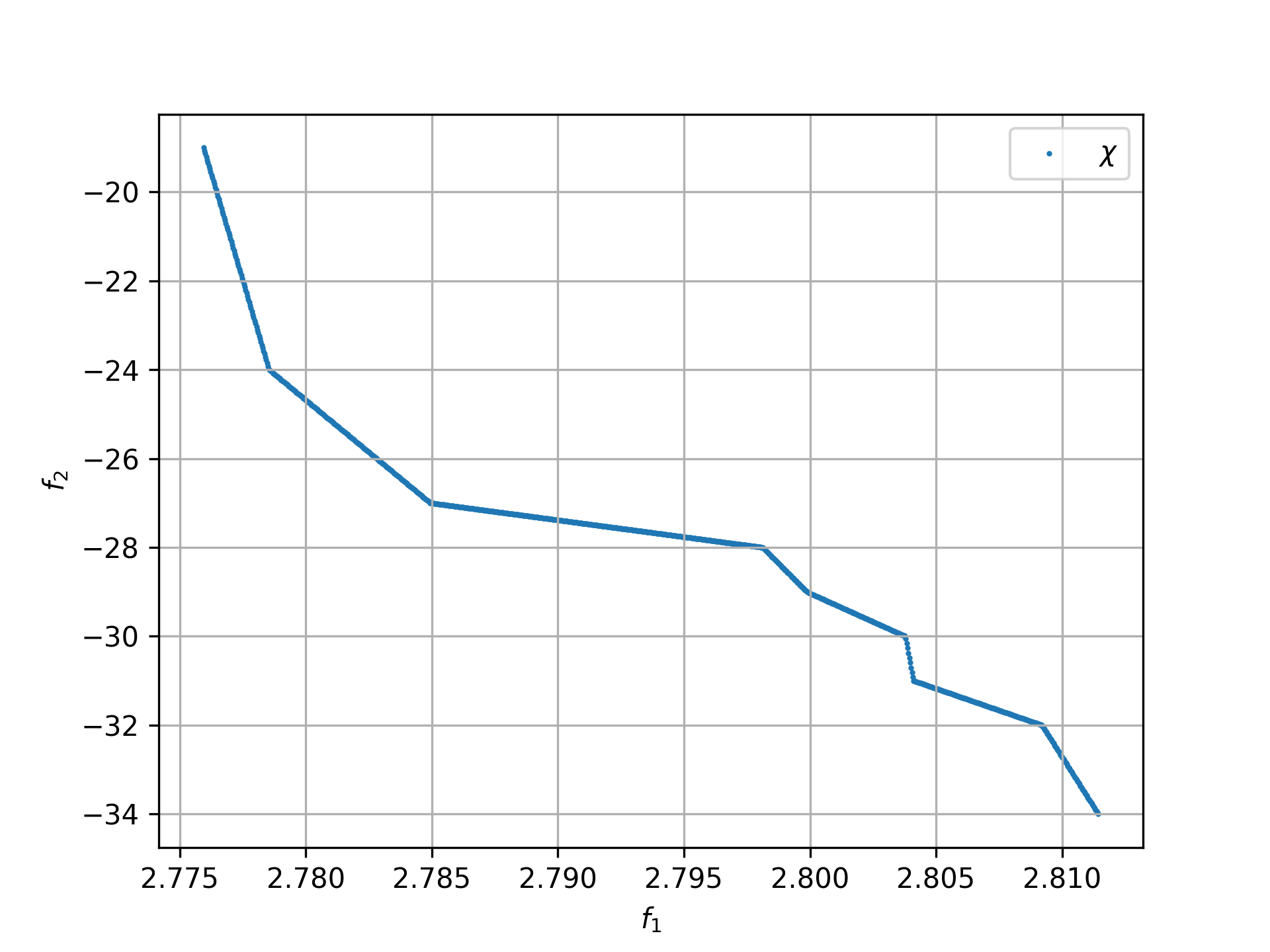

The "reference" NSGA-II solution given as part of the benchmark has hypervolume 0.1456:

Note, that the reference solution was computed using a limited budget. But NSGA-II scales much worse than fcmaes-MoDe using enhanced multiple constraint ranking.

Space Flight Trajectory Planning

fcmaes provides fast parallel example solvers for the real world space flight design problems GTOP and for the F-8_aircraft problem based on differential equations. On GTOPX you can find implementations of the corresponding objective functions using different programming languages. The solution times given in the tables below are for Linux / AMD 5950x CPU.

| problem | runs | absolute best | stopVal | success rate | mean time | sdev time |

|---|---|---|---|---|---|---|

Cassini1 |

100 |

4.9307 |

4.95535 |

98% |

7.43s |

10.7s |

Cassini2 |

100 |

8.383 |

8.42491 |

97% |

55.18s |

39.79s |

Gtoc1 |

100 |

-1581950 |

-1574080 |

100% |

25.88s |

22.15s |

Messenger |

100 |

8.6299 |

8.67305 |

100% |

18.12s |

15.48s |

Rosetta |

100 |

1.3433 |

1.35002 |

100% |

25.05s |

10.5s |

Tandem EVEES Constrained |

100 |

-1500.46 |

-1493 |

68% |

519.21s |

479.46s |

Sagas |

100 |

18.188 |

18.279 |

99% |

7.59s |

6.91s |

Messenger Full |

100 |

1.9579 |

1.96769 |

41% |

3497.25s |

2508.88s |

Messenger Full |

100 |

1.9579 |

2.0 |

59% |

1960.68s |

2024.24s |

Note that 'stopVal' is the threshold value determining success and 'mean time' includes the time for failed runs. Execute benchmark_gtop.py to reproduce these results. The same optimization algorithm was applied for all problems, using the same parameters both for the optimization algorithm and the coordinated retry.

| problem | runs | absolute best | stopVal | success rate | mean time | sdev time |

|---|---|---|---|---|---|---|

Cassini1 |

100 |

4.9307 |

4.93075 |

98% |

8.73s |

10.85s |

Cassini2 |

100 |

8.383 |

8.38305 |

44% |

310.18s |

283.52s |

Gtoc1 |

100 |

-1581950 |

-1581949 |

100% |

46.41s |

35.57s |

Messenger |

100 |

8.6299 |

8.62995 |

98% |

57.91s |

39.97s |

Rosetta |

100 |

1.3433 |

1.34335 |

27% |

268.18s |

207.59s |

Tandem |

100 |

-1500.46 |

-1500 |

65% |

564.26s |

517.94s |

Sagas |

100 |

18.188 |

18.189 |

99% |

8.76s |

7.01s |

Optimization algorithms

To utilize modern many-core processors all single-objective algorithms should be used with the parallel retry for cheap fitness functions, otherwise use parallel function evaluation.

-

MO-DE: A new multi-objective optimization algorithm merging concepts from differential evolution and NSGA. Implemented both in Python and in C++. Provides an ask/tell interface and supports constraints and parallel function evaluation. Can also be applied to single-objective problems with constraints.

-

BiteOpt algorithm from Aleksey Vaneev BiteOpt. Only a C++ version is provided. If your problem is single objective and if you have no clue what algorithm to apply, try this first. Works well with almost all problems. For constraints you have to use weighted penalties.

-

Differential Evolution: Implemented both in Python and in C++. Additional concepts implemented are temporal locality, stochastic reinitialization of individuals based on their age and oscillating CR/F parameters. Provides an ask/tell interface and supports parallel function evaluation.

-

CMA-ES: Implemented both in Python and in C++. Provides an ask/tell interface and supports parallel function evaluation.

-

GCL-DE: Eigen based implementation in C++. See "A case learning-based differential evolution algorithm for global optimization of interplanetary trajectory design, Mingcheng Zuo, Guangming Dai, Lei Peng, Maocai Wang, Zhengquan Liu", doi.

-

Dual Annealing: Eigen based implementation in C++. Use the scipy implementation if you prefer a pure python variant or need more configuration options.

-

Expressions: There are two operators for constructing expressions over optimization algorithms: Sequence and random choice. Not only the five single objective algorithms above, but also scipy and NLopt optimization methods and custom algorithms can be used for defining algorithm expressions.

Installation

Linux

-

pip install fcmaes. In case of issues with dependent libraries on old systems all dependent so-files are in https://github.com/dietmarwo/fast-cma-es/blob/master/fcmaes/lib.

Windows

-

pip install fcmaes -

install C++ runtime libraries https://support.microsoft.com/en-us/help/2977003/the-latest-supported-visual-c-downloads

Parallel fitness function evaluation works only with the native Python optimizers, not with the C++ ones. Use "workers = 1" for the C++-optimizers. Python multiprocessing is currently generally flawed on Windows. To get optimal scaling from parallel retry and parallel function evaluation use:

-

Linux subsystem for Windows:

The Linux subsystem can read/write NTFS, so you can do your development on a NTFS partition. Just the Python call is routed to Linux. If performance of the fitness function is an issue and you don’t want to use the Linux subsystem for Windows, think about switching to fcmaes-java.

MacOS

-

pip install fcmaes -

For using the C++ optimization algorithms:

-

adapt CMakeLists.txt

-

generate the shared library:

cmake . ; make install

-

Usage

Usage is similar to scipy.optimize.minimize.

For parallel retry use:

from fcmaes.optimizer import logger

from fcmaes import retry

ret = retry.minimize(fun, bounds, logger=logger())The retry logs mean and standard deviation of the results, so it can be used to test and compare optimization algorithms: You may choose different algorithms for the retry:

from fcmaes.optimizer import logger, Bite_cpp, De_cpp, Cma_cpp, Sequence

ret = retry.minimize(fun, bounds, logger=logger(), optimizer=Bite_cpp(100000))

ret = retry.minimize(fun, bounds, logger=logger(), optimizer=De_cpp(100000))

ret = retry.minimize(fun, bounds, logger=logger(), optimizer=Cma_cpp(100000))

ret = retry.minimize(fun, bounds, logger=logger(), optimizer=Sequence([De_cpp(50000), Cma_cpp(50000)]))Here examples.py you find examples. Check the Tutorial for more details.

In tutorial.py and advexamples.py you find examples for the smart retry.

Log output of the parallel retry

The log output of the parallel retry contains the following rows:

Parallel retry

-

time (in sec)

-

evaluations / sec

-

number of retries - optimization runs

-

total number of evaluations in all retries

-

best value found so far

-

mean of the values found by the retries below the defined threshold

-

standard deviation of the values found by the retries below the defined threshold

-

list of the best 20 function values in the retry store

-

best solution (x-vector) found so far

Mean and standard deviation would be misleading when using coordinated retry, because of the retries initiated by crossover. Therefore the rows of the log output differ slightly:

Smart retry

-

time (in sec)

-

evaluations / sec

-

number of retries - optimization runs

-

total number of evaluations in all retries

-

best value found so far

-

worst value in the retry store

-

number of entries in the retry store

-

list of the best 20 function values in the retry store

-

best solution (x-vector) found so far

Dependencies

Runtime:

Compile time (binaries for Linux and Windows are included):

-

Eigen https://gitlab.com/libeigen/eigen (version >= 3.9 is required for CMA).

-

pcg-cpp: https://github.com/imneme/pcg-cpp - used in all C++ optimization algorithms.

-

LBFGSpp: https://github.com/yixuan/LBFGSpp/tree/master/include - used for dual annealing local optimization.

Optional dependencies:

Example dependencies:

-

pykep: pykep. Install with 'pip install pykep'.

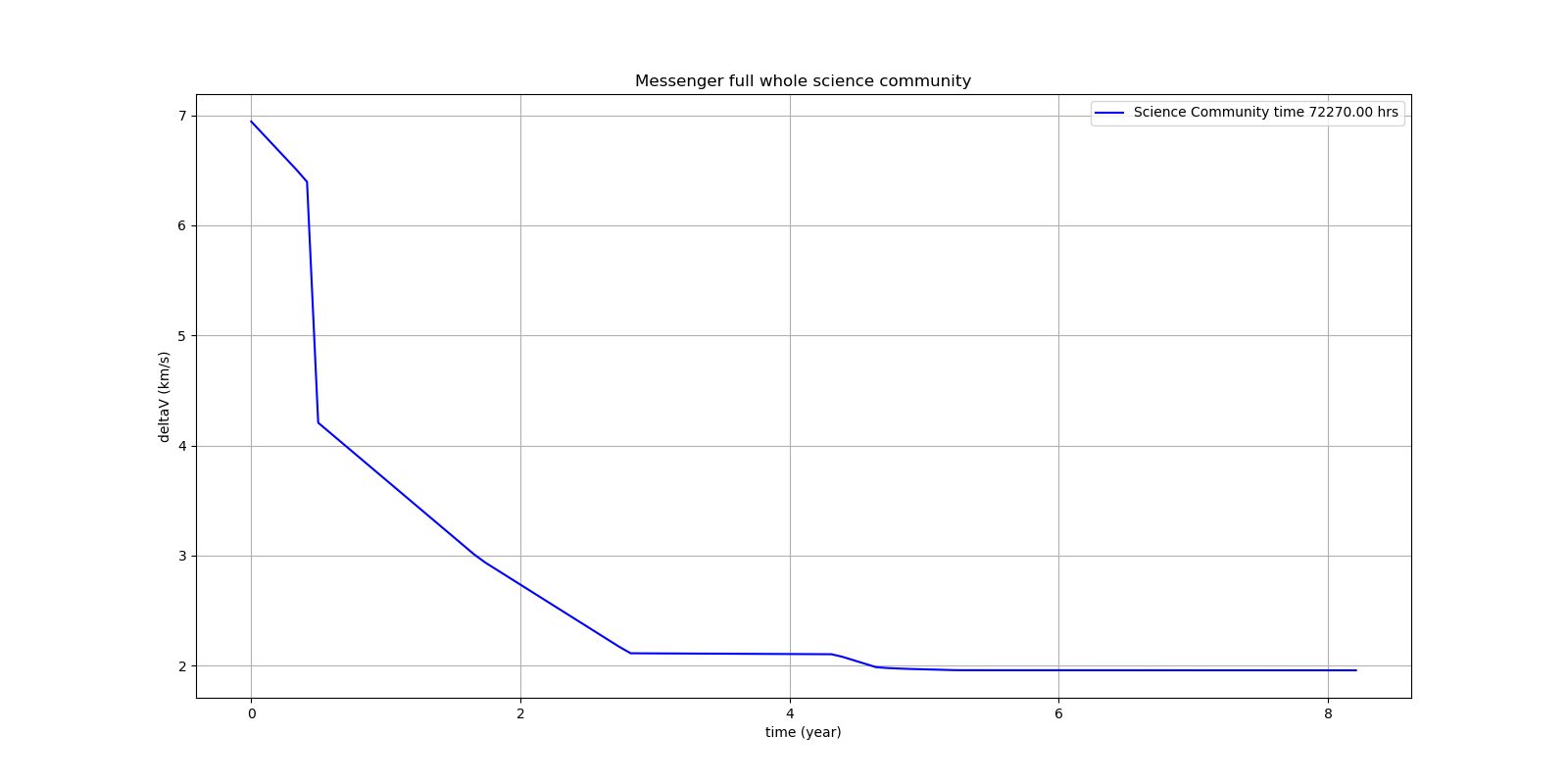

ESAs Messenger-Full Space Trajectory Design Problem

Because of its famous complexity ESAs 26-dimensional Messenger full problem is often referenced in the literature, see for instance MXHCP paper.

fcmaes is the only library capable of solving it using a single CPU: In about 1950 seconds on average using an AMD 5950x (1250 seconds for the java variant) .

The Problem models a multi-gravity assist interplanetary space mission from Earth to Mercury. In 2009 the first good solution (6.9 km/s) was submitted. It took more than five years to reach 1.959 km/s and three more years until 2017 to find the optimum 1.958 km/s. The picture below shows the progress of the whole science community since 2009:

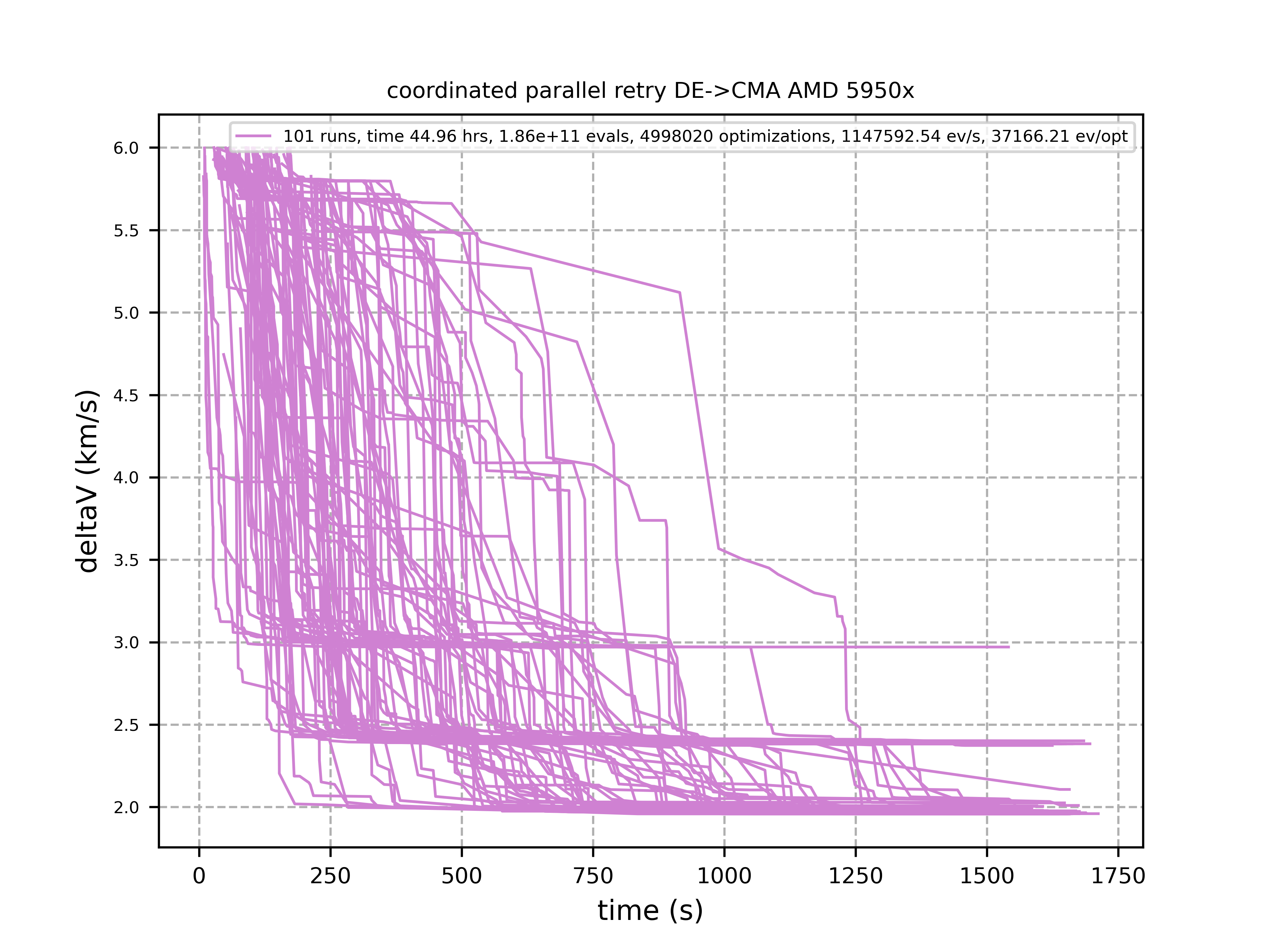

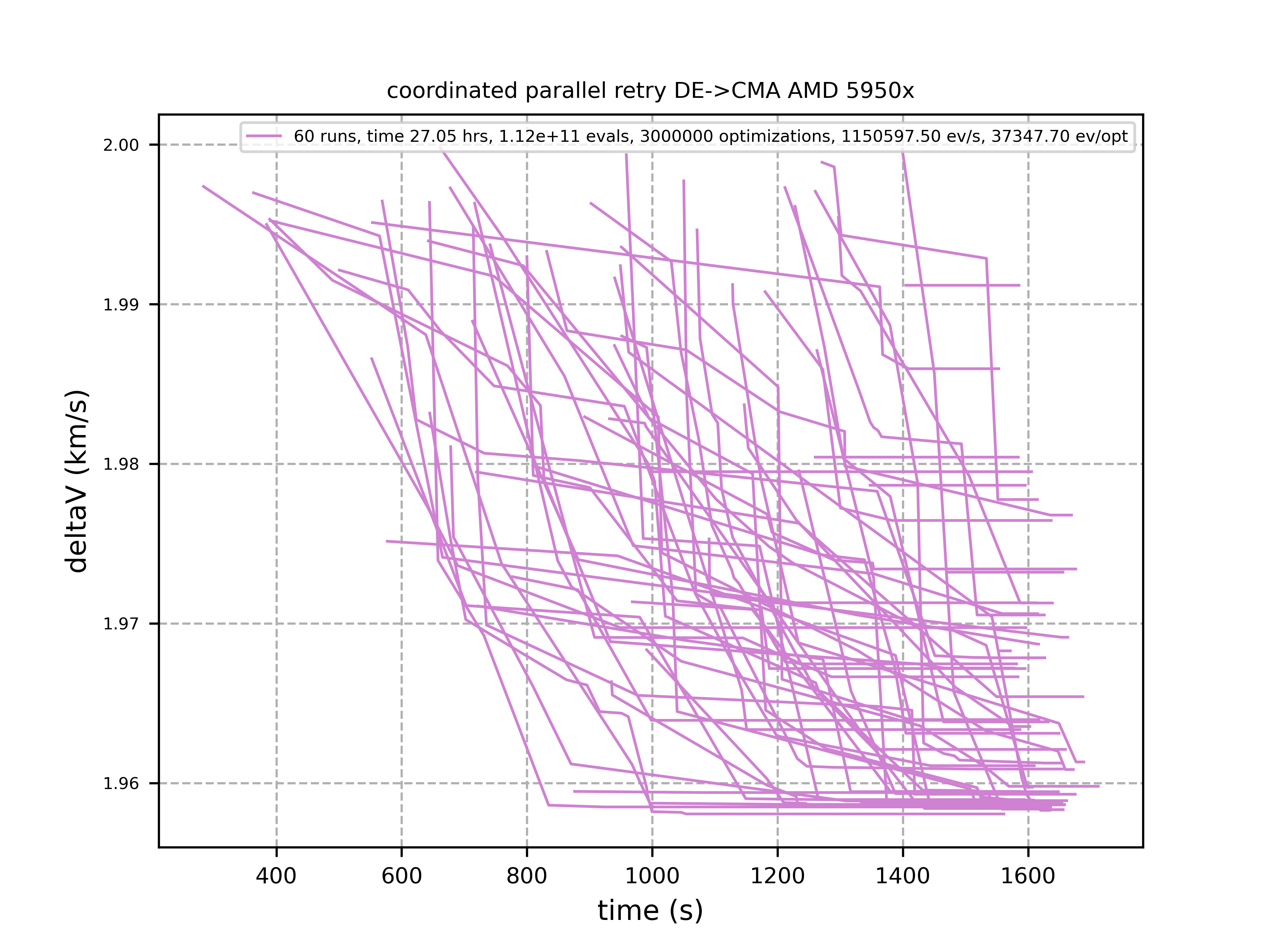

The following picture shows 101 coordinated retry runs:

60 out of these 101 runs produced a result better than 2 km/s:

About 1.2*10^6 function evaluations per second were performed which shows excellent scaling of the algorithm utilizing all 16 cores / 32 threads. https://github.com/dietmarwo/fcmaes-java/blob/master/README.adoc shows that the fcmaes java implementation sharing the same C++ code is significantly faster. fcmaesray shows how a 5 node cluster using 96 CPU-cores executing fcmaes coordinated retry performs in comparison.

Citing

@misc{fcmaes2021,

author = {Dietmar Wolz},

title = {fcmaes - A Python-3 derivative-free optimization library},

note = {Python/C++ source code, with description and examples},

year = {2021},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {Available at \url{https://github.com/dietmarwo/fast-cma-es}},

}