Saltstack-Kubernetes is an open source Kubernetes cluster deployment platform that aims to evaluate and run Cloud Native Applications such as those registered in the CNCF landscape. Server provisioning is managed using Terraform, primarily targeting low-cost cloud providers like Scaleway and Hetzner. Kubernetes cluster deployment is managed using Saltstack, which deploys the software binaries, configuration files and cloud native applications required to operate the cluster.

The solution design carries the following requirements:

- Cloud provider agnostic: Works similarly on any cloud

- Networking privacy: All intra-cluster communications are TLS encrypted, pod network is encrypted, Firewall is enabled by default.

- Cluster security: Node security and RBAC are enabled by default

- Public endpoint: Leverage two servers standing as edge gateways and allow the use of a single redundant public IP address

- Secure admin network: Establish a private Mesh VPN between all servers

- Composable CRI: Support various Container Runtime Interface plugins (see: Features)

- Composable CNI: Support various Container Network Interface plugins (see: Features)

- Converged Storage: Persistent storage provided by cluster nodes

- API driven DNS: DNS records are managed just-in-time during the deployment

- Stable: Only leverage stable versions of software components

| Cloud provider | DNS provider | Kubernetes version | Container runtime | Container network |
|---|---|---|---|---|

- Default: bold

Before starting, check that the following requirements are met:

- Register a public domain name

- Associate the domain name with Cloudflare (Free)

- Register with the cloud provider of your choice (e.g. Scaleway, Hetzner). Expect $100 for a full month.

- Set up `terraform/terraform.tfvars` with your appropriate credentials and configuration using this Example

- Set up `srv/pillar/cluster_config.sls` with your appropriate credentials and configuration using this Example

- Use this guide to customize the various credentials.

- Install the required tools (i.e. terraform, jq, wireguard-tools, etc.)

- Create the SSH key required to send commands to the servers.

Notice: The configuration files are listed in the `.gitignore` file to avoid accidental uploads to the Web.
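
The tool prerequisite above can be checked before going further. The following is a minimal sketch, not part of the project: `missing_tools` is a hypothetical helper, and the binary names passed to it are assumptions (for instance, `wg` is the command shipped by wireguard-tools), so adjust the list to what you actually install.

```shell
# Hypothetical helper: print the name of every command that is not found on PATH.
# It always returns 0, so it is safe to call from scripts running under `set -e`.
missing_tools() {
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      printf '%s\n' "$tool"
    fi
  done
  return 0
}

# Assumed binary names for the tools listed in the requirements.
missing_tools terraform jq wg ssh scp
```

An empty output means every listed command was found. For the SSH key, `ssh-keygen -t ed25519` is one common way to create a key pair; the key type and file name the project expects are not stated here, so check the Terraform configuration before generating one.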

Once the requirements are met, use the following commands to instantiate the servers and the appropriate DNS records.

    cd terraform/
    terraform init
    terraform plan
    terraform apply

14 servers are instantiated by default. Terraform task parallelism is limited in order to constrain the load on the cloud provider API.

At the end of the process, an output similar to the following should be displayed, listing all the generated servers and associated IP addresses.

    Outputs:

    hostnames = [
      edge01,
      edge02,
      etcd01,
      etcd02,
      etcd03,
      master01,
      master02,
      master03,
      node01,
      node02,
      node03,
      node04,
      node05,
      node06
    ]
    ...
    vpn_ips = [
      172.17.4.251,
      172.17.4.252,
      172.17.4.51,
      172.17.4.52,
      172.17.4.53,
      172.17.4.101,
      172.17.4.102,
      172.17.4.103,
      172.17.4.201,
      172.17.4.202,
      172.17.4.203,
      172.17.4.204,
      172.17.4.205,
      172.17.4.206
    ]
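
The two lists in this output appear to follow the same order (edge, etcd, master, then node servers). Assuming that positional correspondence holds, which the output itself does not state, a small sketch like the following pairs each VPN address with its hostname. `pair_hosts` is a hypothetical helper, not something the project provides.

```shell
# Hypothetical helper: pair hostnames with VPN IPs by position.
# $1: space-separated hostnames, $2: space-separated VPN IPs (assumed same order).
pair_hosts() {
  hosts=$1
  for ip in $2; do
    host=${hosts%% *}              # first remaining hostname
    printf '%s %s\n' "$ip" "$host"
    case $hosts in
      *" "*) hosts=${hosts#* } ;;  # drop the hostname just used
      *)     hosts= ;;             # no hostname left
    esac
  done
}

pair_hosts "edge01 edge02 etcd01" "172.17.4.251 172.17.4.252 172.17.4.51"
```

The sample call prints one `IP hostname` pair per line, in the style of an `/etc/hosts` entry. The real values can be read back at any time with `terraform output` from the Terraform directory.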

The Kubernetes cluster deployment is achieved by connecting to the salt-master server (i.e. edge01) to execute the salt states.

This can be achieved using the following one-liner...

    ssh root@edge01.example.com -C "salt-run state.orchestrate _orchestrate"

... or by first opening an SSH session to benefit from the salt state output coloring.

    ssh root@edge01.example.com
    root@edge01 ~ # salt-run state.orchestrate _orchestrate

Replace "example.com" with the "public-domain" value from the salt pillar.
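
Since the domain changes from one cluster to another, the one-liner can be wrapped in a small function. This is only a sketch under assumptions: `orchestrate` and the `DRY_RUN` variable are hypothetical names introduced here, and the salt-master is assumed to be reachable as `edge01.<domain>` as in the commands above.

```shell
# Hypothetical wrapper around the orchestration one-liner.
# $1: the public domain of the cluster (the "public-domain" pillar value).
# With DRY_RUN=1 the command is printed instead of being executed.
orchestrate() {
  domain=$1
  set -- ssh "root@edge01.${domain}" "salt-run state.orchestrate _orchestrate"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}
```

Running `DRY_RUN=1 orchestrate example.com` shows the command that would be sent, which is a cheap way to confirm the hostname before starting a long orchestration run.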

Retrieve the admin user token stored in the salt pillar (i.e. `/srv/pillar/cluster_config.sls`).

Install kubectl.

Download the Kubernetes cluster CA certificate.

    export CLUSTER_DOMAIN="example.com"
    mkdir -p ~/.kube/ssl/${CLUSTER_DOMAIN}
    scp root@edge01.${CLUSTER_DOMAIN}:/etc/kubernetes/ssl/ca.pem ~/.kube/ssl/${CLUSTER_DOMAIN}/

Create the kubectl configuration file.

    export CLUSTER_TOKEN=mykubernetestoken
    export CLUSTER_NAME="example"
    # Use ${HOME} rather than "~": the shell does not expand a tilde inside
    # quotes, nor after the "=" of a command-line option.
    export KUBECONFIG="${HOME}/.kube/config"

    kubectl config set-cluster ${CLUSTER_NAME} \
      --server=https://kubernetes.${CLUSTER_DOMAIN}:6443 \
      --certificate-authority="${HOME}/.kube/ssl/${CLUSTER_DOMAIN}/ca.pem"

    kubectl config set-credentials admin-${CLUSTER_NAME} \
      --token=${CLUSTER_TOKEN}

    kubectl config set-context ${CLUSTER_NAME} \
      --cluster=${CLUSTER_NAME} \
      --user=admin-${CLUSTER_NAME}

    kubectl config use-context ${CLUSTER_NAME}

Check the Kubernetes cluster component health.

    kubectl get componentstatus
    NAME                 STATUS    MESSAGE              ERROR
    etcd-2               Healthy   {"health": "true"}
    etcd-1               Healthy   {"health": "true"}
    controller-manager   Healthy   ok
    scheduler            Healthy   ok
    etcd-0               Healthy   {"health": "true"}

Check the Kubernetes cluster nodes status.

    kubectl get nodes
    NAME       STATUS   ROLES          AGE   VERSION
    master01   Ready    master         11d   v1.18.9
    master02   Ready    master         11d   v1.18.9
    master03   Ready    master         11d   v1.18.9
    node01     Ready    node           11d   v1.18.9
    node02     Ready    node           11d   v1.18.9
    node03     Ready    node           11d   v1.18.9
    node04     Ready    node           11d   v1.18.9
    node05     Ready    node           11d   v1.18.9
    node06     Ready    node           11d   v1.18.9
    edge01     Ready    ingress,node   11d   v1.18.9
    edge02     Ready    ingress,node   11d   v1.18.9

Retrieve the URLs protected by the Kube-APIserver.

kubectl cluster-info

Kubernetes master is running at https://kubernetes.example.com:6443

Elasticsearch is running at https://kubernetes.example.com:6443/api/v1/namespaces/kube-system/services/elasticsearch-logging/proxy

Heapster is running at https://kubernetes.example.com:6443/api/v1/namespaces/kube-system/services/heapster/proxy

Kibana is running at https://kubernetes.example.com:6443/api/v1/namespaces/kube-system/services/kibana-logging/proxy

CoreDNS is running at https://kubernetes.example.com:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

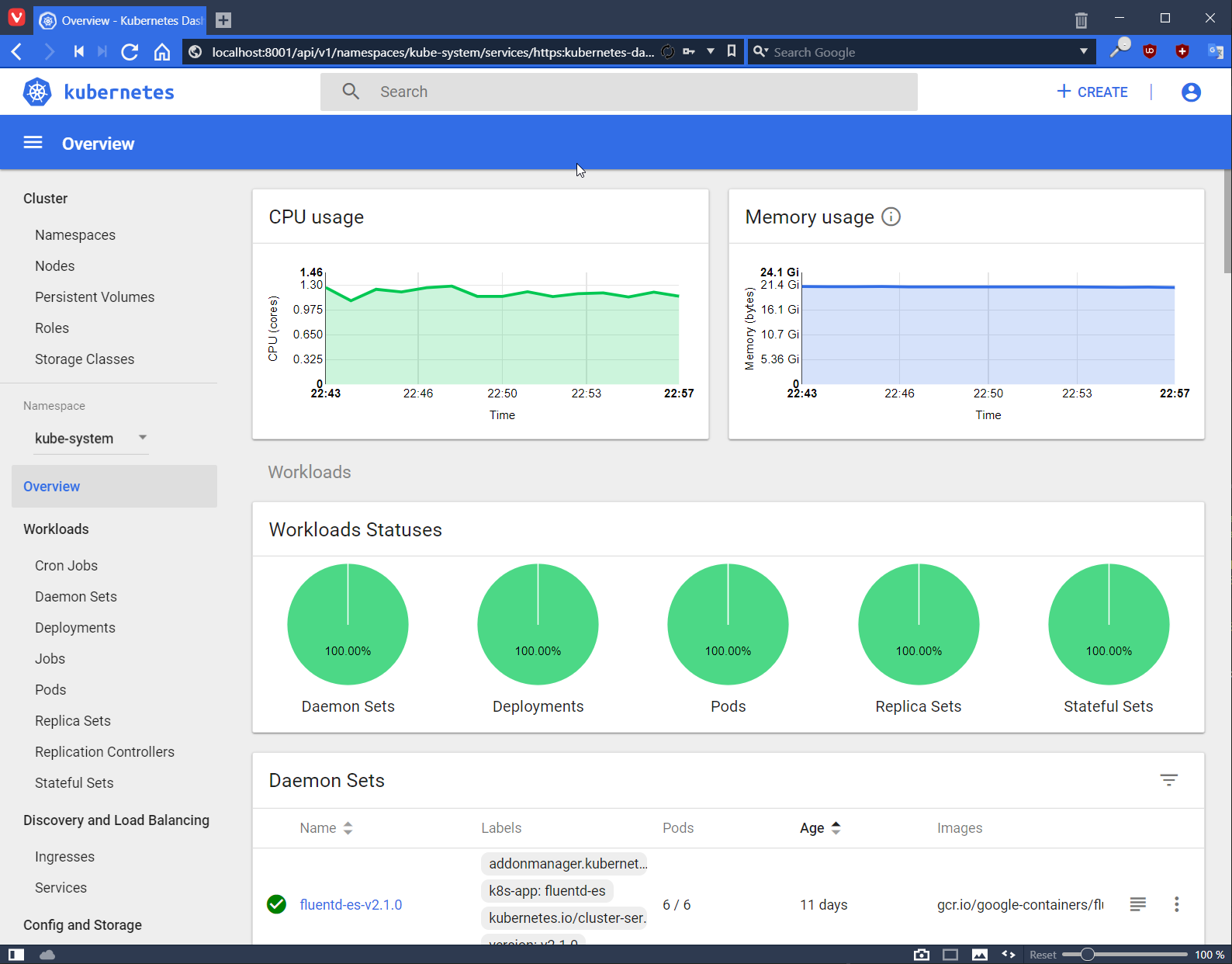

kubernetes-dashboard is running at https://kubernetes.example.com:6443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy

Grafana is running at https://kubernetes.example.com:6443/api/v1/namespaces/kube-system/services/monitoring-grafana/proxy

InfluxDB is running at https://kubernetes.example.com:6443/api/v1/namespaces/kube-system/services/monitoring-influxdb:http/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.The URLs returned by kubectl cluster-info are protected by a mutual TLS authentification. Meaning that direct access from your Web Browser is denied until you register the appropriate certificate and private key in it.

Prefer the `kubectl proxy` command, which enables access to the URLs protected by the Kube-APIServer.

Once launched, the URLs are available from localhost on HTTP port 8001.

e.g. http://localhost:8001/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
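
The proxied URLs follow the same pattern as those printed by `kubectl cluster-info`, with the API server address replaced by the local proxy. As an illustration, the sketch below builds such a URL from a namespace and a service name; `proxy_url` is a hypothetical helper, and the optional third argument is an assumption for the case where the proxy is started on another port.

```shell
# Hypothetical helper: build the local URL served by `kubectl proxy` for a service.
# $1: namespace, $2: service (optionally scheme:name:port), $3: local port (default 8001).
proxy_url() {
  printf 'http://localhost:%s/api/v1/namespaces/%s/services/%s/proxy\n' \
    "${3:-8001}" "$1" "$2"
}

# Reproduces the example URL given above.
proxy_url kube-system kube-dns:dns
```

The URL only answers while `kubectl proxy` is running, typically in a separate terminal. If port 8001 is already taken, `kubectl proxy --port=<port>` starts it elsewhere, and the same port would then be passed as the third argument.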

This project is heavily inspired by the following projects: