This repository contains the official PyTorch implementation and released dataset of the ACM MM 2022 paper:

SD-GAN: Semantic Decomposition for Face Image Synthesis with Discrete Attribute

Kangneng Zhou, Xiaobin Zhu, Daiheng Gao, Kai Lee, Xinjie Li, Xu-cheng Yin

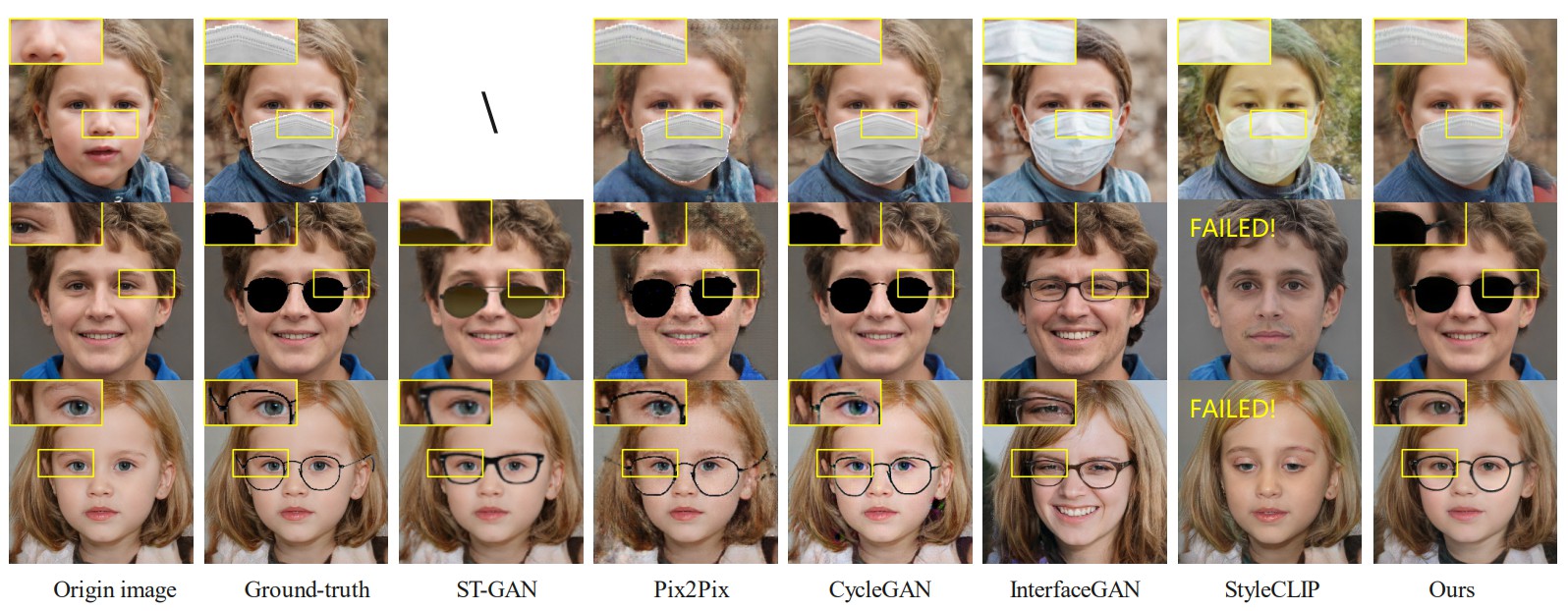

Manipulating latent code in generative adversarial networks (GANs) for facial image synthesis mainly focuses on continuous attribute synthesis (e.g., age, pose and emotion), while discrete attribute synthesis (like face mask and eyeglasses) receives less attention. Directly applying existing works to facial discrete attributes may cause inaccurate results. In this work, we propose an innovative framework, dubbed SD-GAN, to tackle challenging facial discrete attribute synthesis via semantic decomposition. Concretely, we explicitly decompose the discrete attribute representation into two components, i.e., the semantic prior basis and the offset latent representation. The semantic prior basis provides an initial direction for manipulating the face representation in the latent space. The offset latent representation, obtained by a 3D-aware semantic fusion network, adjusts the prior basis. In addition, the fusion network integrates a 3D embedding for better identity preservation and discrete attribute synthesis. The combination of the prior basis and the offset latent representation enables our method to synthesize photo-realistic face images with discrete attributes. Notably, we construct a large and valuable dataset, MEGN (Face Mask and Eyeglasses images crawled from Google and Naver), to compensate for the lack of discrete attributes in existing datasets. Extensive qualitative and quantitative experiments demonstrate the state-of-the-art performance of our method.

Representative visual results of different methods. Our method outperforms other methods on visual quality.

- Getting Started

- Proposed Dataset for Training StyleGAN

- Pretrained Models

- Inference

- Acknowledgments

- Citation

- Linux

- NVIDIA GPU + CUDA CuDNN (CPU may be possible with some modifications, but is not inherently supported)

- Python 3

- PyTorch >= 1.6.0

- Neural renderer, as required by the Unsup3d environment

- We train a StyleGAN2 model based on the official implementation and convert it to PyTorch format using convert_weight.py.
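The prerequisites above can be checked before running anything else. The following is a minimal sketch, not part of the repository: the helper names `parse_version` and `meets_minimum` are hypothetical, and the script only reports the PyTorch version and CUDA availability. It does not verify the Unsup3d renderer dependency.

```python
import importlib.util
import re

# Minimum PyTorch version stated in the prerequisites.
MIN_TORCH = (1, 6, 0)


def parse_version(version):
    """Turn a string such as '1.13.1+cu117' into (1, 13, 1)."""
    # Drop any local build suffix after '+', then keep the first three numbers.
    numbers = re.findall(r"\d+", version.split("+")[0])
    parts = [int(n) for n in numbers[:3]]
    # Pad short versions such as '2.0' with zeros.
    return tuple(parts + [0] * (3 - len(parts)))


def meets_minimum(version, minimum=MIN_TORCH):
    """Return True if `version` is at least `minimum`."""
    return parse_version(version) >= minimum


if __name__ == "__main__":
    if importlib.util.find_spec("torch") is None:
        print("PyTorch is not installed.")
    else:
        import torch

        status = "OK" if meets_minimum(torch.__version__) else "older than 1.6.0"
        print("PyTorch", torch.__version__, status)
        print("CUDA available:", torch.cuda.is_available())
```

Running the script in the environment you plan to use for inference shows at a glance whether the PyTorch and GPU requirements are satisfied.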

- MEGN:

Our MEGN dataset, used for training the generator.

- Retrained StyleGAN2 Model

- Face Image Synthesis Model for Face Mask

- Face Image Synthesis Model for Sun Glasses

- Face Image Synthesis Model for Frame Glasses

Put the StyleGAN2 model and the pretrained model from [Unsup3d](https://github.com/elliottwu/unsup3d) in 'pretrained'.

mkdir data

modify 'output_dir' in hparams.py to the folder where you want to save synthesized images

put the face image synthesis model for face mask in 'output_dir'

modify 'kind' in hparams.py to 'mask'

modify 'w_file' in hparams.py to 'data/w_mask_test_1000.json'

modify 'interface_file' in hparams.py to 'data/mask_interfacegan_test_1000.json'

python test.py
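Before launching `test.py` for the face mask run, it can help to confirm that the files named in the steps above are in place. This is a hedged sketch rather than repository code: `missing_paths` and `REQUIRED_FOR_MASK` are hypothetical names, and the paths are simply those mentioned above, relative to the repository root.

```python
from pathlib import Path

# Paths taken from the steps above; adjust them if your layout differs.
REQUIRED_FOR_MASK = [
    "pretrained",
    "data/w_mask_test_1000.json",
    "data/mask_interfacegan_test_1000.json",
]


def missing_paths(paths):
    """Return the entries of `paths` that do not exist on disk, as strings."""
    return [str(p) for p in paths if not Path(p).exists()]


if __name__ == "__main__":
    missing = missing_paths(REQUIRED_FOR_MASK)
    if missing:
        print("Missing before running test.py:")
        for path in missing:
            print("  -", path)
    else:
        print("All expected paths found.")
```

The same check applies to the glasses runs after swapping in the corresponding `data/` file names.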

modify 'output_dir' in hparams.py to the folder where you want to save synthesized images

put the face image synthesis model for glasses in 'output_dir'

modify 'kind' in hparams.py to 'glasses'

modify 'is_normal' in hparams.py to 'False'

modify 'w_file' in hparams.py to 'data/w_glasses_test_1000.json'

modify 'interface_file' in hparams.py to 'data/glasses_interfacegan_test_1000.json'

python test.py

modify 'output_dir' in hparams.py to the folder where you want to save synthesized images

put the face image synthesis model for glasses in 'output_dir'

modify 'kind' in hparams.py to 'glasses'

modify 'is_normal' in hparams.py to 'True'

modify 'w_file' in hparams.py to 'data/w_glasses_test_1000.json'

modify 'interface_file' in hparams.py to 'data/glasses_interfacegan_test_1000.json'

python test.py
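The three runs above differ only in a few `hparams.py` values, which the following sketch collects side by side. It is an illustration, not the actual contents of `hparams.py`: the `PRESETS` dictionary, its key names, and the helper functions are hypothetical, and `is_normal` is assumed here to be a Python Boolean. `output_dir` is left out because it is user-specific.

```python
# Hypothetical summary of the settings described in the steps above.
PRESETS = {
    "mask": {
        "kind": "mask",
        "w_file": "data/w_mask_test_1000.json",
        "interface_file": "data/mask_interfacegan_test_1000.json",
    },
    "glasses_is_normal_false": {
        "kind": "glasses",
        "is_normal": False,
        "w_file": "data/w_glasses_test_1000.json",
        "interface_file": "data/glasses_interfacegan_test_1000.json",
    },
    "glasses_is_normal_true": {
        "kind": "glasses",
        "is_normal": True,
        "w_file": "data/w_glasses_test_1000.json",
        "interface_file": "data/glasses_interfacegan_test_1000.json",
    },
}


def describe(name):
    """Return 'key = value' lines for one preset, ready to copy by hand."""
    return [f"{key} = {value!r}" for key, value in PRESETS[name].items()]


def differing_keys(first, second):
    """Return the setting names whose values differ between two presets."""
    a, b = PRESETS[first], PRESETS[second]
    return {key for key in set(a) | set(b) if a.get(key) != b.get(key)}


if __name__ == "__main__":
    for line in describe("glasses_is_normal_false"):
        print(line)
```

Comparing the two glasses presets with `differing_keys` confirms that only `is_normal` changes between them, so switching runs requires editing a single value and placing the matching model in 'output_dir'.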

The code borrows from SEAN, Unsup3d, StyleGAN2 and StyleGAN2-Pytorch. Jointly developed with Prof. Shuang Song. Thank you to Jie Zhang for all the help I received.

If you use this code for your research, please cite the following work:

    @inproceedings{zhou2022sd,
      title={SD-GAN: Semantic Decomposition for Face Image Synthesis with Discrete Attribute},
      author={Zhou, Kangneng and Zhu, Xiaobin and Gao, Daiheng and Lee, Kai and Li, Xinjie and Yin, Xu-cheng},
      booktitle={Proceedings of the 30th ACM International Conference on Multimedia},
      pages={2513--2524},
      year={2022}
    }