This is the official GitHub repository for the paper "Generative Table Pre-training Empowers Models for Tabular Prediction" by Tianping Zhang, Shaowen Wang, Shuicheng Yan, Jian Li, and Qian Liu.

Table pre-training has recently attracted considerable research interest. However, how to use table pre-training to boost the performance of tabular prediction (e.g., housing price prediction) remains an open challenge. In this project, we present TapTap, the first attempt to leverage table pre-training to empower models for tabular prediction.

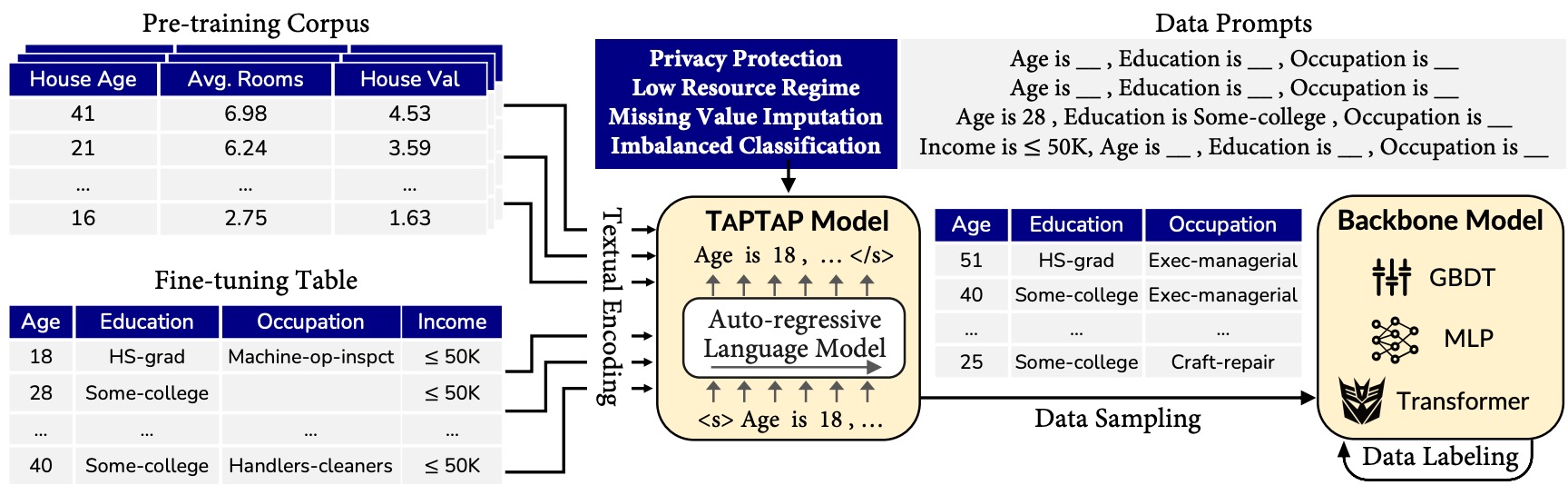

The TapTap model is first pre-trained on the pre-training corpus and then fine-tuned on the downstream table. During both pre-training and fine-tuning, tables are serialized into sequences via textual encoding, and TapTap is trained to predict them token by token. During inference, TapTap is prompted to sample values for “___” in data prompts, and the filled values build up a synthetic table. Finally, once the backbone model has labeled the synthetic table, the labeled synthetic table can be used to strengthen the backbone model. In theory, TapTap can be applied to any backbone model!
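The textual encoding and data prompts described above can be illustrated with a minimal sketch. It assumes a GReaT-style "column is value" template; the exact template is not stated here. The helper names `encode_row` and `make_prompt` and the example columns are hypothetical and are not part of the TapTap codebase.

```python
from typing import Mapping, Optional, Sequence

# Placeholder that marks a cell the model should sample during inference.
MASK = "___"


def encode_row(row: Mapping[str, object]) -> str:
    """Serialize one table row into a text sequence.

    Assumption: a "column is value" template joined by commas.
    """
    return ", ".join(f"{col} is {val}" for col, val in row.items())


def make_prompt(row: Mapping[str, Optional[object]], columns: Sequence[str]) -> str:
    """Build a data prompt: known cells keep their value, unknown cells become MASK."""
    parts = []
    for col in columns:
        val = row.get(col)  # missing keys and None both count as unknown
        parts.append(f"{col} is {MASK if val is None else val}")
    return ", ".join(parts)


if __name__ == "__main__":
    # Hypothetical housing row, used only for illustration.
    full_row = {"rooms": 3, "city": "Boston", "price": 420000}
    print(encode_row(full_row))

    partial_row = {"rooms": 3, "city": None}
    print(make_prompt(partial_row, ["rooms", "city", "price"]))
```

In this sketch, a fully observed row becomes a training sequence, while a partially observed row becomes a prompt in which every “___” marks a value for the model to sample.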

TapTap can synthesize high-quality tabular data for data augmentation, privacy protection, missing value imputation, and imbalanced classification. For more details, please refer to our paper.

The example demonstrates the overall process by which TapTap synthesizes high-quality data, including fine-tuning, sampling, and label generation.

We have uploaded our pre-training corpus to Hugging Face Datasets. You can download it from here and use this code to load all the datasets into a dictionary of pd.DataFrame objects.

If you find this repository useful in your research, please cite our paper:

@article{zhang2023generative,
  title={Generative Table Pre-training Empowers Models for Tabular Prediction},
  author={Zhang, Tianping and Wang, Shaowen and Yan, Shuicheng and Li, Jian and Liu, Qian},
  journal={arXiv preprint arXiv:2305.09696},
  year={2023}
}

- GReaT: TapTap is heavily inspired by the awesome work of GReaT. We thank the authors of GReaT for releasing their codebase.

- Hugging Face: We use the Hugging Face transformers framework to pre-train and fine-tune our models. We thank the Hugging Face team for their great work.

- DeepFloyd IF: We used DeepFloyd IF to generate the project logo.