Leveraging the Spatio-Temporal Structure of Image Sequences for Self-Supervised Monocular Depth-Pose Learning

Tao Han¹, Tingxiang Fan² and Jia Pan²

City University of Hong Kong¹, The University of Hong Kong²

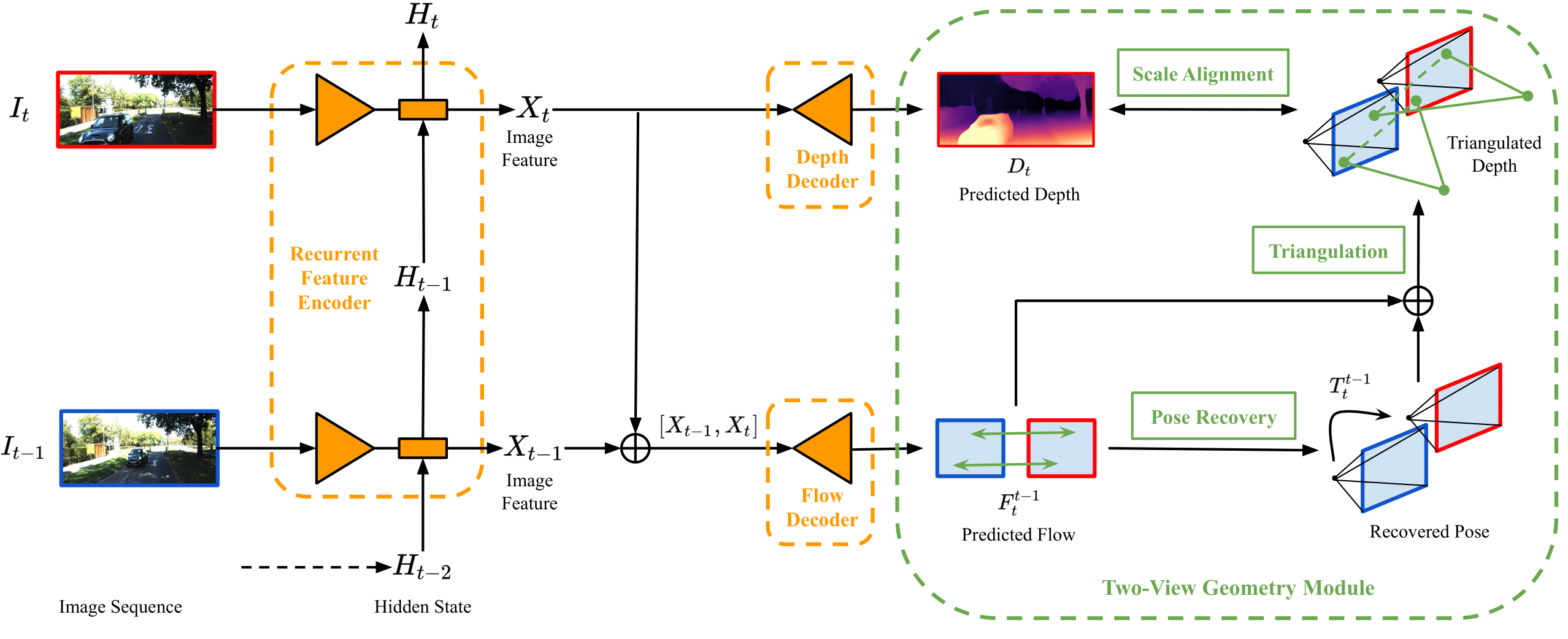

Most existing self-supervised depth-pose learning methods do not fully exploit the underlying spatio-temporal structure of video input, which limits both their accuracy and their generalization. In this work, we consider the issue from two aspects: i) the temporal connection between sequential images should be perceived and used by the models at inference time, and ii) the multiple-view geometry between sequential images is essential to the estimation task and need not be learned from scratch. To this end, we propose a novel system that leverages the spatio-temporal structure of image sequences. First, we design a unified recurrent network architecture that captures temporal information in video data and extracts reliable features for more accurate estimation. Second, we replace the widely used learn-from-scratch inference framework with a geometry-aided framework, in which the network models focus on depth and optical flow learning while a two-view geometry module handles pose recovery and depth-pose scale alignment, which boosts system generalization. Third, we introduce per-pixel reprojection loss into the geometry-aided framework to further improve performance under self-supervised training. Extensive experiments show that the proposed system not only reaches state-of-the-art performance on KITTI depth and pose estimation but also demonstrates an advantage in generalization ability.
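As a rough illustration of the third point, the sketch below assumes that "per-pixel reprojection loss" means taking, at each pixel, the minimum photometric error over several source frames warped into the target view. This is an assumption about the general technique, not the system's actual implementation; the function name `per_pixel_min_reprojection_loss`, the plain L1 error, and the NumPy array layout are all hypothetical choices made for the example.

```python
import numpy as np

def per_pixel_min_reprojection_loss(target, warped_sources):
    """Hypothetical sketch of a per-pixel minimum reprojection loss.

    target:         (H, W, 3) target image.
    warped_sources: list of (H, W, 3) source images already warped into
                    the target view (the warping itself is not shown).
    Returns the scalar loss and the (H, W) per-pixel error map.
    """
    # L1 photometric error for each source, averaged over colour channels.
    errors = np.stack(
        [np.abs(target - warped).mean(axis=-1) for warped in warped_sources]
    )  # shape: (num_sources, H, W)

    # At each pixel keep only the smallest error across sources, so a pixel
    # that is poorly explained by one source does not dominate the loss.
    per_pixel = errors.min(axis=0)
    return per_pixel.mean(), per_pixel
```

Taking the minimum rather than the average across sources is one common way to reduce the influence of pixels that are occluded in one of the neighbouring frames; a practical loss would typically use a richer photometric term than plain L1.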

Figure 1. System overview. All system modules work online during training and inference. Please refer to our paper for more details about our system.