This repo includes implementations of several Computer Vision algorithms using tf2+. The code is easy to read and follow. If you can read Chinese, I also have a teaching website for studying AI models.

All toy implementations are organised as follows:

- CNN

$ git clone https://github.com/MorvanZhou/Computer-Vision

$ cd Computer-Vision

$ pip install -r requirements.txt

Convolution mechanism and feature map

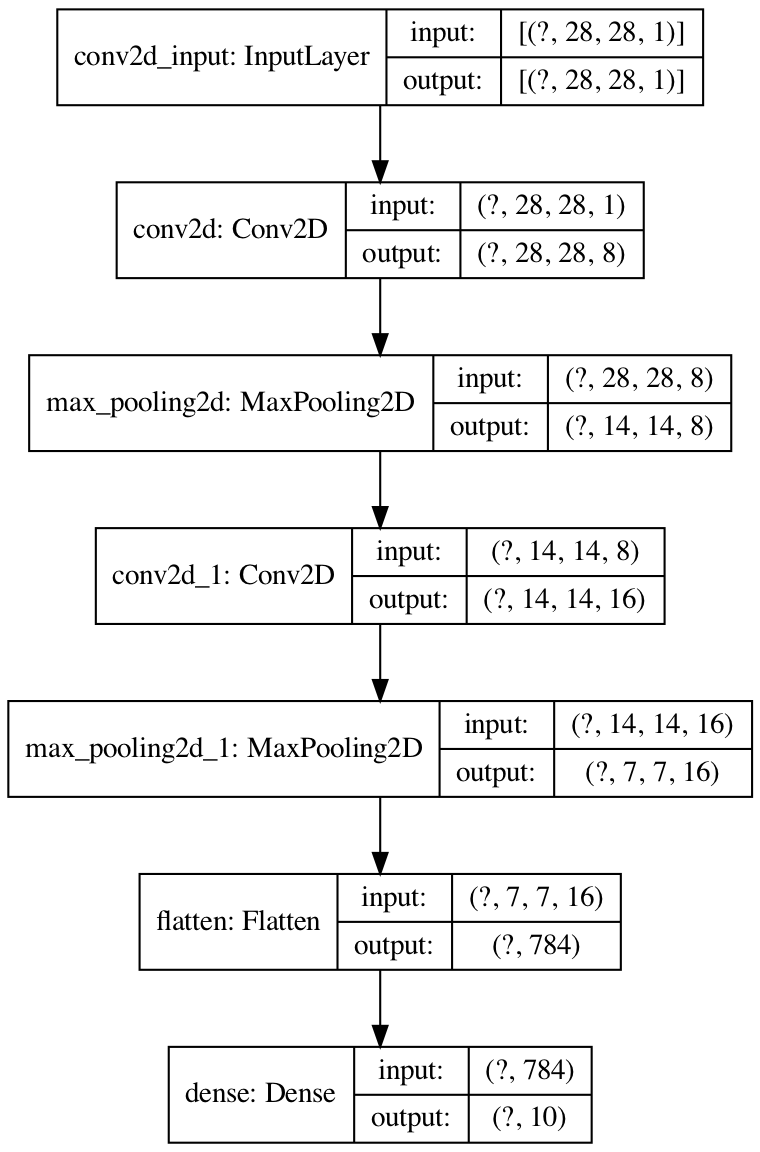
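As a rough illustration of how a convolution produces a feature map, here is a minimal NumPy sketch. It is not the repo's tf2 code; the function name `conv2d_valid`, the toy image, and the edge kernel are all assumptions made for this example.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    feature_map = np.zeros((out_h, out_w), dtype=np.float64)
    for i in range(out_h):
        for j in range(out_w):
            # Each output value is the weighted sum of one local patch.
            feature_map[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return feature_map


# A 5x5 toy image: columns 0-1 are dark (0), columns 2-4 are bright (1).
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=np.float64)
# A horizontal-difference kernel that responds to vertical edges.
kernel = np.array([[-1.0, 1.0]])

feature_map = conv2d_valid(image, kernel)
print(feature_map.shape)  # (5, 4)
print(feature_map[0])     # [0. 1. 0. 0.]
```

The feature map is non-zero only where the kernel's pattern (a dark-to-bright step) appears in the image, which is what a learned conv filter does with learned patterns.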

Gradient-Based Learning Applied to Document Recognition

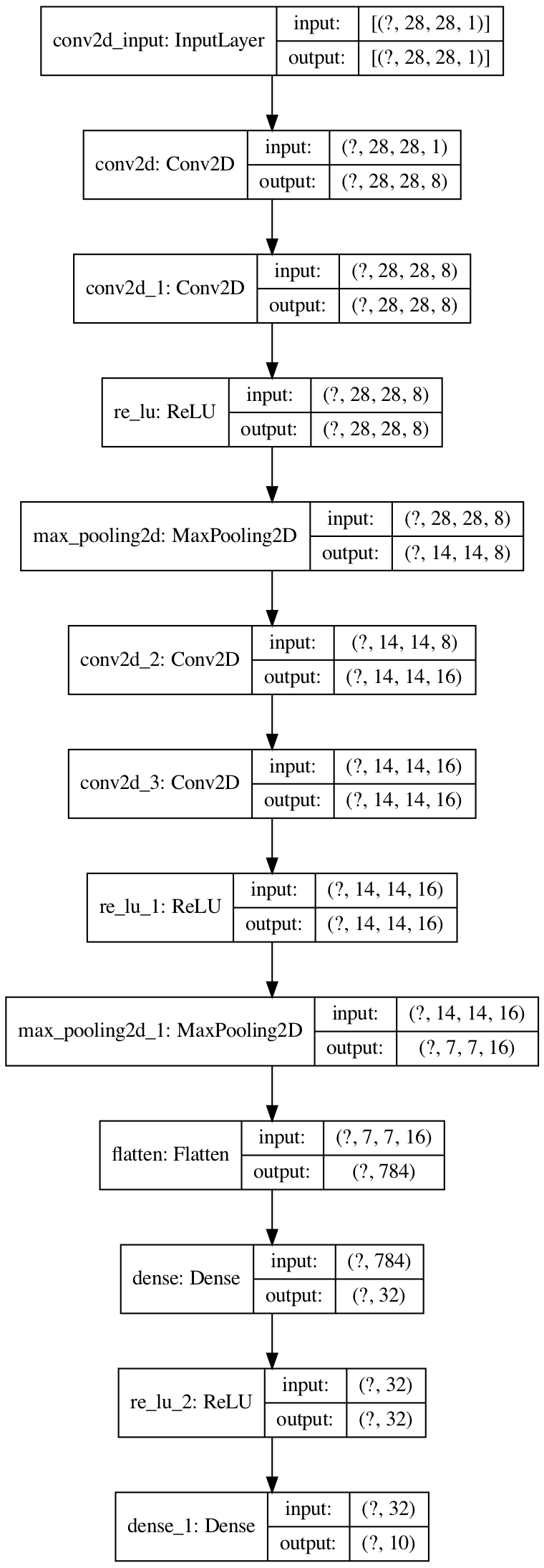

Very Deep Convolutional Networks for Large-Scale Image Recognition

Deep stacked CNN.

Going Deeper with Convolutions

Multiple kernel sizes capture local information at different scales.

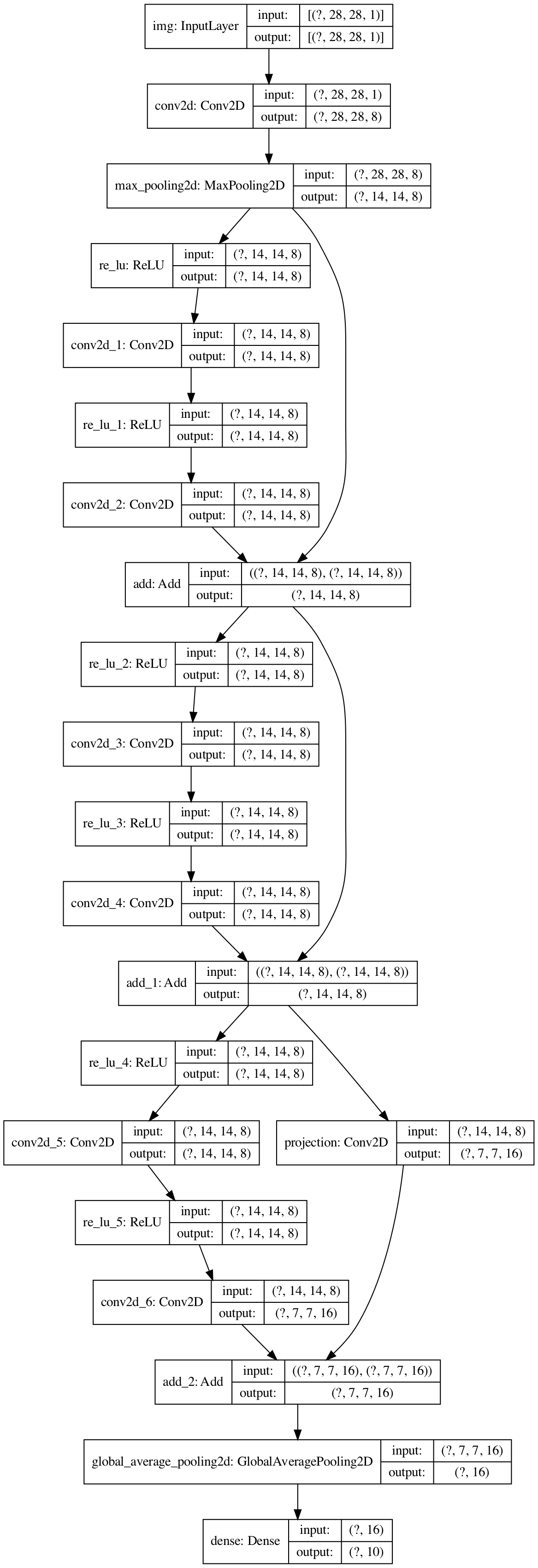
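The multi-kernel idea can be sketched as parallel branches whose outputs are joined along the channel axis. The NumPy code below is a simplified assumption: it uses fixed averaging kernels in place of learned filters, a single input channel, and omits the 1x1 reductions and pooling branch of the real Inception module.

```python
import numpy as np


def conv2d_same(image, kernel):
    """Stride-1 convolution with zero padding so output size equals input size (odd kernels only)."""
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.zeros(image.shape, dtype=np.float64)
    for i in range(image.shape[0]):
        for j in range(image.shape[1]):
            out[i, j] = np.sum(padded[i:i + kh, j:j + kw] * kernel)
    return out


image = np.arange(36, dtype=np.float64).reshape(6, 6)

# One branch per kernel size; averaging kernels stand in for learned filters.
branches = [conv2d_same(image, np.ones((k, k)) / (k * k)) for k in (1, 3, 5)]

# All branches keep the spatial size, so they can be stacked along channels.
multi_scale = np.stack(branches, axis=-1)
print(multi_scale.shape)  # (6, 6, 3)
```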

Deep Residual Learning for Image Recognition

Adds residual connections for better gradient flow.

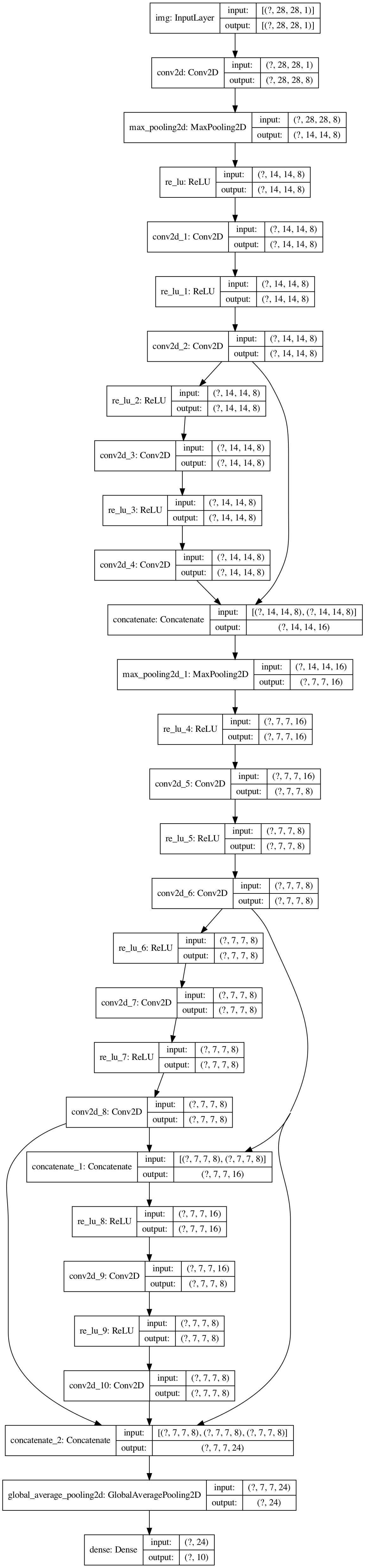
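One way to see why a residual connection helps gradients: for `y = x + f(x)` the derivative is `1 + f'(x)`, so it stays near 1 even when `f'(x)` is tiny. The toy below assumes scalar linear layers `f(x) = w * x` purely for illustration; real networks are non-linear and multi-dimensional.

```python
def plain_gradient(weights):
    """d(output)/d(input) for a chain of layers y = w * x."""
    grad = 1.0
    for w in weights:
        grad *= w
    return grad


def residual_gradient(weights):
    """d(output)/d(input) for a chain of layers y = x + w * x."""
    grad = 1.0
    for w in weights:
        grad *= 1.0 + w  # the identity path contributes the constant 1
    return grad


weights = [0.1] * 10  # ten layers with small weights
plain = plain_gradient(weights)
residual = residual_gradient(weights)
print(plain < 1e-9, residual > 1.0)  # True True
```

Without the skip path the gradient shrinks geometrically with depth. With it, the gradient always has a direct route back to earlier layers.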

Densely Connected Convolutional Networks

Compared with ResNet, it uses fewer filters per conv layer, and each layer sees the outputs of more preceding layers.

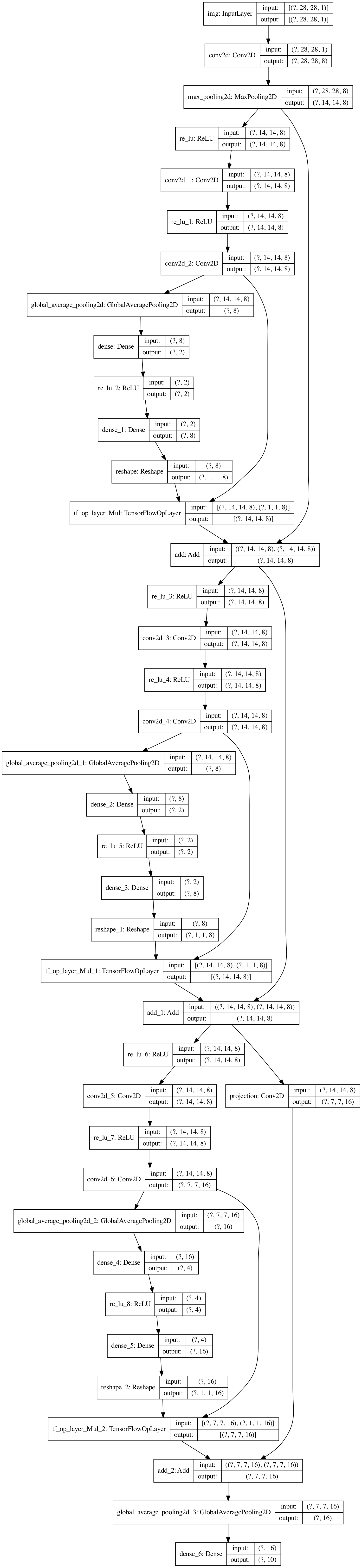
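The "fewer filters, more inputs" trade-off can be traced with simple channel bookkeeping. In this sketch the helper name and the example numbers (64 input channels, growth rate 32, 4 layers) are assumptions chosen for illustration.

```python
def dense_block_channels(in_channels, growth_rate, num_layers):
    """Return the input channels seen by each layer and the block's output channels."""
    inputs_seen = []
    channels = in_channels
    for _ in range(num_layers):
        inputs_seen.append(channels)
        # Each layer adds only `growth_rate` new feature maps,
        # which are concatenated onto everything produced so far.
        channels += growth_rate
    return inputs_seen, channels


inputs_seen, out_channels = dense_block_channels(64, 32, 4)
print(inputs_seen)   # [64, 96, 128, 160]
print(out_channels)  # 192
```

Every layer produces just 32 filters, yet the last layer reads 160 channels because it receives the concatenated outputs of all earlier layers.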

Squeeze-and-Excitation Networks

SE is a module that learns to scale each feature map. It can be plugged into many CNN blocks. A larger reduction_ratio reduces the parameter count of the FC layers with a limited drop in accuracy.

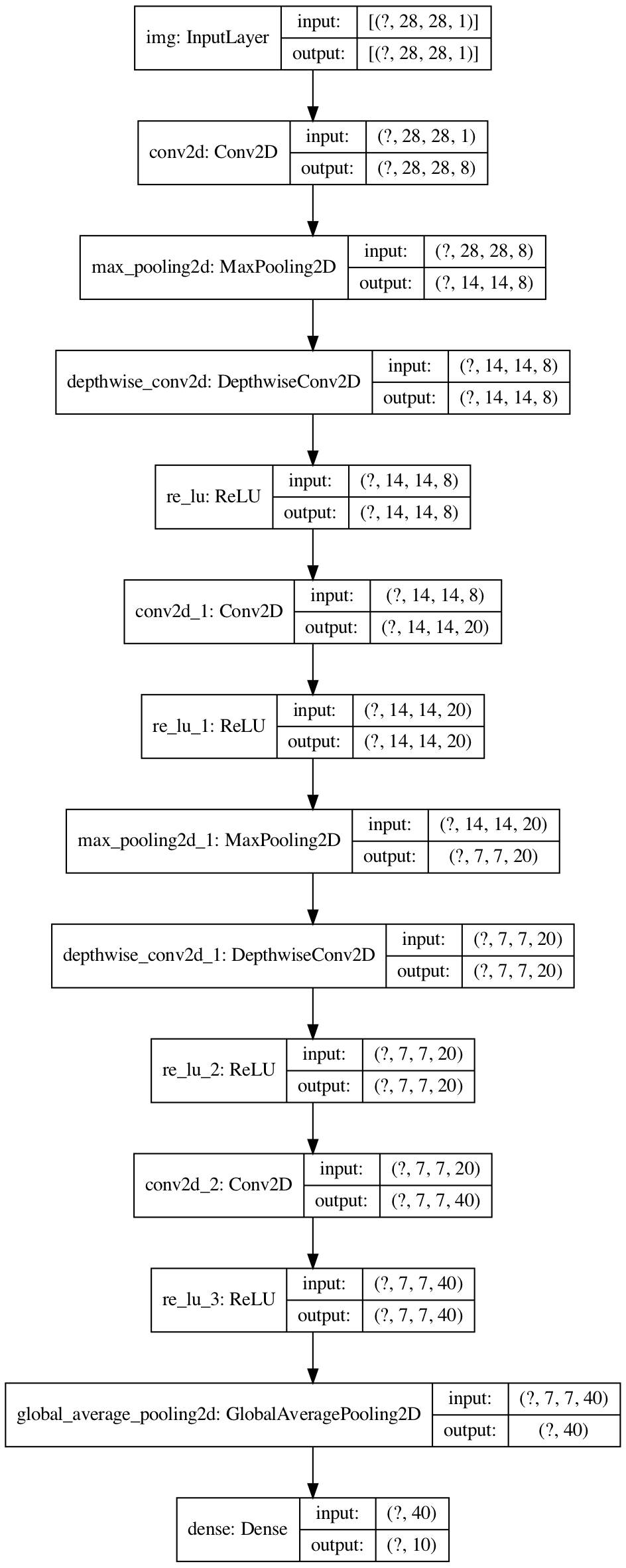
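A minimal NumPy sketch of the squeeze, excite, and scale steps, plus the effect of `reduction_ratio` on the FC parameter count. Random weights stand in for learned ones, biases are ignored, and the helper names are assumptions for this example rather than the repo's API.

```python
import numpy as np


def se_block(x, w1, w2):
    """x: (H, W, C); w1: (C, C // r); w2: (C // r, C)."""
    squeezed = x.mean(axis=(0, 1))                # squeeze: global average pool -> (C,)
    hidden = np.maximum(squeezed @ w1, 0.0)       # FC + ReLU -> (C // r,)
    scale = 1.0 / (1.0 + np.exp(-(hidden @ w2)))  # FC + sigmoid -> (C,), values in (0, 1)
    return x * scale                              # rescale each feature map


def se_fc_params(channels, reduction_ratio):
    """Weights in the two FC layers, ignoring biases."""
    hidden = channels // reduction_ratio
    return channels * hidden + hidden * channels


rng = np.random.default_rng(0)
channels, ratio = 64, 16
x = rng.normal(size=(4, 4, channels))
w1 = rng.normal(size=(channels, channels // ratio))
w2 = rng.normal(size=(channels // ratio, channels))

out = se_block(x, w1, w2)
print(out.shape)                  # (4, 4, 64)
print(se_fc_params(channels, 4))  # 2048
print(se_fc_params(channels, 16)) # 512
```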

MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications

Decomposes a classical conv into two operations: depthwise (dw) and pointwise (pw). A small but effective CNN optimized for mobile (CPU).
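To make the saving concrete, the weight counts of a standard conv and its dw+pw decomposition can be compared directly. The layer sizes below (3x3 kernel, 64 to 128 channels) are assumed example values, and biases are ignored.

```python
def standard_conv_params(kernel, in_channels, out_channels):
    # Every output channel has its own kernel over all input channels.
    return kernel * kernel * in_channels * out_channels


def separable_conv_params(kernel, in_channels, out_channels):
    depthwise = kernel * kernel * in_channels  # one spatial kernel per input channel
    pointwise = in_channels * out_channels     # 1x1 conv mixes the channels
    return depthwise + pointwise


standard = standard_conv_params(3, 64, 128)
separable = separable_conv_params(3, 64, 128)
print(standard)   # 73728
print(separable)  # 8768
print(round(standard / separable, 2))  # 8.41
```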

MobileNetV2: Inverted Residuals and Linear Bottlenecks

MobileNet v2 is v1 with residual blocks and rearranged layers (residual+pw+dw+pw):

- mobilenet v1: dw > pw

- mobilenet v2: pw > dw > pw, which lets dw see more feature maps

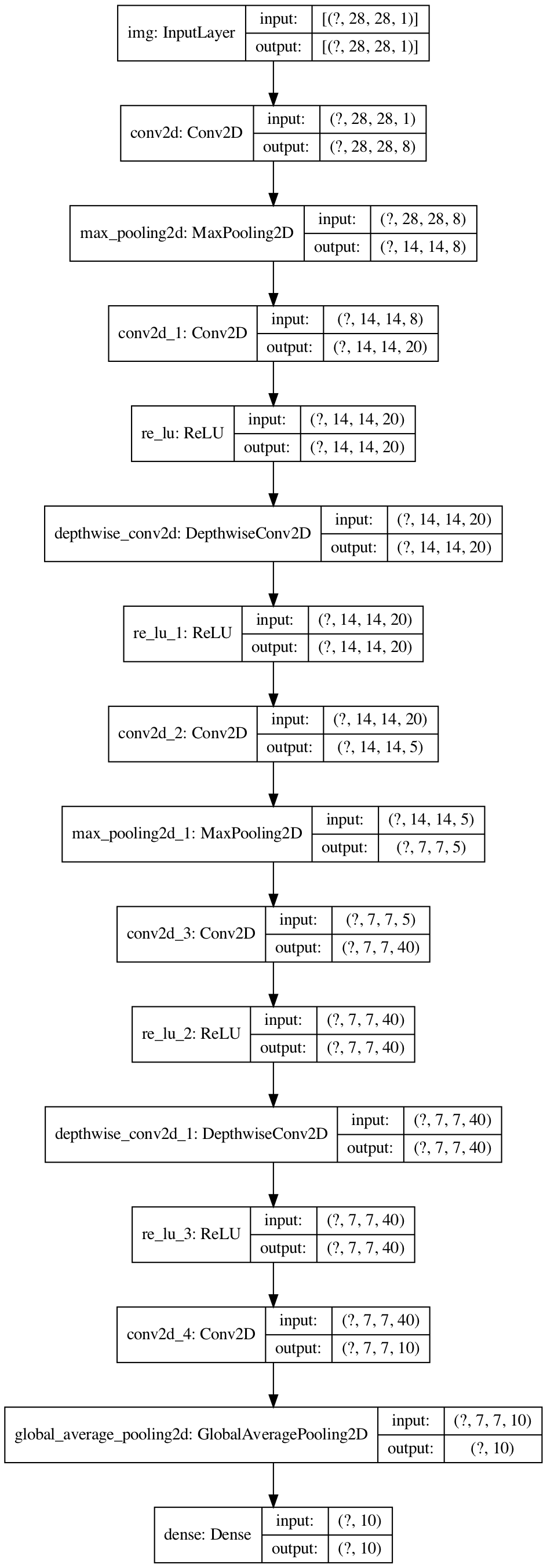

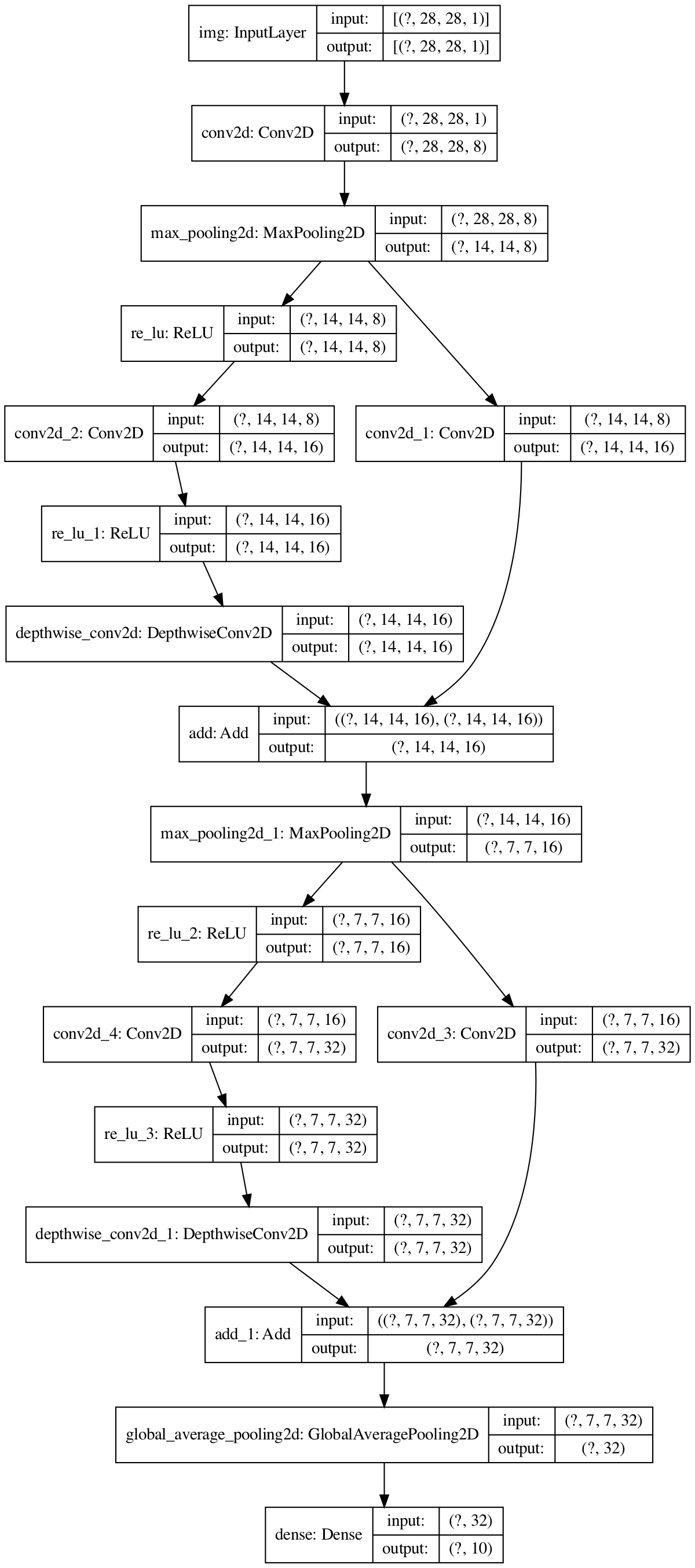
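The difference in layer order can be traced by following the channel counts through each block. This sketch is framework-agnostic bookkeeping, not the repo's implementation. The helper names are hypothetical, and the expansion factor of 6 is an assumed default.

```python
def v1_block(in_channels, out_channels):
    """MobileNet v1 order: dw > pw. Each entry is (op, channels_in, channels_out)."""
    return [
        ("dw 3x3", in_channels, in_channels),  # depthwise keeps the channel count
        ("pw 1x1", in_channels, out_channels),
    ]


def v2_block(in_channels, out_channels, expansion=6):
    """MobileNet v2 order: pw (expand) > dw > pw (project)."""
    hidden = in_channels * expansion
    return [
        ("pw 1x1 expand", in_channels, hidden),
        ("dw 3x3", hidden, hidden),            # dw now runs on the expanded maps
        ("pw 1x1 project", hidden, out_channels),
    ]


v1 = v1_block(32, 32)
v2 = v2_block(32, 32)
dw_channels_v1 = v1[0][1]
dw_channels_v2 = v2[1][1]
print(dw_channels_v1, dw_channels_v2)  # 32 192
```

With the same 32-channel input, the depthwise conv in v2 operates on six times as many feature maps as in v1.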

Xception: Deep Learning with Depthwise Separable Convolutions

Just like MobileNetV2 without the last pw (residual+pw+dw).

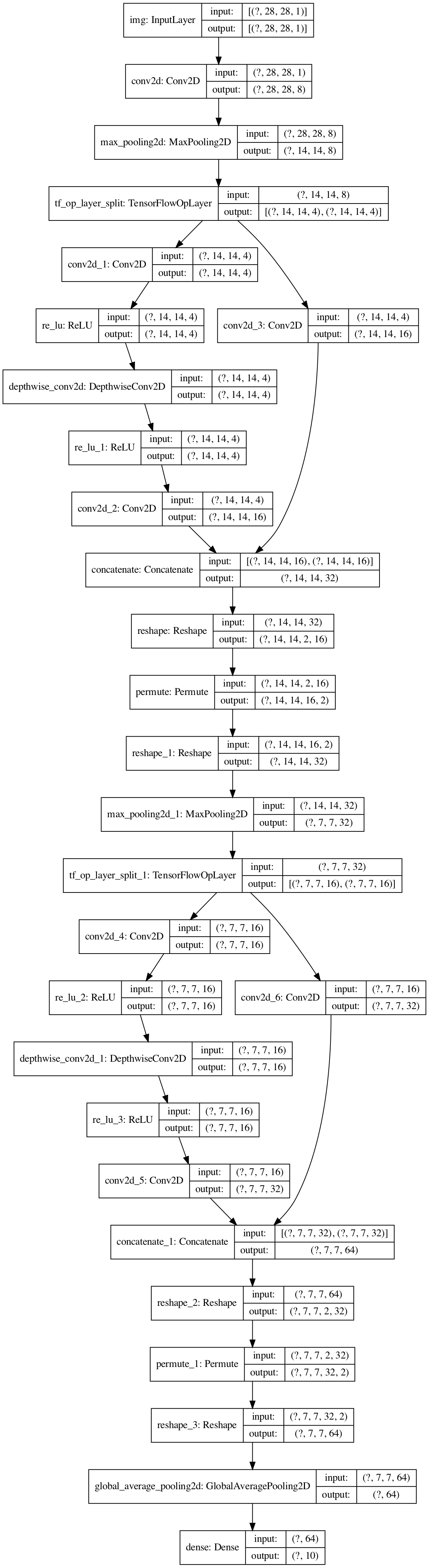

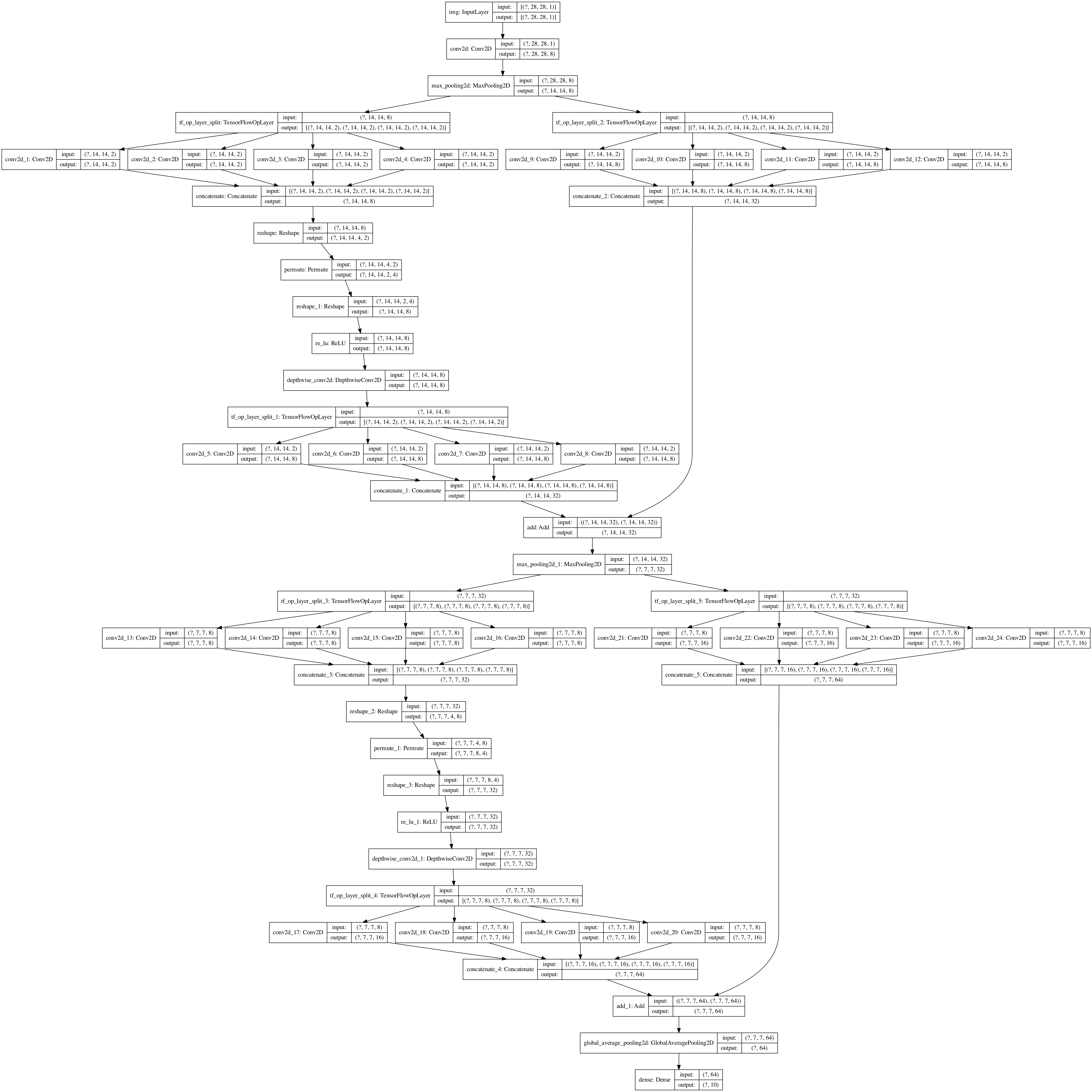

ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices

Shuffles the output of the 1x1 conv and uses group conv to reduce connections and speed up computation. But MobileNet is better in this case, which may be because group conv cuts off some communication between feature maps.
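The shuffle itself is usually written as a reshape, transpose, reshape on the channel axis. Below is a minimal NumPy sketch assuming a channels-last `(H, W, C)` layout; the function name is chosen for this example.

```python
import numpy as np


def channel_shuffle(x, groups):
    """Interleave channels so the next group conv sees channels from every group."""
    h, w, c = x.shape
    if c % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    x = x.reshape(h, w, groups, c // groups)  # split channels into groups
    x = x.transpose(0, 1, 3, 2)               # swap the group and per-group axes
    return x.reshape(h, w, c)                 # flatten back to C channels


# Each channel holds its own index so the new order is easy to read.
x = np.arange(6).reshape(1, 1, 6)
shuffled = channel_shuffle(x, groups=2)
print(shuffled[0, 0])  # [0 3 1 4 2 5]
```

Before the shuffle, group 0 holds channels 0-2 and group 1 holds channels 3-5. Afterwards each group of the following conv receives channels from both.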

ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design

Further reduces parameters by replacing group conv with split+concat, and performs the shuffle at the end of the block, which speeds up calculation. But MobileNet is better in this case, which may be because group conv cuts off some communication between feature maps.
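The split, concat, and end-of-block shuffle can be sketched as follows. This is a simplified NumPy illustration assuming a channels-last layout and a stride-1 unit. `branch_fn` is a hypothetical placeholder for the conv branch, not a function from the repo.

```python
import numpy as np


def shuffle_v2_unit(x, branch_fn):
    """Split channels in half, transform one half, concat, then shuffle."""
    left, right = np.split(x, 2, axis=-1)  # channel split replaces group conv
    right = branch_fn(right)               # only this half goes through the convs
    out = np.concatenate([left, right], axis=-1)
    h, w, c = out.shape
    # Shuffle with 2 groups at the end of the block so the halves mix.
    return out.reshape(h, w, 2, c // 2).transpose(0, 1, 3, 2).reshape(h, w, c)


# Each channel holds its own index; the stand-in branch multiplies by 10.
x = np.arange(4).reshape(1, 1, 4)
unit_out = shuffle_v2_unit(x, lambda t: t * 10)
print(unit_out[0, 0])  # [ 0 20  1 30]
```

The untouched half (0, 1) and the transformed half (20, 30) end up interleaved, so the next unit's split hands each branch a mix of both.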