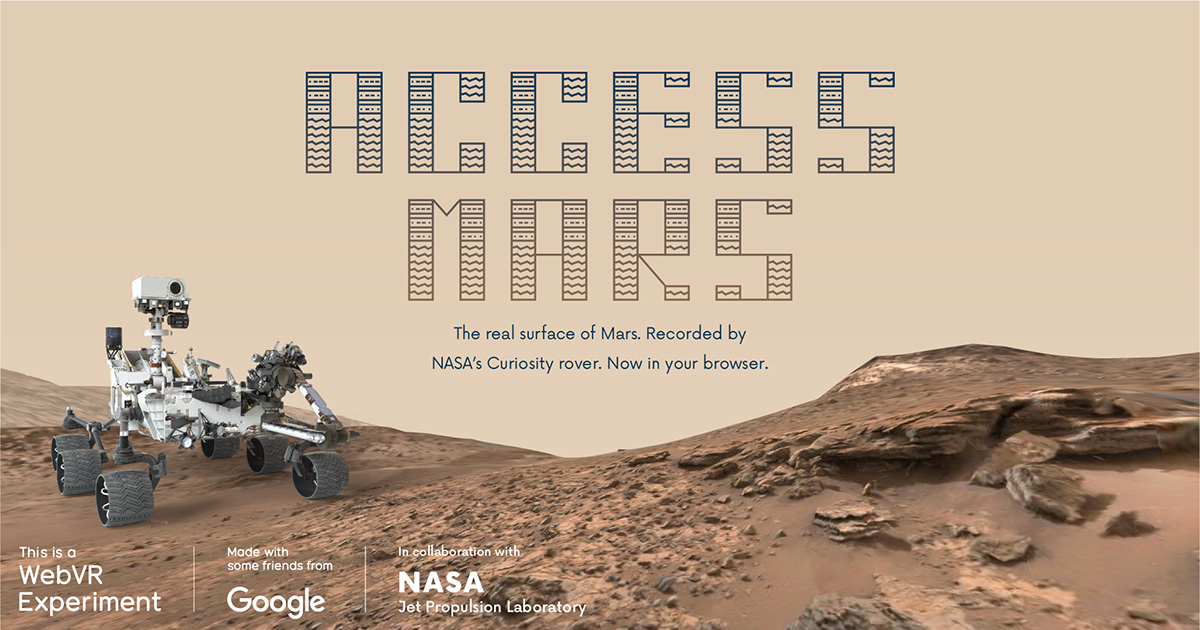

Access Mars is a collaboration between NASA, Jet Propulsion Lab, and Google Creative Lab to bring the real surface of Mars to your browser. It is an open source project released as a WebVR Experiment.

This is an experiment, not an official Google product. We will do our best to support and maintain this experiment but your mileage may vary.

The Curiosity rover has been on the surface of Mars for over five years. In that time, it has sent over 200,000 photos back to Earth. Using these photos, engineers at JPL have reconstructed the 3D surface of Mars for their scientists to use as a mission planning tool – surveying the terrain and identifying geologically significant areas for Curiosity to investigate further. And now you can explore the same Martian surface in your browser in an immersive WebVR experience.

Access Mars features four important mission locations: the Landing Site, Pahrump Hills, Marias Pass, and Murray Buttes. Additionally, users can visit Curiosity's "Current Location" for a look at where the rover has been in the past two to four weeks. And while you explore it all, JPL scientist Katie Stack Morgan will be your guide, teaching you about key mission details and highlighting points of interest.

Access Mars supports Desktop 360, Mobile 360, Cardboard, Daydream, GearVR, Oculus, and Vive.

With their primary interaction mode (mouse, finger, Cardboard button, etc.), users can:

- learn about the Curiosity mission by clicking points of interest and highlighted rover parts.

- move from point to point by clicking on the terrain.

- travel to different mission sites by clicking the map icon.

Users with 6DOF headsets can also explore on foot within their room-scale environment.

Access Mars is built with A-Frame, Three.js, and glTF with Draco mesh compression.

Our fork of Three.js implements a progressive JPEG decoding scheme originally outlined in this paper by Stefan Wagner. We load low-resolution textures initially, then high-resolution textures are loaded in the background as the user explores. The textures closest to the user are updated first.
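The "closest textures first" ordering can be sketched as a simple distance sort. This is only an illustration, not the code used in the project: the `sortTilesByDistance` helper and the tile shape (`id` plus a `center` point) are assumptions made for the example.

```javascript
// Hypothetical helper: order terrain tiles so that the ones nearest the user
// are first in line for a high-resolution texture.
// Assumed tile shape: { id: string, center: { x, y, z } }.
function sortTilesByDistance(tiles, userPosition) {
  // Squared distance is enough for ordering and avoids a square root.
  const distanceSquared = (point) => {
    const dx = point.x - userPosition.x;
    const dy = point.y - userPosition.y;
    const dz = point.z - userPosition.z;
    return dx * dx + dy * dy + dz * dz;
  };
  // Copy before sorting so the caller's array is left untouched.
  return [...tiles].sort(
    (a, b) => distanceSquared(a.center) - distanceSquared(b.center)
  );
}
```

Re-running the sort whenever the user moves to a new point would keep the load queue aligned with what they are currently looking at.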

These high-resolution textures are loaded using the progressive decoding scheme, which allows us to load a large texture without disrupting the render thread. An empty texture of the correct size is allocated before the rendering process begins. This avoids the usual stutter experienced when allocating a new texture at runtime. The desired JPEG is then decoded one 32x32 block of pixels at a time, and each decoded block is sent to the texture we allocated earlier. Using the `texSubImage2D` function, only the relevant 32x32 portion of the texture is updated at once. The decoding itself is done in a Web Worker, which uses an Emscripten build of libjpeg to decode the JPEG manually.
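The allocate-once, update-in-blocks pattern described above might look roughly like the following. This is a minimal sketch under stated assumptions, not the code from our Three.js fork: the helper names (`blockRects`, `allocateEmptyTexture`, `uploadBlock`) are hypothetical, the decoded pixels are assumed to arrive as RGBA bytes, and the worker-side JPEG decoding is left out entirely. The `texImage2D` and `texSubImage2D` calls use the standard WebGL 1 signatures.

```javascript
// Hypothetical helper: list the block rectangles that cover a texture.
// Blocks on the right and bottom edges are clipped to the texture size.
function* blockRects(width, height, blockSize = 32) {
  for (let y = 0; y < height; y += blockSize) {
    for (let x = 0; x < width; x += blockSize) {
      yield {
        x,
        y,
        width: Math.min(blockSize, width - x),
        height: Math.min(blockSize, height - y),
      };
    }
  }
}

// Allocate an empty RGBA texture up front, before rendering starts.
// Passing null as the pixel source reserves storage without uploading data.
function allocateEmptyTexture(gl, width, height) {
  const texture = gl.createTexture();
  gl.bindTexture(gl.TEXTURE_2D, texture);
  gl.texImage2D(
    gl.TEXTURE_2D, 0, gl.RGBA, width, height, 0,
    gl.RGBA, gl.UNSIGNED_BYTE, null
  );
  return texture;
}

// Copy one decoded block into the already-allocated texture.
// `pixels` is assumed to be a Uint8Array of rect.width * rect.height * 4 bytes.
function uploadBlock(gl, texture, rect, pixels) {
  gl.bindTexture(gl.TEXTURE_2D, texture);
  gl.texSubImage2D(
    gl.TEXTURE_2D, 0, rect.x, rect.y, rect.width, rect.height,
    gl.RGBA, gl.UNSIGNED_BYTE, pixels
  );
}
```

In a setup like this, the main thread would call `uploadBlock` each time the worker posts a decoded block, so every frame pays for at most a few small uploads instead of one large one.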

Install node and browserify if you haven't already, then run `npm install`.

Running `npm run start` will spin up a local Budo server for development. The URL will be given in the terminal.

This is not an official Google product, but an experiment that was a collaborative effort by friends from NASA JPL Ops Lab and Creative Lab.