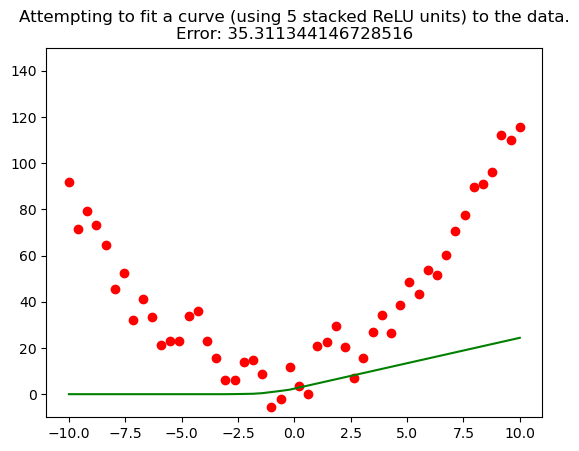

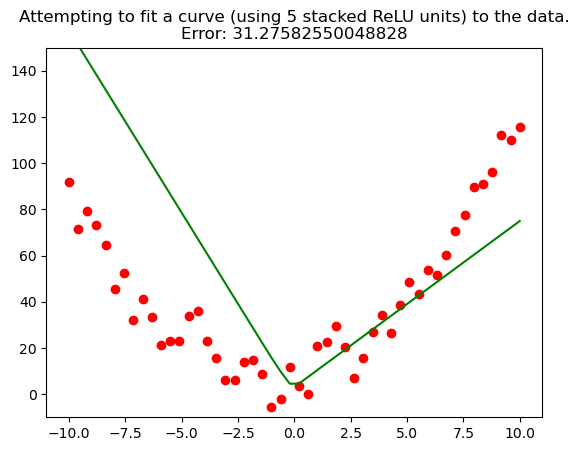

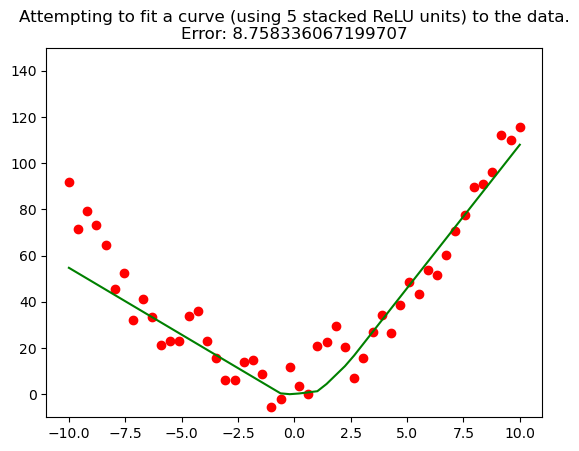

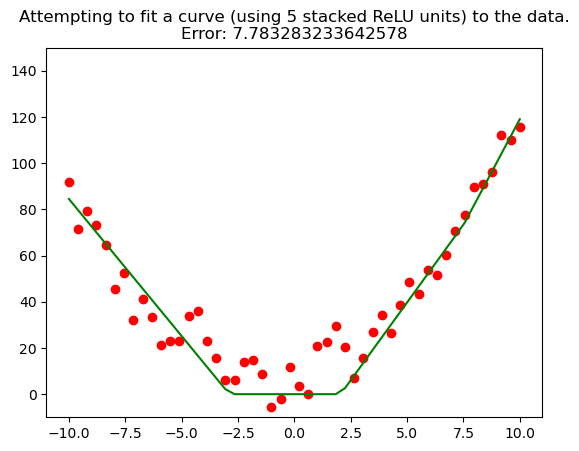

This repository contains a Jupyter Notebook that demonstrates how continuous functions can be approximated with Rectified Linear Units (ReLU).
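For reference, ReLU is the standard piecewise-linear activation below. A shifted copy $\mathrm{ReLU}(x - a)$ is zero up to the "hinge" $a$ and grows with slope 1 after it. This is the property that lets weighted sums of ReLUs trace out piecewise-linear curves.

$$
\mathrm{ReLU}(x) = \max(0, x), \qquad
\mathrm{ReLU}(x - a) = \begin{cases} 0, & x \le a \\ x - a, & x > a \end{cases}
$$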

The notebook explores how ReLU activations, which are commonly used in neural networks, can be used to approximate continuous mathematical functions.
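The following is a minimal pure-Python sketch of one standard construction. It is not necessarily the method used in the notebook. Pick knots $x_0 < x_1 < \dots < x_n$ and approximate $f$ by

$$
g(x) = f(x_0) + \sum_i c_i \,\mathrm{ReLU}(x - x_i),
$$

where each $c_i$ is the change in slope at knot $x_i$. On $[x_0, x_n]$, $g$ is exactly the piecewise-linear interpolant of $f$, so its error shrinks as the knots get denser. The function names `fit_relu_interpolant` and `relu_approx` are hypothetical and introduced here only for illustration.

```python
import math


def relu(x: float) -> float:
    """Rectified Linear Unit: max(0, x)."""
    return max(0.0, x)


# Hypothetical helper (illustration only): builds the ReLU-sum coefficients.
def fit_relu_interpolant(f, knots):
    """Return (bias, coeffs) so that
    g(x) = bias + sum_i coeffs[i] * relu(x - knots[i])
    equals f at every knot and is linear between consecutive knots.
    Assumes knots are strictly increasing.
    """
    ys = [f(k) for k in knots]
    # Slope of each linear segment between consecutive knots.
    slopes = [
        (ys[i + 1] - ys[i]) / (knots[i + 1] - knots[i])
        for i in range(len(knots) - 1)
    ]
    coeffs = []
    prev_slope = 0.0
    for s in slopes:
        # Each ReLU contributes the change in slope at its hinge.
        coeffs.append(s - prev_slope)
        prev_slope = s
    # g starts at f(x0); no hinge is needed at the last knot.
    return ys[0], coeffs


# Hypothetical helper (illustration only): evaluates the ReLU sum.
def relu_approx(x, bias, coeffs, knots):
    # zip stops at len(coeffs), i.e. it uses all knots except the last.
    return bias + sum(c * relu(x - k) for c, k in zip(coeffs, knots))


# Usage: approximate sin on [0, pi] with 11 evenly spaced knots (10 ReLUs).
knots = [math.pi * i / 10 for i in range(11)]
bias, coeffs = fit_relu_interpolant(math.sin, knots)
grid = [math.pi * i / 1000 for i in range(1001)]
max_err = max(abs(relu_approx(x, bias, coeffs, knots) - math.sin(x)) for x in grid)
print(f"max |sin(x) - g(x)| on [0, pi]: {max_err:.4f}")
```

Doubling the number of knots roughly quarters the maximum error for a smooth target, because linear interpolation error scales with the square of the knot spacing.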

It includes detailed visualizations and code examples to illustrate the concepts.

MusaChowdhury/Approximating-Continuous-Function-With-ReLU: ReLU's Function Approximation (Jupyter Notebook, MIT license)