This repository is the official implementation of "Environment-Aware Dynamic Graph Learning for Out-of-Distribution Generalization (EAGLE)" accepted by the 37th Conference on Neural Information Processing Systems (NeurIPS 2023).

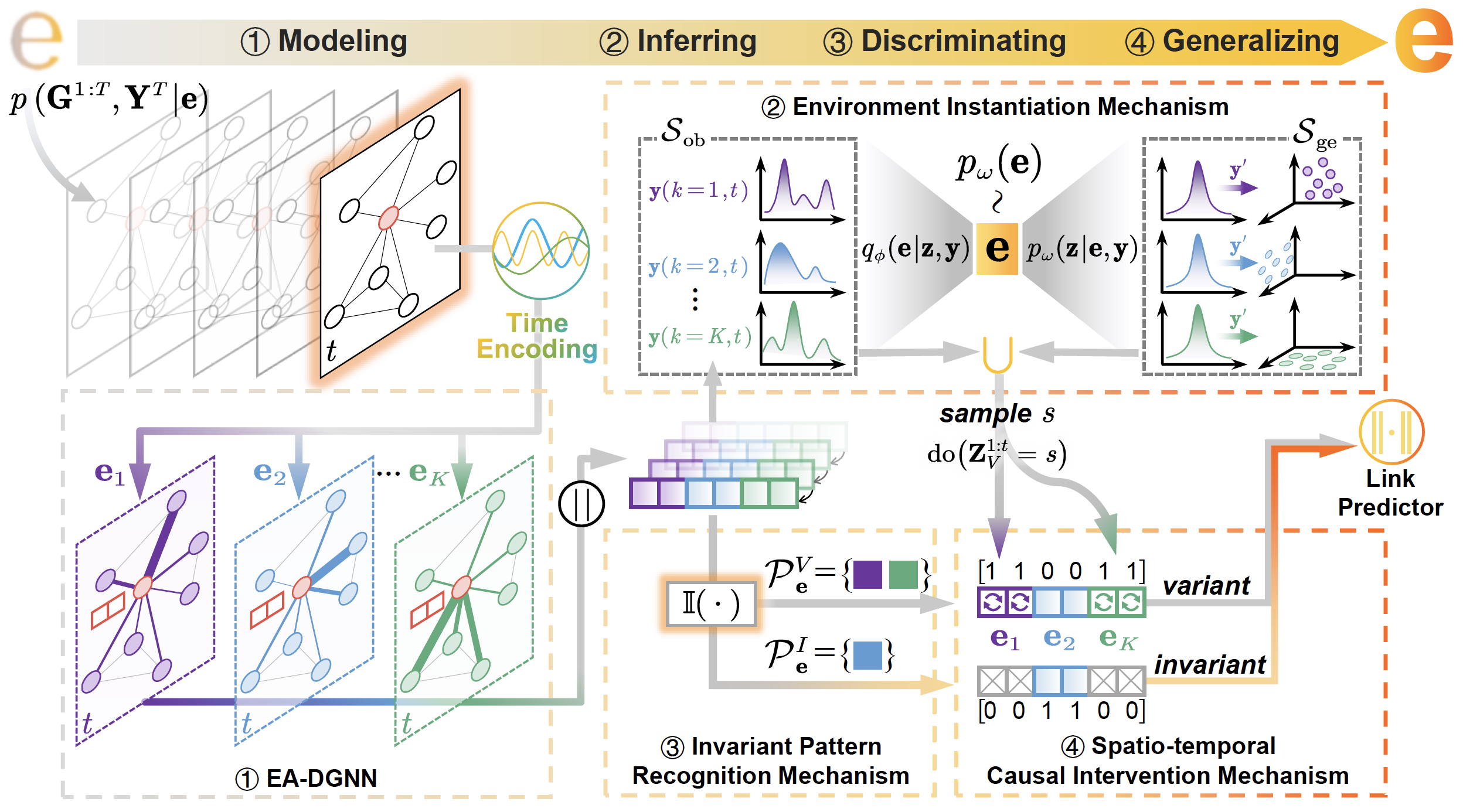

Dynamic graph neural networks (DGNNs) are increasingly pervasive in exploiting spatio-temporal patterns on dynamic graphs. However, existing works fail to generalize under distribution shifts, which are common in real-world scenarios. As the generation of dynamic graphs is heavily influenced by latent environments, investigating their impacts on the out-of-distribution (OOD) generalization is critical. However, it remains unexplored with the following two challenges: 1) How to properly model and infer the complex environments on dynamic graphs with distribution shifts? 2) How to discover invariant patterns given inferred spatio-temporal environments? To solve these challenges, we propose a novel Environment-Aware dynamic Graph LEarning (EAGLE) framework for OOD generalization by modeling complex coupled environments and exploiting spatio-temporal invariant patterns. Specifically, we first design the environment-aware EA-DGNN to model environments by multi-channel environments disentangling. Then, we propose an environment instantiation mechanism for environment diversification with inferred distributions. Finally, we discriminate spatio-temporal invariant patterns for out-of-distribution prediction by the invariant pattern recognition mechanism and perform fine-grained causal interventions node-wisely with a mixture of instantiated environment samples. Experiments on real-world and synthetic dynamic graph datasets demonstrate the superiority of our method against state-of-the-art baselines under distribution shifts. To the best of our knowledge, we are the first to study OOD generalization on dynamic graphs from the environment learning perspective.

Main package requirements:

- CUDA == 10.1
- Python == 3.8.12
- PyTorch == 1.9.1
- PyTorch-Geometric == 2.0.1

To install all required packages, run the following command in the root directory of the repository:

pip install -r requirements.txt
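After installation, it can help to confirm that the environment matches the versions listed above. The following is a minimal sketch, not part of the repository: the pip distribution names `torch` and `torch-geometric` and the helper names `installed_version` and `check_versions` are assumptions made for illustration.

```python
import sys
from importlib import metadata

# Versions taken from the requirements listed in this README.
# The pip distribution names used as keys are assumptions.
EXPECTED = {"torch": "1.9.1", "torch-geometric": "2.0.1"}
EXPECTED_PYTHON = (3, 8)


def installed_version(dist_name):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def check_versions(expected, lookup=installed_version):
    """Return a list of mismatch messages (empty if everything matches)."""
    problems = []
    for name, wanted in expected.items():
        found = lookup(name)
        if found is None:
            problems.append(f"{name}: not installed (expected {wanted})")
        # Ignore local build tags such as "+cu101" when comparing.
        elif found.split("+")[0] != wanted:
            problems.append(f"{name}: found {found}, expected {wanted}")
    return problems


if __name__ == "__main__":
    if sys.version_info[:2] != EXPECTED_PYTHON:
        print(f"Python: found {sys.version_info.major}.{sys.version_info.minor}, expected 3.8")
    for message in check_versions(EXPECTED):
        print(message)
```

An empty output means the checked packages match. The CUDA version is not checked here, since it depends on the local driver and toolkit installation.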

To train EAGLE, run the following command in the directory ./scripts:

python main.py --mode=train --use_cfg=1 --dataset=<dataset_name>

Explanations for the arguments:

- `use_cfg`: whether to train with the preset configurations.
- `dataset`: name of the dataset. `collab`, `yelp` and `act` are for Table 1, while `collab_04`, `collab_06`, and `collab_08` are for Table 2.
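To run every dataset in turn, the command can be wrapped in a small driver script. This is a minimal sketch under stated assumptions: the helper names `build_command` and `run_all` are hypothetical, the script is not part of the repository, and it only uses the arguments and dataset names documented in this README.

```python
import subprocess
import sys

# Dataset names as documented in this README.
TABLE1_DATASETS = ["collab", "yelp", "act"]
TABLE2_DATASETS = ["collab_04", "collab_06", "collab_08"]


def build_command(mode, dataset, use_cfg=1):
    """Build the argument list for one main.py run (hypothetical helper)."""
    return [
        sys.executable,
        "main.py",
        f"--mode={mode}",
        f"--use_cfg={use_cfg}",
        f"--dataset={dataset}",
    ]


def run_all(mode, datasets, scripts_dir="./scripts", dry_run=True):
    """Run main.py once per dataset; with dry_run=True, only print the commands."""
    for dataset in datasets:
        command = build_command(mode, dataset)
        if dry_run:
            print(" ".join(command))
        else:
            # check=True stops the loop as soon as one run fails.
            subprocess.run(command, cwd=scripts_dir, check=True)


if __name__ == "__main__":
    # Prints the six training commands without executing them.
    run_all("train", TABLE1_DATASETS + TABLE2_DATASETS)
```

Passing `dry_run=False` executes the runs from the root directory of the repository, and passing `"eval"` as the mode builds the evaluation commands instead.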

To evaluate EAGLE with trained models, run the following command in the directory ./scripts:

python main.py --mode=eval --use_cfg=1 --dataset=<dataset_name>

Please place the trained model in the directory ./saved_model. Note that we already provide all the pre-trained models in that directory for quick re-evaluation.

To help reproduce the main results in Table 1 and Table 2, we provide all experiment logs in the directory ./logs/history. Run the following command in the directory ./scripts to reproduce the results in results.txt:

python show_result.py

If you find this repository helpful, please consider citing the following paper. Questions and discussion are welcome at yuanhn@buaa.edu.cn.

@inproceedings{yuan2023environmentaware,
  title={Environment-Aware Dynamic Graph Learning for Out-of-Distribution Generalization},
  author={Yuan, Haonan and Sun, Qingyun and Fu, Xingcheng and Zhang, Ziwei and Ji, Cheng and Peng, Hao and Li, Jianxin},
  booktitle={Thirty-seventh Conference on Neural Information Processing Systems},
  year={2023},
  url={https://openreview.net/forum?id=n8JWIzYPRz}
}

Part of this code is inspired by Zeyang Zhang et al.'s DIDA. We owe sincere thanks to their valuable efforts and contributions.