Muzammal Naseer, Ahmad Mahmood, Salman Khan, & Fahad Khan

Paper (arxiv), Reviews & Response

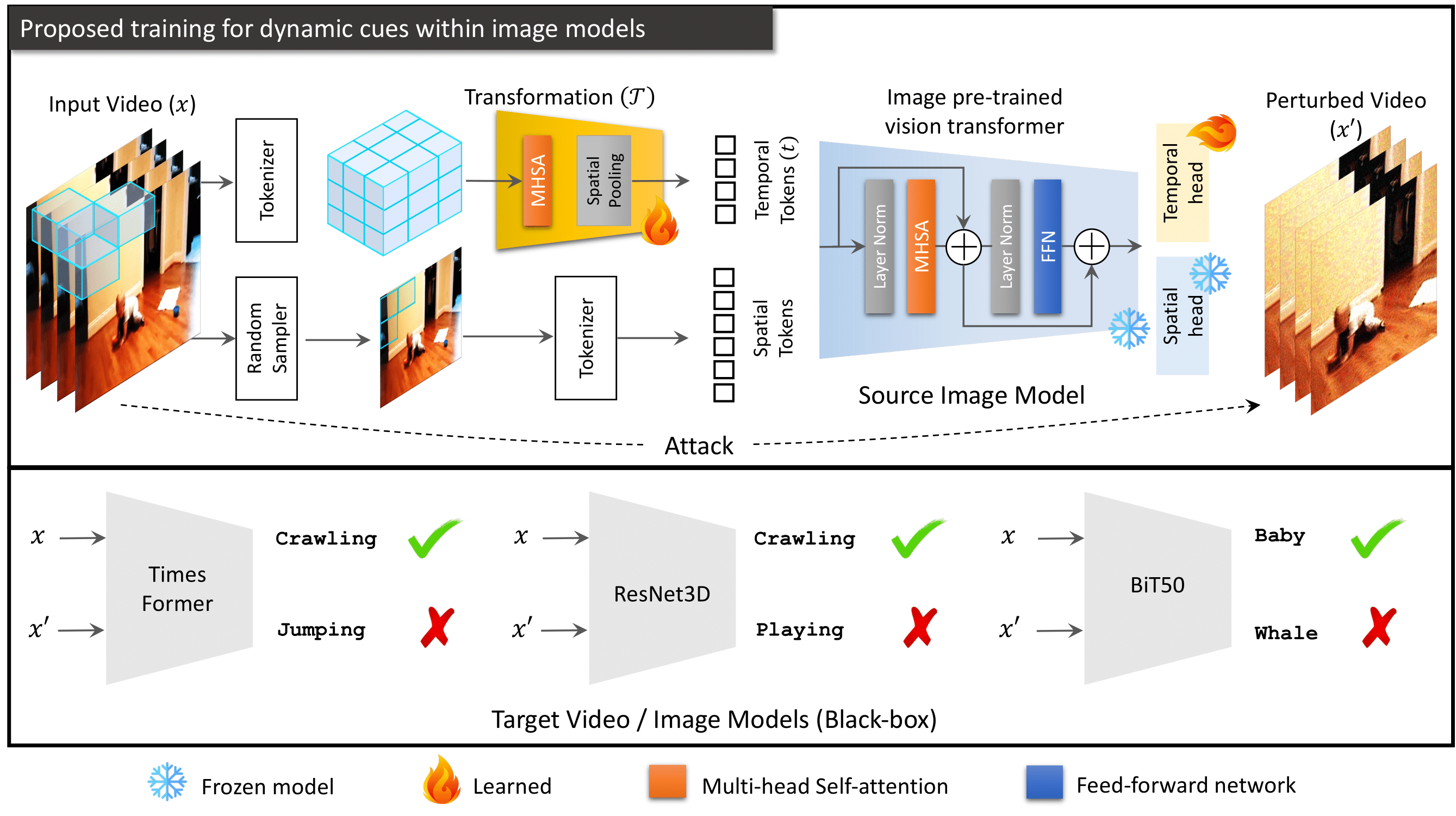

Abstract: The transferability of adversarial perturbations between image models has been extensively studied. In this case, an attack is generated from a known surrogate, e.g., an ImageNet-trained model, and transferred to change the decision of an unknown (black-box) model trained on an image dataset. However, attacks generated from image models do not capture the dynamic nature of a moving object or a changing scene due to a lack of temporal cues within image models. This leads to reduced transferability of adversarial attacks from representation-enriched image models such as Supervised Vision Transformers (ViTs), Self-supervised ViTs (DINO), and Vision-language models (CLIP) to black-box video models. In this work, we induce dynamic cues within the image models without sacrificing their original performance on images. To this end, we optimize temporal prompts through frozen image models to capture motion dynamics. Our temporal prompts are the result of a learnable transformation that allows optimizing for temporal gradients during an adversarial attack to fool the motion dynamics. Specifically, we introduce spatial (image) and temporal (video) cues within the same source model through task-specific prompts. Attacking such prompts maximizes the adversarial transferability from image-to-video and image-to-image models using the attacks designed for image models. As an example, an iterative attack launched from the image model DeiT-B with temporal prompts reduces generalization (top-1 % accuracy) of a video model by 35% on Kinetics-400. Our approach also improves adversarial transferability to image models by 9% on ImageNet w.r.t. the current state-of-the-art approach. Our attack results indicate that the attacker does not need specialized architectures, e.g., divided space-time attention, 3D convolutions, or multi-view convolution networks for different data modalities. Image models are effective surrogates to optimize an adversarial attack to fool black-box models in a changing environment over time.

- The attacker does not need specialized architectures, e.g., divided space-time attention, 3D convolutions, or multi-view convolution networks for different data modalities. Image models are effective surrogates to optimize an adversarial attack to fool black-box models in a changing environment over time.
- We introduce dynamic cues within frozen image models without losing the original image representation (e.g., generalization on ImageNet). Both image and video representations enhance adversarial transferability from our adapted image models. For example, a pre-trained ImageNet ViT with approximately 6 million parameters exhibits 44.6 and 72.2 top-1 (%) accuracy on Kinetics-400 and ImageNet validation sets using our approach.
- Our approach simply augments the existing adversarial attacks developed for image models to fool video or multi-view models.
- We analyze three types of training schemes (supervised, self-supervised, and text-supervised) and highlight new insights into the adversarial space of vision-language models.
- We further analyze the textual bias within the vision-language model CLIP to explain its low adversarial transferability to vision models.
- We observe a highly transferable adversarial space in self-supervised vision transformer models such as DINO.

- Our paper is accepted as a conference paper at ICLR 2023

- Pretrained weights released.

| Dataset | DeiT-tiny | DeiT-small | DeiT-base | Dino | Clip |
|---|---|---|---|---|---|
| Kinetics-400 | 44.6 | 53.0 | 57.0 | 57.4 | 67.3 |
| HMDB | 36.2 | 44.6 | 47.7 | 45.1 | 54.6 |
| UCF | 70.0 | 77.2 | 81.4 | 79.5 | 86.0 |
| SSV2 | 11.2 | 15.3 | 17.5 | 17.4 | 19.9 |
| Shadow - ModelNet40 | 81.0 | 86.2 | 88.2 | 89.8 | 88.9 |
| Depth - ModelNet40 | 86.0 | 86.6 | 90.1 | 90.1 | 89.5 |


| Dataset | Input Size | Model | Pretrained Weights |
|---|---|---|---|
| ImageNet & Kinetics-400 | 224x224 & 8x224x224 | DeiT-tiny | Link |
| ImageNet & HMDB-51 | 224x224 & 8x224x224 | DeiT-tiny | Link |
| ImageNet & UCF-101 | 224x224 & 8x224x224 | DeiT-tiny | Link |
| ImageNet & SSv2 | 224x224 & 8x224x224 | DeiT-tiny | Link |
| ImageNet & Kinetics-400 | 224x224 & 8x224x224 | DeiT-small | Link |
| ImageNet & HMDB-51 | 224x224 & 8x224x224 | DeiT-small | Link |
| ImageNet & UCF-101 | 224x224 & 8x224x224 | DeiT-small | Link |
| ImageNet & SSv2 | 224x224 & 8x224x224 | DeiT-small | Link |
| ImageNet & Kinetics-400 | 224x224 & 8x224x224 | DeiT-base | Link |
| ImageNet & HMDB-51 | 224x224 & 8x224x224 | DeiT-base | Link |
| ImageNet & UCF-101 | 224x224 & 8x224x224 | DeiT-base | Link |
| ImageNet & SSv2 | 224x224 & 8x224x224 | DeiT-base | Link |

| Dataset | Input Size | Model | Pretrained Weights |
|---|---|---|---|
| ImageNet & Kinetics-400 | 224x224 & 8x224x224 | Dino | Link |
| ImageNet & HMDB-51 | 224x224 & 8x224x224 | Dino | Link |
| ImageNet & UCF-101 | 224x224 & 8x224x224 | Dino | Link |
| ImageNet & SSv2 | 224x224 & 8x224x224 | Dino | Link |

| Dataset | Input Size | Model | Pretrained Weights |
|---|---|---|---|
| ImageNet & Kinetics-400 | 224x224 & 8x224x224 | Clip | Link |
| ImageNet & HMDB-51 | 224x224 & 8x224x224 | Clip | Link |
| ImageNet & UCF-101 | 224x224 & 8x224x224 | Clip | Link |
| ImageNet & SSv2 | 224x224 & 8x224x224 | Clip | Link |

    git clone https://github.com/Muzammal-Naseer/DCViT-AT
    cd DCViT-AT
    conda env create -n [name] --file environment.yml
    conda activate [name]
    python setup.py build develop

See details in md files

The folder Dynamic_Cues contains all the code to train our models.

Change the arguments in train_video.sh file to train different variations. We provide a sample script to train DeiT-base on Ucf-101:

    python tools/run_net.py \
      --cfg configs/Ucf101/8_224.yaml \
      DATA.PATH_TO_DATA_DIR '/path/to/ucf/annotations' \
      NUM_GPUS 2 \
      TRAIN.BATCH_SIZE 16 \
      MODEL.MODEL_NAME deit_base_patch16_224_timeP_1 \
      MODEL.NUM_CLASSES 101 \
      OUTPUT_DIR /path/to/save/model \
      TRAIN.FINETUNE False \
      SOLVER.BASE_LR 0.005 \
      SOLVER.MAX_EPOCH 15 \
      TRAIN.EVAL_PERIOD 5 \
      DATA.NUM_FRAMES 8 \
      SOLVER.STEPS '[0,11,14]' \
      SOLVER.LRS '[1,0.1,0.01]' \
      TRAIN.CHECKPOINT_PERIOD 15 \
      DATA.TRAIN_JITTER_SCALES '[256,320]' \
      DATA.TRAIN_CROP_SIZE 224
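The `SOLVER.STEPS` and `SOLVER.LRS` arguments in the command above describe a step-wise learning-rate schedule. As a minimal sketch, assuming the config follows the steps-with-relative-LRs convention of the SlowFast/TimeSformer codebase this repository builds on (each entry of `SOLVER.LRS` is a multiplier on `SOLVER.BASE_LR` that takes effect at the matching epoch in `SOLVER.STEPS`), the per-epoch learning rate could be computed as follows. The function name `lr_at_epoch` is hypothetical, and warmup is ignored.

```python
def lr_at_epoch(epoch, base_lr=0.005, steps=(0, 11, 14), lrs=(1.0, 0.1, 0.01)):
    """Return the learning rate for a 0-indexed epoch under a step schedule.

    Assumption: lrs[i] is a multiplier on base_lr that applies from epoch
    steps[i] until the next step (or the end of training).
    """
    if len(steps) != len(lrs):
        raise ValueError("steps and lrs must have the same length")
    multiplier = lrs[0]
    for step, rel_lr in zip(steps, lrs):
        # Keep the multiplier of the last step that has already been reached.
        if epoch >= step:
            multiplier = rel_lr
    return base_lr * multiplier


if __name__ == "__main__":
    # Print the schedule for the 15 epochs used in the sample command.
    for epoch in range(15):
        print(epoch, lr_at_epoch(epoch))
```

Under these assumptions, the sample command trains at the base rate for epochs 0 to 10, at one tenth of it for epochs 11 to 13, and at one hundredth of it for the final epoch.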


Change the arguments in train_img3d.sh file to train different variations. We provide a sample script to train DeiT-base on Depth:

    # DATA.PATH_TO_DATA_DIR: path should end with '*' to read all classes
    python tools/run_net.py \
      --cfg configs/Img3d/8_224.yaml \
      DATA.PATH_TO_DATA_DIR '/root/Depth/*/' \
      NUM_GPUS 2 \
      TRAIN.BATCH_SIZE 16 \
      MODEL.MODEL_NAME deit_base_patch16_224_timeP_1 \
      MODEL.NUM_CLASSES 40 \
      OUTPUT_DIR /path/to/save/model \
      TRAIN.FINETUNE False \
      SOLVER.BASE_LR 0.005 \
      SOLVER.MAX_EPOCH 15 \
      TRAIN.EVAL_PERIOD 5 \
      DATA.NUM_FRAMES 16 \
      SOLVER.STEPS '[0,11,14]' \
      SOLVER.LRS '[1,0.1,0.01]' \
      TRAIN.CHECKPOINT_PERIOD 15 \
      DATA.TRAIN_JITTER_SCALES '[256,320]' \
      DATA.TRAIN_CROP_SIZE 224

Change the arguments in the test_net.sh file to test different variations. We provide a sample script to test DeiT-base on Ucf-101:

    python tools/run_net.py \
      --cfg configs/Ucf-101/8_224_TEST.yaml \
      DATA.PATH_TO_DATA_DIR '/path/to/ucf/annotations' \
      TRAIN.ENABLE False \
      TEST.CHECKPOINT_FILE_PATH /path/to/saved/model \
      MODEL.MODEL_NAME deit_base_patch16_224_timeP_1 \
      TEST.SAVE_RESULTS_PATH /path/to/save/pkl/file \
      DATA.TEST_CROP_SIZE 224 \
      DATA.NUM_FRAMES 8 \
      TEST.NUM_ENSEMBLE_VIEWS 3 \
      NUM_GPUS 4 \
      TEST.BATCH_SIZE 16 \
      TEST.NUM_SPATIAL_CROPS 3

Get the top-1 and top-5 accuracies by running the command:

python get_pickle_acc.py /path/to/saved/pkl/file
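For reference, top-1 and top-5 accuracy can be computed from per-video class scores as in the sketch below. This is not the code of get_pickle_acc.py: the layout of the saved pickle file is not documented here, so the sketch assumes the scores and labels have already been loaded into plain Python lists, and the function name `topk_accuracy` is hypothetical.

```python
def topk_accuracy(scores, labels, ks=(1, 5)):
    """Return {k: accuracy in percent} for each k in ks.

    scores: list of per-sample lists of class scores (higher = more likely).
    labels: list of ground-truth class indices, one per sample.
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    if not labels:
        raise ValueError("at least one sample is required")

    hits = {k: 0 for k in ks}
    for sample_scores, label in zip(scores, labels):
        # Class indices sorted from highest to lowest score.
        ranked = sorted(
            range(len(sample_scores)),
            key=lambda c: sample_scores[c],
            reverse=True,
        )
        for k in ks:
            # A sample counts as a hit if its label is among the k best classes.
            if label in ranked[:k]:
                hits[k] += 1
    return {k: 100.0 * hits[k] / len(labels) for k in ks}
```

A call such as `topk_accuracy(scores, labels)` returns a dictionary keyed by `k`, so the top-1 value is read as `result[1]` and the top-5 value as `result[5]`.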

Four types of models are used as target models: TimeSformer, ResNet50, MVCNN, and I3D. We provide pretrained models that can be directly used to evaluate the performance of attacks.

TimeSformer, ResNet50, and I3D are trained on an input size of 8x224x224. MVCNN is trained on 12x224x224.

- Divided Space Time Attention is used to train these models.

| Dataset | Pretrained Weights |
|---|---|
| Kinetics-400 | Link |
| Hmdb-51 | Link |
| Ucf-101 | Link |
| SSv2 | Link |

| Dataset | Pretrained Weights |
|---|---|
| Kinetics-400 | Link |
| Hmdb-51 | Link |
| Ucf-101 | Link |
| SSv2 | Link |

| Dataset | Pretrained Weights |
|---|---|
| Kinetics-400 | Link |
| Hmdb-51 | Link |
| Ucf-101 | Link |
| SSv2 | Link |

| Dataset | Pretrained Weights |
|---|---|
| Shaded | Link |
| Depth | Link |

Three types of attacks have been presented in our paper.

- Simple Black-box attack

- Ensemble attack

- Cross Task attack

Each attack can be run using the corresponding bash file under the scripts folder. For example, the simple black box attack is run using the following command:

    evaluation() {
      # Arguments (comments are kept outside the command so that the
      # backslash line continuations are not broken):
      #   --test_dir            path to the annotation file
      #   --data_type           dataset name
      #   --src_model           source model name
      #   --tar_model           target model name
      #   --attack_type         attack type
      #   --target_label        target label for targeted attacks (-1 for untargeted attacks)
      #   --iter                number of iterations
      #   --eps                 epsilon (should be 16 for ImageNet and 70 for videos because of normalization)
      #   --index               index of the frames to be attacked (last or all)
      #   --pre_trained         path to the source model
      #   --tar_pre_trained     path to the target model
      #   --num_temporal_views  number of temporal views
      #   --num_classes         number of classes in the target dataset
      #   --src_num_cls         number of classes in the source dataset
      #   --num_frames          number of frames in the input of the target model
      #   --num_gpus            number of GPUs (currently only 1 is supported)
      #   --src_frames          number of frames in the input of the source model
      #   --add_grad            add the gradient of the main frame to all other frames
      python eval_adv_base.py \
        --test_dir "path/to/annotation/file" \
        --data_type "$1" \
        --src_model "$2" \
        --tar_model "$3" \
        --image_list "$4" \
        --attack_type "$5" \
        --target_label "$6" \
        --iter "$7" \
        --eps "$8" \
        --index "$9" \
        --pre_trained "${10}" \
        --tar_pre_trained "${11}" \
        --num_temporal_views 3 \
        --num_classes 101 \
        --src_num_cls 101 \
        --batch_size 8 \
        --num_frames 8 \
        --num_gpus 1 \
        --src_frames 8 \
        --num_div_gpus 1 \
        --add_grad True \
        --variation "20iter"
    }

    for ATTACK in 'dim' 'mifgsm' 'pgd' 'fgsm'; do
      evaluation 'ucf101' "deit_base_patch16_224_timeP_1_cat" "resnet_50" "" "$ATTACK" -1 20 70 "all" "path/to/source/model" "path/to/target/model"
    done

After adding relevant path names to the arguments, run the command bash Attacks/run_attacks/adv.sh.
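To make the attack names in the loop above more concrete, the sketch below shows the core update shared by iterative L-infinity attacks: a signed-gradient step followed by projection back into the eps-ball around the clean input. With `decay=0` it behaves like a basic iterative attack (PGD without a random start); with `decay>0` it accumulates normalized gradients in the style of MI-FGSM. This is an illustrative toy and not the implementation in eval_adv_base.py: the linear two-class model, the analytic gradient, the [0, 1] input range, and the names `iterative_attack` and `loss_grad` are all assumptions made to keep the example self-contained.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss_grad(x, y, w, b):
    """Gradient of binary cross-entropy w.r.t. input x for a toy linear model."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [(p - y) * wi for wi in w]


def sign(v):
    return (v > 0) - (v < 0)


def iterative_attack(x, y, w, b, eps=16 / 255, iters=20, decay=0.0):
    """Untargeted iterative L-infinity attack on the toy model.

    eps=16/255 mirrors an epsilon of 16 on a 0-255 pixel scale.
    decay=0 gives a plain iterative sign-gradient attack; decay>0 adds
    MI-FGSM-style momentum over L1-normalized gradients.
    """
    step = eps / iters
    x_adv = list(x)
    momentum = [0.0] * len(x)
    for _ in range(iters):
        grad = loss_grad(x_adv, y, w, b)
        l1 = sum(abs(g) for g in grad) or 1.0  # guard against a zero gradient
        momentum = [decay * m + g / l1 for m, g in zip(momentum, grad)]
        for i in range(len(x_adv)):
            # Ascend the loss, then project into the eps-ball and valid range.
            stepped = x_adv[i] + step * sign(momentum[i])
            stepped = min(max(stepped, x[i] - eps), x[i] + eps)
            x_adv[i] = min(max(stepped, 0.0), 1.0)
    return x_adv
```

In the actual scripts the gradient comes from the source model (with or without temporal prompts) and the perturbed input is then passed to the target model; the toy model here only stands in for that gradient computation.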

If you find our work, this repository, or pretrained image models with temporal prompts useful, please consider giving a star ⭐ and citation.

    @inproceedings{naseer2023boosting,
      title={Boosting Adversarial Transferability using Dynamic Cues},
      author={Muzammal Naseer and Ahmad Mahmood and Salman Khan and Fahad Khan},
      booktitle={The Eleventh International Conference on Learning Representations},
      year={2023},
      url={https://openreview.net/forum?id=SZynfVLGd5}
    }

Our code is based on the DeiT, TimeSformer and DINO repositories. We thank the authors for releasing their code.