Run one command, get a QEMU or gem5 Buildroot BusyBox virtual machine built from source with several minimal Linux kernel 4.16 module development example tutorials with GDB and KGDB step debugging and minimal educational hardware models. "Tested" in x86, ARM and MIPS guests, Ubuntu 17.10 host.

- 1. Getting started

- 1.1. Module documentation

- 1.2. Rebuild

- 1.3. Don’t retype arguments all the time

- 1.4. Clean the build

- 1.5. Filesystem persistency

- 1.6. Message control

- 1.7. Text mode

- 1.8. Automatic startup commands

- 1.9. Kernel command line parameters

- 1.10. Kernel command line parameters escaping

- 1.11. What command was actually run?

- 1.12. modprobe

- 1.13. myinsmod

- 2. GDB step debug

- 3. KGDB

- 4. gdbserver

- 5. Architectures

- 6. init

- 7. KVM

- 8. X11

- 9. initrd

- 10. Linux kernel

- 11. QEMU

- 12. gem5

- 12.1. gem5 getting started

- 12.2. gem5 vs QEMU

- 12.3. gem5 run benchmark

- 12.4. gem5 kernel command line parameters

- 12.5. gem5 GDB step debug

- 12.6. gem5 checkpoint

- 12.7. Pass extra options to gem5

- 12.8. Run multiple gem5 instances at once

- 12.9. gem5 and QEMU with the same kernel configuration

- 12.10. m5

- 12.11. gem5 limitations

- 13. Insane action

- 14. Buildroot

- 15. Benchmark this repo

- 16. Conversation

Reserve 12 GB of disk and run:

git clone https://github.com/************/linux-kernel-module-cheat
cd linux-kernel-module-cheat
./configure && ./build && ./run

The first configure will take a while (30 minutes to 2 hours) to clone and build; see Benchmark this repo for more details.

If you don’t want to wait, you could also try to compile the examples and run them on your host computer as explained at Run on host, but as explained in that section, that is dangerous, limited, and will likely not work.

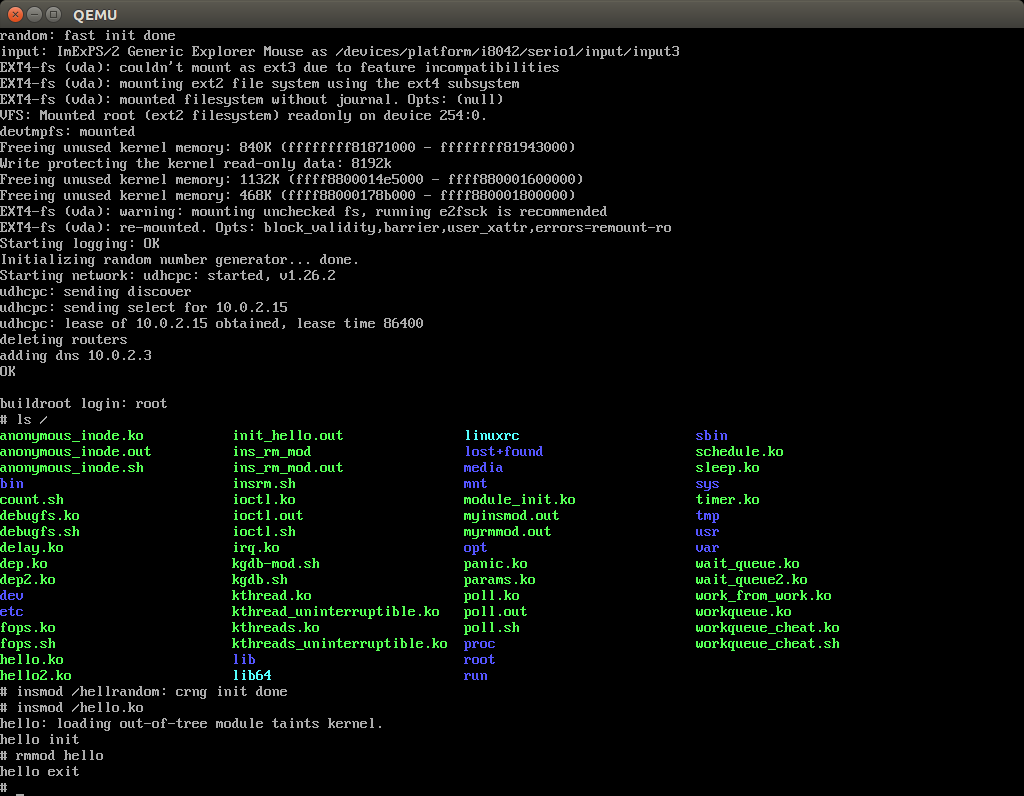

After QEMU opens up, you can start playing with the kernel modules:

insmod /hello.ko
insmod /hello2.ko
rmmod hello
rmmod hello2

This should print to the screen:

hello init
hello2 init
hello cleanup
hello2 cleanup

which are printk messages from init and cleanup methods of those modules.

All available modules can be found in the kernel_module directory, and each module is documented at the top of its source file:

head kernel_module/modulename.c

Many of the modules have userland test scripts / executables with the same name as the module, e.g. from inside the guest:

/modulename.sh
/modulename.out

The sources of those tests will further clarify what the corresponding kernel module does. To find them on the host, do a quick:

git ls-files | grep modulename

After making changes to a package, you must explicitly request it to be rebuilt.

For example, if you modify the kernel modules, you must rebuild with:

./build -k

which is just an alias for:

./build -- kernel_module-reconfigure

where kernel_module is the name of our Buildroot package that contains the kernel modules.

Other important targets are:

./build -l -q -G

which rebuild the Linux kernel, QEMU and gem5 respectively. They are essentially aliases for:

./build -- linux-reconfigure host-qemu-reconfigure gem5-reconfigure

However, some of our aliases such as -l also have some magic convenience properties. So generally just use the aliases instead.

We don’t rebuild by default because, even with make incremental rebuilds, the timestamp check takes a few annoying seconds.

Not all packages have an alias; when they don’t, just use the form:

./build -- <pkg>-reconfigure
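If you find yourself rebuilding the same group of packages over and over, a tiny shell helper can expand package names into those targets. This is a hypothetical helper for your own shell, not something our scripts provide; the package names are just the Buildroot ones mentioned above.

```sh
# Hypothetical helper: expand Buildroot package names into the
# "<pkg>-reconfigure" targets that ./build forwards after "--".
reconfigure_targets() (
  out=
  for pkg in "$@"; do
    # ${out:+$out } adds a separating space only once out is non-empty
    out="${out:+$out }$pkg-reconfigure"
  done
  printf '%s\n' "$out"
)
```

Usage: ./build -- $(reconfigure_targets linux host-qemu gem5), left unquoted on purpose so that the shell splits it into one argument per target.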

It gets annoying to retype -a aarch64 for every single command, or to remember ./build -B setups.

To simplify that, do:

cp cli.gitignore.example cli.gitignore

and then edit the cli.gitignore file to your needs.

That file is used to pass extra command line arguments to most of our utilities.

Of course, you could get by with the shell history, or your own aliases, but we’ve felt that it was worth introducing a common mechanism for that.

You did something crazy, and nothing seems to work anymore?

All builds are stored under buildroot/.

The coarsest thing you can do is:

cd buildroot
git checkout -- .
git clean -xdf .

To only nuke one architecture, do:

rm -rf out/x86_64/buildroot

To only nuke one package:

rm -rf out/x86_64/buildroot/build/host-qemu-custom
./build

This is sometimes necessary when changing the version of the submodules, and then builds fail. We should try to understand why and report bugs.

The root filesystem is persistent across runs:

./run
date >f
# poweroff syncs by default without -n.
poweroff

then:

./run
cat f

The command:

sync

also saves the disk.

This is particularly useful to re-run shell commands from the history of a previous session with Ctrl + R.

However, when you do:

./build

the disk image gets overwritten by a fresh filesystem and you lose all changes.

Remember that if you forcibly turn QEMU off without sync or poweroff from inside the VM, e.g. by closing the QEMU window, disk changes may not be saved.

However, when booting from initrd without a disk, persistency is lost.

We use printk a lot, and it shows on the QEMU terminal by default. If that annoys you (e.g. you want to see stdout separately), do:

dmesg -n 1

See also: https://superuser.com/questions/351387/how-to-stop-kernel-messages-from-flooding-my-console

When in graphical mode, you can scroll up a bit on the default TTY with:

Shift + PgUp

but I never managed to increase that buffer.

The superior alternative is to use text mode or a telnet connection.

By default, we show the serial console directly on the current terminal, without opening a QEMU window.

To enable graphic mode, use:

./run -x

Text mode is the default due to the following considerable advantages:

- copy and paste commands and stdout output to / from host

- get full panic traces when you start making the kernel crash :-) See also: https://unix.stackexchange.com/questions/208260/how-to-scroll-up-after-a-kernel-panic

- have a large scroll buffer, and be able to search it, e.g. by using tmux on host

- one less window floating around to think about in addition to your shell :-)

- graphics mode has only been properly tested on x86_64.

Text mode has the following limitations over graphics mode:

- you can’t see graphics such as those produced by X11

- very early kernel messages such as early console in extract_kernel only show on the GUI, since at such early stages, not even the serial has been set up.

Both good and bad:

- Ctrl + C kills the host emulator instead of sending SIGINT to the guest process.

  On one hand, this provides an easy way to quit QEMU.

  On the other, we are unable to easily kill the foreground guest process, which is especially problematic when it is something like an infinite loop.

  TODO: understand why and how to change that.

  It is also hard to enter the monitor for the same reason. Our workaround is:

  ./qemumonitor

  I think the problem was reversed in older QEMU versions: https://superuser.com/questions/1087859/how-to-quit-the-qemu-monitor-when-not-using-a-gui/1211516#1211516

  The QEMU monitor command sendkey ctrl-c does not work on the text terminal either.

  This is however fortunate when running QEMU with GDB, as the Ctrl + C reaches GDB and breaks.

- Not every key press generates interrupts, only the final Enter.

  This makes things a bit more reproducible, since the microsecond in which you pressed a key does not matter.

  But on the other hand maybe you are interested in observing the interrupts generated by key presses.

When debugging a module, it becomes tedious to wait for build and re-type:

/modulename.sh

every time.

To automate that, use the methods described at: init

Bootloaders can pass a string as input to the Linux kernel when it is booting to control its behaviour, much like the execve system call does to userland processes.

This allows us to control the behaviour of the kernel without rebuilding anything.

With QEMU, QEMU itself acts as the bootloader and provides the -append option, which we expose through ./run -e, e.g.:

./run -e 'foo bar'

Then inside the guest, you can check which options were given with:

cat /proc/cmdline

They are also printed at the beginning of the boot message:

dmesg | grep "Command line"

See also:

The arguments are documented in the kernel documentation: https://www.kernel.org/doc/html/v4.14/admin-guide/kernel-parameters.html
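To read a single parameter back from inside the guest, for example from a script, something like the following is enough for simple cases. It is a minimal sketch that assumes values contain no spaces: double-quoted values such as opt="a b" are not handled.

```sh
# Print the value of the first KEY=VALUE word on a kernel command line.
# $2 defaults to the contents of /proc/cmdline. Quoted values are NOT
# handled: opt="a b" would be seen as the two words opt="a and b".
cmdline_get() (
  key=$1
  cmdline=${2-$(cat /proc/cmdline)}
  set -f  # stop words containing * or ? from being glob-expanded
  for word in $cmdline; do
    case $word in
      "$key"=*) printf '%s\n' "${word#*=}"; exit 0 ;;
    esac
  done
  exit 1  # not found
)
```

For example, cmdline_get root prints /dev/sda on a command line that contains root=/dev/sda, and exits with status 1 if the parameter is absent.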

When dealing with real boards, extra command line options are provided in some magic bootloader configuration file, e.g.:

- GRUB configuration files: https://askubuntu.com/questions/19486/how-do-i-add-a-kernel-boot-parameter

- Raspberry Pi /boot/cmdline.txt on a magic partition: https://raspberrypi.stackexchange.com/questions/14839/how-to-change-the-kernel-commandline-for-archlinuxarm-on-raspberry-pi-effectly

Double quotes can be used to escape spaces as in opt="a b", but double quotes themselves cannot be escaped, e.g. opt="a\"b" does not work.

This even led us to use base64 encoding with -E!
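The idea behind the base64 trick is that base64 output (letters, digits, +, / and =) contains no spaces or double quotes, so the encoded string survives kernel command line parsing as a single word and can be decoded back on the guest. A minimal sketch of both sides, assuming a base64 utility on the host and in the guest (coreutils and BusyBox both provide one); the exact parameter name that -E uses is not shown here, so check ./run for that.

```sh
# Encode an arbitrary shell snippet into a single base64 word.
# tr -d '\n' removes the line wrapping that base64 adds to long output.
encode_eval() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Decode it back, e.g. on the guest before handing it to eval.
decode_eval() {
  printf '%s' "$1" | base64 -d
}
```

The encoded word can then go on the command line as the value of a key=value parameter without any further escaping.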

When asking for help on upstream repositories outside of this repository, you will need to provide the commands that you are running in detail without referencing our scripts.

For example, QEMU developers will only want to see the final QEMU command that you are running.

We make that easy by building commands as strings, and then echoing them before evaling.

So for example when you run:

./run -a arm

Stdout shows a line with the full command of the form:

./out/arm/buildroot/host/usr/bin/qemu-system-arm -m 128M -monitor telnet::45454,server,nowait -netdev user,hostfwd=tcp::45455-:45455,id=net0 -smp 1 -M versatilepb -append 'root=/dev/sda nokaslr norandmaps printk.devkmsg=on printk.time=y' -device rtl8139,netdev=net0 -dtb ./out/arm/buildroot/images/versatile-pb.dtb -kernel ./out/arm/buildroot/images/zImage -serial stdio -drive file='./out/arm/buildroot/images/rootfs.ext2.qcow2,if=scsi,format=qcow2'

This line is also saved to a file for convenience:

cat ./run.log
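The "build a string, print it, then eval it" pattern can be sketched as follows. This is a simplified illustration, not the actual ./run code: the function name is made up, and only the run.log file name comes from the text above.

```sh
# Print a command, save it to a log file, then run it.
# $1: the full command as a single string, $2: log file (default ./run.log).
run_logged() (
  cmd=$1
  log=${2:-./run.log}
  printf '%s\n' "$cmd"          # what you paste into an upstream bug report
  printf '%s\n' "$cmd" >"$log"  # same line, kept for later
  eval "$cmd"                   # quotes inside $cmd are honoured by eval
)
```

Keeping the command as one string is what makes this reliable: the exact same text is shown, logged and executed, so what you copy is what actually ran.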

If you are feeling fancy, you can also insert modules with:

modprobe dep2
lsmod # dep and dep2

This method also deals with module dependencies, which we almost never use, to keep the examples simple.

Removal also removes required modules that have zero usage count:

modprobe -r dep2
lsmod # Nothing.

but it can’t know if you actually insmodded them separately or not:

modprobe dep
modprobe dep2
modprobe -r dep2 # Nothing.

so it is a bit risky.

modprobe searches for modules under:

ls /lib/modules/*/extra/

Kernel modules built from the Linux mainline tree with CONFIG_SOME_MOD=m are automatically available with modprobe, e.g.:

modprobe dummy-irq

If you are feeling raw, you can insert and remove modules with our own minimal module inserter and remover!

/myinsmod.out /hello.ko
/myrmmod.out hello

which teaches you how it is done from C code.

-d makes QEMU wait for a GDB connection, otherwise we could accidentally go past the point we want to break at:

./run -d

Say you want to break at start_kernel. So on another shell:

./rungdb start_kernel

or at a given line:

./rungdb init/main.c:1088

Now QEMU will stop there, and you can use the normal GDB commands:

l
n
c

See also:

-O0 is an impossible dream, -O2 being the default: https://stackoverflow.com/questions/29151235/how-to-de-optimize-the-linux-kernel-to-and-compile-it-with-o0 So get ready for some weird jumps, and <value optimized out> fun. Why, Linux, why.

Let’s observe the kernel as it reacts to some userland actions.

Start QEMU with just:

./run

and after boot inside a shell run:

/count.sh

which counts to infinity to stdout. Then in another shell, run:

./rungdb

and then hit:

Ctrl + C
break sys_write
continue
continue
continue

And you now control the counting on the first shell from GDB!

When you hit Ctrl + C, if we happen to be inside kernel code at that point, which is very likely if there are no heavy background tasks waiting, and we are just waiting on a sleep type system call of the command prompt, we can already see the source for the random place inside the kernel where we stopped.

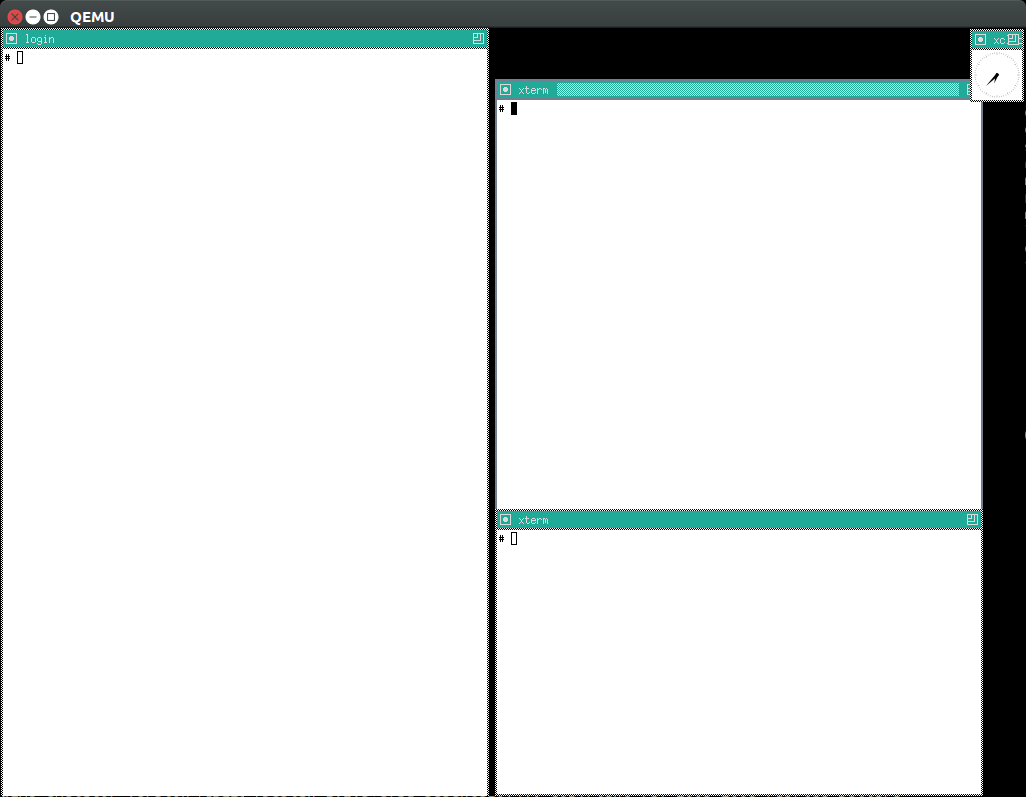

tmux just makes things even more fun by allowing us to see both terminals at once without dragging windows around! https://unix.stackexchange.com/questions/152738/how-to-split-a-new-window-and-run-a-command-in-this-new-window-using-tmux/432111#432111

./tmu ./rungdb && ./run -d

Loadable kernel modules are a bit trickier since the kernel can place them at different memory locations depending on load order.

So we cannot set the breakpoints before insmod.

However, the Linux kernel GDB scripts offer the lx-symbols command, which takes care of that beautifully for us.

Shell 1:

./run

Wait for the boot to end and run:

insmod /timer.ko

This prints a message to dmesg every second.

Shell 2:

./rungdb

In GDB, hit Ctrl + C, and note how it says:

scanning for modules in /home/ciro/bak/git/linux-kernel-module-cheat/out/x86_64/buildroot/build/linux-custom
loading @0xffffffffc0000000: ../kernel_module-1.0//timer.ko

That’s lx-symbols working! Now simply:

b lkmc_timer_callback
c
c
c

and we now control the callback from GDB!

Just don’t forget to remove your breakpoints after rmmod, or they will point to stale memory locations.

TODO: why does break work_func for insmod kthread.ko not break the first time I insmod, but breaks the second time?

Useless, but a good way to show how hardcore you are. Disable lx-symbols with:

./rungdb -L

From inside guest:

insmod /fops.ko
cat /proc/modules

This will give a line of form:

fops 2327 0 - Live 0xfffffffa00000000

And then tell GDB where the module was loaded with:

Ctrl + C
add-symbol-file ../kernel_module-1.0/fops.ko 0xfffffffa00000000
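Copying the address by hand gets old quickly. A minimal sketch that prints the add-symbol-file command straight from /proc/modules, assuming the .ko file is named exactly like the module (the kernel reports hyphens in module names as underscores, so this breaks for files like dummy-irq.ko) and that the modules live in ../kernel_module-1.0 as in the example above:

```sh
# Print the GDB add-symbol-file command for module $1, reading
# /proc/modules-formatted lines from stdin. $2 is the host directory
# holding the .ko files (default ../kernel_module-1.0).
add_symbol_file_cmd() (
  name=$1
  dir=${2:-../kernel_module-1.0}
  # Fields: name size refcount deps state address [taint flags]
  while read -r mod size refs deps state addr rest; do
    if [ "$mod" = "$name" ]; then
      printf 'add-symbol-file %s/%s.ko %s\n' "$dir" "$name" "$addr"
      exit 0
    fi
  done
  exit 1  # module not loaded
)
```

On the guest, run add_symbol_file_cmd fops </proc/modules and paste the output into GDB after Ctrl + C.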

TODO: why can’t we break at early startup stuff such as:

./rungdb extract_kernel
./rungdb main

Maybe it is because they are being copied around at specific locations instead of being run directly from inside the main image, which is where the debug information points to?

QEMU’s -gdb GDB breakpoints are set on virtual addresses, so you can in theory debug userland processes as well.

You will generally want to use gdbserver for this as it is more reliable, but this method can overcome the following limitations of gdbserver:

- the emulator does not support host to guest networking. This seems to be the case for gem5: gem5 host to guest networking

- cannot see the start of the init process easily

- gdbserver alters the working of the kernel, and makes your run less representative

Known limitations of direct userland debugging:

- the kernel might switch context to another process or to the kernel itself, e.g. on a system call, and then TODO confirm the PC would go to weird places and source code would be missing.

- TODO step into shared libraries. If I attempt to load them explicitly:

  (gdb) sharedlibrary ../../staging/lib/libc.so.0
  No loaded shared libraries match the pattern `../../staging/lib/libc.so.0'.

  since GDB does not know that libc is loaded.

Custom init process:

- Shell 1:

  ./run -d -e 'init=/sleep_forever.out'

- Shell 2:

  ./rungdb-user kernel_module-1.0/user/sleep_forever.out main

BusyBox custom init process:

- Shell 1:

  ./run -d -e 'init=/bin/ls'

- Shell 2:

  ./rungdb-user busybox-1.26.2/busybox ls_main

This follows BusyBox' convention of calling the main for each executable as <exec>_main since the busybox executable has many "mains".
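The multi-call trick itself is simple: one binary looks at the name it was invoked through (argv[0], i.e. the symlink name) and jumps to the matching <exec>_main. A toy shell version of that dispatch, with made-up applets, just to show the idea; the real BusyBox does this in C with a much larger applet table.

```sh
# Toy applets standing in for BusyBox's ls_main, echo_main, etc.
ls_main() { echo "ls_main called with: $*"; }
echo_main() { printf '%s\n' "$*"; }

# Dispatch on the basename of $1, which plays the role of argv[0].
multicall() (
  applet=${1##*/}   # /bin/ls -> ls
  shift
  case $applet in
    ls|echo) "${applet}_main" "$@" ;;
    *) echo "$applet: applet not found" >&2; exit 127 ;;
  esac
)
```

This is also why the breakpoint goes on ls_main inside busybox-1.26.2/busybox rather than on a separate ls executable: all the mains live in that one file.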

BusyBox default init process:

- Shell 1:

  ./run -d

- Shell 2:

  ./rungdb-user busybox-1.26.2/busybox init_main

This cannot be debugged in another way without modifying the source, or /sbin/init exits early with:

"must be run as PID 1"

Non-init process:

- Shell 1:

  ./run -d

- Shell 2:

  ./rungdb-user kernel_module-1.0/user/myinsmod.out main

- Shell 1 after the boot finishes:

  /myinsmod.out /hello.ko

This is the least reliable setup as there might be other processes that use the given virtual address.

TODO: on QEMU, it works on x86 and aarch64 but fails on arm as follows:

- Shell 1:

  ./run -a arm

- Shell 2: wait for boot to finish, and run:

  ./rungdb-user -a arm kernel_module-1.0/user/myinsmod.out main

- Shell 1:

  /myinsmod.out /hello.ko

The problem is that the b main that we do inside ./rungdb-user says:

Cannot access memory at address 0x107b8

However, if we do a Ctrl + C, and then a direct:

b *0x107b8

it works. Why?! On gem5, x86 can also give the Cannot access memory at address error, so maybe it is also unreliable on QEMU, and works just by coincidence.

GDB can call functions as explained at: https://stackoverflow.com/questions/1354731/how-to-evaluate-functions-in-gdb

However this is failing for us:

- some symbols are not visible to call even though b sees them

- for those that are, call fails with an E14 error

E.g.: if we break on sys_write on /count.sh:

>>> call printk(0, "asdf")
Could not fetch register "orig_rax"; remote failure reply 'E14'
>>> b printk
Breakpoint 2 at 0xffffffff81091bca: file kernel/printk/printk.c, line 1824.
>>> call fdget_pos(fd)
No symbol "fdget_pos" in current context.
>>> b fdget_pos
Breakpoint 3 at 0xffffffff811615e3: fdget_pos. (9 locations)
>>>

even though fdget_pos is the first thing sys_write does:

SYSCALL_DEFINE3(write, unsigned int, fd, const char __user *, buf,
        size_t, count)
{
    struct fd f = fdget_pos(fd);

KGDB is kernel dark magic that allows you to GDB the kernel on real hardware without any extra hardware support.

It is useless with QEMU since we already have full system visibility with -gdb, but this is a good way to learn it.

Cheaper than JTAG (free) and easier to set up (all you need is serial), but with less visibility as it depends on the kernel working, so e.g. it dies on panic and does not see the boot sequence.

Usage:

./run -k
./rungdb -k

In GDB:

c

In QEMU:

/count.sh &
/kgdb.sh

In GDB:

b sys_write
c
c
c
c

And now you can count from GDB!

If you do: b sys_write immediately after ./rungdb -k, it fails with KGDB: BP remove failed: <address>. I think this is because it would break too early on the boot sequence, and KGDB is not yet ready.


In QEMU:

/kgdb-mod.sh

In GDB:

lx-symbols ../kernel_module-1.0/
b fop_write
c
c
c

and you now control the count.

TODO: if I pass -ex lx-symbols to the gdb command, just like done for QEMU -gdb, the kernel oopses. How to automate this step?

If you modify runqemu to use:

-append kgdboc=kbd

instead of kgdboc=ttyS0,115200, you enter a different debugging mode called KDB.

Usage: in QEMU:

[0]kdb> go

Boot finishes, then:

/kgdb.sh

And you are back in KDB. Now you can:

[0]kdb> help
[0]kdb> bp sys_write
[0]kdb> go

And you will break whenever sys_write is hit.

The other KDB commands allow you to step instructions, view memory, registers and some higher level kernel runtime data.

But TODO I don’t think you can see where you are in the kernel source code and line step as from GDB, since the kernel source is not available on guest (ah, if only debugging information supported full source).

Step debug userland processes to understand how they are talking to the kernel.

Guest:

/gdbserver.sh /myinsmod.out /hello.ko

Host:

./rungdbserver kernel_module-1.0/user/myinsmod.out

You can find the executable with:

find out/x86_64/buildroot/build -name myinsmod.out

TODO: automate the path finding:

- using the executable from under out/x86_64/buildroot/target would be easier as the path is the same as in guest, but unfortunately those executables are stripped to make the guest smaller. BR2_STRIP_none=y should disable stripping, but makes the image way larger.

- out/x86_64/buildroot/staging/ would be even better than target/ as the docs say that this directory contains binaries before they were stripped. However, only a few binaries are pre-installed there by default, and it seems to be a manual per package thing.

  E.g. pciutils has for lspci:

  define PCIUTILS_INSTALL_STAGING_CMDS
  	$(TARGET_MAKE_ENV) $(MAKE1) -C $(@D) $(PCIUTILS_MAKE_OPTS) \
  		PREFIX=$(STAGING_DIR)/usr SBINDIR=$(STAGING_DIR)/usr/bin \
  		install install-lib install-pcilib
  endef

  and the docs describe the *_INSTALL_STAGING per package config, which is normally set for shared library packages.

  Feature request: https://bugs.busybox.net/show_bug.cgi?id=10386
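Until that is solved, a naive way to automate the search is to take the first regular file with the right name under the build directory and strip the directory prefix, which gives the form that ./rungdbserver takes above. This is only a sketch with a made-up function name: it assumes the default out/x86_64/buildroot/build layout and unique executable names, and silently picks an arbitrary match otherwise.

```sh
# Print the path of executable $1 relative to build directory $2
# (default out/x86_64/buildroot/build), e.g. kernel_module-1.0/user/myinsmod.out.
find_build_exe() (
  name=$1
  build_dir=${2:-out/x86_64/buildroot/build}
  # -type f skips symlinks; head keeps only the first match
  match=$(find "$build_dir" -type f -name "$name" | head -n 1)
  [ -n "$match" ] || exit 1
  printf '%s\n' "${match#"$build_dir"/}"
)
```

Usage: ./rungdbserver "$(find_build_exe myinsmod.out)".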

An implementation overview can be found at: https://reverseengineering.stackexchange.com/questions/8829/cross-debugging-for-mips-elf-with-qemu-toolchain/16214#16214

As usual, different archs work with:

./rungdbserver -a arm kernel_module-1.0/user/myinsmod.out

BusyBox executables are all symlinks, so if you do on guest:

/gdbserver.sh ls

on host you need:

./rungdbserver busybox-1.26.2/busybox

Our setup gives you the rare opportunity to step debug libc and other system libraries e.g. with:

b open
c

Or simply by stepping into calls:

s

This is made possible by the GDB command:

set sysroot ${buildroot_out_dir}/staging

which automatically finds unstripped shared libraries on the host for us.

The portability of the kernel and toolchains is amazing: change an option and most things magically work on completely different hardware.

To use arm instead of x86 for example:

./build -a arm
./run -a arm

Debug:

./run -a arm -d
# On another terminal.
./rungdb -a arm

Known quirks of the supported architectures are documented in this section.

TODOs:

- only managed to run in the terminal interface (but weirdly a blank QEMU window is still opened)

- GDB not connecting to KGDB. Possibly linked to -serial stdio. See also: https://stackoverflow.com/questions/14155577/how-to-use-kgdb-on-arm

/poweroff.out does not exit either QEMU or gem5: the terminal just hangs after the message:

reboot: System halted

A blunt resolution for QEMU is to do a Ctrl + C on the host, or run on another shell:

pkill qemu

On gem5, it is possible to use the m5 instrumentation from guest as a good workaround:

m5 exit

It does work on aarch64 however, presumably because of magic virtio functionality.

As usual, we use Buildroot’s recommended QEMU aarch64 setup.

This makes aarch64 a bit different from arm:

- uses -M virt. https://wiki.qemu.org/Documentation/Platforms/ARM explains:

  Most of the machines QEMU supports have annoying limitations (small amount of RAM, no PCI or other hard disk, etc) which are there because that’s what the real hardware is like. If you don’t care about reproducing the idiosyncrasies of a particular bit of hardware, the best choice today is the "virt" machine.

The -M virt machine has some limitations, e.g. I could not pass -drive if=scsi as for arm, and so Snapshot fails.

Keep in mind that MIPS has the worst support compared to our other architectures due to the smaller community. Patches welcome as usual.

TODOs:

- networking is not working.

- GDB step debug does not work properly, does not find start_kernel

When the Linux kernel finishes booting, it runs an executable as the first and only userland process.

This init process is then responsible for setting up the entire userland (or destroying everything when you want to have fun).

This typically means reading some configuration files (e.g. /etc/initrc) and forking a bunch of userland executables based on those files.

systemd provides a "popular" init implementation for desktop distros as of 2017.

BusyBox provides its own minimalistic init implementation which Buildroot, and therefore this repo, uses by default.

To have more control over the system, you can replace BusyBox’s init with your own.

The following method replaces init and evals a command from the Kernel command line parameters:

./run -E 'echo "asdf qwer";insmod /hello.ko;/poweroff.out'

It is basically a shortcut for:

./run -e 'init=/eval.sh - lkmc_eval="insmod /hello.ko;/poweroff.out"'

although -E is smarter:

- allows quoting and newlines by using base64 encoding, see: Kernel command line parameters escaping

- automatically chooses between init= and rdinit= for you, see: Path to init

so you should almost always use it, unless you are really counting each cycle ;-)

If the script is large, you can add it to a gitignored file and pass that to -E as in:

echo '
insmod /hello.ko
/poweroff.out
' > gitignore.sh
./run -E "$(cat gitignore.sh)"

or add it to a file in the guest root filesystem and rebuild:

echo '#!/bin/sh
insmod /hello.ko
/poweroff.out
' > rootfs_overlay/gitignore.sh
chmod +x rootfs_overlay/gitignore.sh
./build
./run -e 'init=/gitignore.sh'

Remember that if your init returns, the kernel will panic. There are just two non-panic possibilities:

- run forever in a loop or long sleep

- poweroff the machine

Just using BusyBox' poweroff at the end of the init does not work and the kernel panics:

./run -E poweroff

because BusyBox' poweroff tries to do some fancy stuff like killing init, likely to allow userland to shutdown nicely.

But this fails when we are init itself!

poweroff works more brutally and effectively if you add -f:

./run -E 'poweroff -f'

but why not just use our super simple and effective /poweroff.out and be done with it?

If you rely on something that BusyBox' init set up for you like networking, you could do:

./run -f 'lkmc_eval="insmod /hello.ko;wget -S google.com;/poweroff.out;"'

The lkmc_eval option gets evaled by our default S98 startup script if present.

Alternatively, add them to a new init.d entry to run at the end of the BusyBox init:

cp rootfs_overlay/etc/init.d/S98 rootfs_overlay/etc/init.d/S99.gitignore
vim rootfs_overlay/etc/init.d/S99.gitignore
./build
./run

and they will be run automatically before the login prompt.

Scripts under /etc/init.d are run by /etc/init.d/rcS, which gets called by the line ::sysinit:/etc/init.d/rcS in /etc/inittab.
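A minimal sketch of what evaluating lkmc_eval from an init.d script can look like. It is hypothetical, not a copy of our S98: it assumes the value arrives as an environment variable, which is what the kernel does with unrecognized key=value parameters (see Path to init), and that BusyBox init passes that environment on to rcS and its scripts.

```sh
#!/bin/sh
# Hypothetical init.d-style script: eval the lkmc_eval kernel command line
# parameter if it was given, otherwise do nothing.
run_lkmc_eval() {
  if [ -n "${lkmc_eval-}" ]; then
    eval "$lkmc_eval"
  fi
}

run_lkmc_eval
```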

The init is selected at:

- initrd or initramfs system: /init, a custom one can be set with the rdinit= kernel command line parameter

- otherwise: default is /sbin/init, followed by some other paths, a custom one can be set with init=

The kernel parses parameters from the kernel command line up to "-"; if it doesn’t recognize a parameter and it doesn’t contain a '.', the parameter gets passed to init: parameters with '=' go into init’s environment, others are passed as command line arguments to init. Everything after "-" is passed as an argument to init.

And you can try it out with:

./run -e 'init=/init_env_poweroff.sh - asdf=qwer zxcv'

Also note how the annoying dash - also gets passed as a parameter to init, which makes it impossible to use this method for most executables.

Finally, the docs are lying: arguments with dots that come after - are still treated specially (of the form subsystem.somevalue) and disappear:

./run -e 'init=/init_env_poweroff.sh - /poweroff.out'
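The behaviour above makes more sense when modelled on the kernel side. As far as we can tell from unknown_bootoption() in init/main.c and parse_args() in kernel/params.c of 4.x kernels, the separator the kernel looks for is two dashes, --, so a single - is just another unknown parameter: it lands in init’s argv, and the words after it keep being parsed, which is why the dotted /poweroff.out disappears. The following is a simplified sketch of those rules, assuming space-separated words with no quoting, and with the parameters that the kernel itself recognizes passed in by hand as the first argument:

```sh
# Simplified model of how a 4.x kernel splits its command line.
# $1: space-separated names the kernel recognizes itself (e.g. "root init").
# $2: the command line. No quoting support: words are split on spaces.
split_cmdline() (
  known=$1
  set -f  # no glob expansion of the words below
  after_dashes=
  for word in $2; do
    if [ -n "$after_dashes" ]; then
      echo "argv: $word"   # everything after -- goes to init's argv as-is
      continue
    fi
    if [ "$word" = "--" ]; then
      after_dashes=1
      continue
    fi
    name=${word%%=*}       # part before the first =
    case " $known " in
      *" $name "*) echo "kernel: $word"; continue ;;
    esac
    case $name in
      *.*) echo "module-param: $word" ;;  # dotted name: never reaches init
      *)
        case $word in
          *=*) echo "env: $word" ;;       # goes into init's environment
          *) echo "argv: $word" ;;        # goes into init's argv
        esac
        ;;
    esac
  done
)
```

Feeding it the two command lines above with "root init" as the known list gives argv: - for the lone dash and module-param: /poweroff.out for the dotted argument, which lines up with both quirks.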

We disable networking by default because it starts a userland process, and we want to keep the number of userland processes to a minimum to make the system more understandable.

Enable:

/sbin/ifup -a

Disable:

/sbin/ifdown -a

Test:

wget google.com

BusyBox' ping does not work with hostnames even when networking is working fine:

ping google.com

To enable networking by default, use the methods documented at Automatic startup commands

You can make QEMU or gem5 run faster by enabling KVM with:

./run -K

but it was broken in gem5 with pending patches: https://www.mail-archive.com/gem5-users@gem5.org/msg15046.html

KVM uses the KVM Linux kernel feature of the host to run most instructions natively.

We don’t enable KVM by default because:

- only works if the architecture of the guest equals that of the host.

  We have only tested / supported it on x86, but it is rumoured that QEMU and gem5 also have ARM KVM support if you are running an ARM desktop for some weird reason :-)

- limits visibility, since more things are running natively:

  - can’t use GDB

  - can’t do instruction tracing

- kernel boots are already fast enough without -enable-kvm

The main use case for -enable-kvm in this repository is to test if something that takes a long time to run is functionally correct.

For example, when porting a benchmark to Buildroot, you can first use QEMU’s KVM to test that the benchmark is producing the correct results, before analysing them more deeply in gem5, which runs much slower.

Only tested successfully in x86_64.

Build:

./build -b br2_x11
./run -x

We don’t build X11 by default because it takes a considerable amount of time (about 20%), and is not expected to be used by most users: you need to pass the -x flag to enable it.

Inside QEMU:

startx

And then from the GUI you can start exciting graphical programs such as:

xcalc
xeyes

More details: https://unix.stackexchange.com/questions/70931/how-to-install-x11-on-my-own-linux-buildroot-system/306116#306116

Not sure how well that graphics stack represents real systems, but if it does it would be a good way to understand how it works.

TODO 9076c1d9bcc13b6efdb8ef502274f846d8d4e6a1 I’m 100% sure that it was working before, but I didn’t run it forever, and it stopped working at some point. Needs bisection, on whatever commit last touched x11 stuff.

-show-cursor did not help, I just get to see the host cursor, but the guest cursor still does not move.

Doing:

watch -n 1 grep i8042 /proc/interrupts

shows that interrupts do happen when mouse and keyboard presses are done, so I expect that something is wrong with either:

- QEMU. Same behaviour if I try the host’s QEMU 2.10.1 however.

- X11 configuration. We do have BR2_PACKAGE_XDRIVER_XF86_INPUT_MOUSE=y.

/var/log/Xorg.0.log contains the following interesting lines:

[ 27.549] (II) LoadModule: "mouse"
[ 27.549] (II) Loading /usr/lib/xorg/modules/input/mouse_drv.so
[ 27.590] (EE) <default pointer>: Cannot find which device to use.
[ 27.590] (EE) <default pointer>: cannot open input device
[ 27.590] (EE) PreInit returned 2 for "<default pointer>"
[ 27.590] (II) UnloadModule: "mouse"

The file /dev/input/mice does not exist.

On ARM, startx hangs at a message:

vgaarb: this pci device is not a vga device

and nothing shows on the screen, and:

grep EE /var/log/Xorg.0.log

says:

(EE) Failed to load module "modesetting" (module does not exist, 0)

A friend told me this but I haven’t tried it yet:

- xf86-video-modesetting is likely the missing ingredient, but it does not seem possible to activate it from Buildroot currently without patching things.

- xf86-video-fbdev should work as well, but we need to make sure fbdev is enabled, and maybe add some line to the Xorg.conf

Also if I do:

cat /dev/urandom > /dev/fb0

the screen fills up with random colors, so I think it can truly work.

Haven’t tried it, doubt it will work out of the box! :-)

The kernel can boot from a CPIO file, which is a directory serialization format much like tar: https://superuser.com/questions/343915/tar-vs-cpio-what-is-the-difference

The bootloader, which for us is QEMU itself, is then configured to put that CPIO into memory, and tell the kernel that it is there.

With this setup, you don’t even need to give a root filesystem to the kernel, it just does everything in memory in a ramfs.

To enable initrd instead of the default ext2 disk image, do:

./build -i
./run -i

Notice how it boots fine, even though this leads to not giving QEMU the -drive option, as can be verified with:

cat ./run.log

Also as expected, there is no filesystem persistency, since we are doing everything in memory:

date >f
poweroff
cat f
# can't open 'f': No such file or directory

which can be good for automated tests, as it ensures that you are using a pristine unmodified system image every time.

One downside of this method is that it has to put the entire filesystem into memory, and could lead to a panic:

end Kernel panic - not syncing: Out of memory and no killable processes...

This can be solved by increasing the memory with:

./run -im 256M

The main ingredients to get initrd working are:

- BR2_TARGET_ROOTFS_CPIO=y: make Buildroot generate images/rootfs.cpio in addition to the other images.

  It is also possible to compress that image with other options.

- qemu -initrd: make QEMU put the image into memory and tell the kernel about it.

- CONFIG_BLK_DEV_INITRD=y: compile the kernel with initrd support, see also: https://unix.stackexchange.com/questions/67462/linux-kernel-is-not-finding-the-initrd-correctly/424496#424496

  Buildroot forces that option when BR2_TARGET_ROOTFS_CPIO=y is given

https://unix.stackexchange.com/questions/89923/how-does-linux-load-the-initrd-image asks how the mechanism works in more detail.

Most modern desktop distributions have an initrd in their root disk to do early setup.

The rationale for this is described at: https://en.wikipedia.org/wiki/Initial_ramdisk

One obvious use case is having an encrypted root filesystem: you keep the initrd in an unencrypted partition, and then set up decryption from there.

I think GRUB knows how to read common disk formats, and then loads that initrd into memory with a /boot/grub/grub.cfg directive of type:

initrd /initrd.img-4.4.0-108-generic

initramfs is just like initrd, but you also glue the image directly to the kernel image itself.

So the only argument that QEMU needs is -kernel: no -drive, not even -initrd! Pretty cool.

Try it out with:

./build -I -l && ./run -I

The -l (ell) should only be needed the first time you switch between initramfs and a different root filesystem method (ext2 or cpio), to overcome: https://stackoverflow.com/questions/49260466/why-when-i-change-br2-linux-kernel-custom-config-file-and-run-make-linux-reconfi. After that, just run:

./build -I && ./run -I

It is interesting to see how this increases the size of the kernel image if you do a:

ls -lh out/x86_64/buildroot/images/bzImage

before and after using initramfs, since the .cpio is now glued to the kernel image.

In the background, it uses BR2_TARGET_ROOTFS_INITRAMFS, and this makes the kernel config option CONFIG_INITRAMFS_SOURCE point to the CPIO that will be embedded in the kernel image.

http://nairobi-embedded.org/initramfs_tutorial.html shows a full manual setup.

TODO we were not able to get it working yet: https://stackoverflow.com/questions/49261801/how-to-boot-the-linux-kernel-with-initrd-or-initramfs-with-gem5

By default, we use a .config that is a mixture of:

- Buildroot’s minimal per machine .config, which has the minimal options needed to boot
- our kernel_config_fragment, which enables options we want to play with

If you want to just use your own exact .config instead, do:

./build -K myconfig -l

Beware that Buildroot can override some of the configurations we make with sed no matter what, e.g. it forces CONFIG_BLK_DEV_INITRD=y when BR2_TARGET_ROOTFS_CPIO is on, so you might want to double check as explained at Find the kernel config. TODO check if there is a way to prevent that patching and maybe patch Buildroot for it, it is too fuzzy. People should be able to just build with whatever .config they want.

Build configuration can be observed in guest with:

/conf.sh

or on host:

cat out/*/buildroot/build/linux-custom/.config
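Sometimes grepping the .config is not enough, for example when you need to check whether Buildroot forced CONFIG_BLK_DEV_INITRD=y on you. The minimal Python sketch below parses a kernel .config into a dict and treats "# CONFIG_X is not set" as "n". The helper names are hypothetical, and the glob pattern is an assumption based on the host path above.

```python
import glob
import re

_NOT_SET = re.compile(r"^# (CONFIG_\w+) is not set$")


def parse_kconfig(text: str) -> dict:
    """Map CONFIG_* symbols to their raw values ('y', 'm', '"str"', 'n')."""
    config = {}
    for raw in text.splitlines():
        line = raw.strip()
        match = _NOT_SET.match(line)
        if match:
            config[match.group(1)] = "n"
        elif line.startswith("CONFIG_") and "=" in line:
            key, value = line.split("=", 1)
            config[key] = value
    return config


def check_built_configs(pattern="out/*/buildroot/build/linux-custom/.config",
                        wanted=("CONFIG_BLK_DEV_INITRD",)):
    """Print each wanted option for every built kernel .config found."""
    for path in sorted(glob.glob(pattern)):
        with open(path) as f:
            config = parse_kconfig(f.read())
        for option in wanted:
            # Symbols absent from .config are effectively disabled.
            print(path, option, config.get(option, "n"))
```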

We try to use the latest possible kernel major release version.

In QEMU:

cat /proc/version

or in the source:

cd linux
git log | grep -E ' Linux [0-9]+\.' | head

# Last point before our patches.

last_mainline_revision=v4.15

next_mainline_revision=v4.16

cd linux

# Create a branch before the rebase in case things go wrong.

git checkout -b "lkmc-${last_mainline_revision}"

git remote set-url origin git@github.com:************/linux.git

git push

git remote add up git://git.kernel.org/pub/scm/linux/kernel/git/stable/linux-stable.git

git fetch up

git rebase --onto "$next_mainline_revision" "$last_mainline_revision"

cd ..

./build -lk

# Manually fix broken kernel modules if necessary.

git branch "buildroot-2017.08-linux-${last_mainline_revision}"

git add .

# And update the README to show off.

git commit -m "Linux ${next_mainline_revision}"

# Test the heck out of it, especially kernel modules and GDB.

./run

git push

During the update, all your kernel modules may break, since the kernel's internal API is not stable.

The breakages are usually trivial, e.g. things moving to other headers or into sub-structs.

The userland, however, should simply not break, as Linus enforces strict backwards compatibility of userland interfaces.

This backwards compatibility is just awesome, it makes getting and running the latest master painless.

This also makes this repo the perfect setup to develop the Linux kernel.

The kernel is not forward compatible, however, so downgrading the Linux kernel also requires downgrading the userland, to the latest Buildroot branch that supports that kernel.

The default Linux kernel version is bumped in Buildroot with commit messages of type:

linux: bump default to version 4.9.6

So you can try:

git log --grep 'linux: bump default to version'

Those commits change BR2_LINUX_KERNEL_LATEST_VERSION in /linux/Config.in.

You should then look up if there is a branch that supports that kernel. Staying on branches is a good idea as they will get backports, in particular ones that fix the build as newer host versions come out.

You can also try those on the Ctrl + Alt + F3 text console of your Ubuntu host, but it is much more fun inside a VM!

Must be run in graphical mode.

Stop the cursor from blinking:

echo 0 > /sys/class/graphics/fbcon/cursor_blink

Rotate the console 90 degrees!

echo 1 > /sys/class/graphics/fbcon/rotate

Requires CONFIG_FRAMEBUFFER_CONSOLE_ROTATION=y.

Documented under: fb/.

TODO: font and keymap. Mentioned at: https://cmcenroe.me/2017/05/05/linux-console.html and I think can be done with Busybox loadkmap and loadfont, we just have to understand their formats, related:

Trace a single function:

cd /sys/kernel/debug/tracing/
# Stop tracing.
echo 0 > tracing_on
# Clear previous trace.
echo '' > trace
# List the available tracers, and pick one.
cat available_tracers
echo function > current_tracer
# List all functions that can be traced
# cat available_filter_functions
# Choose one.
echo __kmalloc >set_ftrace_filter
# Confirm that only __kmalloc is enabled.
cat enabled_functions
echo 1 > tracing_on
# Latest events.
head trace
# Observe trace continuously, and drain seen events out.
cat trace_pipe &

Sample output:

# tracer: function

#

# entries-in-buffer/entries-written: 97/97 #P:1

#

# _-----=> irqs-off

# / _----=> need-resched

# | / _---=> hardirq/softirq

# || / _--=> preempt-depth

# ||| / delay

# TASK-PID CPU# |||| TIMESTAMP FUNCTION

# | | | |||| | |

head-228 [000] .... 825.534637: __kmalloc <-load_elf_phdrs

head-228 [000] .... 825.534692: __kmalloc <-load_elf_binary

head-228 [000] .... 825.534815: __kmalloc <-load_elf_phdrs

head-228 [000] .... 825.550917: __kmalloc <-__seq_open_private

head-228 [000] .... 825.550953: __kmalloc <-tracing_open

head-229 [000] .... 826.756585: __kmalloc <-load_elf_phdrs

head-229 [000] .... 826.756627: __kmalloc <-load_elf_binary

head-229 [000] .... 826.756719: __kmalloc <-load_elf_phdrs

head-229 [000] .... 826.773796: __kmalloc <-__seq_open_private

head-229 [000] .... 826.773835: __kmalloc <-tracing_open

head-230 [000] .... 827.174988: __kmalloc <-load_elf_phdrs

head-230 [000] .... 827.175046: __kmalloc <-load_elf_binary

head-230 [000] .... 827.175171: __kmalloc <-load_elf_phdrs
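To get a quick overview of who calls the traced function, you can post-process the trace on the host. The Python sketch below counts callers in function tracer output. It assumes the "TASK-PID [CPU] FLAGS TIMESTAMP: FUNCTION <-CALLER" layout seen in the sample above. `count_callers` is a hypothetical helper.

```python
import re
from collections import Counter

# TASK-PID [CPU] FLAGS TIMESTAMP: FUNCTION <-CALLER
_FUNCTION_LINE = re.compile(
    r"^\s*(?P<task>.+)-(?P<pid>\d+)\s+\[(?P<cpu>\d+)\]\s+\S+\s+"
    r"(?P<timestamp>\d+\.\d+):\s+(?P<function>\S+)\s+<-(?P<caller>\S+)"
)


def count_callers(trace_text: str) -> Counter:
    """Count how many times each caller appears in function tracer output."""
    callers = Counter()
    for line in trace_text.splitlines():
        if line.lstrip().startswith("#"):
            continue  # skip the header comment block
        match = _FUNCTION_LINE.match(line)
        if match:
            callers[match.group("caller")] += 1
    return callers
```

For example, after copying the output of `cat trace` from the guest to the host, `count_callers(text).most_common(3)` lists the busiest callers of __kmalloc.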

Trace all possible functions, and draw a call graph:

echo 1 > max_graph_depth
echo 1 > events/enable
echo function_graph > current_tracer

Sample output:

# CPU DURATION FUNCTION CALLS

# | | | | | | |

0) 2.173 us | } /* ntp_tick_length */

0) | timekeeping_update() {

0) 4.176 us | ntp_get_next_leap();

0) 5.016 us | update_vsyscall();

0) | raw_notifier_call_chain() {

0) 2.241 us | notifier_call_chain();

0) + 19.879 us | }

0) 3.144 us | update_fast_timekeeper();

0) 2.738 us | update_fast_timekeeper();

0) ! 117.147 us | }

0) | _raw_spin_unlock_irqrestore() {

0) 4.045 us | _raw_write_unlock_irqrestore();

0) + 22.066 us | }

0) ! 265.278 us | } /* update_wall_time */

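The + and ! markers flag long durations. According to the kernel's ftrace documentation, + means that the function took more than 10 us and ! means more than 100 us, which matches the sample above.

Each enable file under the events/ tree enables a certain set of trace events: the higher up in the tree the enable file is, the more events it enables.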
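The kernel's ftrace documentation states that function_graph prints + before durations above 10 us and ! before durations above 100 us. The Python sketch below reproduces that choice of marker. The larger markers (#, *, @, $) are an assumption based on the mark table in kernel/trace/trace_output.c of recent kernels, so double check them against your kernel version.

```python
# (threshold in nanoseconds, marker), largest first. The + and ! entries are
# the documented ones; the larger ones are assumed from trace_output.c.
_MARKS = [
    (1_000_000_000, "$"),  # > 1 s
    (100_000_000, "@"),    # > 100 ms
    (10_000_000, "*"),     # > 10 ms
    (1_000_000, "#"),      # > 1000 us
    (100_000, "!"),        # > 100 us
    (10_000, "+"),         # > 10 us
]


def duration_mark(duration_us: float) -> str:
    """Marker printed before a function_graph duration, or ' ' if none."""
    duration_ns = duration_us * 1000
    for threshold_ns, mark in _MARKS:
        if duration_ns > threshold_ns:
            return mark
    return " "
```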

Results (boot not excluded):

| Commit | Arch | Simulator | Instruction count |
|---|---|---|---|
| 7228f75ac74c896417fb8c5ba3d375a14ed4d36b | arm | QEMU | 680k |
| 7228f75ac74c896417fb8c5ba3d375a14ed4d36b | arm | gem5 AtomicSimpleCPU | 160M |
| 7228f75ac74c896417fb8c5ba3d375a14ed4d36b | arm | gem5 HPI | 155M |
| 7228f75ac74c896417fb8c5ba3d375a14ed4d36b | x86_64 | QEMU | 3M |
| 7228f75ac74c896417fb8c5ba3d375a14ed4d36b | x86_64 | gem5 AtomicSimpleCPU | 528M |

QEMU:

./trace-boot -a x86_64

Sample output:

instruction count all: 1833863
entry address: 0x1000000
instruction count firmware: 20708
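The "instruction count firmware" figure counts the trace entries that come before the first time execution reaches the kernel entry address. The Python sketch below shows that counting logic. It assumes the trace has already been decoded into an iterable of guest PCs, one per logged execution. This is not necessarily how ./trace-boot is implemented, and `count_before_entry` is a hypothetical name.

```python
def count_before_entry(pcs, entry_address=0x1000000):
    """Return (total, firmware): all trace entries, and the ones seen before
    the first hit of entry_address (None if it is never reached)."""
    total = 0
    firmware = None
    for pc in pcs:
        if firmware is None and pc == entry_address:
            firmware = total
        total += 1
    return total, firmware
```

A result of None corresponds to the situation that the notes describe for -a arm, where the ELF entry point never shows up in the trace.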

gem5:

./run -a aarch64 -g -E 'm5 exit'
# Or:
# ./run -a arm -g -E 'm5 exit' -- --cpu-type=HPI --caches
grep sim_insts out/aarch64/gem5/m5out/stats.txt

Notes:

- 0x1000000 is the address where QEMU puts the Linux kernel with -kernel in x86. It can be found from:

readelf -e out/x86_64/buildroot/build/linux-*/vmlinux | grep Entry

TODO confirm further. If I try to break there with:

./rungdb *0x1000000

I get no corresponding source line. Also note that this is not actually the first line that runs, since kernel messages such as "early console in extract_kernel" have already shown on screen at that point. This does not break at all:

./rungdb extract_kernel

The entry address only appears once in every log I’ve seen so far, checked with:

grep 0x1000000 trace.txt

Then, when we count the instructions that run before the kernel entry point, there are only about 100k of them, which is insignificant compared to the kernel boot itself.

TODO: -a arm and -a aarch64 do not count firmware instructions properly, because the entry point address of the ELF file does not show up on the trace at all.

- We can also discount the instructions after init runs by using readelf to get the initial address of init. One easy way to do that now is to just run:

./rungdb-user kernel_module-1.0/user/poweroff.out main

and get that address from the traces, e.g. if the address is 4003a0, then we search:

grep -n 4003a0 trace.txt

I have observed a single match for that instruction, so it must be the init, and there were only 20k instructions after it, so the impact is negligible.

- on arm, you need to hit Ctrl + C once after seeing the message "reboot: System halted", due to arm shutdown
- to disable networking: is replacing init enough? CONFIG_NET=n did not significantly reduce instruction counts, so maybe replacing init is enough.
- gem5 simulates memory latencies, so I think that the CPU loops idle while waiting for memory, and counts will be higher.
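The `readelf -e ... | grep Entry` step reads the e_entry field of the ELF header. The minimal Python sketch below reads the same field directly. It handles 32-bit and 64-bit files of either byte order, and it should print the same Entry point address as readelf. The helper names are hypothetical.

```python
import struct


def elf_entry_point(header: bytes) -> int:
    """Return e_entry from the first bytes (>= 32) of an ELF file."""
    if header[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    ei_class, ei_data = header[4], header[5]  # class: 1=32-bit, 2=64-bit; data: 1=LSB, 2=MSB
    byte_order = "<" if ei_data == 1 else ">"
    word = "Q" if ei_class == 2 else "I"
    # e_entry follows e_ident[16], e_type (2 bytes), e_machine (2), e_version (4).
    (entry,) = struct.unpack_from(byte_order + word, header, 24)
    return entry


def elf_entry_point_of(path: str) -> int:
    with open(path, "rb") as f:
        return elf_entry_point(f.read(64))
```

For example, `hex(elf_entry_point_of(p))` with p taken from `glob.glob("out/x86_64/buildroot/build/linux-*/vmlinux")`.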

I once got UML running on a minimal Buildroot setup at: https://unix.stackexchange.com/questions/73203/how-to-create-rootfs-for-user-mode-linux-on-fedora-18/372207#372207

But in part because it is dying, I didn’t spend much effort to integrate it into this repo, although it would be a good fit in principle, since it is essentially a virtualization method.

Maybe some brave soul will send a pull request one day.

Let’s have some fun.

Those only work in graphical mode.

I think most are implemented under:

drivers/tty

TODO find all.

Scroll up / down the terminal:

Shift + PgDown
Shift + PgUp

Or inside ./qemumonitor:

sendkey shift-pgup
sendkey shift-pgdown

Magic SysRq keys (https://en.wikipedia.org/wiki/Magic_SysRq_key) can be tested through the monitor with:

sendkey alt-sysrq-c

or you can try the much more boring method of:

echo c > /proc/sysrq-trigger

Implemented in

drivers/tty/sysrq.c

Switch between TTYs with:

sendkey alt-left
sendkey alt-right
sendkey alt-f1
sendkey alt-f2

TODO: only works after I do a chvt 1, but then how to put a terminal on alt-f2? I just get a blank screen. One day, one day:

Also tried to add some extra lines to /etc/inittab of type:

console::respawn:/sbin/getty -n -L -l /loginroot.sh ttyS1 0 vt100

but nothing changed.

Note that on Ubuntu 17.10, to get to the text terminal from the GUI we first need Ctrl + Alt + Fx, and once in the text terminals, Alt + Fn works without Ctrl.

Some QEMU specific features to play with and limitations to cry over.

QEMU allows us to take snapshots at any time through the monitor.

You can then restore CPU, memory and disk state back at any time.

The disk image must be in qcow2 format for that to work.

To test it out, log into the VM and run:

/count.sh

On another shell, take a snapshot:

echo 'savevm my_snap_id' | ./qemumonitor

The counting continues.

Restore the snapshot:

echo 'loadvm my_snap_id' | ./qemumonitor

and the counting goes back to where we saved. This shows that CPU and memory states were reverted.

We can also verify that the disk state is also reversed. Guest:

echo 0 >f

Monitor:

echo 'savevm my_snap_id' | ./qemumonitor

Guest:

echo 1 >f

Monitor:

echo 'loadvm my_snap_id' | ./qemumonitor

Guest:

cat f

And the output is 0.

Our setup does not allow for snapshotting while using initrd.

We have added and interacted with a few educational hardware models in QEMU.

This is useful to learn:

- how to create new hardware models for QEMU. Overview: https://stackoverflow.com/questions/28315265/how-to-add-a-new-device-in-qemu-source-code
- how the Linux kernel interacts with hardware

To get started, have a look at the "Hardware device drivers" section under kernel_module/README.adoc, and try to run those modules, and then grep the QEMU source code.

The 9P protocol allows sharing a mountable filesystem between guest and host.

With networking it’s boring: we can just use any of the old tools like sshfs and NFS.

One advantage of this method is that it can run without sudo on the host, unlike NFS, and without having to pass host credentials to the guest, unlike sshfs.

TODO performance compared to NFS.

As usual, we have already set everything up for you. On host:

cd 9p
uname -a > host

Guest:

cd /mnt/9p
cat host
uname -a > guest

Host:

cat guest

The main ingredients for this are:

- 9P settings in our kernel_config_fragment
- 9p entry on our rootfs_overlay/etc/fstab. Alternatively, you could also mount your own with:

mkdir /mnt/my9p
mount -t 9p -o trans=virtio,version=9p2000.L host0 /mnt/my9p

- launch QEMU with -virtfs as in our run script. When we tried:

security_model=mapped

writes from guest failed due to user mismatch problems: https://serverfault.com/questions/342801/read-write-access-for-passthrough-9p-filesystems-with-libvirt-qemu

The feature is documented at: https://wiki.qemu.org/Documentation/9psetup

Seems possible! Let’s do it:

It would be uber awesome if we could overlay a 9p filesystem on top of the root.

That would allow us to have a second Buildroot target/ directory, and without any extra configs, keep the root filesystem image small, which implies:

- less host disk usage: no need to copy the entire target/ to the image again
- faster rebuild turnaround:
  - no need to regenerate the root filesystem at all and reboot
  - overcomes the check_bin_arch problem: Buildroot rebuild is slow when the root filesystem is large
- no need to worry about BR2_TARGET_ROOTFS_EXT2_SIZE

But TODO we didn’t get it working yet:

Test with the script:

/overlayfs.sh

It shows that files from upper/ do not show up on the root.

Furthermore, if you try to mount the root elsewhere to prepare for a chroot:

/overlayfs.sh / /overlay
# chroot /overlay

it does not work well either, because nested filesystems like /proc do not show up on the mount:

ls /overlay/proc

A less good alternative is to set LD_LIBRARY_PATH on the 9p mount and run executables directly from the mount.

Even more awesome than chroot would be to pivot_root, but I couldn’t get that working either:

First ensure that networking is enabled before trying out anything in this section: Networking

Guest, BusyBox nc enabled with CONFIG_NC=y:

nc -l -p 45455

Host, nc from the netcat-openbsd package:

echo asdf | nc localhost 45455

Then asdf appears on the guest.

Only this specific port works by default since we have forwarded it on the QEMU command line.

We use this exact procedure to connect to gdbserver.
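If netcat is not installed on the host, the Python sketch below is an equivalent of `echo asdf | nc localhost 45455` that uses only the standard library. It assumes the QEMU forwarding of port 45455 described above is active and that `nc -l -p 45455` is listening in the guest. `send_to_guest` is a hypothetical helper.

```python
import socket


def send_to_guest(payload: bytes, host: str = "localhost", port: int = 45455) -> None:
    """Connect to the forwarded TCP port and send payload, then close, like nc."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(payload)
```

Calling `send_to_guest(b"asdf\n")` should make asdf appear on the guest.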

Not enabled by default due to the build / runtime overhead. To enable, build with:

./build -B 'BR2_PACKAGE_OPENSSH=y'

Then inside the guest turn on sshd:

/sshd.sh

and finally on host:

ssh root@localhost -p 45456

Could not do port forwarding from host to guest, and therefore could not use gdbserver: https://stackoverflow.com/questions/48941494/how-to-do-port-forwarding-from-guest-to-host-in-gem5

TODO. There is guestfwd, which sounds analogous to the hostfwd used in the other direction, but I was not able to get it working, e.g.:

-netdev user,hostfwd=tcp::45455-:45455,guestfwd=tcp::45456-,id=net0 \

gives:

Could not open guest forwarding device 'guestfwd.tcp.45456'

Related:

This has nothing to do with the Linux kernel, but it is cool:

sudo apt-get install qemu-user
./build -a arm
cd out/arm/buildroot/target
qemu-arm -L . bin/ls

This uses QEMU’s user-mode emulation mode that allows us to run cross-compiled userland programs directly on the host.

The reason this is cool is that ls is not statically linked, but since we have the Buildroot image, we are still able to find the dynamic linker and the shared libraries at the given path.

In other words, much cooler than:

arm-linux-gnueabi-gcc -o hello -static hello.c
qemu-arm hello

It is also possible to compile QEMU user mode from source with BR2_PACKAGE_HOST_QEMU_LINUX_USER_MODE=y, but then your compilation will likely fail with:

package/qemu/qemu.mk:110: *** "Refusing to build qemu-user: target Linux version newer than host's.". Stop.

This happens because we are using a bleeding edge kernel, and the error comes from a sanity check in the Buildroot QEMU package.

Anyways, this warns us that the userland emulation will likely not be reliable, which is good to know. TODO: where is it documented the host kernel must be as new as the target one?

GDB step debugging is also possible with:

qemu-arm -g 1234 -L . bin/ls
../host/usr/bin/arm-buildroot-linux-uclibcgnueabi-gdb -ex 'target remote localhost:1234'

TODO: find source. Lazy now.

When you start interacting with QEMU hardware, it is useful to see what is going on inside of QEMU itself.

This is of course trivial since QEMU is just an userland program on the host, but we make it a bit easier with:

./run -D

Then you could:

b edu_mmio_read
c

And in QEMU:

/pci.sh

Just make sure that you never click inside the QEMU window when doing that, otherwise your mouse gets captured forever, and the only solution I can find is to go to a TTY with Ctrl + Alt + F1 and kill QEMU.

You can still send key presses to QEMU however even without the mouse capture, just either click on the title bar, or alt tab to give it focus.

QEMU has a mechanism to log all instructions executed to a file.

To do it for the Linux kernel boot we have a helper:

./trace-boot -a x86_64

You can then inspect the instructions with:

less ./out/x86_64/qemu/trace.txt

This functionality relies on the following setup:

- ./configure --enable-trace-backends=simple. This logs in a binary format to the trace file. It makes execution 3x faster than with the default log trace backend, which logs human readable data to stdout.

Logging with the default log backend greatly slows down the CPU, and in particular leads to this boot message:

All QSes seen, last rcu_sched kthread activity 5252 (4294901421-4294896169), jiffies_till_next_fqs=1, root ->qsmask 0x0
swapper/0 R running task 0 1 0 0x00000008
ffff880007c03ef8 ffffffff8107aa5d ffff880007c16b40 ffffffff81a3b100
ffff880007c03f60 ffffffff810a41d1 0000000000000000 0000000007c03f20
fffffffffffffedc 0000000000000004 fffffffffffffedc ffffffff00000000
Call Trace:
<IRQ> [<ffffffff8107aa5d>] sched_show_task+0xcd/0x130
[<ffffffff810a41d1>] rcu_check_callbacks+0x871/0x880
[<ffffffff810a799f>] update_process_times+0x2f/0x60

during which the boot appears to hang for a considerable time.

- patch the QEMU source to remove the disable from exec_tb in the trace-events file. See also: https://rwmj.wordpress.com/2016/03/17/tracing-qemu-guest-execution/

We can further use Binutils' addr2line to get the line that corresponds to each address:

./trace-boot -a x86_64
./trace2line -a x86_64
less ./out/x86_64/qemu/trace-lines.txt

The format is as follows:

39368 _static_cpu_has arch/x86/include/asm/cpufeature.h:148

Where:

- 39368: number of consecutive times that a line ran. Makes the output much shorter and more meaningful
- _static_cpu_has: name of the function that contains the line
- arch/x86/include/asm/cpufeature.h:148: file and line
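The leading count is a run-length encoding of consecutive identical source locations. The Python sketch below performs that collapsing step. It assumes each traced address has already been resolved to a (function, file:line) pair, for example with `addr2line -f -e vmlinux ADDRESS`, which prints the function name followed by file:line. This is not necessarily how ./trace2line works internally, and `collapse_lines` is a hypothetical name.

```python
from itertools import groupby


def collapse_lines(locations):
    """Merge consecutive repeats of (function, file:line) pairs into
    'count function file:line' strings, keeping their original order."""
    return [
        f"{sum(1 for _ in group)} {function} {location}"
        for (function, location), group in groupby(locations)
    ]
```

Only consecutive repeats are merged, so a line that runs again later, after other code, starts a new entry.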

This could of course all be done with GDB, but it would likely be too slow to be practical.

TODO do even more awesome offline post-mortem analysis things, such as:

- detect if we are in userspace or kernelspace. Should be a simple matter of reading the
- read kernel data structures, and determine the current thread. Maybe we can reuse / extend the kernel’s GDB Python scripts??

QEMU supports deterministic record and replay by saving external inputs, which would be awesome to understand the kernel, as you would be able to examine a single run as many times as you would like.

This mechanism first requires a trace to be generated on an initial record run. The trace is then used on the replay runs to make them deterministic.

Unfortunately it is not working in the current QEMU: https://stackoverflow.com/questions/46970215/how-to-use-qemus-deterministic-record-and-replay-feature-for-a-linux-kernel-boo

Alternatively, mozilla/rr claims it is able to run QEMU: but using it would require you to step through QEMU code itself. Likely doable, but do you really want to?

gem5 also has a tracing mechanism, as documented at: http://www.gem5.org/Trace_Based_Debugging

Try it out with:

./run -a aarch64 -E 'm5 exit' -G '--debug-flags=Exec' -g

The trace file is located at:

less out/aarch64/gem5/m5out/trace.txt

but be warned, it is humongous, at 16Gb.

It made the runtime about 4x slower on the P51, with or without .gz compression.

The list of available debug flags can be found with:

./run -a aarch64 -G --debug-help -g

but for meaningful descriptions you need to look at the source code:

less gem5/gem5/src/cpu/SConscript

The default Exec format reads symbol names from the Linux kernel image and shows them, which is pretty awesome if you ask me.

TODO can we get just the executed addresses out of gem5? The following gets us closer, but not quite:

./run -a aarch64 -E 'm5 exit' -G '--debug-flags=ExecEnable,ExecKernel,ExecUse' -g

We could of course just pipe it to stdout and awk it up.

Sometimes in Ubuntu 14.04, after the QEMU SDL GUI starts, it does not get updated after keyboard strokes, and there are artifacts like disappearing text.

We have not managed to track this problem down yet, but the following workaround always works:

Ctrl + Shift + U
Ctrl + C
root

This started happening when we switched to building QEMU through Buildroot, and has not been observed on later Ubuntu.

Using text mode is another workaround if you don’t need GUI features.

gem5 is a system simulator, much like QEMU: http://gem5.org/

For the most part, just add the -g option to the QEMU commands and everything should magically work:

./configure -g && ./build -a arm -g && ./run -a arm -g

On another shell:

./gem5-shell

Unfortunately, a full rebuild is currently needed even if you already have QEMU working, see: gem5 and QEMU with the same kernel configuration

Tested architectures:

- arm
- aarch64
- x86_64

Like QEMU, gem5 also has a syscall emulation mode (SE), but in this tutorial we focus on the full system emulation mode (FS). For a comparison see: https://stackoverflow.com/questions/48986597/when-should-you-use-full-system-fs-vs-syscall-emulation-se-with-userland-program

- advantages of gem5:
  - simulates a generic, more realistic, pipelined and optionally out of order CPU cycle by cycle, including a realistic DRAM memory access model with latencies, caches and page table manipulations. This allows us to:
    - do much more realistic performance benchmarking with it, which makes absolutely no sense in QEMU, which is purely functional
    - make certain functional cache observations that are not possible in QEMU, e.g.:
      - use Linux kernel APIs that flush memory like DMA, which are crucial for driver development. In QEMU, the driver would still work even if we forget to flush caches.
      - TODO spectre / meltdown

    It is of course not truly cycle accurate, as that:
    - would require exposing proprietary information of the CPU designs: https://stackoverflow.com/questions/17454955/can-you-check-performance-of-a-program-running-with-qemu-simulator/33580850#33580850
    - would make the simulation even slower TODO confirm, by how much

    but the approximation is reasonable.

    It is used mostly for microarchitecture research purposes: when you are making a new chip technology, you don’t really need to specialize enormously to an existing microarchitecture, but rather develop something that will work with a wide range of future architectures.
  - runs are deterministic by default, unlike QEMU, which has a special [record-and-replay] mode that requires first playing the content once and then replaying it
- disadvantage of gem5: slower than QEMU, see: gem5 vs QEMU performance

  This implies that the user base is much smaller, since there are no Android devs.

  Instead, we have only chip makers, who keep everything that really works closed, and researchers, who can’t version track or document code properly >:-) And this implies that:
  - the documentation is scarcer
  - it takes longer to support new hardware features

  Well, not that AOSP is that much better anyways.
- not sure: gem5 has a BSD license while QEMU has GPL

  This suits chip makers that want to distribute forks with secret IP to their customers.

  On the other hand, the chip makers tend to upstream less, and the project becomes more crappy on average :-)

We have benchmarked a Linux kernel boot with the commands:

# Try to manually hit Ctrl + C as soon as system shutdown message appears.
time ./run -a arm -e 'init=/poweroff.out'
time ./run -a arm -E 'm5 exit' -g
time ./run -a arm -E 'm5 exit' -g -- --caches --cpu-type=HPI
time ./run -a x86_64 -e 'init=/poweroff.out'
time ./run -a x86_64 -e 'init=/poweroff.out' -- -enable-kvm
time ./run -a x86_64 -e 'init=/poweroff.out' -g

and the results were:

| Arch | Emulator | Subtype | Time | N times slower than QEMU | Instruction count | Commit |
|---|---|---|---|---|---|---|
| arm | QEMU | | 6 seconds | 1 | | da79d6c6cde0fbe5473ce868c9be4771160a003b |
| arm | gem5 | AtomicSimpleCPU | 1 minute 40 seconds | 17 | | da79d6c6cde0fbe5473ce868c9be4771160a003b |
| arm | gem5 | HPI | 10 minutes | 100 | | da79d6c6cde0fbe5473ce868c9be4771160a003b |
| aarch64 | QEMU | | 1.3 seconds | 1 | 170k | b6e8a7d1d1cb8a1d10d57aa92ae66cec9bfb2d01 |
| aarch64 | gem5 | AtomicSimpleCPU | 1 minute | 43 | 110M | b6e8a7d1d1cb8a1d10d57aa92ae66cec9bfb2d01 |
| x86_64 | QEMU | | 3.8 seconds | 1 | 1.8M | 4cb8a543eeaf7322d2e4493f689735cb5bfd48df |
| x86_64 | QEMU | KVM | 1.3 seconds | 0.3 | | 4cb8a543eeaf7322d2e4493f689735cb5bfd48df |
| x86_64 | gem5 | AtomicSimpleCPU | 6 minutes 30 seconds | 102 | 630M | 4cb8a543eeaf7322d2e4493f689735cb5bfd48df |

Tested on the P51.

One methodology problem is that gem5 and QEMU were run with different kernel configs, due to the problem described at: gem5 and QEMU with the same kernel configuration. This could have been improved if we had normalized by instruction counts, but we didn’t think of that at the time.
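The "N times slower than QEMU" column is the ratio of the wall-clock times. The small Python sketch below recomputes that ratio from the table's Time strings. `parse_duration` and `slowdown` are hypothetical helpers, not repo scripts, and because the table's times are rounded, a recomputed ratio can differ slightly from the value in the table.

```python
import re

_UNIT_SECONDS = {"second": 1, "minute": 60, "hour": 3600}


def parse_duration(text: str) -> float:
    """Convert strings like '1 minute 40 seconds' or '1.3 seconds' to seconds."""
    total = 0.0
    for amount, unit in re.findall(r"(\d+(?:\.\d+)?)\s*(second|minute|hour)s?", text):
        total += float(amount) * _UNIT_SECONDS[unit]
    return total


def slowdown(simulator_time: str, qemu_time: str) -> float:
    """How many times longer the simulator run took than the QEMU baseline."""
    return parse_duration(simulator_time) / parse_duration(qemu_time)
```

For example, `slowdown("1 minute 40 seconds", "6 seconds")` is about 16.7, which the table rounds to 17.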

OK, this is why we used gem5 in the first place, performance measurements!

Let’s benchmark Dhrystone which Buildroot provides.

The most flexible way is to do:

# Generate a checkpoint after Linux boots.
# The boot takes a while, be patient young Padawan.
printf 'm5 exit' >readfile.gitignore
./run -a aarch64 -g -E 'm5 checkpoint;m5 readfile > a.sh;sh a.sh'
# Restore the checkpoint, and run the benchmark with parameter 1000.
# We skip the boot completely, saving time!
printf 'm5 resetstats;dhrystone 1000;m5 exit' >readfile.gitignore
./run -a aarch64 -g -- -r 1
./gem5-ncycles -a aarch64
# Now with another parameter 10000.
printf 'm5 resetstats;dhrystone 10000;m5 exit' >readfile.gitignore
./run -a aarch64 -g -- -r 1
./gem5-ncycles -a aarch64

These commands output the approximate number of CPU cycles it took Dhrystone to run.

A more naive and simpler to understand approach would be a direct:

./run -a aarch64 -g -E 'm5 checkpoint;m5 resetstats;dhrystone 10000;m5 exit'

but the problem is that this method does not make it easy to run a different script without running the boot again, see: gem5 checkpoint restore and run a different script

A few imperfections of our benchmarking method are:

- when we do m5 resetstats and m5 exit, some time passes between them and the actual start and end of the benchmark, e.g. before the exec system call returns
- the benchmark outputs to stdout, which adds extra cycles on top of the actual computation. But TODO: how to get the output to check that it is correct without such IO cycles?

Solutions to these problems include:

- modify benchmark code with instrumentation directly, as PARSEC and ARM employees have been doing: https://github.com/arm-university/arm-gem5-rsk/blob/aa3b51b175a0f3b6e75c9c856092ae0c8f2a7cdc/parsec_patches/xcompile-patch.diff#L230
- monitor known addresses

Those problems should be insignificant if the benchmark runs for long enough however.

Now you can play a fun little game with your friends:

- pick a computational problem
- make a program that solves the computational problem, and writes its result to stdout
- write the code that runs the correct computation in the smallest number of cycles possible

To find out why your program is slow, a good first step is to have a look at the statistics for the run:

cat out/aarch64/gem5/m5out/stats.txt

Whenever we run m5 dumpstats or m5 exit, a section with the following format is added to that file:

---------- Begin Simulation Statistics ----------
[the stats]
---------- End Simulation Statistics ----------
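Each m5 dumpstats or m5 exit appends one such section, so it is handy to split the file per dump before comparing runs. The Python sketch below assumes each stat line has the `name value # description` layout with the value as the second whitespace-separated field. `parse_gem5_stats` is hypothetical, and the repo's ./gem5-ncycles may work differently.

```python
def parse_gem5_stats(text: str) -> list:
    """Split stats.txt into one dict per dump, mapping each stat name to
    its first value field, kept as a string."""
    dumps = []
    current = None
    for line in text.splitlines():
        if "Begin Simulation Statistics" in line:
            current = {}
        elif "End Simulation Statistics" in line:
            if current is not None:
                dumps.append(current)
            current = None
        elif current is not None:
            fields = line.split()
            if len(fields) >= 2:
                current[fields[0]] = fields[1]
    return dumps
```

For example, `int(parse_gem5_stats(open("out/aarch64/gem5/m5out/stats.txt").read())[-1]["sim_insts"])` gives the instruction count of the last dump.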

Besides optimizing a program for a given CPU setup, chip developers can also do the inverse, and optimize the chip for a given benchmark!

The rabbit hole is likely deep, but let’s scratch a bit of the surface.

./run -a arm -c 2 -g

Check with:

cat /proc/cpuinfo
getconf _NPROCESSORS_CONF

A quick ./run -g -- -h leads us to the options:

--caches --l1d_size=1024 --l1i_size=1024 --l2cache --l2_size=1024 --l3_size=1024

But keep in mind that it only affects benchmark performance of the most detailed CPU types:

| arch | CPU type | caches used |
|---|---|---|
| X86 | | no |
| X86 | | ?* |
| ARM | | no |
| ARM | | yes |

*: couldn’t test because of:

Cache sizes can in theory be checked with the methods described at: https://superuser.com/questions/55776/finding-l2-cache-size-in-linux:

getconf -a | grep CACHE
lscpu
cat /sys/devices/system/cpu/cpu0/cache/index2/size

but for some reason the Linux kernel is not seeing the cache sizes:

Behaviour breakdown:

- arm QEMU and gem5 (both AtomicSimpleCPU or HPI), x86 gem5: /sys files don’t exist, and getconf and lscpu values are empty
- x86 QEMU: /sys files exist, but getconf and lscpu values are still empty
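The Python sketch below reads the same sysfs cache attributes directly (level, type and size under each index* directory). It tolerates the case where the kernel does not expose them, which is what the breakdown reports for most of our setups. `sysfs_cache_sizes` is a hypothetical helper.

```python
from pathlib import Path


def _read(path: Path) -> str:
    """Contents of a sysfs attribute, or '' if the kernel does not provide it."""
    return path.read_text().strip() if path.exists() else ""


def sysfs_cache_sizes(cache_dir: str = "/sys/devices/system/cpu/cpu0/cache") -> dict:
    """Map e.g. 'L1 Data' -> '32K' for each index* directory, or {} if absent."""
    root = Path(cache_dir)
    if not root.is_dir():
        return {}
    sizes = {}
    for index in sorted(root.glob("index*")):
        key = f"L{_read(index / 'level')} {_read(index / 'type')}"
        sizes[key] = _read(index / "size")
    return sizes
```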

So we take a performance measurement approach instead:

./gem5-bench-cache -a aarch64
cat out/aarch64/gem5/bench-cache.txt

TODO: sort out HPI, and then paste results here. Why does the --cpu-type=HPI there always generate a switch_cpu, even if the original run was also on HPI?

TODO These look promising:

--list-mem-types --mem-type=MEM_TYPE --mem-channels=MEM_CHANNELS --mem-ranks=MEM_RANKS --mem-size=MEM_SIZE

TODO: how to verify this with the Linux kernel? Besides raw performance benchmarks.

TODO These look promising:

--ethernet-linkspeed --ethernet-linkdelay

and also: gem5-dist: https://publish.illinois.edu/icsl-pdgem5/

Clock frequency: TODO how does it affect performance in benchmarks?

./run -a arm -g -- --cpu-clock 10000000

Check with:

m5 resetstats && sleep 10 && m5 dumpstats

and then:

grep numCycles out/arm/gem5/m5out/stats.txt

TODO: why doesn’t this exist:

ls /sys/devices/system/cpu/cpu0/cpufreq

If you are benchmarking compiled programs instead of hand written assembly, remember that we configure Buildroot to disable optimizations by default with:

BR2_OPTIMIZE_0=y

to improve the debugging experience.

You will likely want to change that to:

BR2_OPTIMIZE_3=y

and do a full rebuild.

TODO: is it possible to compile a single package with optimizations enabled? In any case, this wouldn't be very representative, since calls to an unoptimized libc will also have an impact on performance. Kernel-wise it should be fine though, since the kernel requires -O2.

Buildroot built-in libraries, mostly under Libraries > Other:

-

Armadillo C++: linear algebra

-

fftw: Fourier transform

-

Eigen: linear algebra

-

Flann

-

GSL: various

-

liblinear

-

libspatialindex

-

libtommath

-

qhull

These are not yet enabled, but it should be easy to do so:

-

enable them in br2 and rebuild

-

create a test program that uses each library under kernel_module/user

External open source benchmarks. We will try to create Buildroot packages for them, add them to this repo, and potentially upstream:

Buildroot supports OpenBLAS, which makes everything trivial:

./build \
  -a arm \
  -B 'BR2_PACKAGE_OPENBLAS=y' \
;

and then inside the guest run our test program:

/openblas.out

For x86, you also need:

-B 'BR2_PACKAGE_OPENBLAS_TARGET="NEHALEM"'

to overcome this bug: https://bugs.busybox.net/show_bug.cgi?id=10856

sgemm_kernel.o: No such file or directory
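Both cases can be wrapped in one helper that only adds the NEHALEM target for x86. This is a hypothetical sketch: build_openblas and the BUILD override variable are not part of this repo, and x86_64 is assumed to be the -a value for the x86 guest.

```shell
# Hypothetical helper: build with OpenBLAS, adding the x86 workaround for
# https://bugs.busybox.net/show_bug.cgi?id=10856 when needed.
# BUILD is a hypothetical override that defaults to this repo's ./build.
build_openblas() {
    arch="$1"
    set -- -a "$arch" -B 'BR2_PACKAGE_OPENBLAS=y'
    case "$arch" in
        x86_64) set -- "$@" -B 'BR2_PACKAGE_OPENBLAS_TARGET="NEHALEM"' ;;
    esac
    "${BUILD:-./build}" "$@"
}
```

For example, build_openblas arm runs the same build as the command above.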

We have ported parts of the PARSEC benchmark for cross compilation at: https://github.com/************/parsec-benchmark

See the documentation on that repo to find out which benchmarks have been ported. Some of the benchmarks are segfaulting; they are documented in that repo.

There are two ways to run PARSEC with this repo:

-

without parsecmgmt, most likely what you want

./configure -gpq && ./build -a arm -B 'BR2_PACKAGE_PARSEC_BENCHMARK=y' -g && ./run -a arm -g

Once inside the guest, launch one of the test input sized benchmarks manually as in:

cd /parsec/ext/splash2x/apps/fmm/run
../inst/arm-linux.gcc/bin/fmm 1 < input_1

To find out how to run many of the benchmarks, have a look at the test.sh script of the parsec-benchmark repo.

From the guest, you can also run it as:

cd /parsec
./test.sh

but this might be a bit time consuming in gem5.

Running a benchmark of a size other than test, e.g. simsmall, requires a rebuild with:

./build \
  -a arm \
  -B 'BR2_PACKAGE_PARSEC_BENCHMARK=y' \
  -B 'BR2_PACKAGE_PARSEC_BENCHMARK_INPUT_SIZE="simsmall"' \
  -g \
  -- parsec-benchmark-reconfigure \
;

Large input may also require tweaking:

-

BR2_TARGET_ROOTFS_EXT2_SIZE if the unpacked inputs are large

-

Memory size, unless you want to meet the OOM killer, which is admittedly kind of fun
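The rebuild options for a given input size can be generated by a helper. A hypothetical sketch: parsec_build and the BUILD override variable are not part of this repo, the accepted sizes are PARSEC's standard set (not checked against which sizes the package actually supports), and the optional third argument sets BR2_TARGET_ROOTFS_EXT2_SIZE for large inputs.

```shell
# Hypothetical helper: rebuild PARSEC for one input size.
# Usage: parsec_build ARCH SIZE [ROOTFS_SIZE]
parsec_build() {
    arch="$1" size="$2" rootfs_size="${3:-}"
    case "$size" in
        test|simdev|simsmall|simmedium|simlarge|native) ;;
        *) echo "unknown PARSEC input size: $size" >&2; return 1 ;;
    esac
    set -- -a "$arch" \
        -B 'BR2_PACKAGE_PARSEC_BENCHMARK=y' \
        -B "BR2_PACKAGE_PARSEC_BENCHMARK_INPUT_SIZE=\"$size\""
    # Only grow the root filesystem when explicitly asked to.
    if [ -n "$rootfs_size" ]; then
        set -- "$@" -B "BR2_TARGET_ROOTFS_EXT2_SIZE=\"$rootfs_size\""
    fi
    "${BUILD:-./build}" "$@" -g -- parsec-benchmark-reconfigure
}
```

For example, parsec_build arm simsmall matches the rebuild command above.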

test.sh only contains the run commands for the test size, and cannot be used for simsmall.

The easiest thing to do is to scroll up on the host shell after the build, and look for a line of the form:

Running /full/path/to/linux-kernel-module-cheat/out/aarch64/buildroot/build/parsec-benchmark-custom/ext/splash2x/apps/ocean_ncp/inst/aarch64-linux.gcc/bin/ocean_ncp -n2050 -p1 -e1e-07 -r20000 -t28800

and then tweak the command found in test.sh accordingly.
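If you save the build output, e.g. by appending 2>&1 | tee build.log to the ./build command, those lines can be converted to guest paths automatically. A minimal sketch, assuming the parsec-benchmark-custom build directory ends up as /parsec in the guest, which matches the paths used earlier in this section; parsec_guest_cmds is a hypothetical name.

```shell
# Turn the "Running ..." lines of a saved host build log into guest commands
# by replacing the host build directory prefix with /parsec/.
parsec_guest_cmds() {
    sed -n 's|^Running .*/parsec-benchmark-custom/|/parsec/|p' "$1"
}
```

Benchmarks that read input files, like fmm above, still need to be started from their run directory.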

Yes, we do run the benchmarks on the host, just to unpack / generate inputs. They are expected to fail to run, since they were built for the guest instead of the host, including for the x86_64 guest, which has a different interpreter than the host's (see the output of file myexecutable).

The rebuild is required because we unpack input files on the host.

Separating input sizes also makes it possible to create smaller images when only running the smaller benchmarks.

This limitation exists because parsecmgmt generates the input files just before running via the Bash scripts, but we can’t run parsecmgmt on gem5 as it is too slow!

One option would be to do that inside the guest with QEMU, but this would require a full rebuild, see gem5 and QEMU with the same kernel configuration.

Also, we can’t generate all input sizes at once, because many of them have the same name and would overwrite one another…

PARSEC simply wasn’t designed with non native machines in mind…

Most users won’t want to use this method because:

-

running the parsecmgmt Bash scripts takes forever before the actual benchmarks even start on gem5. Running on QEMU is feasible, but it is not the main use case, since QEMU cannot be used for performance measurements

-

it requires putting the full .tar inputs on the guest, which makes the image twice as large (1x for the .tar, 1x for the unpacked input files)

It would be awesome if it were possible to use this method, since this is what PARSEC supports officially, and so:

-

you don’t have to dig into what raw command to run

-

there is an easy way to run all the benchmarks in one go to test them out

-

you can just run any of the benchmarks that you want

but it simply is not feasible in gem5 because it takes too long.

If you still want to run this, try it out with:

./build \
  -a aarch64 \
  -B 'BR2_PACKAGE_PARSEC_BENCHMARK=y' \
  -B 'BR2_PACKAGE_PARSEC_BENCHMARK_PARSECMGMT=y' \
  -B 'BR2_TARGET_ROOTFS_EXT2_SIZE="3G"' \
  -g \
  -- parsec-benchmark-reconfigure \
;

And then you can run it just as you would on the host:

cd /parsec/
bash
. env.sh
parsecmgmt -a run -p splash2x.fmm -i test

If you want to remove PARSEC later, Buildroot doesn’t provide an automated package removal mechanism as documented at: https://github.com/buildroot/buildroot/blob/2017.08/docs/manual/rebuilding-packages.txt#L90, but the following procedure should be satisfactory:

rm -rf \
  ./out/common/dl/parsec-* \
  ./out/arm-gem5/buildroot/build/parsec-* \
  ./out/arm-gem5/buildroot/build/packages-file-list.txt \
  ./out/arm-gem5/buildroot/images/rootfs.* \
  ./out/arm-gem5/buildroot/target/parsec-* \
;
./build -a arm -g
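The same cleanup can be parameterized on the output directory and the per-build directory name (arm-gem5 above). A hypothetical helper, not part of this repo; it only removes files, so run ./build yourself afterwards.

```shell
# Remove PARSEC files from one Buildroot build.
# Usage: remove_parsec OUT_DIR BUILD_NAME, e.g. remove_parsec ./out arm-gem5
remove_parsec() {
    if [ "$#" -ne 2 ]; then
        echo "usage: remove_parsec OUT_DIR BUILD_NAME" >&2
        return 1
    fi
    out="$1" name="$2"
    # Unmatched globs stay literal, and rm -f ignores missing paths.
    rm -rf \
        "$out"/common/dl/parsec-* \
        "$out/$name"/buildroot/build/parsec-* \
        "$out/$name"/buildroot/build/packages-file-list.txt \
        "$out/$name"/buildroot/images/rootfs.* \
        "$out/$name"/buildroot/target/parsec-*
}
```

For example: remove_parsec ./out arm-gem5 && ./build -a arm -g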

If you end up going inside parsec-benchmark/parsec-benchmark to hack up the benchmark (you will!), these tips will be helpful.

Buildroot was not designed to deal with large images, and currently cross rebuilds are a bit slow, due to some image generation and validation steps.

A few workarounds are:

-

develop on the host first as much as you can. Our PARSEC fork supports it.

If you do this, don’t forget to do a:

cd parsec-benchmark/parsec-benchmark
git clean -xdf .

before going for the cross compile build.

-

patch Buildroot to work well, and keep cross compiling all the way. This should be totally viable, and we should do it.

Don’t forget to explicitly rebuild PARSEC with:

./build -a arm -g -b br2_parsec parsec-benchmark-reconfigure

You may also want to test if your patches are still functionally correct inside QEMU first, since it is a faster emulator.

-

sell your soul, and compile natively inside the guest. We won't do this, not only because it is evil, but also because Buildroot explicitly does not support it: https://buildroot.org/downloads/manual/manual.html#faq-no-compiler-on-target
ARM employees have been known to do this: https://github.com/arm-university/arm-gem5-rsk/blob/aa3b51b175a0f3b6e75c9c856092ae0c8f2a7cdc/parsec_patches/qemu-patch.diff

Analogous to QEMU:

./run -a arm -e 'init=/poweroff.out' -g

Internals: when we give --command-line= to gem5, it overrides the default command line, including some mandatory options which are required to boot properly.

Our run script hardcodes the required options in the default --command-line and appends extra options given by -e.

To find the default options in the first place, we removed --command-line and ran:

./run -a arm -g

and then looked at the line of the Linux kernel that starts with:

Kernel command line:
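When the boot output is saved to a file, e.g. by copying what the gem5 terminal shows, that line can be extracted with a one-line helper. A minimal sketch; kernel_cmdline is a hypothetical name. Once the guest has booted, cat /proc/cmdline inside it shows the same command line.

```shell
# Print the kernel command line recorded in a saved boot log,
# stripping any printk timestamp before the "Kernel command line:" marker.
kernel_cmdline() {
    sed -n 's/.*Kernel command line: //p' "$1"
}
```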

Analogous to QEMU, on the first shell:

./run -a arm -d -g

On the second shell:

./rungdb -a arm -g

On a third shell:

./gem5-shell

When you want to break, just do a Ctrl + C on the GDB shell, and then continue.

And we now see the boot messages, and then get a shell. Now try the /continue.sh procedure described for QEMU.

TODO: how to stop at start_kernel? gem5 listens for GDB by default, but unlike QEMU, it does not wait for a GDB connection before it starts running. So when GDB connects, we might have already passed start_kernel. Maybe --debug-break=0 can be used? https://stackoverflow.com/questions/49296092/how-to-make-gem5-wait-for-gdb-to-connect-to-reliably-break-at-start-kernel-of-th

TODO: GDB fails with: