IngestFlow

- Scalable & Fault-Tolerant Log Ingestion with IngestFlow: Harness Redpanda's Speed and Elasticsearch's Robust Search for Effortless Log Management

Contents

- Introduction

- Problem Statement

- Requirements

- Features Implemented

- Demo Video

- Solution Architecture

- Technologies Used

- Why this Architecture

- Benchmarking

- How it Can be Improved Further

- Commit Histories

- References Used

- Note of Thanks

🌐 Introduction

- Name: Niku Singh

- Email: nikusingh319@gmail.com, soapmactavishmw4@gmail.com

- Github Username: NIKU-SINGH

- LinkedIn: https://www.linkedin.com/in/niku-singh/

- Twitter: https://twitter.com/Niku_Singh_

- University: Dr B R Ambedkar National Institute of Technology, Jalandhar

🤔 Problem Statement

Develop a log ingestor system that can efficiently handle vast volumes of log data, and offer a simple interface for querying this data using full-text search or specific field filters.

Both the systems (the log ingestor and the query interface) can be built using any programming language of your choice.

The logs should be ingested (in the log ingestor) over HTTP, on port 3000.

📝 Requirements

Log Ingestor:

- Develop a mechanism to ingest logs in the provided format.

- Ensure scalability to handle high volumes of logs efficiently.

- Mitigate potential bottlenecks such as I/O operations, database write speeds, etc.

- Make sure that the logs are ingested via an HTTP server, which runs on port 3000 by default.

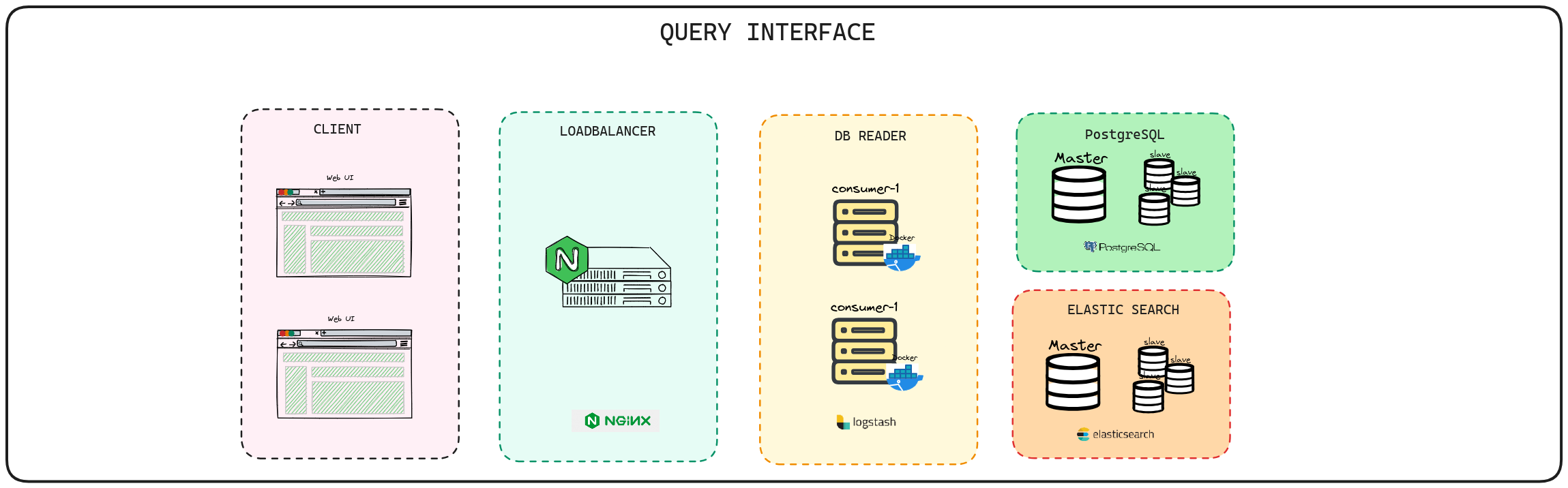
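A minimal sketch of such an ingestion endpoint, using only Node's built-in `http` module. The field names come from the filter list in this README. The validation rules, the `validateLog` and `createIngestServer` names, and the `sink` callback (standing in for the queue or database write) are assumptions for illustration, not the project's actual implementation.

```javascript
const http = require("node:http");

// Fields assumed to be required, non-empty strings (taken from the README's filter list).
const REQUIRED_FIELDS = ["level", "message", "resourceId", "timestamp", "traceId", "spanId", "commit"];

// Returns a list of validation problems; an empty list means the log is acceptable.
function validateLog(log) {
  if (log === null || typeof log !== "object" || Array.isArray(log)) {
    return ["body must be a JSON object"];
  }
  const errors = [];
  for (const field of REQUIRED_FIELDS) {
    if (typeof log[field] !== "string" || log[field] === "") {
      errors.push(`missing or empty field: ${field}`);
    }
  }
  if (typeof log.timestamp === "string" && log.timestamp !== "" && Number.isNaN(Date.parse(log.timestamp))) {
    errors.push("timestamp is not a parseable date");
  }
  return errors;
}

// Small helper to write a JSON response with the given status code.
function sendJson(res, status, payload) {
  res.writeHead(status, { "Content-Type": "application/json" });
  res.end(JSON.stringify(payload));
}

// Builds (but does not start) an HTTP server that accepts POSTed JSON logs.
// `sink` is a hypothetical callback that receives each valid log.
function createIngestServer(sink) {
  return http.createServer((req, res) => {
    if (req.method !== "POST") {
      sendJson(res, 405, { errors: ["use POST"] });
      return;
    }
    let body = "";
    req.on("data", (chunk) => { body += chunk; });
    req.on("end", () => {
      let log;
      try {
        log = JSON.parse(body);
      } catch {
        sendJson(res, 400, { errors: ["invalid JSON"] });
        return;
      }
      const errors = validateLog(log);
      if (errors.length > 0) {
        sendJson(res, 400, { errors });
        return;
      }
      sink(log);
      sendJson(res, 202, { status: "accepted" });
    });
  });
}

// To run on the default port: createIngestServer((log) => console.log(log)).listen(3000);
```

Handing each log to a callback keeps the HTTP handler short, so the slower write can be moved behind a queue without changing the endpoint.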

Query Interface:

- Offer a user interface (Web UI or CLI) for full-text search across logs.

- Include filters based on:

  - level

  - message

  - resourceId

  - timestamp

  - traceId

  - spanId

  - commit

  - metadata.parentResourceId

- Aim for efficient and quick search results.
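One way the filters above could map onto an Elasticsearch query is sketched below. It uses the standard `bool`, `match`, `term`, `range`, and `match_all` clauses of the Elasticsearch query DSL. The function name `buildLogQuery`, the `from`/`to` keys for a timestamp range, and the assumption that the exact-match fields are indexed as keyword values are illustrative and not taken from the project.

```javascript
// Fields assumed to be indexed as exact-match (keyword) values.
const EXACT_FIELDS = ["level", "resourceId", "traceId", "spanId", "commit", "metadata.parentResourceId"];

// Translates UI filters into an Elasticsearch search request body.
// `filters` is a flat object keyed by the filter names listed above, plus the
// hypothetical `from` and `to` keys for an optional timestamp range.
function buildLogQuery(filters = {}) {
  const must = [];
  const filter = [];

  // Full-text search on the message uses a scored `match` clause.
  if (filters.message) {
    must.push({ match: { message: filters.message } });
  }

  // Exact-value filters go into filter context, which skips relevance scoring.
  for (const field of EXACT_FIELDS) {
    if (filters[field]) {
      filter.push({ term: { [field]: filters[field] } });
    }
  }

  // Timestamp bounds become a single `range` clause.
  if (filters.from || filters.to) {
    const range = {};
    if (filters.from) range.gte = filters.from;
    if (filters.to) range.lte = filters.to;
    filter.push({ range: { timestamp: range } });
  }

  // With no filters at all, fall back to matching every log.
  if (must.length === 0 && filter.length === 0) {
    return { query: { match_all: {} } };
  }
  return { query: { bool: { must, filter } } };
}
```

For example, `buildLogQuery({ message: "timeout", level: "error" })` yields a `bool` query with one `match` clause on `message` and one `term` clause on `level`.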

⚙️ Features Implemented

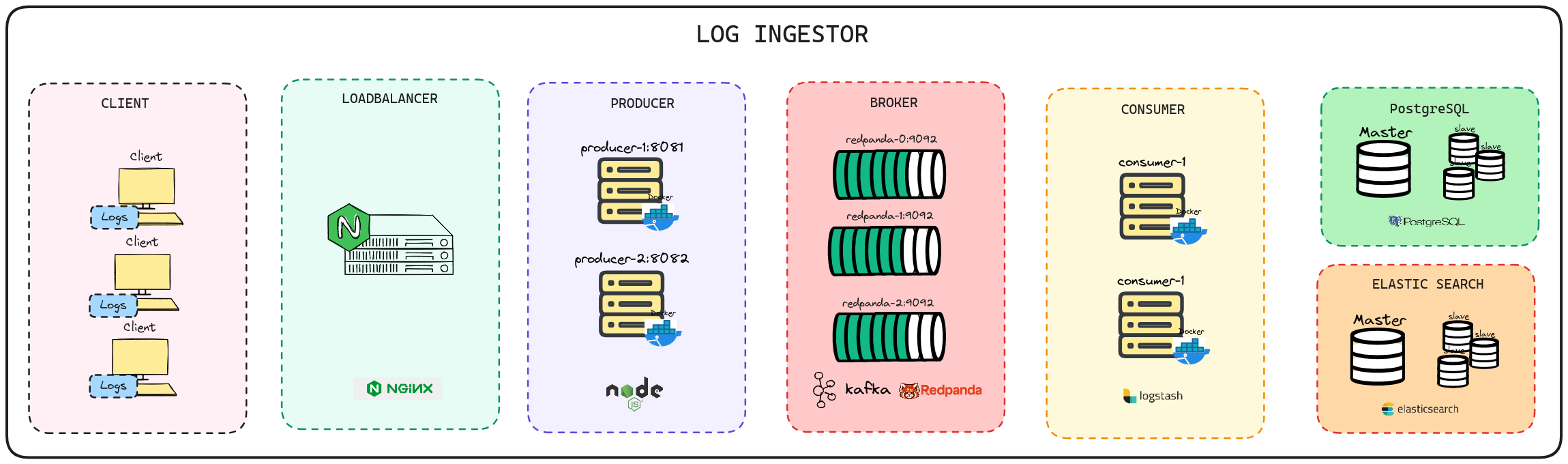

- Log Ingestor

- Ability to send HTTP responses

- Load balancing

- Redpanda and Kafka support

- Logstash integration

- Elasticsearch support

- PostgreSQL support

- Query Interface

📹 Demo Video

https://www.loom.com/share/cd05ff3e9dbe4963afdff4dc5de35f0a

💡 Solution Proposed

🏛️ Proposed Architecture

💻 Technologies Used

Frontend

| Technology Used | Reason |
|---|---|
| ReactJS | UI Development |
| Vite | Fast Development |
| Tailwind CSS | Styling Efficiency |
| Axios | HTTP Requests |

Backend

| Technology Used | Reason |
|---|---|
| Node.js | Server-Side JavaScript and Backend Development |
| Express | Minimalist Web Application Framework for Node.js |
| PostgreSQL | Robust Relational Database Management System |
| Elasticsearch | Distributed Search and Analytics Engine |
| Logstash | Data Processing and Ingestion Tool for Elasticsearch |
| Pino | Fast and Low Overhead Node.js Logger |
| Apache Kafka | Distributed Streaming Platform for Real-Time Data |
| Docker | Containerization |
| NGINX | Load Balancer and Web Server |
| Redpanda | Modern Streaming Platform Compatible with the Kafka API |

🏆 Why this Architecture

📊 Benchmarking

To assess the performance and scalability of the Node.js application, load-testing tools such as the following can be used:

- Artillery

- k6
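Before setting up one of these tools, a rough first impression of latency can be had from a few lines of Node.js. The sketch below is not a substitute for Artillery or k6. The `runLoad` and `percentile` names, the batch-based concurrency, and the default request counts are assumptions made for illustration, and it relies on the global `fetch` available in Node 18 and later.

```javascript
// Returns the p-th percentile (0-100) of `values` using the nearest-rank method.
function percentile(values, p) {
  if (values.length === 0) return NaN;
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

// Sends `total` POST requests in batches of `concurrency` and records each latency in ms.
// `url` and `payload` are placeholders supplied by the caller.
async function runLoad(url, payload, total = 100, concurrency = 10) {
  const latencies = [];
  let failures = 0;

  const sendOne = async () => {
    const start = performance.now();
    try {
      const res = await fetch(url, {
        method: "POST",
        headers: { "Content-Type": "application/json" },
        body: JSON.stringify(payload),
      });
      await res.arrayBuffer(); // read the full response before stopping the clock
      if (!res.ok) failures += 1;
    } catch {
      failures += 1; // network errors count as failures
    }
    latencies.push(performance.now() - start);
  };

  for (let sent = 0; sent < total; sent += concurrency) {
    const batchSize = Math.min(concurrency, total - sent);
    await Promise.all(Array.from({ length: batchSize }, sendOne));
  }

  return {
    total,
    failures,
    p50: percentile(latencies, 50),
    p95: percentile(latencies, 95),
  };
}

// Example: runLoad("http://localhost:3000", { message: "load test" }).then(console.log);
```

Reporting percentiles rather than an average makes slow outliers visible, which matters when looking for I/O or database write bottlenecks.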

🔄 How it can be Improved Further

⚒️ Usage

Forking the Repository

To get started with this project, fork the repository by clicking on the "Fork" button in the upper right corner of the page.

Local Installation

- Clone the forked repository to your local machine.

git clone https://github.com/dyte-submissions/november-2023-hiring-NIKU-SINGH.git

- Navigate to the project directory.

cd november-2023-hiring-NIKU-SINGH

- Install dependencies.

npm install

Docker Installation

- Ensure Docker is installed on your system. If not, download and install it from Docker's official website.

- Run the Docker container.

docker compose up --build

Sending Requests with cURL

Once the project is running either locally or using Docker, you can interact with the endpoint using cURL commands.

Example: Sending a POST request with a JSON payload to the endpoint

curl -X POST -H "Content-Type: application/json" -d '{"name": "Niku", "message": "Your app is lovely please Star and Fork it"}' http://localhost:3000
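The same request can also be sent from Node.js. The sketch below mirrors the cURL flags above using the global `fetch` (Node 18 and later). The `buildLogRequest` and `sendLog` helper names are hypothetical, and the shape of the server's response is not assumed, so only the status code is returned.

```javascript
// Builds the fetch options equivalent to the cURL flags (-X POST, -H, -d).
function buildLogRequest(payload) {
  return {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(payload),
  };
}

// Posts one payload to the ingestor and resolves with the HTTP status code.
async function sendLog(payload, url = "http://localhost:3000") {
  const res = await fetch(url, buildLogRequest(payload));
  return res.status;
}

// Example:
// sendLog({ name: "Niku", message: "Your app is lovely please Star and Fork it" })
//   .then((status) => console.log("status:", status));
```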