Solution for the Deepfake Detection Challenge.

Private LB score: 0.43452

Our solution consists of three EfficientNet-B7 models (we used the Noisy Student pre-trained weights). We did not use external data, except for pre-trained weights. One model runs on frame sequences (a 3D convolution has been added to each EfficientNet-B7 block). The other two models work frame by frame and differ in the size of the face crop and in the augmentations used during training. To tackle overfitting, we used the mixup technique on aligned real-fake pairs. In addition, we used the following augmentations: AutoAugment, Random Erasing, Random Crops, Random Flips, and video compression with random parameters. Video compression augmentation was done on the fly. To do this, short cropped tracks (50 frames each) were saved in PNG format, and at each training iteration they were loaded and re-encoded with random parameters using ffmpeg. Because of mixup, model predictions were “uncertain”, so at the inference stage model confidence was strengthened by a simple transformation. The final prediction was obtained by averaging the predictions of the models with weights proportional to confidence. The total training and preprocessing time is approximately 5 days on a DGX-1.

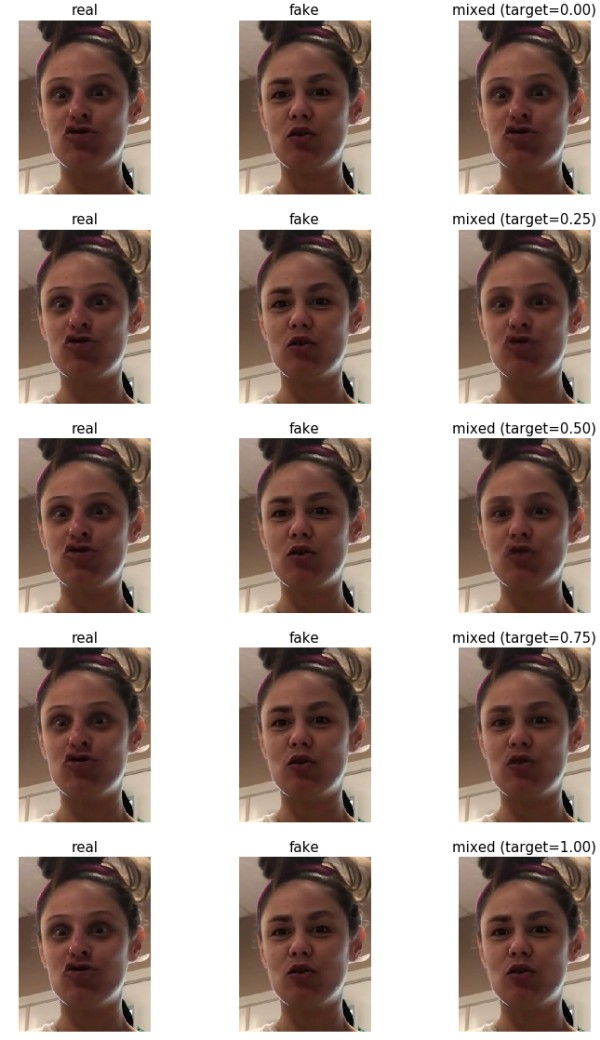

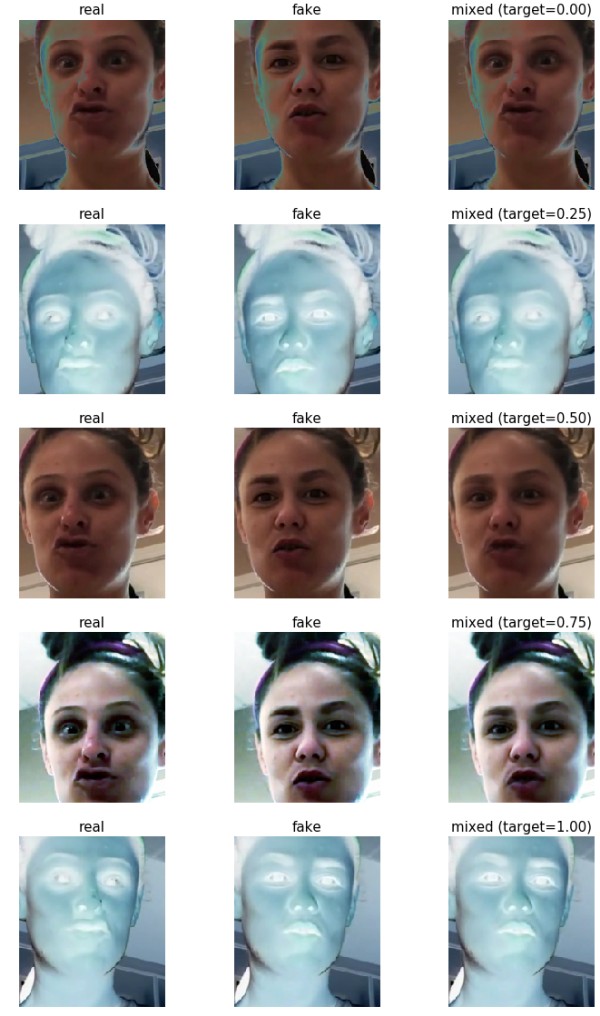

One of the main difficulties of this competition is severe overfitting. Initially, all models overfitted within 2-3 epochs (the validation loss started to increase). The idea that helped a lot with overfitting was to train the model on a mix of real and fake faces: for each fake face, we take the corresponding real face from the original video (with the same box coordinates and the same frame number) and compute a linear combination of the two. In terms of tensors:

input_tensor = (1.0 - target) * real_input_tensor + target * fake_input_tensor

where target is drawn from a Beta distribution with parameters alpha = beta = 0.5. With these parameters, values close to 0 or 1 (an almost purely real or purely fake face) are very likely. You can see the examples below:

Because real and fake samples are aligned, the background remains almost unchanged in interpolated samples, which reduces overfitting and makes the model pay more attention to the face.
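The mixing step above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' code: the function name `mix_aligned_pair` is hypothetical, and it assumes the real and fake crops are already aligned arrays of the same shape and that the mixing coefficient `target` also serves as the soft training label (0 = real, 1 = fake).

```python
import numpy as np


def mix_aligned_pair(real, fake, rng, alpha=0.5):
    """Blend an aligned real/fake face pair (hypothetical helper).

    real, fake: arrays of identical shape, cut with the same box coordinates
    from the same frame number of the original and the fake video.
    Returns the mixed input and its soft label (0.0 = real, 1.0 = fake).
    """
    if real.shape != fake.shape:
        raise ValueError("real and fake crops must be aligned (same shape)")
    # Beta(0.5, 0.5) concentrates probability mass near 0 and 1,
    # so most samples stay close to a pure real or pure fake face
    target = rng.beta(alpha, alpha)
    mixed = (1.0 - target) * real + target * fake
    return mixed, float(target)
```

Each call, e.g. `mix_aligned_pair(real, fake, np.random.default_rng(0))`, returns one blended crop and its label. Beta(0.5, 0.5) is the arcsine distribution with CDF (2/π)·arcsin(√x), so roughly 41% of its samples fall below 0.1 or above 0.9; this is why most mixed samples still look nearly pure.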

In the paper [1], it was pointed out that augmentations close to degradations seen in real-life video distributions were applied to the test data. Specifically, these augmentations were (1) reducing the FPS of the video to 15; (2) reducing the resolution of the video to 1/4 of its original size; and (3) reducing the overall encoding quality.

To make the model robust to various video compression parameters, we added augmentations with random video encoding parameters to training. Applying such augmentations to the original videos on the fly during training would be infeasible, so short (50-frame) clips cropped to a 1.5x area around the face were used instead of the original videos. Each clip was saved as separate frames in PNG format. An example of a clip is given below:
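The face crop for these clips is simple box arithmetic. The text does not say whether "1.5x" scales the side lengths or the area of the face box; the minimal sketch below assumes it scales the width and height of the detected box around its center and clips the result to the frame. The helper name `expand_box` is hypothetical.

```python
def expand_box(x_min, y_min, x_max, y_max, frame_w, frame_h, scale=1.5):
    """Grow a face box around its center and clip it to the frame.

    Hypothetical helper: `scale` multiplies the box width and height, which is
    one reading of the "1.5x area around the face" described above.
    Returns integer (left, top, right, bottom) pixel coordinates.
    """
    cx = (x_min + x_max) / 2.0
    cy = (y_min + y_max) / 2.0
    half_w = (x_max - x_min) * scale / 2.0
    half_h = (y_max - y_min) * scale / 2.0
    # Clip to the frame so boxes near the border still give a valid crop
    left = max(0, int(round(cx - half_w)))
    top = max(0, int(round(cy - half_h)))
    right = min(frame_w, int(round(cx + half_w)))
    bottom = min(frame_h, int(round(cy + half_h)))
    return left, top, right, bottom
```

For example, `expand_box(100, 100, 200, 200, 1920, 1080)` gives `(75, 75, 225, 225)`, and the crop of a NumPy frame is `frame[top:bottom, left:right]`. Applying the same box to the matching fake video keeps real-fake pairs aligned for mixup.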

For on-the-fly augmentation, ffmpeg-python was used. At each iteration, the following parameters were randomly sampled (see [2]):

- FPS (15 to 30)

- scale (0.25 to 1.0)

- CRF (17 to 40)

- tune (a randomly chosen x264 tuning option)
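A minimal sketch of the sampling step, assuming integer FPS and CRF values, a uniform scale, and the libx264 tune values listed in the FFmpeg H.264 guide [2] (which subset the authors actually used is not stated). The original solution used ffmpeg-python, a wrapper that builds and runs an ffmpeg command line; to stay dependency-free, this sketch builds a comparable command line directly. The function names, the frame-name pattern, and the 30 fps input rate are hypothetical.

```python
import random

# libx264 tune values listed in the FFmpeg H.264 guide [2]; the exact set the
# authors sampled from is not stated, so this list is an assumption
X264_TUNES = ["film", "animation", "grain", "stillimage", "fastdecode", "zerolatency"]


def sample_encoding_params(rng):
    """Draw one random set of encoding parameters from the ranges listed above."""
    return {
        "fps": rng.randint(15, 30),      # randint is inclusive on both ends
        "scale": rng.uniform(0.25, 1.0),
        "crf": rng.randint(17, 40),
        "tune": rng.choice(X264_TUNES),
    }


def build_ffmpeg_args(frame_pattern, output_path, params, input_fps=30):
    """Build an ffmpeg command that re-encodes a PNG frame sequence.

    frame_pattern (e.g. "clip/%03d.png"), output_path and input_fps are
    hypothetical; they are not taken from the solution's code.
    """
    s = params["scale"]
    # yuv420p output needs even width and height, so round each side down to even
    scale_filter = f"scale=trunc(iw*{s:.3f}/2)*2:trunc(ih*{s:.3f}/2)*2"
    return [
        "ffmpeg", "-y", "-loglevel", "error",
        "-framerate", str(input_fps), "-i", frame_pattern,
        "-vf", f"fps={params['fps']},{scale_filter}",
        "-c:v", "libx264", "-crf", str(params["crf"]),
        "-tune", params["tune"], "-pix_fmt", "yuv420p",
        output_path,
    ]
```

The command can be run with `subprocess.run(build_ffmpeg_args("clip/%03d.png", "aug.mp4", sample_encoding_params(random.Random())), check=True)`; decoding the result back into frames for the model is not shown. Because PNG is lossless, every iteration re-encodes the same clean frames, so compression artifacts do not accumulate across iterations.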

In our experiments, EfficientNet models worked better than the other architectures we tried (ResNet, ResNeXt, SE-ResNeXt). The best model was EfficientNet-B7 with Noisy Student pre-trained weights [3]. The input image size is 224x192 (most of the faces in the training dataset are smaller). The final ensemble consists of three models: two frame-by-frame models and one that works on frame sequences.

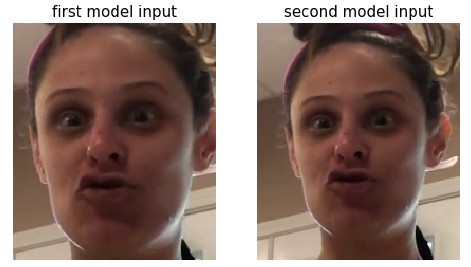

Frame-by-frame models work quite well. They differ in the size of the area around the face and in the augmentations used during training. Below are examples of input images for each of the models:

Temporal dependencies are probably useful for detecting fakes, so we added a 3D convolution to each block of the EfficientNet model. This model worked slightly better than a similar frame-by-frame model. The input sequence is 7 frames long, with a step of 1/15 of a second between frames. An example of an input sequence is given below:
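The sequence timing reduces to simple arithmetic: 7 frames spaced 1/15 s apart span 6/15 = 0.4 s of video. A minimal sketch of how frame indices could be chosen from a clip, assuming a known frame rate; the function name and the `start_frame` parameter are hypothetical.

```python
def sequence_frame_indices(start_frame, video_fps, seq_len=7, step_seconds=1.0 / 15):
    """Frame indices for one input sequence of the sequence-based model.

    Consecutive indices are `step_seconds` apart (1/15 s in the text), rounded
    to the nearest frame. `start_frame` is a hypothetical parameter.
    """
    return [start_frame + round(i * step_seconds * video_fps) for i in range(seq_len)]
```

At 30 fps, `sequence_frame_indices(0, 30)` yields `[0, 2, 4, 6, 8, 10, 12]`, i.e. every second frame, so one sequence fits comfortably inside a 50-frame clip.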

To improve model generalization, we used the following augmentations: AutoAugment [4], Random Erasing, Random Crops, and Random Horizontal Flips. Since we used mixup, it was important to augment each real-fake pair in the same way (see example). For the sequence-based model, it was important to augment all frames that belong to the same clip in the same way.
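The key point is to sample the random augmentation parameters once per group and reuse them for every member of the group. A minimal NumPy sketch of that idea, limited to random crop and horizontal flip; the helper names are hypothetical, and AutoAugment and Random Erasing would need the same parameter sharing, which is not shown.

```python
import numpy as np


def sample_crop_flip(rng, height, width, crop_h, crop_w):
    """Draw one set of crop/flip parameters to be shared by a group of frames."""
    top = int(rng.integers(0, height - crop_h + 1))
    left = int(rng.integers(0, width - crop_w + 1))
    flip = bool(rng.random() < 0.5)
    return top, left, flip


def apply_crop_flip(frame, params, crop_h, crop_w):
    """Crop an (H, W, C) frame and optionally flip it horizontally."""
    top, left, flip = params
    out = frame[top:top + crop_h, left:left + crop_w]
    return out[:, ::-1] if flip else out


def augment_group(frames, rng, crop_h, crop_w):
    """Apply one identical random crop + flip to every frame in `frames`.

    `frames` can be a real/fake pair or all frames of one clip; they must share
    the same (H, W, C) shape.
    """
    h, w = frames[0].shape[:2]
    params = sample_crop_flip(rng, h, w, crop_h, crop_w)  # sampled once per group
    return [apply_crop_flip(f, params, crop_h, crop_w) for f in frames]
```

Calling `augment_group([real, fake], rng, 192, 224)` before mixing keeps the pair aligned, so the blend in the mixup step still only differs in the manipulated face region.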

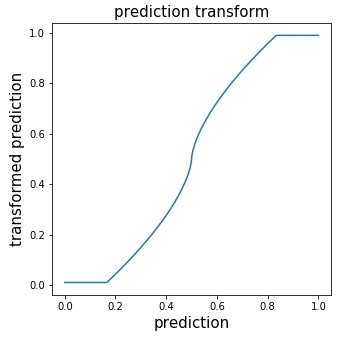

Because of mixup, the models' predictions were uncertain, which is suboptimal for log loss. To increase confidence, we applied the following transformation:

Due to computational limitations, predictions were made on a subsample of frames, half of which were horizontally flipped. The prediction for a video is obtained by averaging all of its predictions with weights proportional to confidence (the closer a prediction is to 0.5, the lower its weight). This averaging works like attention, because the model gives predictions close to 0.5 on poor-quality frames (profile faces, blur, etc.).
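A minimal sketch of the confidence-weighted average, assuming the weight of a prediction p is |p - 0.5| (the text only says that weights grow with distance from 0.5, so this exact weight is an assumption). Predictions on flipped frames, and predictions from several models, would simply be additional entries in the list. The function name is hypothetical.

```python
def confidence_weighted_average(predictions, eps=1e-6):
    """Average fake probabilities, weighting each one by its distance from 0.5.

    Assumed weight: |p - 0.5|, so uncertain predictions (p near 0.5) barely
    contribute. Falls back to 0.5 when every prediction is (almost) 0.5.
    """
    weights = [abs(p - 0.5) for p in predictions]
    total = sum(weights)
    if total < eps:
        return 0.5
    return sum(w * p for w, p in zip(weights, predictions)) / total
```

For `[0.9, 0.5, 0.55]` this returns about 0.861, whereas a plain mean gives 0.65: the uncertain frame at 0.5 is ignored and the confident frame dominates.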

[1] Brian Dolhansky, Russ Howes, Ben Pflaum, Nicole Baram, Cristian Canton Ferrer, “The Deepfake Detection Challenge (DFDC) Preview Dataset”

[2] https://trac.ffmpeg.org/wiki/Encode/H.264

[3] Qizhe Xie, Minh-Thang Luong, Eduard Hovy, Quoc V. Le, “Self-training with Noisy Student improves ImageNet classification”

[4] Ekin D. Cubuk, Barret Zoph, Dandelion Mane, Vijay Vasudevan, Quoc V. Le, “AutoAugment: Learning Augmentation Policies from Data”

Hardware:

- CPU: Intel(R) Xeon(R) CPU E5-2698 v4 @ 2.20GHz

- GPU: 8x NVIDIA Tesla V100 SXM2 32 GB

- RAM: 512 GB

- SSD: 6 TB

Use Docker to get an environment close to the one used for training. Run the following command to build the Docker image:

cd path/to/solution

sudo docker build -t dfdc .

Download the deepfake-detection-challenge-data and extract all files to /path/to/dfdc-data. This directory must have the following structure:

dfdc-data

├── dfdc_train_part_0

├── dfdc_train_part_1

├── dfdc_train_part_10

├── dfdc_train_part_11

├── dfdc_train_part_12

├── dfdc_train_part_13

├── dfdc_train_part_14

├── dfdc_train_part_15

├── dfdc_train_part_16

├── dfdc_train_part_17

├── dfdc_train_part_18

├── dfdc_train_part_19

├── dfdc_train_part_2

├── dfdc_train_part_20

├── dfdc_train_part_21

├── dfdc_train_part_22

├── dfdc_train_part_23

├── dfdc_train_part_24

├── dfdc_train_part_25

├── dfdc_train_part_26

├── dfdc_train_part_27

├── dfdc_train_part_28

├── dfdc_train_part_29

├── dfdc_train_part_3

├── dfdc_train_part_30

├── dfdc_train_part_31

├── dfdc_train_part_32

├── dfdc_train_part_33

├── dfdc_train_part_34

├── dfdc_train_part_35

├── dfdc_train_part_36

├── dfdc_train_part_37

├── dfdc_train_part_38

├── dfdc_train_part_39

├── dfdc_train_part_4

├── dfdc_train_part_40

├── dfdc_train_part_41

├── dfdc_train_part_42

├── dfdc_train_part_43

├── dfdc_train_part_44

├── dfdc_train_part_45

├── dfdc_train_part_46

├── dfdc_train_part_47

├── dfdc_train_part_48

├── dfdc_train_part_49

├── dfdc_train_part_5

├── dfdc_train_part_6

├── dfdc_train_part_7

├── dfdc_train_part_8

├── dfdc_train_part_9

└── test_videos
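Before starting the pipeline, it may help to confirm that the layout above is complete. A minimal sketch using only the standard library; the helper name is hypothetical, and the expected entries (dfdc_train_part_0 to dfdc_train_part_49 plus test_videos) are taken from the listing above.

```python
from pathlib import Path

# Expected top-level entries, taken from the directory listing above
EXPECTED_DIRS = [f"dfdc_train_part_{i}" for i in range(50)] + ["test_videos"]


def missing_dfdc_dirs(root):
    """Return the expected sub-directories that are absent under `root`."""
    root = Path(root)
    return [name for name in EXPECTED_DIRS if not (root / name).is_dir()]
```

For example, `print(missing_dfdc_dirs("/path/to/dfdc-data") or "OK")` prints the names of any missing folders, or OK when nothing is missing.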

According to the competition rules, external data is allowed. The solution does not use any external data except for pre-trained models. The table below lists these models.

| File Name | Source | Direct Link | Forum Post |
|---|---|---|---|
| WIDERFace_DSFD_RES152.pth | github | google drive | link |
| noisy_student_efficientnet-b7.tar.gz | github | link | link |

Download these files and copy them to the external_data folder.

Run the Docker container with the paths mounted correctly:

sudo docker run --runtime=nvidia -i -t -d --rm --ipc=host -v /path/to/dfdc-data:/kaggle/input/deepfake-detection-challenge:ro -v /path/to/solution:/kaggle/solution --name dfdc dfdc

sudo docker exec -it dfdc /bin/bash

cd /kaggle/solution

Convert the pre-trained model from TensorFlow to PyTorch:

bash convert_tf_to_pt.sh

Detect faces on videos:

python3.6 detect_faces_on_videos.py

Note: You can parallelize this operation using the --part and --num_parts arguments.

Generate tracks:

python3.6 generate_tracks.py

Generate aligned tracks:

python3.6 generate_aligned_tracks.py

Extract tracks from videos:

python3.6 extract_tracks_from_videos.py

Note: You can parallelize this operation using the --part and --num_parts arguments.
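Both detect_faces_on_videos.py and extract_tracks_from_videos.py accept --part and --num_parts. A minimal launcher sketch that starts all parts at once and waits for them; it assumes part indices are 0-based, and the optional round-robin GPU pinning through CUDA_VISIBLE_DEVICES is also an assumption, not something the solution documents. The helper names are hypothetical.

```python
import os
import subprocess


def build_part_commands(script, num_parts, python="python3.6"):
    """One command line per part; assumes --part takes 0-based indices."""
    return [[python, script, "--part", str(i), "--num_parts", str(num_parts)]
            for i in range(num_parts)]


def run_in_parallel(script, num_parts, num_gpus=None, python="python3.6"):
    """Start all parts at once, wait for them, and raise if any part fails."""
    procs = []
    for i, cmd in enumerate(build_part_commands(script, num_parts, python)):
        env = dict(os.environ)
        if num_gpus:
            # Assumption: pin each part to a single GPU, round-robin
            env["CUDA_VISIBLE_DEVICES"] = str(i % num_gpus)
        procs.append(subprocess.Popen(cmd, env=env))
    failed = [i for i, p in enumerate(procs) if p.wait() != 0]
    if failed:
        raise RuntimeError(f"{script}: part(s) {failed} exited with an error")
```

For example, `run_in_parallel("detect_faces_on_videos.py", 8, num_gpus=8)` would run eight parts, one per GPU of an 8-GPU machine.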

Generate track pairs:

python3.6 generate_track_pairs.py

Train models:

python3.6 train_b7_ns_aa_original_large_crop_100k.py

python3.6 train_b7_ns_aa_original_re_100k.py

python3.6 train_b7_ns_seq_aa_original_100k.py

Copy the final weights and convert them to FP16:

python3.6 copy_weights.py

You can download the final weights that were used in the competition (the output of the copy_weights.py script): Google Drive

Run the following command to make predictions:

python3.6 predict.py