This repository contains the code for the paper "Holistic Prototype Attention Network for Few-Shot Video Object Segmentation".

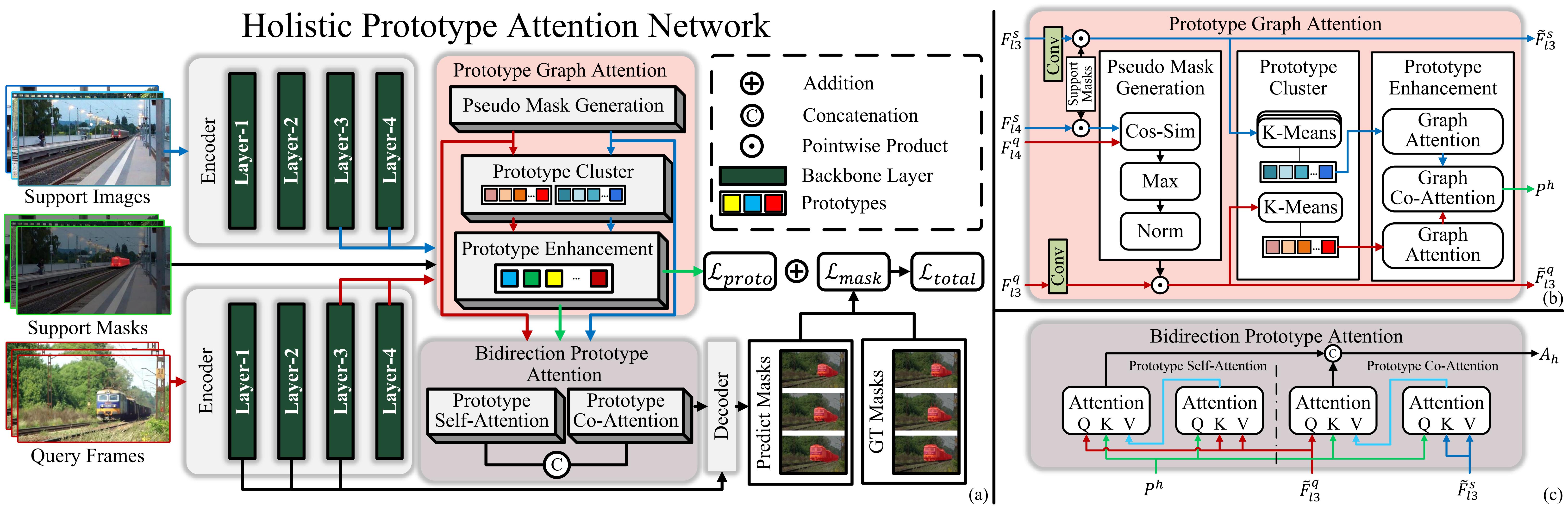

The architecture of our Holistic Prototype Attention Network:

```shell
git clone git@github.com:HPAN-FSVOS/HPAN.git
cd HPAN
conda create -n HPAN python=3.9
conda activate HPAN
conda install pytorch torchvision torchaudio cudatoolkit=10.2 -c pytorch
pip install -r requirements.txt

# https://github.com/youtubevos/cocoapi.git
git clone git@github.com:youtubevos/cocoapi.git
cd cocoapi/PythonAPI
python setup.py build_ext install
```

- Download the 2019 version of the Youtube-VIS dataset.
- Put the dataset in the `./data` folder:

  ```
  data
  └─ Youtube-VOS
     └─ train
        ├─ Annotations
        ├─ JPEGImages
        └─ train.json
  ```

- Install cocoapi for Youtube-VIS.

- Download the ImageNet-pretrained backbone and put it into the `pretrain_model` folder:

  ```
  pretrain_model
  └─ resnet50_v2.pth
  ```
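Before launching training, it can help to confirm that the files sit where the two directory trees above expect them. The following is a minimal Python sketch that only tests whether each path exists; it does not validate file contents. It assumes it is run from the repository root, and `EXPECTED_PATHS` and `missing_paths` are hypothetical names, not part of the repository.

```python
from pathlib import Path

# Paths transcribed from the directory trees above, relative to the
# repository root (assumed to be the working directory).
EXPECTED_PATHS = [
    "data/Youtube-VOS/train/Annotations",
    "data/Youtube-VOS/train/JPEGImages",
    "data/Youtube-VOS/train/train.json",
    "pretrain_model/resnet50_v2.pth",
]


def missing_paths(root="."):
    """Return the expected paths that do not exist under `root`."""
    root = Path(root)
    return [p for p in EXPECTED_PATHS if not (root / p).exists()]


if __name__ == "__main__":
    missing = missing_paths()
    if missing:
        # One missing path per line, indented under the header.
        print("Missing:", *missing, sep="\n  ")
    else:
        print("Data and backbone layout look complete.")
```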

Training:

```shell
# PAANet with proto_loss
CUDA_VISIBLE_DEVICES=0 python train_HPAN.py --batch_size 8 --num_workers 8 --group 1 --trainid 0 \
    --lr 5e-5 --with_prior_mask --with_proto_attn --proto_with_self_attn \
    --proto_per_frame 5 --with_proto_loss
```

Testing:

```shell
# PAANet with proto_loss
CUDA_VISIBLE_DEVICES=0 python test_HPAN.py --group 1 --trainid 0 --test_num 1 --finetune_idx 1 \
    --test_best --lr 2e-5 --with_prior_mask --with_proto_attn --proto_with_self_attn \
    --proto_per_frame 5 --with_proto_loss
```

You can also download our pretrained model to test it.

| Method | Venue | Query | Mean F (%) | Mean J (%) |
|---|---|---|---|---|
| PMM (Yang et al. 2020) | ECCV 2020 | Image | 47.9 | 51.7 |
| PFENet (Tian et al. 2020) | TPAMI 2020 | Image | 46.8 | 53.7 |
| PPNet (Liu et al. 2020) | TPAMI 2020 | Image | 45.6 | 57.1 |
| RePRI (Boudiaf et al. 2021) | CVPR 2021 | Image | - | 59.5 |
| DANet w/o OL (Chen et al. 2021) | CVPR 2021 | Video | 55.6 | 57.2 |
| DANet (Chen et al. 2021) | CVPR 2021 | Video | 56.3 | 58.0 |
| TTI w/o DCL (Siam et al. 2022) | arXiv 2022 | Video | - | 60.3 |
| TTI (Siam et al. 2022) | arXiv 2022 | Video | - | 60.8 |
| HPAN w/o OL | This paper | Video | 61.4 | 62.2 |
| HPAN | This paper | Video | 62.4 | 63.5 |
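In most video object segmentation work, J denotes region similarity (mask IoU) and F denotes contour accuracy (a boundary F-measure), as defined by the DAVIS benchmark, averaged over the test set. Assuming the table follows that convention, the following is a minimal NumPy sketch of both measures for a single pair of binary masks; it is not this repository's evaluation code. As a simplification, the boundary tolerance here is a fixed square of `radius` pixels, whereas the DAVIS toolkit scales the tolerance with the image diagonal and dilates with a disk. All function names are hypothetical.

```python
import numpy as np


def region_similarity(pred, gt):
    """J: intersection-over-union of two binary masks."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        return 1.0  # both masks empty: count as a perfect match
    return float(np.logical_and(pred, gt).sum() / union)


def _shift(mask, dy, dx):
    """Shift a boolean mask by (dy, dx), filling exposed cells with False."""
    h, w = mask.shape
    out = np.zeros_like(mask)
    src = (slice(max(0, -dy), min(h, h - dy)), slice(max(0, -dx), min(w, w - dx)))
    dst = (slice(max(0, dy), min(h, h + dy)), slice(max(0, dx), min(w, w + dx)))
    out[dst] = mask[src]
    return out


def _boundary(mask):
    """Foreground pixels with at least one 4-connected background neighbour."""
    interior = mask.copy()
    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        interior &= _shift(mask, dy, dx)
    return mask & ~interior


def _dilate(mask, radius):
    """Dilate with a (2 * radius + 1)-pixel square structuring element."""
    out = np.zeros_like(mask)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            out |= _shift(mask, dy, dx)
    return out


def contour_accuracy(pred, gt, radius=1):
    """F: boundary F-measure, matching boundary pixels within `radius`."""
    pb, gb = _boundary(pred.astype(bool)), _boundary(gt.astype(bool))
    if pb.sum() == 0 and gb.sum() == 0:
        return 1.0
    if pb.sum() == 0 or gb.sum() == 0:
        return 0.0
    precision = (pb & _dilate(gb, radius)).sum() / pb.sum()
    recall = (gb & _dilate(pb, radius)).sum() / gb.sum()
    if precision + recall == 0:
        return 0.0
    return float(2 * precision * recall / (precision + recall))


if __name__ == "__main__":
    gt = np.zeros((6, 6), dtype=bool)
    gt[1:5, 1:5] = True
    pred = np.zeros((6, 6), dtype=bool)
    pred[1:5, 2:6] = True  # same 4x4 square, shifted one column right
    print(region_similarity(pred, gt))  # 0.6
    print(contour_accuracy(pred, gt))
```

For the two 4×4 squares in the usage example, 12 pixels overlap and 20 lie in the union, so J = 12 / 20 = 0.6.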

Part of the code is based upon: