This repository is the official PyTorch implementation of the paper "Dynamic in Static: Hybrid Visual Correspondence for Self-Supervised Video Object Segmentation".

Gensheng Pei, Yazhou Yao, Jianbo Jiao, Wenguan Wang, Liqiang Nie, Jinhui Tang

Conventional video object segmentation (VOS) methods usually necessitate a substantial volume of pixel-level annotated video data for fully supervised learning. In this paper, we present HVC, a hybrid static-dynamic visual correspondence framework for self-supervised VOS. HVC extracts pseudo-dynamic signals from static images, enabling an efficient and scalable VOS model. Our approach utilizes a minimalist fully-convolutional architecture to capture static-dynamic visual correspondence in image-cropped views. To achieve this objective, we present a unified self-supervised approach to learn visual representations of static-dynamic feature similarity. Firstly, we establish static correspondence by utilizing a priori coordinate information between cropped views to guide the formation of consistent static feature representations. Subsequently, we devise a concise convolutional layer to capture the forward / backward pseudo-dynamic signals between two views, serving as cues for dynamic representations. Finally, we propose a hybrid visual correspondence loss to learn joint static and dynamic consistency representations. Our approach, without bells and whistles, necessitates only one training session using static image data, significantly reducing memory consumption (16GB) and training time (2h). Moreover, HVC achieves state-of-the-art performance in several self-supervised VOS benchmarks and additional video label propagation tasks.
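The static correspondence step relies on two crops of the same image sharing one coordinate frame. The following is a minimal pure-Python sketch of that idea, not the repository's implementation: each feature-grid cell of a crop is mapped back to image coordinates, and cells from the two views are paired when their centres lie within a distance threshold. The function names, the `(x0, y0, x1, y1)` box format, the grid size, and the threshold are all assumptions made for illustration.

```python
from typing import List, Tuple

# Crop box in image pixels as (x0, y0, x1, y1); this format is an assumption.
Box = Tuple[float, float, float, float]


def cell_centers(box: Box, grid: int) -> List[Tuple[float, float]]:
    """Image-space centre of every cell in a grid x grid feature map of a crop."""
    x0, y0, x1, y1 = box
    cell_w = (x1 - x0) / grid
    cell_h = (y1 - y0) / grid
    return [
        (x0 + (col + 0.5) * cell_w, y0 + (row + 0.5) * cell_h)
        for row in range(grid)
        for col in range(grid)
    ]


def static_pairs(box_a: Box, box_b: Box, grid: int, max_dist: float) -> List[Tuple[int, int]]:
    """Pair each cell of view A with its nearest cell of view B, if close enough.

    Returns (index_in_a, index_in_b) tuples using row-major cell indices.
    """
    centers_a = cell_centers(box_a, grid)
    centers_b = cell_centers(box_b, grid)
    pairs = []
    for i, (ax, ay) in enumerate(centers_a):
        # Nearest cell of view B, measured in image coordinates.
        j, dist_sq = min(
            ((k, (ax - bx) ** 2 + (ay - by) ** 2) for k, (bx, by) in enumerate(centers_b)),
            key=lambda item: item[1],
        )
        if dist_sq <= max_dist ** 2:
            pairs.append((i, j))
    return pairs


if __name__ == "__main__":
    # Two 8x8 crops that overlap by half; only the shared columns are paired.
    print(static_pairs((0, 0, 8, 8), (4, 0, 12, 8), grid=2, max_dist=0.5))
    # prints [(1, 0), (3, 2)]
```

Pairs found this way could serve as positives for a feature-consistency loss; how HVC actually builds and weights them is described in the paper.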

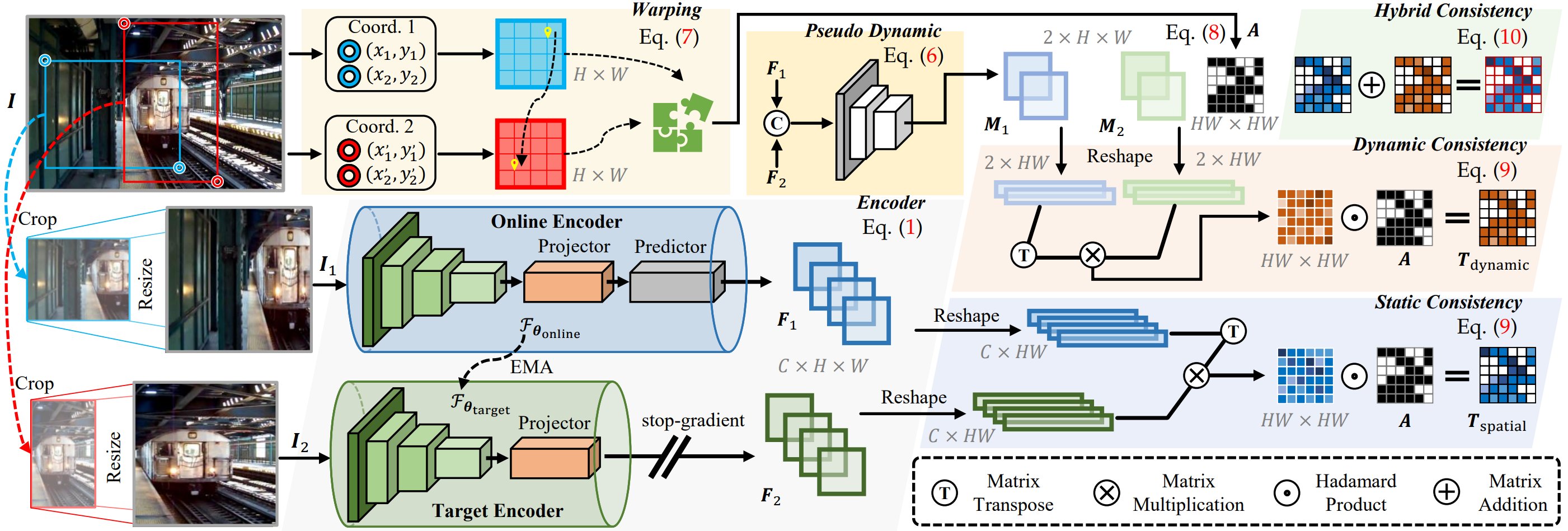

The architecture of HVC.

- Performance: HVC achieves SOTA self-supervised results on video object segmentation, part propagation, and pose tracking.
  - Video object segmentation (J&F Mean):
    - DAVIS16 val-set: 80.1
    - DAVIS17 val-set: 73.1
    - DAVIS17 dev-set: 61.7
    - YouTube-VOS 2018 val-set: 71.9
    - YouTube-VOS 2019 val-set: 71.6
    - VOST val-set: 26.3 J Mean; 15.3 J Last_Mean
  - Part propagation (mIoU):
    - VIP val-set: 44.6
  - Pose tracking (PCK):
    - JHMDB val-set: 61.7 PCK@0.1; 82.8 PCK@0.2

- Efficiency: HVC necessitates only one training session from static image data (COCO), minimizing memory consumption (∼16GB) and training time (∼2h).

- Robustness: HVC reaches self-supervised VOS performance with static image datasets (COCO: 73.1, PASCAL VOC: 72.0, and MSRA10k: 71.1) that is on par with or close to training on a video dataset (e.g. YouTube-VOS: 73.1).
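For readers unfamiliar with the metrics quoted above: J is region similarity, i.e. the intersection-over-union of predicted and ground-truth masks (mIoU averages the same quantity over classes), and PCK@α counts a keypoint as correct when it lies within α times a reference size of its ground truth. The sketch below is a simplified illustration, not the official DAVIS or JHMDB evaluation code, and it does not cover the boundary measure F. The nested-list mask representation, the caller-supplied `ref_size`, and the empty-mask convention are assumptions.

```python
from typing import List, Sequence, Tuple

Mask = List[List[int]]          # binary mask as nested lists of 0/1 (assumed representation)
Point = Tuple[float, float]     # (x, y) keypoint


def jaccard(pred: Mask, gt: Mask) -> float:
    """Region similarity J: intersection over union of two binary masks."""
    inter = 0
    union = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            inter += 1 if (p and g) else 0
            union += 1 if (p or g) else 0
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if union == 0 else inter / union


def pck(pred: Sequence[Point], gt: Sequence[Point], ref_size: float, alpha: float) -> float:
    """Fraction of keypoints whose Euclidean error is at most alpha * ref_size."""
    if not gt:
        raise ValueError("at least one ground-truth keypoint is required")
    threshold = alpha * ref_size
    correct = sum(
        1
        for (px, py), (gx, gy) in zip(pred, gt)
        if ((px - gx) ** 2 + (py - gy) ** 2) ** 0.5 <= threshold
    )
    return correct / len(gt)


if __name__ == "__main__":
    print(jaccard([[1, 1], [0, 0]], [[1, 0], [0, 0]]))                      # prints 0.5
    print(pck([(0, 0), (10, 10)], [(0, 1), (0, 0)], ref_size=20, alpha=0.1))  # prints 0.5
```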

- python 3.9

- torch==1.12.1

- torchvision==0.13.1

- CUDA 11.3
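One way to confirm that an environment matches these pins is to query the installed package metadata. This is a small sketch using only the standard library; the helper names are hypothetical and it is not part of the repository. It ignores local build suffixes such as `+cu113` when comparing, and it does not check the CUDA toolkit itself.

```python
from importlib import metadata
from typing import Dict, Optional, Tuple

# Pins copied from the requirements list above.
EXPECTED = {"torch": "1.12.1", "torchvision": "0.13.1"}


def base_version(version: str) -> str:
    """Drop a local build suffix, e.g. '1.12.1+cu113' -> '1.12.1'."""
    return version.split("+", 1)[0]


def check_pins(expected: Dict[str, str]) -> Dict[str, Tuple[Optional[str], bool]]:
    """Map each package to (installed version or None, whether it matches the pin)."""
    report = {}
    for name, wanted in expected.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None  # package is not installed in this environment
        matches = installed is not None and base_version(installed) == wanted
        report[name] = (installed, matches)
    return report


if __name__ == "__main__":
    for name, (installed, ok) in check_pins(EXPECTED).items():
        print(f"{name}: installed={installed} pinned={EXPECTED[name]} ok={ok}")
```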

Create a conda environment:

conda create -n hvc python=3.9 -y

conda activate hvc

pip install torch==1.12.1+cu113 torchvision==0.13.1+cu113 --extra-index-url https://download.pytorch.org/whl/cu113

| Model | J&F Mean ↑ | Download |
|---|---|---|
| HVC pre-trained on COCO | DAVIS17 val-set: 73.1 | model / results |
| HVC pre-trained on PASCAL VOC | DAVIS17 val-set: 72.0 | model / results |
| HVC pre-trained on MSRA10k | DAVIS17 val-set: 71.1 | model / results |
| HVC pre-trained on YouTube-VOS | DAVIS17 val-set: 73.1 | model / results |

Note: HVC requires only one training session to infer all test datasets for VOS.

For the static image dataset:

- Download the COCO 2017 train-set from the COCO website.

- Please ensure the datasets are organized in the following format.

data
  |--filelists
  |--Static
      |--JPEGImages
          |--COCO
          |--MSRA10K
          |--PASCAL
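Before launching training it can help to confirm that the static-image folders are where the scripts expect them. The helper below is a hypothetical sketch, not part of the repository. It assumes the nesting `Static/JPEGImages/<dataset>` read from the listing above, and it reports every listed dataset even though pre-training on COCO alone presumably needs only the COCO folder.

```python
from pathlib import Path
from typing import Iterable, List, Union

# Directories relative to the data root; the nesting is an assumption
# read from the listing above.
STATIC_LAYOUT = (
    "filelists",
    "Static/JPEGImages/COCO",
    "Static/JPEGImages/MSRA10K",
    "Static/JPEGImages/PASCAL",
)


def missing_dirs(root: Union[str, Path], layout: Iterable[str] = STATIC_LAYOUT) -> List[str]:
    """Return the entries of `layout` that are not existing directories under `root`."""
    root = Path(root)
    return [rel for rel in layout if not (root / rel).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs("data")
    if missing:
        print("Missing directories:", ", ".join(missing))
    else:
        print("Static dataset layout looks complete.")
```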

For the video dataset:

- Download the DAVIS 2017 val-set from the DAVIS website or via the direct download link.

- Download the full YouTube-VOS dataset (version 2018) from the YouTube-VOS website or via the direct download link. Please move `ytvos.csv` from `data/` to the path `data/YTB/2018/train_all_frames`.

- Please ensure the datasets are organized in the following format.

data
  |--filelists
  |--DAVIS
      |--Annotations
      |--ImageSets
      |--JPEGImages
  |--YTB
      |--2018
          |--train_all_frames
              |--ytvos.csv
          |--valid_all_frames
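The `ytvos.csv` relocation described above can be scripted. This is a minimal sketch with a hypothetical helper name. It assumes the file initially sits directly under the data root and that the YouTube-VOS 2018 archive has already been extracted, so that `YTB/2018/train_all_frames` exists.

```python
import shutil
from pathlib import Path
from typing import Union


def place_ytvos_csv(data_root: Union[str, Path]) -> Path:
    """Move <data_root>/ytvos.csv into <data_root>/YTB/2018/train_all_frames.

    Returns the final path. Does nothing if the file is already in place.
    """
    root = Path(data_root)
    src = root / "ytvos.csv"
    dst_dir = root / "YTB" / "2018" / "train_all_frames"
    dst = dst_dir / "ytvos.csv"

    if dst.exists():
        return dst  # already moved
    if not src.is_file():
        raise FileNotFoundError(f"{src} not found")
    if not dst_dir.is_dir():
        raise NotADirectoryError(f"{dst_dir} not found; extract YouTube-VOS 2018 first")

    shutil.move(str(src), str(dst))
    return dst


if __name__ == "__main__":
    print(place_ytvos_csv("data"))
```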

Note: Please prepare the following datasets if you want to test the DAVIS dev-set and YouTube-VOS val-set (2019 version).

Download link: DAVIS dev-set, YTB 2019 val-set.

# pre-train on COCO
bash ./scripts/run_train_img.sh

Or

# pre-train on YouTube-VOS
bash ./scripts/run_train_vid.sh

- Download MoCo V1, and put it in the folder `checkpoints/`.

- Download HVC and unzip it into the folder `checkpoints/`.

# DAVIS 17 val-set
bash ./scripts/run_test.sh hvc davis17
bash ./scripts/run_metrics hvc davis17

# YouTube-VOS val-set
bash ./scripts/run_test.sh hvc ytvos
# Please use the official YouTube-VOS server to calculate scores.

# DAVIS 17 dev-set
bash ./scripts/run_test.sh hvc davis17dev
# Please use the official DAVIS server to calculate scores.

Note: YouTube-VOS servers (2018 server and 2019 server); DAVIS server (2017 dev-set).
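To run inference on all three benchmarks in one go, the `run_test.sh` invocations above can be wrapped in a small driver. This is a hypothetical convenience sketch, not a script shipped with the repository. It assumes it is launched from the repository root, and it uses only the model and dataset arguments that appear in the commands above. Scoring for YouTube-VOS and the DAVIS dev-set still has to go through the official servers.

```python
import subprocess
from typing import List

# Dataset keys exactly as they appear in the commands above.
DATASETS = ("davis17", "ytvos", "davis17dev")


def build_test_command(model: str, dataset: str) -> List[str]:
    """Build the argument list for one run_test.sh invocation."""
    if dataset not in DATASETS:
        raise ValueError(f"unknown dataset {dataset!r}; expected one of {DATASETS}")
    return ["bash", "./scripts/run_test.sh", model, dataset]


def run_all(model: str, dry_run: bool = True) -> List[List[str]]:
    """Return every test command; execute them sequentially unless dry_run is set."""
    commands = [build_test_command(model, dataset) for dataset in DATASETS]
    if not dry_run:
        for command in commands:
            # check=True stops at the first failing benchmark.
            subprocess.run(command, check=True)
    return commands


if __name__ == "__main__":
    for command in run_all("hvc", dry_run=True):
        print(" ".join(command))
```

With `dry_run=True` the driver only prints the commands, which makes it easy to review them before a long inference run.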

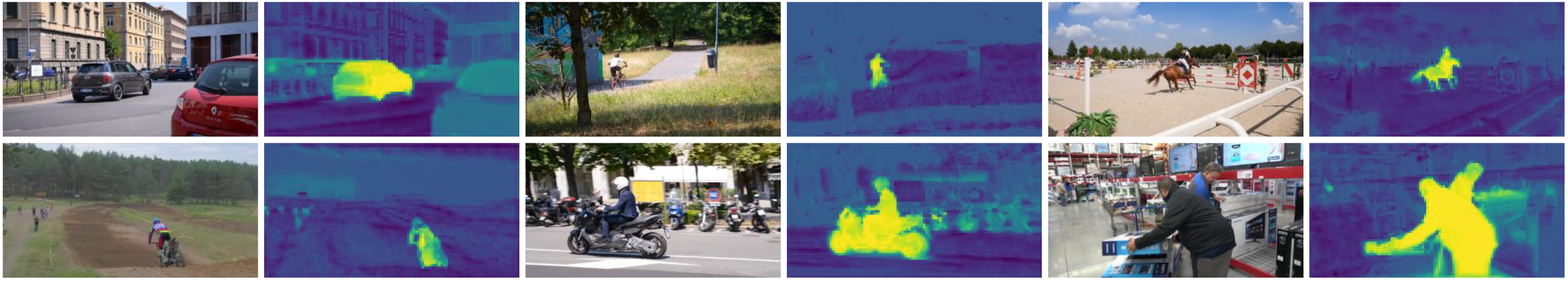

Learned representation visualization from HVC without any supervision.

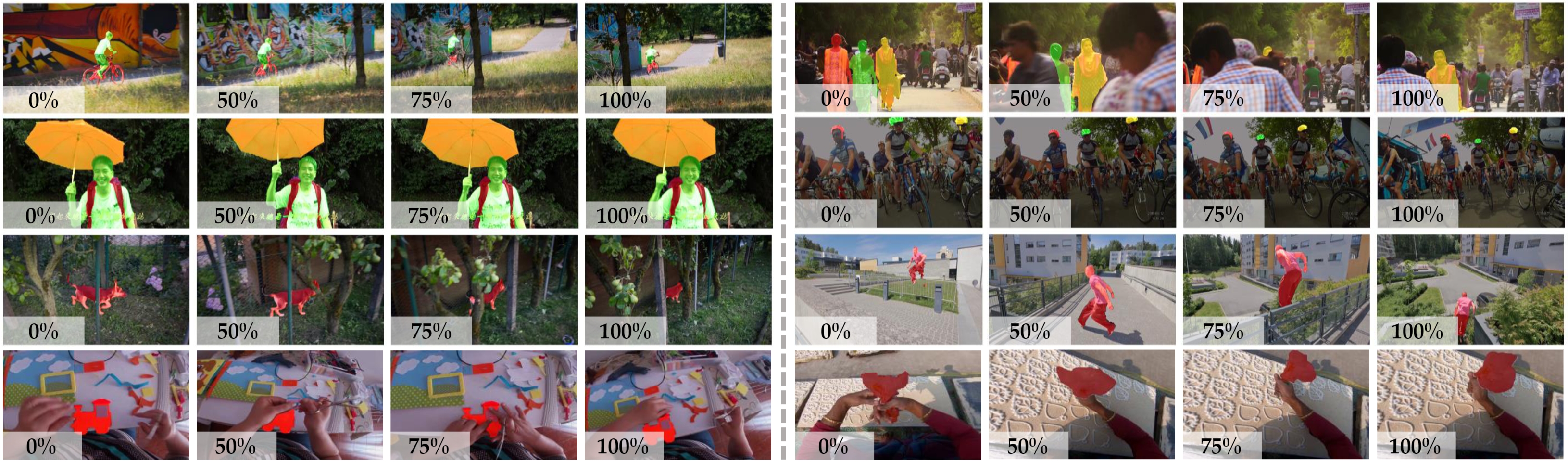

Qualitative results for video object segmentation.

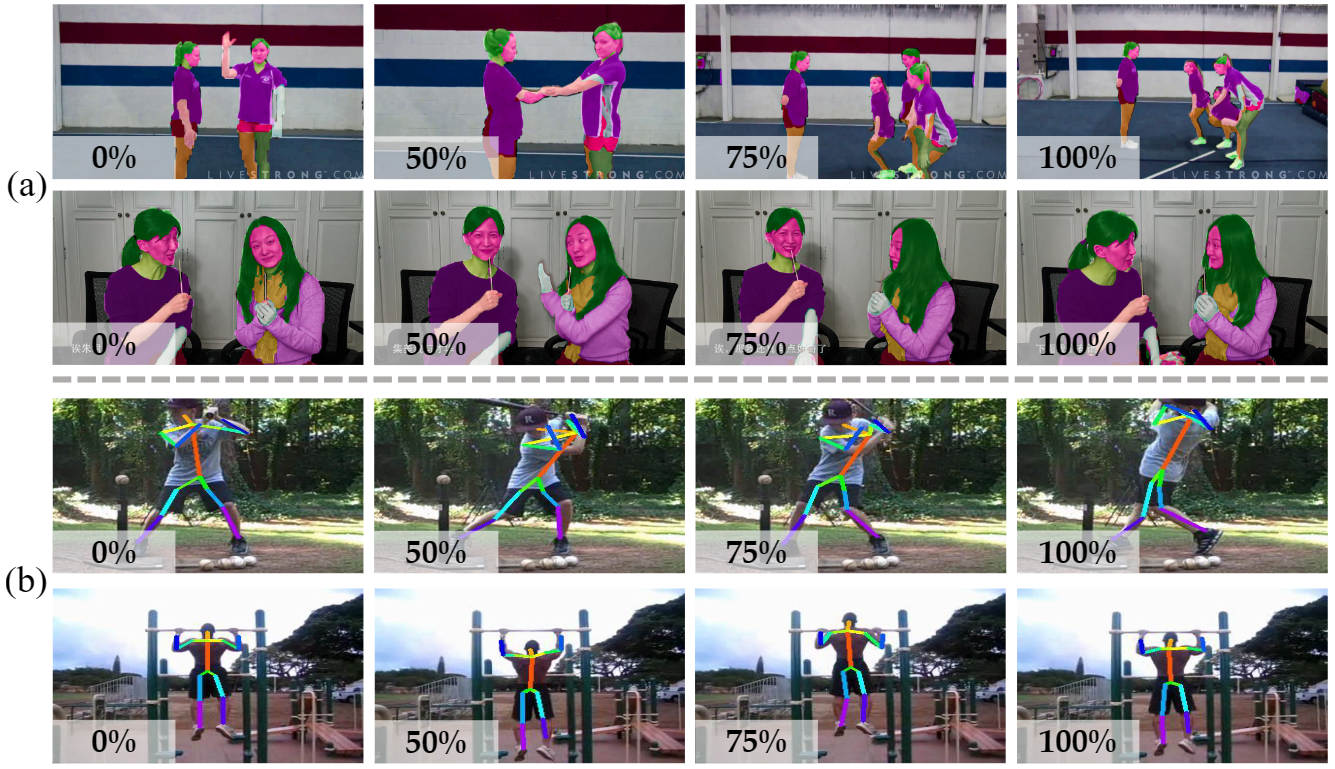

Qualitative results of the proposed method for (a) body part propagation and (b) human pose tracking.

DAVIS 2017 val-set

- We thank PyTorch, YouTube-VOS, and DAVIS contributors.

- Thanks to videowalk for the label propagation codebases.

@article{pei2024dynamic,
  title={Dynamic in Static: Hybrid Visual Correspondence for Self-Supervised Video Object Segmentation},
  author={Pei, Gensheng and Yao, Yazhou and Jiao, Jianbo and Wang, Wenguan and Nie, Liqiang and Tang, Jinhui},
  journal={arXiv preprint arXiv:2404.13505},
  year={2024}
}