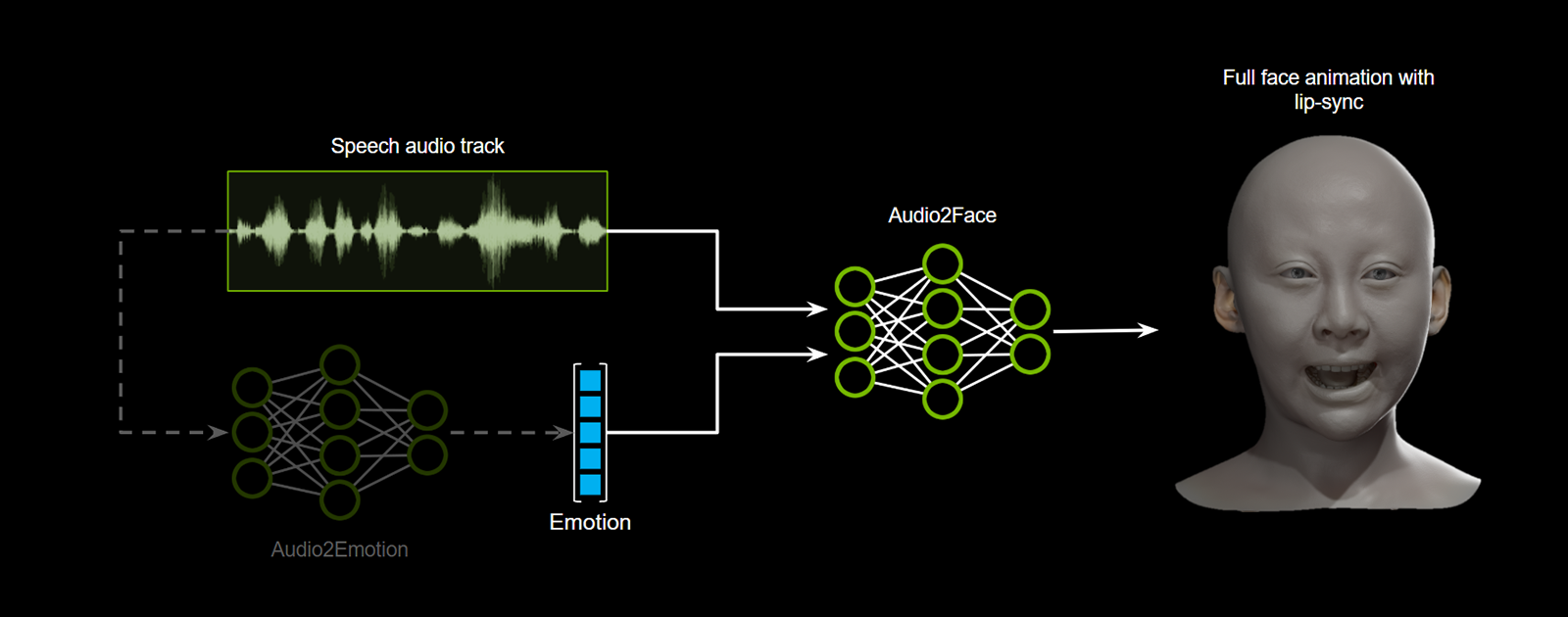

NVIDIA Audio2Face-3D NIM (A2F-3D NIM) delivers generative AI avatar animation driven by audio and emotion inputs.

The Audio2Face-3D Microservice converts speech into facial animation in the form of ARKit Blendshapes, including emotional expression. The system automatically detects emotions in the input audio and, where it can detect them, captures key poses and shapes to replicate the character's facial performance. Emotions can also be specified directly as part of the input to the microservice. A rendering engine that supports this Blendshape topology can then consume the output to display a 3D avatar's performance.
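To illustrate what "consuming" Blendshapes means on the rendering side, here is a minimal sketch of the standard linear blendshape model: each deformed vertex is the neutral vertex plus the weighted sum of per-shape vertex offsets. It assumes each animation frame has already been turned into a mapping from ARKit blendshape name (for example `jawOpen`) to a weight in [0, 1], and that the character rig stores one list of vertex offsets per blendshape. The function name `apply_blendshapes` and this data layout are hypothetical and not part of the Audio2Face-3D API; real engines usually do this on the GPU.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def apply_blendshapes(
    neutral: List[Vec3],
    deltas: Dict[str, List[Vec3]],
    weights: Dict[str, float],
) -> List[Vec3]:
    """Hypothetical helper: deform a mesh as neutral + sum_i(w_i * delta_i)."""
    result = [list(v) for v in neutral]
    for name, weight in weights.items():
        offsets = deltas.get(name)
        if offsets is None:
            continue  # skip shapes this character rig does not define
        if len(offsets) != len(neutral):
            raise ValueError(f"blendshape {name!r} has wrong vertex count")
        # ARKit coefficients range from 0.0 to 1.0; clamping is a design choice here.
        w = min(max(weight, 0.0), 1.0)
        for vertex, (dx, dy, dz) in zip(result, offsets):
            vertex[0] += w * dx
            vertex[1] += w * dy
            vertex[2] += w * dz
    return [(x, y, z) for x, y, z in result]


# Usage: a two-vertex "mesh" where jawOpen moves the second vertex down.
neutral = [(0.0, 1.0, 0.0), (0.0, 0.0, 0.0)]
deltas = {"jawOpen": [(0.0, 0.0, 0.0), (0.0, -1.0, 0.0)]}
frame = {"jawOpen": 0.5, "eyeBlinkLeft": 0.2}  # eyeBlinkLeft is ignored by this rig
print(apply_blendshapes(neutral, deltas, frame))
# → [(0.0, 1.0, 0.0), (0.0, -0.5, 0.0)]
```

Because the model is linear, weights for different shapes (for example a speech-driven `jawOpen` and an emotion-driven `mouthSmileLeft`) simply add together, which is why a per-frame list of weights is enough to drive the avatar.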

This Git repository stores the resources referenced in the Audio2Face-3D Microservice documentation. The Audio2Face-3D NIM itself can be obtained through an evaluation license of NVIDIA AI Enterprise (NVAIE) on NGC.

For example workflows, see the NVIDIA ACE samples, workflows, and resources Git repository.

See the full Audio2Face-3D NIM developer documentation.

Here is a quick overview of the available Audio2Face-3D resources in this Git repository:

- `configs/` folder: Contains example static deployment, advanced, and stylization configurations.
- `early_access/` folder: Contains examples and sample applications for our early access releases.
- `example_audio/` folder: Contains example audio files.
- `migration/` folder: Contains scripts to help you migrate from previous versions of A2F-3D to the latest version.
- `proto/` folder: Contains gRPC proto definitions for the microservices and instructions for installing them.
- `quick-start/` folder: Contains Docker Compose files for deploying Audio2Face-3D (A2F-3D) and collecting telemetry data.
- `scripts/` folder: Contains example applications using the Audio2Face-3D (A2F-3D) Microservice and instructions for running them.

- GitHub: Apache 2.0
- ACE NIMs and NGC Microservices: NVIDIA AI Product License

Note: This project will download and install additional third-party open source software projects. Review the license terms of these open source projects before use.