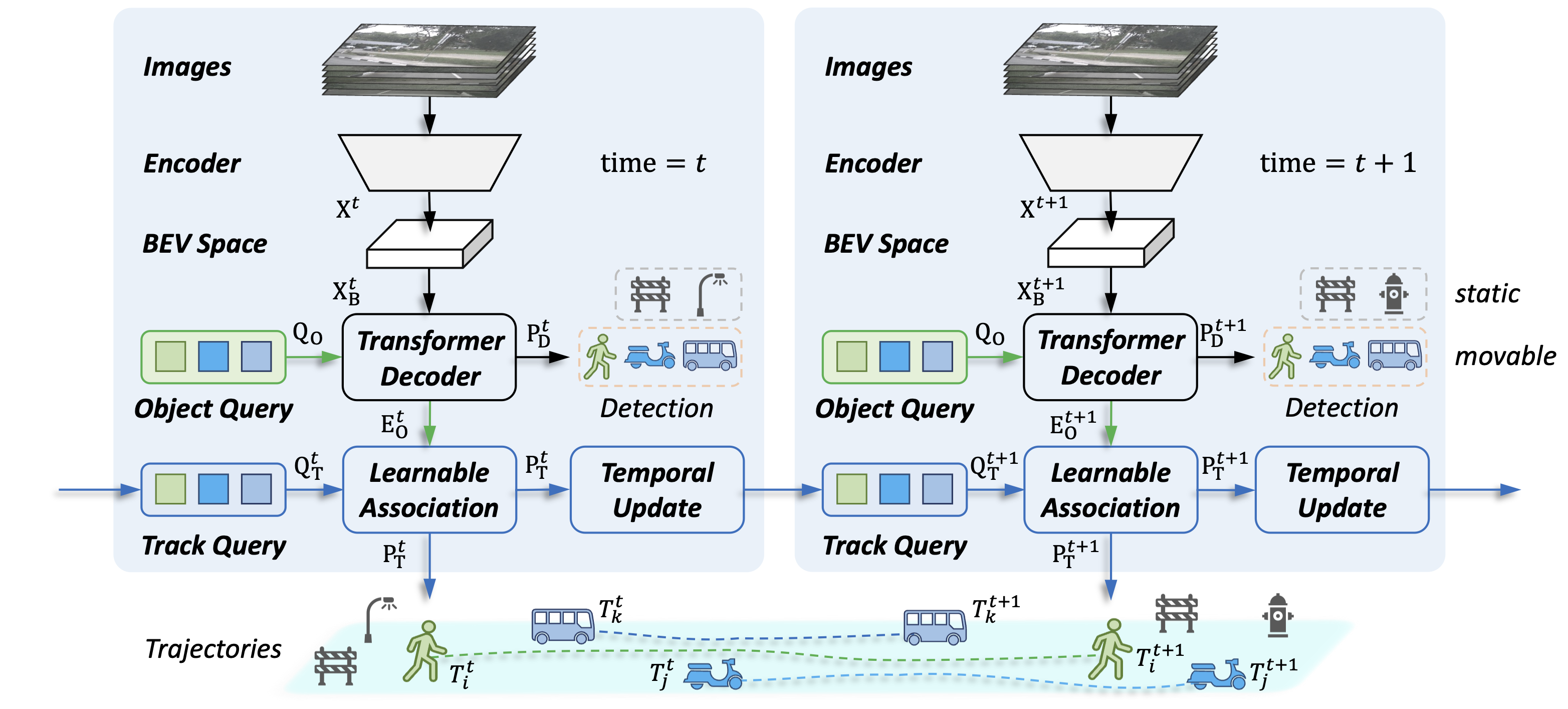

This project provides an implementation of the ICCV 2023 paper "End-to-end 3D Tracking with Decoupled Queries", built on mmDetection3D. In this work, we propose a simple yet effective framework for 3D object tracking, termed DQTrack. Specifically, it uses decoupled queries to address the task conflict in the query representations of previous query-based approaches. With the designed task-specific queries, DQTrack enhances the query capability while maintaining a compact tracking pipeline.

This project is built on mmDetection3D, which can be set up as follows.

- Download and install `mmdet3d` (v1.0.0rc3) from the official repo.
  ```bash
  git clone --branch v1.0.0rc3 https://github.com/open-mmlab/mmdetection3d.git
  ```
- Our model is tested with `torch 1.13.1`, `mmcv-full 1.4.0`, and `mmdet 2.24.0`. You can install them by
  ```bash
  pip3 install torch==1.13.1 mmcv-full==1.4.0 mmdet==2.24.0
  ```
- Install `mmdet3d` following `mmdetection3d/docs/en/getting_started.md`.
- To avoid potential errors in MOT evaluation, please make sure the installed `motmetrics` version satisfies `motmetrics<=1.1.3`.
- Copy our project and related files to the installed mmDetection3D:
  ```bash
  cp -r projects mmdetection3d/
  cp -r extra_tools mmdetection3d/
  ```
- (Optional) Compile and install `VoxelPooling`, which is required if you want to use the stereo-based network.
  ```bash
  python3 extra_tools/setup.py develop
  ```

Please prepare the data and download the preprocessed info as follows:

- Download the nuScenes 3D detection data HERE and unzip all zip files.
- As is common when preparing datasets for mmDetection3D, it is recommended to symlink the dataset root into `mmdetection3d/data`.
- Download the pretrained models & infos HERE and move the downloaded infos and models to `mmdetection3d/data/infos` and `mmdetection3d/ckpts`, respectively.
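The symlink step can also be scripted. The following is a minimal sketch, not part of the released tools: `link_nuscenes` is a hypothetical helper, and it assumes the dataset should appear as `data/nuscenes` (matching the folder structure below) and that a usable nuScenes root contains a `v1.0-trainval/` folder.

```python
from pathlib import Path


def link_nuscenes(nuscenes_root: str, mmdet3d_root: str = "mmdetection3d") -> Path:
    """Symlink an unpacked nuScenes root to <mmdet3d_root>/data/nuscenes (hypothetical helper)."""
    src = Path(nuscenes_root).expanduser().resolve()
    # Assumption: a usable nuScenes root contains the v1.0-trainval metadata folder.
    if not (src / "v1.0-trainval").is_dir():
        raise FileNotFoundError(f"{src} has no v1.0-trainval/ folder")
    data_dir = Path(mmdet3d_root) / "data"
    data_dir.mkdir(parents=True, exist_ok=True)
    link = data_dir / "nuscenes"
    # Create the link only once; an existing link or folder is left untouched.
    if not link.exists() and not link.is_symlink():
        link.symlink_to(src, target_is_directory=True)
    return link


# Usage (placeholder path): link_nuscenes("/path/to/nuscenes")
```

Calling it a second time is a no-op, so it is safe to keep in a setup script; on the shell, `ln -s` achieves the same result.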

The folder structure should be organized as follows before our processing.

```
mmdetection3d
├── mmdet3d
├── tools
├── configs
├── extra_tools
├── projects
├── ckpts
│   ├── model_val
│   ├── pretrain
├── data
│   ├── nuscenes
│   │   ├── maps
│   │   ├── samples
│   │   ├── sweeps
│   │   ├── v1.0-test
│   │   ├── v1.0-trainval
│   ├── infos
│   │   ├── track_cat_10_infos_train.pkl
│   │   ├── track_cat_10_infos_val.pkl
│   │   ├── track_test_cat_10_infos_test.pkl
│   │   ├── mmdet3d_nuscenes_30f_infos_train.pkl
│   │   ├── mmdet3d_nuscenes_30f_infos_val.pkl
│   │   ├── mmdet3d_nuscenes_30f_infos_test.pkl
```
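Before launching a long training run, it can help to confirm that the layout matches the tree above. The sketch below uses a hypothetical helper (`missing_entries` is not part of the repo) that only checks that each listed directory and info file exists; it does not validate their contents. Because `Path.is_dir()` follows symlinks, a symlinked `data/nuscenes` passes the check.

```python
from pathlib import Path
from typing import List

# Directories and info files copied from the folder tree above.
EXPECTED_DIRS = [
    "mmdet3d", "tools", "configs", "extra_tools", "projects",
    "ckpts/model_val", "ckpts/pretrain",
    "data/nuscenes/maps", "data/nuscenes/samples", "data/nuscenes/sweeps",
    "data/nuscenes/v1.0-test", "data/nuscenes/v1.0-trainval",
    "data/infos",
]
EXPECTED_FILES = [
    "data/infos/track_cat_10_infos_train.pkl",
    "data/infos/track_cat_10_infos_val.pkl",
    "data/infos/track_test_cat_10_infos_test.pkl",
    "data/infos/mmdet3d_nuscenes_30f_infos_train.pkl",
    "data/infos/mmdet3d_nuscenes_30f_infos_val.pkl",
    "data/infos/mmdet3d_nuscenes_30f_infos_test.pkl",
]


def missing_entries(root: str = "mmdetection3d") -> List[str]:
    """Return every expected path under `root` that is absent (empty list means layout OK)."""
    base = Path(root)
    missing = [d for d in EXPECTED_DIRS if not (base / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (base / f).is_file()]
    return missing


# Usage: print(missing_entries("/path/to/mmdetection3d") or "layout OK")
```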

You can train the model following the instructions below.

If you want to train the model, you can find the pretrained models HERE.

If you want to train the detector and tracker end-to-end from scratch, set the parameter `train_track_only` in the config file to `False`.
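One way to switch to end-to-end training without editing the shipped config is to write a modified copy. The sketch below uses hypothetical helpers (`enable_end_to_end`, `write_e2e_config`) and assumes that `train_track_only` appears literally as `train_track_only = True`, either as a top-level variable or as a keyword argument inside a `dict(...)`; the exact layout of the config files is an assumption, so check yours first.

```python
import re
from pathlib import Path

# Assumption: the flag is written literally, e.g. `train_track_only = True`
# or `train_track_only=True,` inside a dict(...) call.
_FLAG = re.compile(r"\b(train_track_only\s*=\s*)True\b")


def enable_end_to_end(cfg_text: str) -> str:
    """Return config source with every `train_track_only = True` switched to False."""
    new_text, count = _FLAG.subn(r"\1False", cfg_text)
    if count == 0:
        raise ValueError("no literal 'train_track_only = True' found in config")
    return new_text


def write_e2e_config(cfg_file: str) -> Path:
    """Write <name>_e2e.py next to cfg_file (hypothetical helper) and return its path."""
    src = Path(cfg_file)
    # Same directory as the original, so relative _base_ paths still resolve.
    dst = src.with_name(src.stem + "_e2e.py")
    dst.write_text(enable_end_to_end(src.read_text()))
    return dst
```

The returned path can then be passed as `${CFG_FILE}` to the training commands. Writing the copy next to the original matters because mmDetection3D-style configs resolve `_base_` entries relative to the config file's own directory.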

For example, to launch DQTrack training on multiple GPUs, run:

```bash
cd /path/to/mmdetection3d
bash extra_tools/dist_train.sh ${CFG_FILE} ${NUM_GPUS}
```

or train with a single GPU:

```bash
python3 extra_tools/train.py ${CFG_FILE}
```

You can evaluate the model following the instructions below.

ATTENTION: Because of the sequential nature of the data, only single-GPU evaluation is supported:

```bash
python3 extra_tools/test.py ${CFG_FILE} ${CKPT} --eval=bbox
```

We provide results on the nuScenes val set with pretrained models. All the models can be found in the `model_val` folder HERE.

| Model | Encoder | Decoder | Resolution | AMOTA | AMOTP |
|---|---|---|---|---|---|
| DQTrack-DETR3D | R101 | DETR3D | 900x1600 | 36.7% | 1.351 |
| DQTrack-UVTR | R101 | UVTR-C | 900x1600 | 39.6% | 1.310 |
| DQTrack-Stereo | R50 | Stereo | 512x1408 | 36.9% | 1.371 |
| DQTrack-Stereo | R101 | Stereo | 512x1408 | 40.7% | 1.317 |
| DQTrack-PETRV2 | V2-99 | PETRV2 | 320x800 | 44.4% | 1.252 |
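AMOTA (higher is better) and AMOTP (lower is better) are the nuScenes tracking metrics. To illustrate what AMOTA averages, the sketch below implements the recall-normalized MOTA (MOTAR) averaged over L evenly spaced recall thresholds, following the AMOTA definition used by the nuScenes tracking benchmark. It is a simplified illustration, not the evaluation code behind the table: the official nuScenes evaluation additionally handles box matching, per-class averaging, the exact threshold set, and a minimum recall, none of which are modeled here.

```python
from typing import Sequence


def motar(recall: float, ids: int, fp: int, fn: int, num_gt: int) -> float:
    """Recall-normalized MOTA at one recall threshold r, clipped at 0."""
    if num_gt <= 0 or recall <= 0:
        raise ValueError("num_gt and recall must be positive")
    # MOTAR_r = max(0, 1 - (IDS_r + FP_r + FN_r - (1 - r) * P) / (r * P))
    return max(0.0, 1.0 - (ids + fp + fn - (1.0 - recall) * num_gt) / (recall * num_gt))


def amota(ids: Sequence[int], fp: Sequence[int], fn: Sequence[int], num_gt: int) -> float:
    """Average MOTAR over thresholds r = 1/L, 2/L, ..., 1 (L = len(ids)).

    ids[i], fp[i], fn[i] are the counts measured at recall threshold (i + 1) / L.
    """
    num_thresholds = len(ids)
    if num_thresholds == 0 or not (len(fp) == len(fn) == num_thresholds):
        raise ValueError("need one (IDS, FP, FN) triple per recall threshold")
    total = 0.0
    for i in range(num_thresholds):
        r = (i + 1) / num_thresholds
        total += motar(r, ids[i], fp[i], fn[i], num_gt)
    return total / num_thresholds
```

The `(1 - r) * P` term cancels the misses that are unavoidable at recall r: a tracker whose only errors at each threshold are those misses gets a MOTAR of 1 everywhere, and therefore an AMOTA of 1.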

Copyright © 2023, NVIDIA Corporation. All rights reserved.

This work is made available under the Nvidia Source Code License-NC. Click here to view a copy of this license.

The pre-trained models are shared under CC-BY-NC-SA-4.0. If you remix, transform, or build upon the material, you must distribute your contributions under the same license as the original.

For business inquiries, please visit our website and submit the form: NVIDIA Research Licensing.

If this work is helpful for your research, please consider citing:

```bibtex
@inproceedings{li2023end,
  title={End-to-end 3D Tracking with Decoupled Queries},
  author={Li, Yanwei and Yu, Zhiding and Philion, Jonah and Anandkumar, Anima and Fidler, Sanja and Jia, Jiaya and Alvarez, Jose},
  booktitle={IEEE/CVF International Conference on Computer Vision (ICCV)},
  year={2023}
}
```

We would like to thank the authors of DETR3D, MUTR3D, UVTR, PETR, and BEVStereo for their open-source release.