De-An Huang, Zhiding Yu, Anima Anandkumar

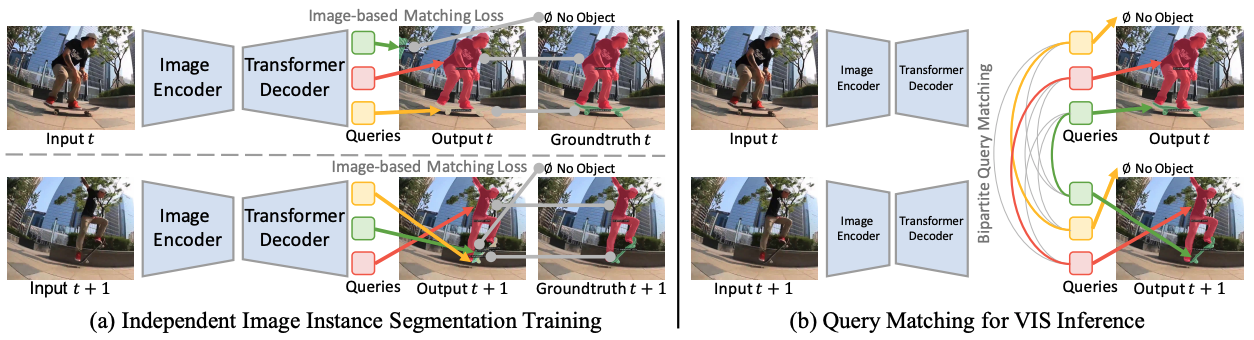

- Video instance segmentation by only training an image instance segmentation model.

- Support major video instance segmentation datasets: YouTubeVIS 2019/2021, Occluded VIS (OVIS).

See installation instructions.

See Preparing Datasets for MinVIS.

See Getting Started with MinVIS.

Trained models are available for download in the MinVIS Model Zoo.

The majority of MinVIS is made available under the Nvidia Source Code License-NC. The trained models in the MinVIS Model Zoo are made available under the CC BY-NC-SA 4.0 License.

Portions of the project are available under separate license terms: Mask2Former is licensed under a MIT License. Swin-Transformer-Semantic-Segmentation is licensed under the MIT License, Deformable-DETR is licensed under the Apache-2.0 License.

@inproceedings{huang2022minvis,

title={MinVIS: A Minimal Video Instance Segmentation Framework without Video-based Training},

author={De-An Huang and Zhiding Yu and Anima Anandkumar},

journal={NeurIPS},

year={2022}

}This repo is largely based on Mask2Former (https://github.com/facebookresearch/Mask2Former).