PyTorch implementations of the paper 'Convolutional Tensor-Train LSTM for Spatio-Temporal Learning' (NeurIPS 2020). [project page]

- code/ (original): The original implementation of the paper.

- code_opt/ (optimized): An optimized implementation that accelerates training.

Copyright (c) 2020 NVIDIA Corporation. All rights reserved. This work is licensed under an NVIDIA Open Source Non-commercial license.


Moving-MNIST-2

- Generator [link]

- Save the output of `generate_moving_mnist(...)` in npz format: `np.savez("moving-mnist-train.npz", data)`
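The save step above can be sketched end to end as follows. This is a minimal sketch, not part of the repository: the array `data` is a hypothetical random stand-in for the output of `generate_moving_mnist(...)`, and its shape `(num_sequences, frames, height, width) = (8, 20, 64, 64)` and `uint8` dtype are assumptions for illustration only. It shows that an array passed positionally to `np.savez` is stored under the key `"arr_0"`, which is the key you would read it back with.

```python
import os
import tempfile

import numpy as np

# Hypothetical stand-in for generate_moving_mnist(...) output.
# Assumed shape (num_sequences, frames, height, width) and dtype uint8.
rng = np.random.default_rng(0)
data = rng.integers(0, 256, size=(8, 20, 64, 64), dtype=np.uint8)

path = os.path.join(tempfile.gettempdir(), "moving-mnist-train.npz")

# An array passed positionally to np.savez is stored under the key "arr_0".
np.savez(path, data)

# Load it back; the NpzFile returned by np.load works as a context manager.
with np.load(path) as archive:
    loaded = archive["arr_0"]

print(loaded.shape, loaded.dtype)
```

If a data loader expects a named key instead, a keyword argument such as `np.savez(path, data=data)` stores the array under `"data"`; which key the loaders in `code/` and `code_opt/` read is not stated here.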

KTH action

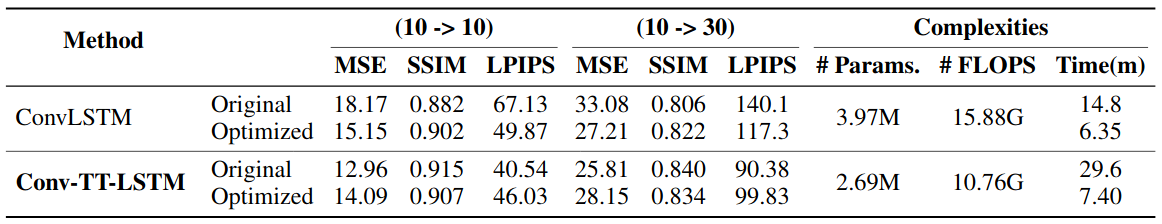

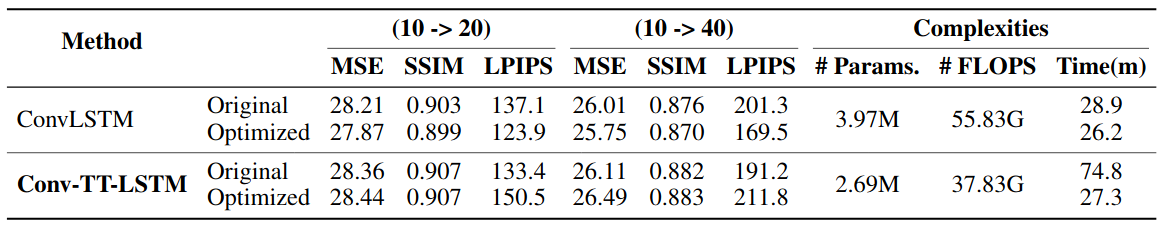

Higher PSNR/SSIM and lower MSE/LPIPS values indicate better prediction results. "# of FLOPs" denotes the number of multiplications needed for a one-step prediction of a single sample, and "Time(m)" denotes the wall-clock time (in minutes) needed to train the model for one epoch (10,000 samples).
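To make the direction of the MSE and PSNR metrics concrete, here is a minimal sketch of both, assuming frames scaled to [0, 1] (so the peak value is 1.0) and MSE taken as the mean over all pixels. These assumptions are illustrative only: the repository's exact evaluation convention (pixel range, per-frame sum vs. mean, averaging over predicted time steps) is not stated here, and SSIM and LPIPS are not covered. The helper names `mse` and `psnr` are hypothetical.

```python
import numpy as np


def mse(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean squared error over all pixels (lower is better)."""
    diff = pred.astype(np.float64) - target.astype(np.float64)
    return float(np.mean(diff ** 2))


def psnr(pred: np.ndarray, target: np.ndarray, max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(max_val^2 / MSE).

    Higher is better; identical inputs give infinity.
    Assumes pixel values lie in [0, max_val].
    """
    err = mse(pred, target)
    if err == 0.0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / err))


# Hypothetical example: 4 frames of 64x64 pixels, prediction off by 0.1 everywhere.
target = np.zeros((4, 64, 64))
pred = target + 0.1
print(mse(pred, target), psnr(pred, target))
```

Because PSNR is a decreasing function of MSE, the two metrics always rank predictions the same way for a fixed pixel range, which is why higher PSNR and lower MSE both indicate better results.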

This code was written by Wonmin Byeon (wbyeon@nvidia.com) and Jiahao Su (jiahaosu@terpmail.umd.edu).