Training a million-parameter language model on an AWS Habana EC2 instance with Gaudi accelerators to help people in a war zone cope with mental health issues

Since Russia launched a full-scale military invasion of Ukraine on February 24, 2022, fighting has caused over one hundred civilian casualties and pushed tens of thousands of Ukrainians to flee to neighboring countries, including Poland. During war, people can be exposed to many different traumatic events. That raises the chances of developing mental health problems, such as post-traumatic stress disorder (PTSD), anxiety and depression, and of poorer life outcomes as adults. Sometimes all they need is someone to talk to, someone to make them feel better and someone to share their feelings with.

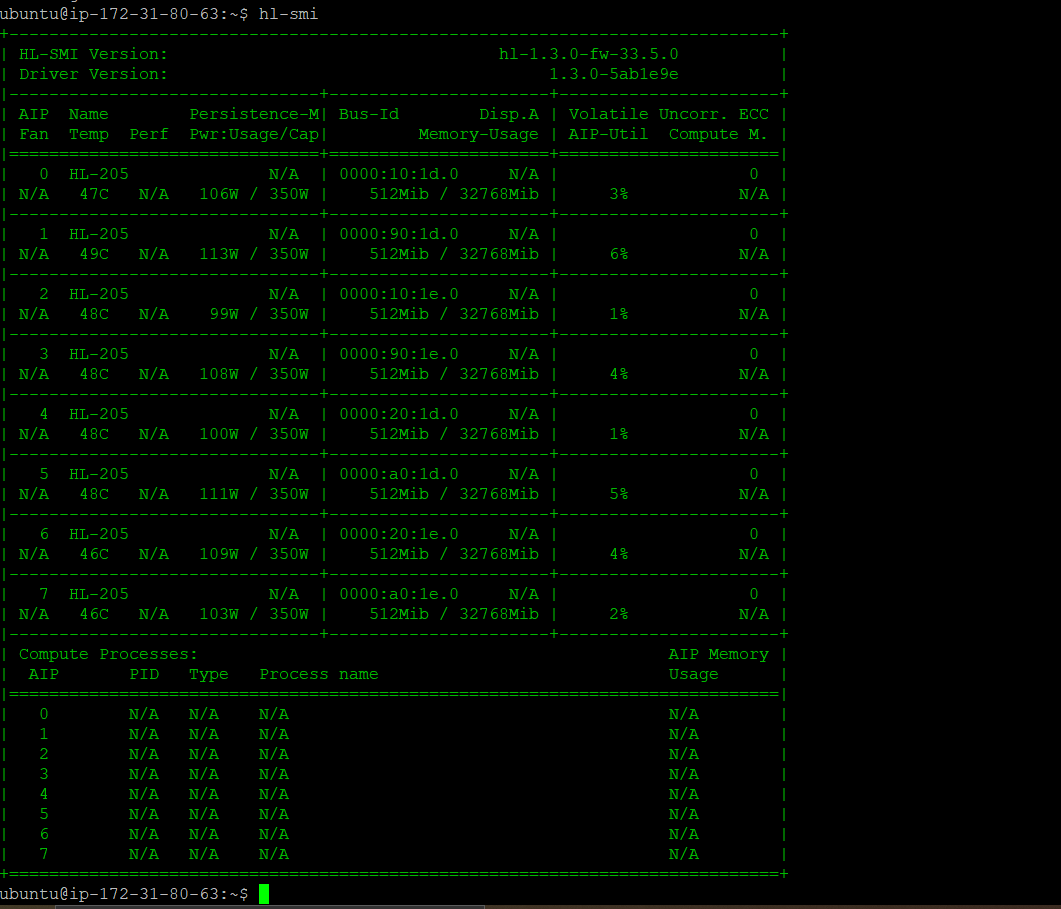

- Select the Habana base AMI, which contains the OS image, the Habana driver and the SynapseAI software stack.

- After launching the EC2 instance, install PyTorch by following Habana's installation instructions.

- Install Jupyter Notebook as root:

`sudo pip install jupyter notebook`

- Clone this repository and change into it:

`git clone https://github.com/kishorkuttan/language-model-training-on-habana.git`

`cd language-model-training-on-habana`

- Add an AWS inbound rule that opens port 8888, then start Jupyter:

`sudo jupyter notebook --ip=* --allow-root`

- Go to `http://your_instance_public_ipv4:8888`

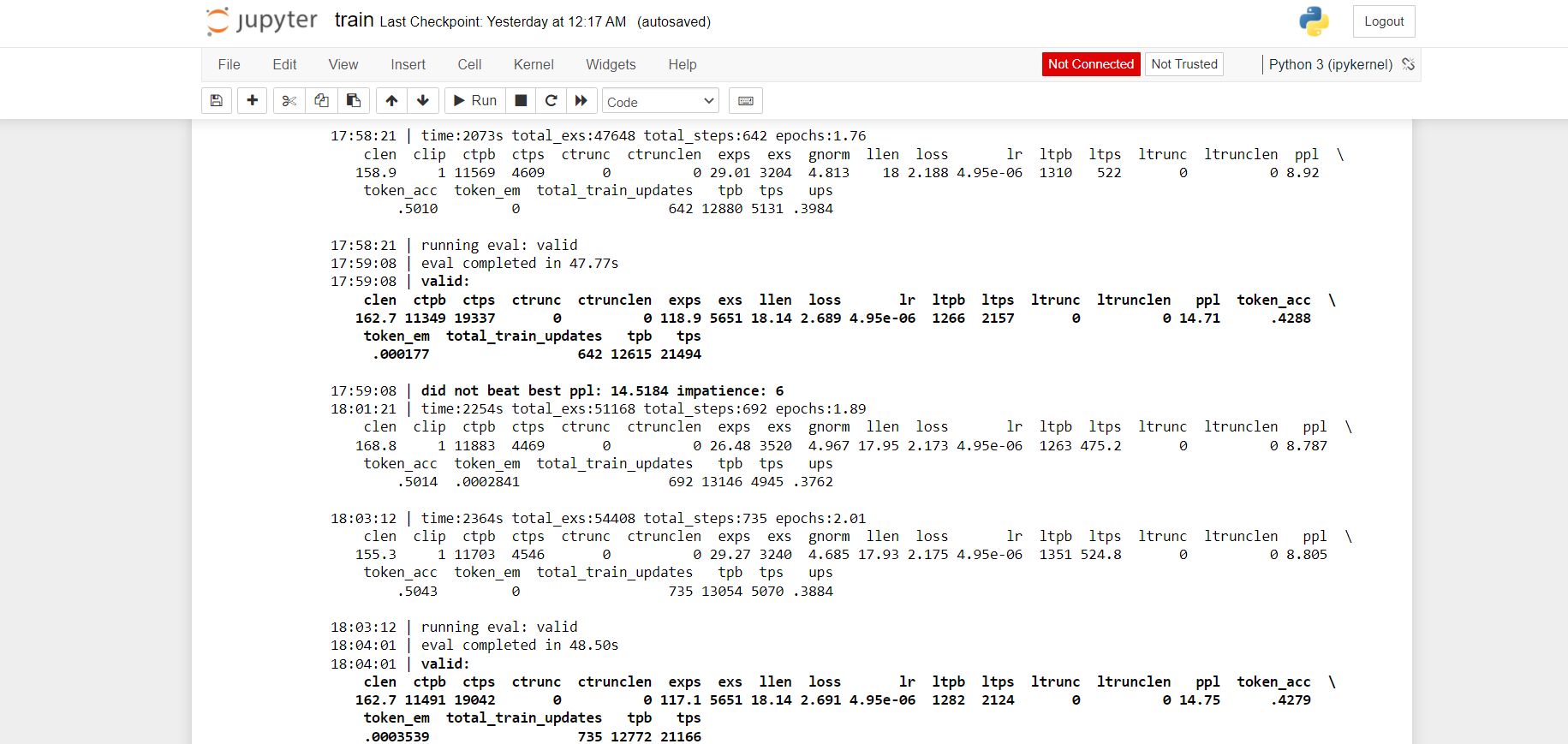

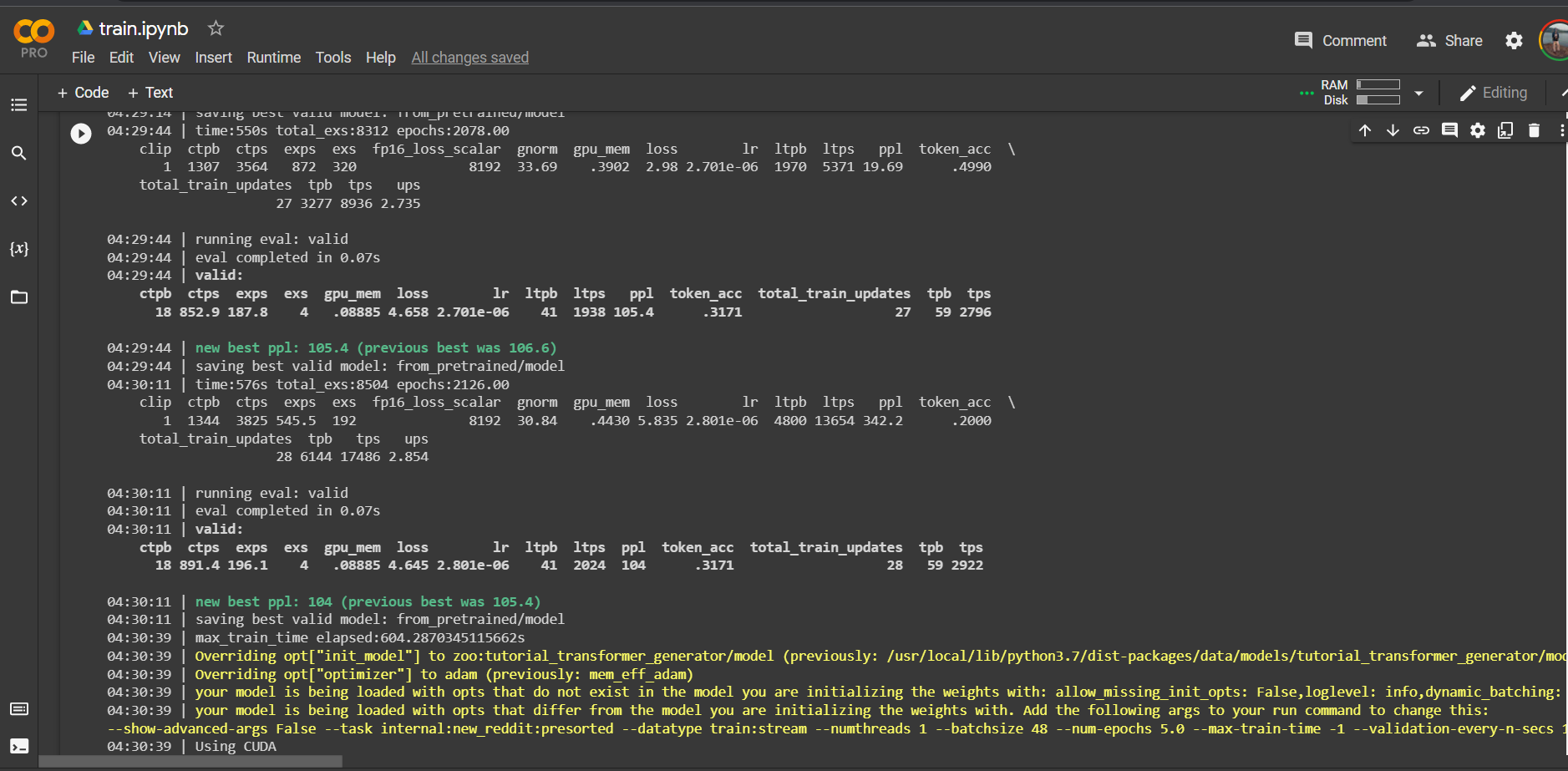

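- Run `train.ipynb`. The notebook trains on short conversations written as `text`/`labels` pairs: `text` is the user's message, `labels` is the response the model should learn to give, and `episode_done=True` marks the end of a conversation.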
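The conversation lines that follow look like ParlAI's plain-text dialogue format, where each line is one turn and the fields are separated by tabs (the tabs appear to have been flattened into spaces in this post; treating the file as ParlAI format is an assumption, not something the repository confirms here). A minimal Python sketch of reading such a file into conversations, accepting both `episode_done:True` (ParlAI's usual spelling) and `episode_done=True` (as written in this post). `parse_line` and `parse_episodes` are hypothetical helper names:

```python
from typing import Dict, List

Turn = Dict[str, str]


def parse_line(line: str) -> Turn:
    """Parse one tab-separated line of 'key:value' (or 'key=value') fields."""
    turn: Turn = {}
    for field in line.split("\t"):
        # Split on whichever separator (':' or '=') appears first in the field,
        # so a value such as "state of war: the Russo-Ukrainian War" keeps its colon.
        positions = [i for i in (field.find(":"), field.find("=")) if i != -1]
        if not positions:
            continue  # skip empty or malformed fields
        cut = min(positions)
        turn[field[:cut].strip()] = field[cut + 1:].strip()
    return turn


def parse_episodes(raw: str) -> List[List[Turn]]:
    """Group turns into conversations, closing one when episode_done == 'True'."""
    episodes: List[List[Turn]] = []
    current: List[Turn] = []
    for line in raw.splitlines():
        if not line.strip():
            continue
        turn = parse_line(line)
        current.append(turn)
        if turn.get("episode_done") == "True":
            episodes.append(current)
            current = []
    if current:  # keep a trailing conversation that lacks episode_done
        episodes.append(current)
    return episodes


sample = (
    "text:I am feeling really sad?\tlabels:why are you feeling sad?\n"
    "text:thank you\tlabels:you are welcome. Hope everything works fine.\n"
    "text:bye\tlabels:bye.\tepisode_done=True\n"
)
episodes = parse_episodes(sample)
print(len(episodes), len(episodes[0]))  # 1 3
print(episodes[0][0]["labels"])  # why are you feeling sad?
```

Splitting on the first separator only, rather than on every colon, matters for longer responses such as the one about the Russo-Ukrainian War, whose text itself contains a colon.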

text:I am feeling really sad? labels:why are you feeling sad?

text:A war is going on here in Ukrain? labels:yes, I know. Russia and Ukraine have had no formal diplomatic relations since 24 February 2022. The Russian Federation and Ukraine are currently in a state of war: the Russo-Ukrainian War began in 2014 following the Russian annexation of Crimea from Ukraine. In February 2022, Russia invaded Ukraine across a broad front.

text:Indeed. When will it end labels:Hope it end soon

text:I am feeling really scared labels:Don't be scared. Be strong and be brave

text:How can I feel secured, feeling so insecured now labels:I am here for you. Don't feel scared. We will get through it.

text:I am in a bunker now labels:That is scary. But things will be better soon. Don't lose hope.

text:thank you labels:you are welcome. Hope everything works fine.

text:bye labels:bye. episode_done=True

Open-domain chatbots are the need of the hour. Most enterprise chatbots are closed-domain chatbots: they cannot follow deep conversational context and have no persona. The main difference between closed-domain and open-domain chatbots is that open-domain ones can understand complex sentences and sustain long conversations. The framework used here is called Blender, Facebook AI's open-domain chatbot. It has three distinctive features:

1. Consistent persona throughout the conversation.

2. Empathetic conversation.

3. Factual knowledge, e.g. from Wikipedia.

Blender is built on two key ingredients:

1. Blended Skill Talk (BST)

2. Generation strategy

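BST focuses on blending skills: understanding the user's emotions, bringing in knowledge, and switching between tasks as the user's conversation requires, for example from serious to funny. Generation strategy concerns how responses are decoded from the trained network. During training, the network minimizes perplexity, which measures how well the model predicts the next words; however, two models with similar perplexity can produce very different responses depending on the decoding strategy. Response length matters in particular: short responses can come across as dull, while overly long ones can come across as arrogant. An optimal beam length is therefore chosen, with a value between 1 and 3.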
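The two quantities in this paragraph can be made concrete with a small, framework-agnostic Python sketch. Perplexity is the exponential of the average negative log-likelihood the model assigns to the reference tokens. Blocking the end-of-sequence token until a minimum length is reached is one common way to keep decoded responses from being too short; the helpers `perplexity` and `apply_min_length` are hypothetical illustrations, not ParlAI's or Blender's implementation:

```python
import math
from typing import List


def perplexity(token_log_probs: List[float]) -> float:
    """exp of the mean negative log-likelihood of the reference tokens."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


def apply_min_length(logits: List[float], eos_id: int, cur_len: int, min_len: int) -> List[float]:
    """Block the end-of-sequence token until at least `min_len` tokens exist."""
    if cur_len >= min_len:
        return logits
    masked = list(logits)  # copy so the caller's scores are untouched
    masked[eos_id] = float("-inf")
    return masked


# A model that gives every reference token probability 0.25 is, on average,
# "choosing among 4 options", so its perplexity is 4.
print(round(perplexity([math.log(0.25)] * 4), 6))  # 4.0

# Greedy step where EOS (id 2) has the top score: it is suppressed before min_len.
scores = [1.0, 2.0, 3.0]
early = apply_min_length(scores, eos_id=2, cur_len=1, min_len=5)
print(early.index(max(early)))  # 1
late = apply_min_length(scores, eos_id=2, cur_len=5, min_len=5)
print(late.index(max(late)))  # 2
```

The same masking step can be applied inside beam search to every hypothesis before the top candidates are selected.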

Blender comes in three types of transformer-based architectures:

1. Retriever - Given the dialogue history, scores a fixed set of candidate responses and returns the best next dialogue turn, e.g. the Poly-encoder.

2. Generative - A seq2seq architecture generates responses instead of picking them from a fixed set.

3. Retrieve-and-refine - An approach that reads or accesses external knowledge beyond what is embedded in the model. It is further divided into:

- dialogue retrieval

- knowledge retrieval
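The retriever idea (score every candidate in a fixed set against the dialogue history and return the highest-scoring one) can be sketched as follows. A real Poly-encoder encodes context and candidates with transformers and attends over several context codes; here a bag-of-words dot product stands in for the learned encoders, so `encode`, `score` and `retrieve` are hypothetical names that illustrate only the structure:

```python
import re
from collections import Counter
from typing import List


def encode(text: str) -> Counter:
    """Hypothetical stand-in encoder: bag-of-words counts instead of a transformer."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def score(context: Counter, candidate: Counter) -> int:
    """Dot product of two sparse count vectors (missing words count as 0)."""
    return sum(count * candidate[word] for word, count in context.items())


def retrieve(context: str, candidates: List[str]) -> str:
    """Score every candidate against the context and return the best one."""
    ctx = encode(context)
    return max(candidates, key=lambda cand: score(ctx, encode(cand)))


candidates = [
    "you are welcome. Hope everything works fine.",
    "Don't be scared. Be strong and be brave",
    "Hope it end soon",
]
print(retrieve("I am feeling really scared", candidates))
# Don't be scared. Be strong and be brave
```

Because a retriever can only return responses from its candidate set, its output is always fluent human-written text, but it cannot say anything the set does not already contain.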

In dialogue retrieval, a retrieval model first selects a response for the current utterance. That response is then appended to the generator's input sequence, separated by a special separator token, and the generator produces the final reply. Because retrieved responses are human-written, they tend to contain more vibrant language than the high-probability utterances a generator produces on its own. Knowledge retrieval instead retrieves from a large knowledge base; for this, the authors used the model proposed for the Wizard of Wikipedia task.
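The dialogue-retrieval flow described in this paragraph (retrieve first, then condition the generator on the history plus the retrieved response) can be sketched as plain data plumbing. The separator string `[RETRIEVED]`, the helpers and the toy models are all hypothetical; the real special token depends on the model's vocabulary:

```python
from typing import Callable, List

# Hypothetical separator string; the real special token is model-specific.
SEP = "[RETRIEVED]"


def build_generator_input(history: List[str], retrieved: str, sep: str = SEP) -> str:
    """Append the retrieved response to the dialogue history, behind a separator."""
    return "\n".join(history) + f" {sep} " + retrieved


def retrieve_and_refine(
    history: List[str],
    retrieve: Callable[[List[str]], str],
    generate: Callable[[str], str],
) -> str:
    """Retrieve a candidate response first, then let the generator refine it."""
    retrieved = retrieve(history)
    return generate(build_generator_input(history, retrieved))


def toy_retriever(history: List[str]) -> str:
    """Hypothetical stand-in for a trained retrieval model."""
    return "That is scary."


def toy_generator(text: str) -> str:
    """Hypothetical stand-in for a seq2seq generator: echoes what it was given."""
    return f"<generated from: {text!r}>"


history = ["I am feeling really scared", "I am in a bunker now"]
print(build_generator_input(history, "That is scary."))
print(retrieve_and_refine(history, toy_retriever, toy_generator))
```

Keeping retrieval and generation behind two callables makes it easy to swap the dialogue retriever for a knowledge retriever without touching the generator side.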

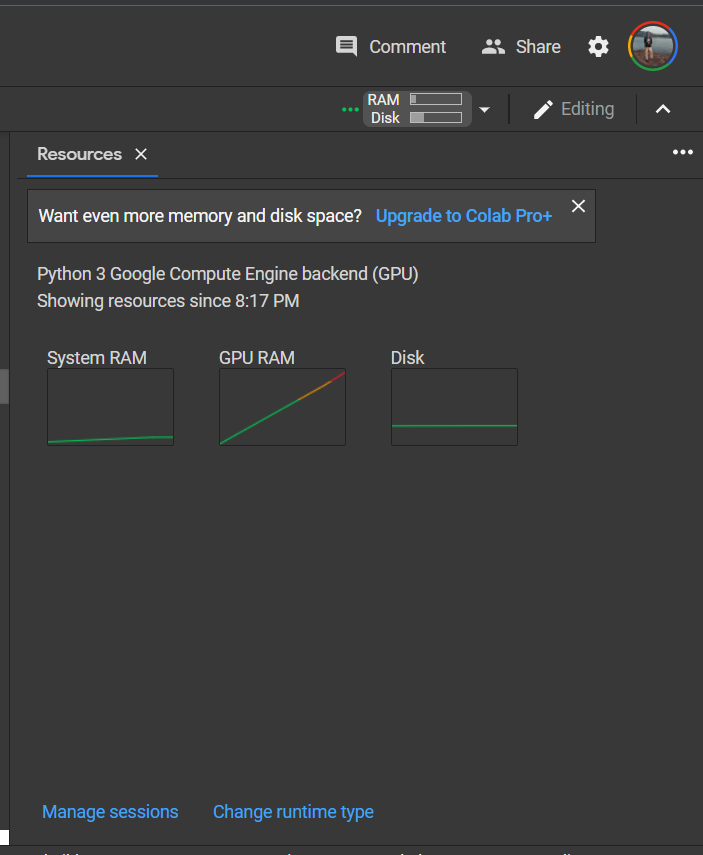

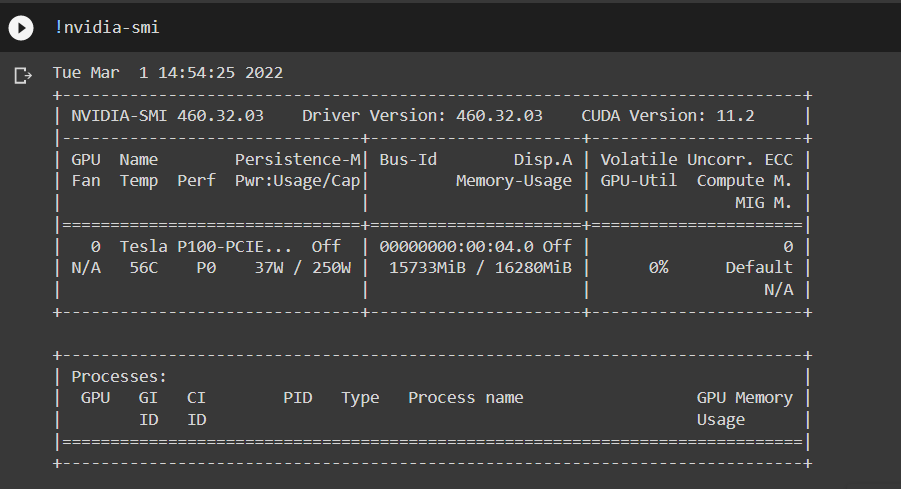

After benchmarking and closely monitoring performance, we found that the Habana Gaudi AWS instance performed far better than Colab Pro.
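The comparison above is reported qualitatively. One way to make such a comparison reproducible is to time the same training step on both platforms and report throughput. A minimal, framework-agnostic sketch; `measure_throughput` and `dummy_step` are hypothetical names, and the warm-up and step counts are arbitrary defaults:

```python
import time
from typing import Callable


def measure_throughput(
    step_fn: Callable[[], None], batch_size: int, n_steps: int = 20, warmup: int = 3
) -> float:
    """Return samples per second over `n_steps` calls of `step_fn`.

    Warm-up calls run first and are not timed, because the first iterations on
    an accelerator typically include one-off costs such as graph compilation.
    `step_fn` must block until the step has actually finished on the device;
    otherwise asynchronous execution makes the timing look too good.
    """
    for _ in range(warmup):
        step_fn()
    start = time.perf_counter()
    for _ in range(n_steps):
        step_fn()
    elapsed = time.perf_counter() - start
    return n_steps * batch_size / elapsed


def dummy_step() -> None:
    """Hypothetical stand-in for one training step (forward, backward, optimizer)."""
    time.sleep(0.01)


print(f"{measure_throughput(dummy_step, batch_size=8):.0f} samples/s")
```

Using the same batch size, sequence length and step count on both platforms keeps the samples-per-second figures directly comparable.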

ParlAI: a unified platform for sharing, training and evaluating dialogue models across many tasks.