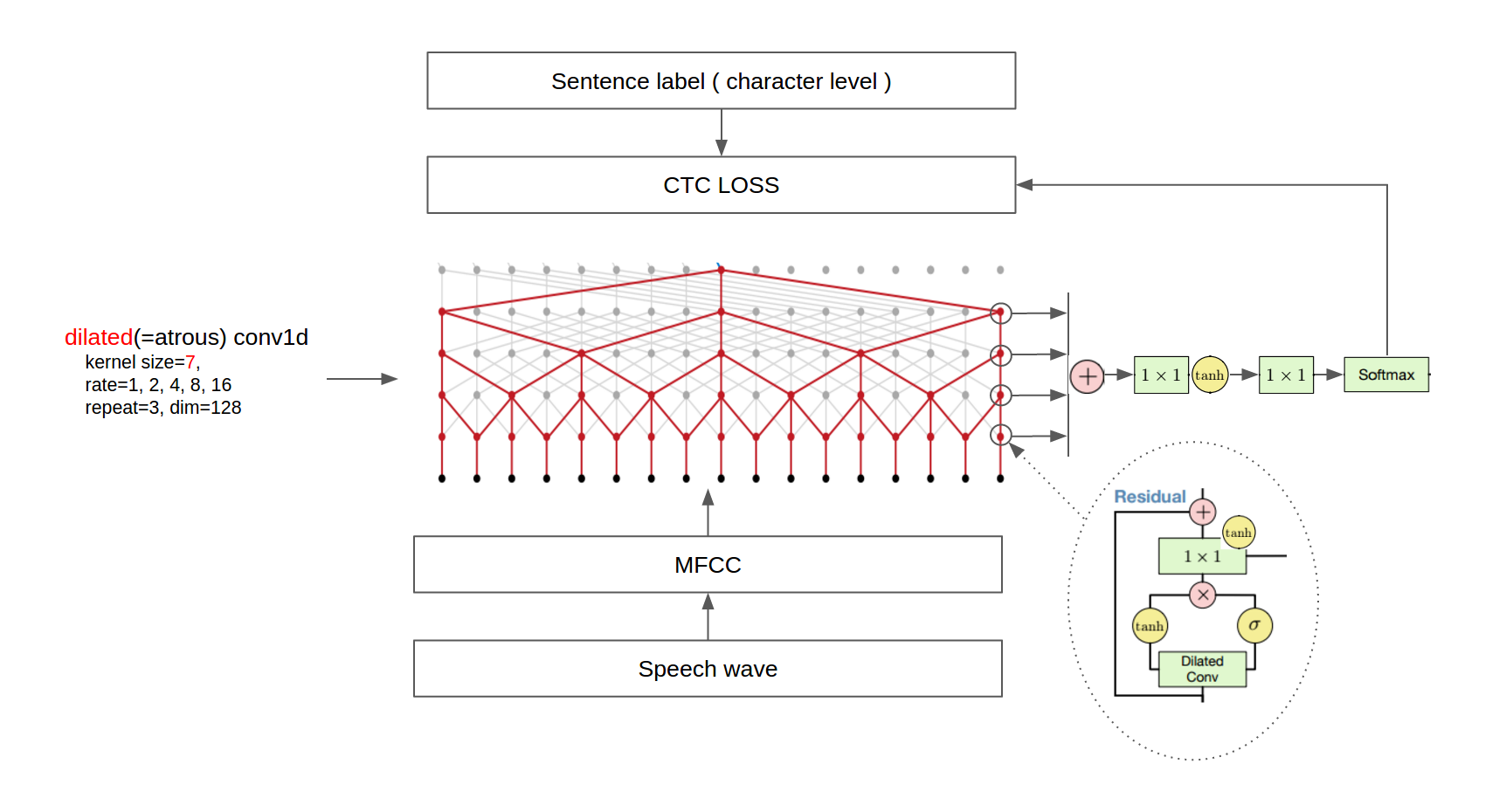

Python and C++ implementation of end-to-end, sentence-level speech recognition based on DeepMind's research on audio processing and synthesis. It builds on WaveNet: A Generative Model for Raw Audio, in which DeepMind proposed a neural network architecture that generates human-like audio from text; the same architecture can also perform speech-to-text. This repo provides a speech-to-text implementation of WaveNet: the model takes MFCC features (derived from the mel spectrogram) as input and produces text as output, using WaveNet followed by a beam search decoder.
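For readers new to the input features: MFCCs are computed by measuring the energy in overlapping triangular bands spaced evenly on the mel scale, taking the log of each band energy, and applying a DCT. The sketch below shows only the mel-spacing step, using the common HTK formula m = 2595 log10(1 + f/700). It is an illustration, not this repo's feature pipeline: librosa's mel filters default to the Slaney variant of the scale (`htk=False`), so its band edges differ, and the function names here are hypothetical.

```python
import math


def hz_to_mel(f_hz):
    """HTK-style mel scale: m = 2595 * log10(1 + f / 700)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(m):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_band_edges(n_bands, f_min, f_max):
    """Return n_bands + 2 frequencies (Hz) equally spaced on the mel scale.

    Triangular filter i spans edges[i]..edges[i + 2] and peaks at edges[i + 1].
    """
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_bands + 1)
    return [mel_to_hz(lo + k * step) for k in range(n_bands + 2)]


if __name__ == "__main__":
    edges = mel_band_edges(n_bands=10, f_min=0.0, f_max=8000.0)
    # Equal steps in mel become wider steps in Hz as frequency rises.
    print([round(f) for f in edges])
```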

If you want to modify or experiment with the WaveNet architecture, go to the `core` directory and refer to the `README.md` there.
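For orientation before diving into `core`: WaveNet's basic building block is a stack of dilated causal convolutions. Each layer reads inputs `dilation` samples apart and never looks at future samples, so doubling the dilation per layer grows the receptive field exponentially. The minimal single-channel sketch below assumes a kernel size of 2 and dilations 1, 2, 4 purely for illustration; the actual model adds multiple channels, gated activations, and residual and skip connections, and its hyperparameters are whatever `core` defines.

```python
def causal_dilated_conv(x, weights, dilation):
    """1-D causal convolution: y[t] = sum_i weights[i] * x[t - i * dilation].

    Samples before the start of the signal are treated as zero, so y[t]
    never depends on inputs later than t.
    """
    y = []
    for t in range(len(x)):
        acc = 0.0
        for i, w in enumerate(weights):
            j = t - i * dilation
            if j >= 0:
                acc += w * x[j]
        y.append(acc)
    return y


def receptive_field(kernel_size, dilations):
    """Number of input samples a single output of the stack depends on."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


if __name__ == "__main__":
    # Push a unit impulse through three layers (kernel size 2, dilations 1, 2, 4)
    # and count how many outputs it reaches: that count is the receptive field.
    signal = [1.0] + [0.0] * 15
    for d in (1, 2, 4):
        signal = causal_dilated_conv(signal, [1.0, 1.0], d)
    print(sum(v != 0.0 for v in signal), receptive_field(2, [1, 2, 4]))  # prints: 8 8
```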

To build the C++ API, you have to build TensorFlow from source, along with its dependencies, as a monolithic shared library, and make sure the headers are properly exported. If you don't want to build TensorFlow yourself, you can use the prebuilt shared libraries from FloopCZ/tensorflow_cc. I recommend building from source because the result is compiled for your hardware. Prebuilt shared libraries can cause segmentation faults and illegal-instruction errors, because they may have been compiled with a different version of gcc and with hardware optimizations that your processor lacks.
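One way to see whether the illegal-instruction risk applies to you is to compare the instruction-set extensions your CPU reports with the ones a prebuilt library was compiled for. The sketch below is Linux-only (it parses `/proc/cpuinfo`), and the `{"avx", "avx2", "fma"}` set is a hypothetical example: the extensions a given prebuilt `libtensorflow_cc` actually needs depend on the compiler flags it was built with.

```python
import os


def cpu_flags(cpuinfo_text):
    """Collect CPU feature flags from the text of /proc/cpuinfo (Linux)."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # x86 kernels report "flags"; ARM kernels report "Features".
        if sep and key.strip() in ("flags", "Features"):
            flags.update(value.split())
    return flags


def missing_cpu_flags(cpuinfo_text, required):
    """Return, sorted, the required flags this CPU does not report."""
    return sorted(set(required) - cpu_flags(cpuinfo_text))


if __name__ == "__main__":
    # Example set only (hypothetical): check against the flags your
    # prebuilt library was actually compiled with.
    wanted = {"avx", "avx2", "fma"}
    if os.path.exists("/proc/cpuinfo"):
        with open("/proc/cpuinfo") as f:
            missing = missing_cpu_flags(f.read(), wanted)
        print("missing CPU extensions:", missing or "none")
```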

- Install bazel and clone the tensorflow repository, then run the `configure` script and answer its questions carefully.

- Build `libtensorflow_cc`:

  ```bash
  bazel build -c opt --config monolithic //tensorflow:libtensorflow_cc.so
  ```

- Export the headers:

  ```bash
  bazel build -c opt --config monolithic //tensorflow:install_headers
  ```

- Install `pybind11` and the Python headers:

  ```bash
  sudo apt install python3-dev && pip3 install pybind11
  ```

- Go to `platform/build_env`.

- Make sure these environment variables are set properly:

  ```bash
  TENSORFLOW_CC_LIBS_PATH=${HOME}/Documents/installation/tensorflow/lib  # libtensorflow_cc.so* directory
  TENSORFLOW_CC_INCLUDE_PATH=${HOME}/Documents/installation/tensorflow/include  # tensorflow headers directory

  # misc: keep them default
  present_dir=$(pwd)
  SST_SOURCE_DIR=${present_dir}/../cc/src
  SST_INCLUDE_PATH=${present_dir}/../cc/include
  SST_PYTHON_PATH=${present_dir}/../wavenetstt/wavenetpy
  ```

- Build the WaveNet CPython module:

  ```bash
  ./tensorflow_env.sh python
  ```
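Before running `./tensorflow_env.sh python`, a quick sanity check of the paths can save a failed build. This hypothetical helper (not part of the repo) recomputes the `misc` defaults relative to `platform/build_env` and looks for `libtensorflow_cc.so*` in `TENSORFLOW_CC_LIBS_PATH`. It assumes the Python module lives in `platform/wavenetstt/wavenetpy`, the location given for `wavenetpy` in this README, and that `TENSORFLOW_CC_LIBS_PATH` is exported so the script can read it.

```python
import glob
import os


def default_build_paths(build_env_dir):
    """Mirror the misc defaults, which are all relative to platform/build_env.

    Assumes the Python module lives in platform/wavenetstt/wavenetpy.
    """
    platform_dir = os.path.dirname(os.path.abspath(build_env_dir))
    return {
        "SST_SOURCE_DIR": os.path.join(platform_dir, "cc", "src"),
        "SST_INCLUDE_PATH": os.path.join(platform_dir, "cc", "include"),
        "SST_PYTHON_PATH": os.path.join(platform_dir, "wavenetstt", "wavenetpy"),
    }


def find_tensorflow_cc_libs(libs_path):
    """Return the libtensorflow_cc.so* files found in libs_path, sorted."""
    return sorted(glob.glob(os.path.join(libs_path, "libtensorflow_cc.so*")))


if __name__ == "__main__":
    # Run from platform/build_env, like tensorflow_env.sh.
    libs = os.environ.get("TENSORFLOW_CC_LIBS_PATH", "")
    found = find_tensorflow_cc_libs(libs) if libs else []
    print("libtensorflow_cc:", found or "NOT FOUND")
    for name, path in default_build_paths(os.getcwd()).items():
        status = "ok" if os.path.isdir(path) else "missing"
        print(f"{name}={path} ({status})")
```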

The WaveNet Python module, `wavenetpy`, is located at `platform/wavenetstt` and requires the WaveNet CPython shared library. Because a static build is not implemented yet, this shared library links dynamically against `libtensorflow_cc` at runtime, so make sure you export a proper `LD_LIBRARY_PATH`:

```bash
TENSORFLOW_CC_LIBS_PATH=${HOME}/Documents/installation/tensorflow/lib
export LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:${TENSORFLOW_CC_LIBS_PATH}
```

Install the requirements (numpy and librosa):

```bash
pip3 install -r requirements.txt
```
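Before running the example, a small pre-flight check can catch the two setup problems described above: missing Python requirements and a `libtensorflow_cc` directory that is not on `LD_LIBRARY_PATH`. This is a hypothetical helper, not part of the repo. It reads `TENSORFLOW_CC_LIBS_PATH` from the environment, so that variable must be exported for the check to see it (a plain assignment sets it only in the current shell).

```python
import importlib.util
import os


def missing_modules(names=("numpy", "librosa")):
    """Return the module names from `names` that cannot be imported."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def on_ld_library_path(lib_dir, ld_library_path):
    """True if lib_dir is one of the colon-separated LD_LIBRARY_PATH entries."""
    # Empty entries (e.g. from a leading ':') are skipped here.
    entries = [os.path.abspath(e) for e in ld_library_path.split(":") if e]
    return os.path.abspath(lib_dir) in entries


if __name__ == "__main__":
    print("missing Python packages:", missing_modules() or "none")
    lib_dir = os.environ.get("TENSORFLOW_CC_LIBS_PATH", "")
    ld_path = os.environ.get("LD_LIBRARY_PATH", "")
    ok = bool(lib_dir) and on_ld_library_path(lib_dir, ld_path)
    print("TENSORFLOW_CC_LIBS_PATH on LD_LIBRARY_PATH:", ok)
```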

Example: running speech recognition

```python
from wavenetpy import WavenetSTT

# Load the model
wavenet = WavenetSTT('../../pb/wavenet-stt.pb')

# Pass the audio file to transcribe
result = wavenet.infer_on_file('test.wav')

print(result)
```

TODO and Roadmap:

- Build a static library to avoid dynamically linking with `libtensorflow_cc`.

- Replace `librosa`, which is currently used for MFCC extraction, with a custom C++ implementation.

- Use a custom C++ `ctc_beam_search_decoder`, because it is not supported in TensorFlow Lite.

- Provide a Dockerfile.

- Add a TensorFlow Lite implementation for embedded devices and Android.

- Add TensorFlow.js support.

- Optimize the C++ code.

- Provide a CI/CD pipeline for the C++ build.

- Provide a way to access the MFCC buffer directly instead of copying it. This is not possible at the moment because of a limitation in the TensorFlow C++ API; in other words, add a custom memory allocator for tensors.
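The custom decoder item in the list above refers to CTC beam search over the model's per-frame output. As a rough, hypothetical sketch of what such a decoder does (not this repo's code and not TensorFlow's implementation), here is a minimal pure-Python CTC prefix beam search without a language model. It assumes the model emits one probability distribution per frame over the characters plus a blank; the function name, the `labels` layout, and the default blank index are assumptions. It multiplies plain probabilities for readability, whereas practical decoders work in log space to avoid underflow on long inputs.

```python
from collections import defaultdict


def ctc_prefix_beam_search(probs, labels, blank=0, beam_width=8):
    """Decode per-frame class probabilities with CTC prefix beam search.

    probs:  T rows, each a list of per-class probabilities (softmax output).
    labels: labels[i] is the symbol for class i (the blank entry is ignored).
    Returns (text, probability) for the most probable collapsed labelling.
    """
    # prefix -> [P(prefix, path ends in blank), P(prefix, path ends in non-blank)]
    beams = {(): [1.0, 0.0]}
    for frame in probs:
        nxt = defaultdict(lambda: [0.0, 0.0])
        for prefix, (p_b, p_nb) in beams.items():
            for c, p in enumerate(frame):
                if c == blank:
                    # A blank leaves the collapsed prefix unchanged.
                    nxt[prefix][0] += (p_b + p_nb) * p
                    continue
                extended = prefix + (c,)
                if prefix and prefix[-1] == c:
                    # A repeated symbol only starts a new character after a
                    # blank; otherwise it merges into the existing prefix.
                    nxt[extended][1] += p_b * p
                    nxt[prefix][1] += p_nb * p
                else:
                    nxt[extended][1] += (p_b + p_nb) * p
        # Keep only the beam_width most probable prefixes.
        ranked = sorted(nxt.items(), key=lambda kv: kv[1][0] + kv[1][1], reverse=True)
        beams = dict(ranked[:beam_width])
    best, (p_b, p_nb) = max(beams.items(), key=lambda kv: kv[1][0] + kv[1][1])
    return "".join(labels[c] for c in best), p_b + p_nb


if __name__ == "__main__":
    # Two frames over {blank, "a"}: picking the top class per frame gives
    # blank, blank -> "" (0.36), but the three paths that collapse to "a"
    # sum to about 0.64, so beam search returns "a".
    frames = [[0.6, 0.4], [0.6, 0.4]]
    print(ctc_prefix_beam_search(frames, labels="_a"))
```

Summing over every alignment that collapses to the same prefix is what lets beam search recover "a" in this example, where choosing the most likely class per frame would not.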

We welcome contributors, especially beginners. Contributors can:

- Raise issues

- Suggest features

- Fix issues and bugs

- Implement features listed in the TODO and Roadmap.

Credits:

- DeepMind

- buriburisuri for providing the pretrained checkpoint (ckpt) files and `wavenet.py`.

- kingscraft for the TensorFlow reference implementation.

- Stack Overflow and the TensorFlow docs