Authors: Navid Mohammadi Foumani, Geoffrey Mackellar, Soheila Ghane, Saad Irtza, Nam Nguyen, Mahsa Salehi

This work follows from a project with Emotiv Research, a bioinformatics research company based in Australia, and Emotiv, a global technology company specializing in the development and manufacturing of wearable EEG products.

EEG2Rep Paper: PDF

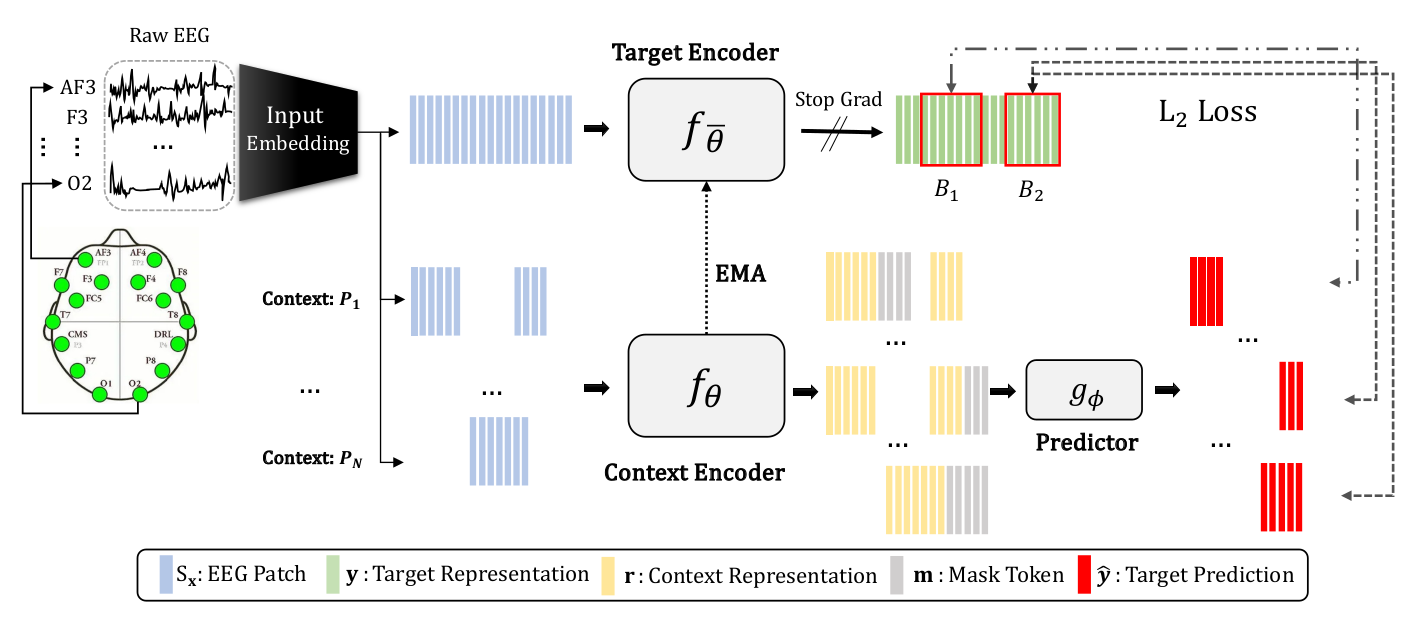

This is a PyTorch implementation of EEG2Rep: Enhancing Self-supervised EEG Representation Through Informative Masked Inputs.

- Emotiv: To download the Emotiv public datasets, please follow the link below to access the preprocessed datasets, which are split subject-wise into train and test sets. After downloading, copy the datasets to your `Dataset` directory.
- Temple University Datasets: Please use the following link to download and preprocess the TUEV and TUAB datasets.
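The preprocessed Emotiv datasets already come split, so the following is only an illustration of what "subject-wise" means: every sample from a given subject lands in either the train set or the test set, never both, so test accuracy reflects generalization to unseen people. This is a minimal NumPy sketch assuming a hypothetical per-sample array of subject IDs; the function name `subject_wise_split` and its `test_fraction` parameter are illustrative and not necessarily how the released datasets were produced.

```python
import numpy as np

def subject_wise_split(subject_ids, test_fraction=0.2, seed=0):
    """Split sample indices so that no subject appears in both train and test.

    subject_ids: 1-D array with the subject ID of each EEG sample (hypothetical input).
    Returns (train_idx, test_idx) as index arrays into subject_ids.
    """
    subject_ids = np.asarray(subject_ids)
    subjects = np.unique(subject_ids)          # one entry per distinct subject
    rng = np.random.default_rng(seed)
    rng.shuffle(subjects)                      # randomize which subjects go to test
    n_test = max(1, int(round(test_fraction * len(subjects))))
    test_mask = np.isin(subject_ids, subjects[:n_test])
    return np.flatnonzero(~test_mask), np.flatnonzero(test_mask)

# Usage with made-up labels: 5 subjects, 2 samples each; 2 subjects are held out.
ids = np.array([1, 1, 2, 2, 3, 3, 4, 4, 5, 5])
train_idx, test_idx = subject_wise_split(ids, test_fraction=0.4)
```

Splitting on subjects rather than on individual samples avoids leaking a subject's signal characteristics from training into evaluation.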

Instructions refer to Unix-based systems (e.g., Linux, macOS).

This code has been tested with Python 3.7 and 3.8.
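If you run a different interpreter, a small guard like the one below can flag it early. This is a hypothetical helper (`is_tested_python` is not part of the repository); it only warns, since other versions may still work but are untested.

```python
import sys
import warnings

# Versions the README reports as tested; others may work but are unverified.
TESTED_VERSIONS = {(3, 7), (3, 8)}

def is_tested_python(version_info=sys.version_info):
    """Return True if the major.minor version is one the README lists as tested."""
    return tuple(version_info[:2]) in TESTED_VERSIONS

if not is_tested_python():
    warnings.warn(
        f"Python {sys.version_info.major}.{sys.version_info.minor} is untested; "
        "EEG2Rep was tested with Python 3.7 and 3.8."
    )
```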

```
pip install -r requirements.txt
```

To see all command options with explanations, run:

```
python main.py --help
```

In main.py you can select the datasets and modify the model parameters.

For example:

```
self.parser.add_argument('--epochs', type=int, default=100, help='Number of training epochs')
```

Alternatively, you can set the parameters on the command line:

```
python main.py --epochs 100 --data_dir Dataset/Crowdsource
```
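The two routes interact through standard `argparse` behavior: the `default=` set in `main.py` applies unless a flag is passed, and a command-line flag overrides it. The sketch below is a hypothetical stand-in for the real parser; only the `--epochs` definition is copied from the example above, while the `--data_dir` definition (including its `None` default) is an assumption for illustration.

```python
import argparse

def build_parser():
    # Illustrative subset of options; the real parser in main.py defines many more.
    parser = argparse.ArgumentParser(description="EEG2Rep options (illustrative subset)")
    parser.add_argument('--epochs', type=int, default=100, help='Number of training epochs')
    # Assumed definition: the real default for --data_dir is not shown in this README.
    parser.add_argument('--data_dir', type=str, default=None, help='Path to the dataset directory')
    return parser

parser = build_parser()

# No flags: the defaults from add_argument are used.
defaults = parser.parse_args([])
# Flags override the defaults, as with `python main.py --epochs 50 --data_dir Dataset/Crowdsource`.
overridden = parser.parse_args(['--epochs', '50', '--data_dir', 'Dataset/Crowdsource'])
print(defaults.epochs, overridden.epochs)  # 100 50
```

Editing the default in `main.py` changes every run, whereas passing a flag changes only that invocation.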

If you find EEG2Rep useful for your research, please consider citing this paper using the following information:

```
@article{foumani2024eeg2rep,
  title={EEG2Rep: Enhancing Self-supervised EEG Representation Through Informative Masked Inputs},
  author={Foumani, Navid Mohammadi and Mackellar, Geoffrey and Ghane, Soheila and Irtza, Saad and Nguyen, Nam and Salehi, Mahsa},
  journal={arXiv preprint arXiv:2402.17772},
  year={2024}
}
```