This repository is the PyTorch implementation of our ACL 2022 [paper](https://arxiv.org/abs/2109.12814).

Source code for Investigating Non-local Features for Neural Constituency Parsing.

We inject non-local sub-tree structure features into a chart-based constituency parser by introducing two auxiliary training objectives.
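As a rough illustration of how two auxiliary objectives could be combined with the main parsing loss, here is a minimal sketch. It assumes a plain weighted sum, with weights corresponding to the `--pattern-loss-scale` and `--compatible-loss-scale` training flags; the function name `combined_loss` and its arguments are hypothetical and are not taken from the repository code.

```python
def combined_loss(parse_loss, pattern_loss, consistency_loss,
                  pattern_scale=1.0, consistency_scale=5.0):
    """Weighted sum of the main loss and two auxiliary losses.

    Assumption: the objectives are simply added after scaling.
    Works with plain floats or with tensors that support + and *.
    """
    return (parse_loss
            + pattern_scale * pattern_loss
            + consistency_scale * consistency_loss)


# Toy values, for illustration only.
total = combined_loss(parse_loss=2.0, pattern_loss=0.5, consistency_loss=0.1)
print(total)
```

With this form, setting a scale to zero would switch the corresponding auxiliary objective off without touching the main loss.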

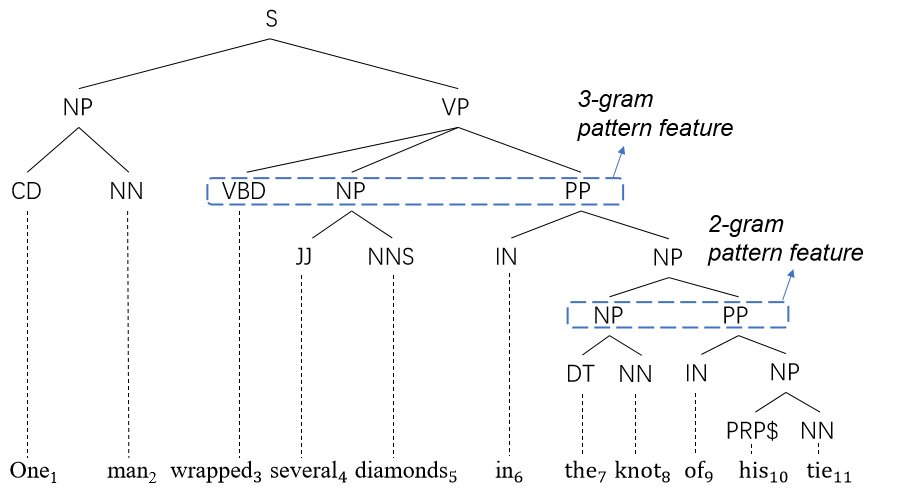

We define a pattern as the n-gram of constituents sharing the same parent, and ask the model to predict the pattern from the span representation. The consistency loss regularizes the co-occurrence between constituents and patterns by collecting corpus-level statistics.
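To make the pattern definition concrete, the sketch below collects every n-gram of sibling labels from a toy tree, together with the span each n-gram covers, and then counts them. The tree encoding, the helper name `extract_patterns`, the choice to treat POS tags as constituents, and the count-based filter are all assumptions made for illustration; they are not the repository's actual data structures.

```python
from collections import Counter

# Assumed encoding: an internal node is (label, [children]);
# a leaf is (pos_tag, word).
def extract_patterns(tree, n, start=0):
    """Return (end, patterns) for the subtree starting at token index `start`.

    `patterns` is a list of ((span_start, span_end), labels), one entry per
    n-gram of adjacent children under the same parent.
    """
    label, children = tree
    if isinstance(children, str):  # leaf: covers exactly one token
        return start + 1, []
    patterns, child_spans, pos = [], [], start
    for child in children:
        end, sub_patterns = extract_patterns(child, n, pos)
        patterns.extend(sub_patterns)
        child_spans.append((child[0], pos, end))
        pos = end
    # Slide a window of size n over the children of this node.
    for i in range(len(child_spans) - n + 1):
        window = child_spans[i:i + n]
        labels = tuple(lbl for lbl, _, _ in window)
        patterns.append(((window[0][1], window[-1][2]), labels))
    return pos, patterns


tree = ("S", [
    ("NP", [("DT", "The"), ("NN", "cat")]),
    ("VP", [("VBD", "sat"),
            ("PP", [("IN", "on"),
                    ("NP", [("DT", "the"), ("NN", "mat")])])]),
])

_, patterns = extract_patterns(tree, n=2)
counts = Counter(labels for _, labels in patterns)
# Keep only patterns seen at least `min_count` times (hypothetical filter).
min_count = 2
frequent = {p for p, c in counts.items() if c >= min_count}
print(patterns)
print(frequent)
```

In this toy tree the 2-gram `("DT", "NN")` occurs under both noun phrases, so it is the only pattern that survives a count threshold of 2. Counting over a whole treebank in the same way is one plausible reading of "corpus-level statistics".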

NFC is developed on the basis of the Self-Attentive Parser, whose code base is at https://github.com/nikitakit/self-attentive-parser.

Install the dependencies:

$ pip install -r requirement.txt

Evaluate a checkpoint on a test file:

$ python src/export test \
    --pretrained-model-path "bert-large-uncased" \
    --model-path path-to-the-checkpoint \
    --test-path path-to-the-test-file

For Chinese, add `--text-processing chinese` and change `--pretrained-model-path` accordingly.

We release our model checkpoints on Google Drive (models for PTB and for CTB5.1).

For English:

$ RANDOM_SEED=42
$ python src/main.py train \
    --train-path data/02-21.10way.clean \
    --dev-path data/22.auto.clean \
    --subbatch-max-tokens 500 \
    --use-pretrained --pretrained-model bert-large-uncased \
    --use-encoder --num-layers 2 \
    --max-consecutive-decays 10 --batch-size 64 \
    --use-pattern --pattern-num-threshold 5 --num-ngram "3" --pattern-loss-scale 1.0 \
    --use-compatible --compatible-loss-scale 5.0 \
    --numpy-seed ${RANDOM_SEED} \
    --model-path-base models/ptb_bert_tri-task_negative-5_scale-5_${RANDOM_SEED}

For Chinese:

$ RANDOM_SEED=42
$ python src/main.py train \
    --train-path data/ctb_5.1/ctb.train \
    --dev-path data/ctb_5.1/ctb.dev \
    --subbatch-max-tokens 500 \
    --use-pretrained --pretrained-model pretrained_models/bert-base-chinese \
    --max-consecutive-decays 10 --batch-size 32 \
    --use-pattern \
    --frequent-threshold 0.005 --num-ngram "2" --pattern-loss-scale 1.0 \
    --use-compatible --compatible-loss-scale 5.0 \
    --numpy-seed ${RANDOM_SEED} \
    --text-processing chinese --predict-tags \
    --model-path-base models/ctb_bert_tri-task_negative-5_scale-5_${RANDOM_SEED}

If you use this software for research, please cite our paper as follows:

@inproceedings{nfc,
    title = "Investigating Non-local Features for Neural Constituency Parsing",
    author = "Cui, Leyang and Yang, Sen and Zhang, Yue",
    booktitle = "Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics",
    year = "2022",
    publisher = "Association for Computational Linguistics",
}

The code in this repository is developed on the basis of the released code from https://github.com/mitchellstern/minimal-span-parser and https://github.com/nikitakit/self-attentive-parser.