1 University of Macau

2 Intellindust

3 Chongqing University of Posts and Telecommunications 4 Tencent AI Lab


CVPR 2024

This code was implemented with Python 3.6 and PyTorch 1.10.0. You can install all the requirements via:

    pip install -r requirements.txt

- Download the YouTube-VOS, DAVIS-16, FBMS, Long-Videos, MCL, and SegTrackV2 datasets. You can use the processed data provided by HFAN. The depth maps are obtained with MonoDepth2. We also provide the processed data here.

- Download the pre-trained MiT-b1 or Swin-Tiny backbone.

- Training:

    python train.py --config ./configs/train_sample.yaml

- Evaluation:

    python ttt_demo.py --config configs/test_sample.yaml --model model.pth --eval_type base

- Test-time training:

    python ttt_demo.py --config configs/test_sample.yaml --model model.pth --eval_type TTT-MWI

We provide our checkpoints here.
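The evaluation and test-time training commands above differ only in the value passed to `--eval_type`, so it can be convenient to run both from one small wrapper when comparing them on the same checkpoint. The following is a minimal sketch, not part of the repository: the helper names `build_eval_command`, `format_command`, and `main` are hypothetical, and only the two `--eval_type` values shown above are assumed (the script may accept others). By default it only prints the commands.

```python
import shlex
import subprocess
import sys

# The two modes shown in this README; other values are not assumed here.
EVAL_TYPES = ("base", "TTT-MWI")


def build_eval_command(eval_type, config="configs/test_sample.yaml", model="model.pth"):
    """Return the argument list for one ttt_demo.py run (hypothetical helper)."""
    return [
        sys.executable, "ttt_demo.py",
        "--config", config,
        "--model", model,
        "--eval_type", eval_type,
    ]


def format_command(cmd):
    """Render the command as a shell-style string, shown as 'python ...'."""
    return " ".join(["python"] + [shlex.quote(arg) for arg in cmd[1:]])


def main(dry_run=True):
    # Run (or just print) the baseline evaluation, then test-time training.
    for eval_type in EVAL_TYPES:
        cmd = build_eval_command(eval_type)
        print(format_command(cmd))
        if not dry_run:
            # check=True stops at the first failing run.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    main(dry_run=True)
```

Calling `main(dry_run=False)` from the repository root would execute both runs in sequence, assuming `ttt_demo.py`, the config file, and the checkpoint are at the paths used above.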

If you find this useful in your research, please consider citing:

@inproceedings{

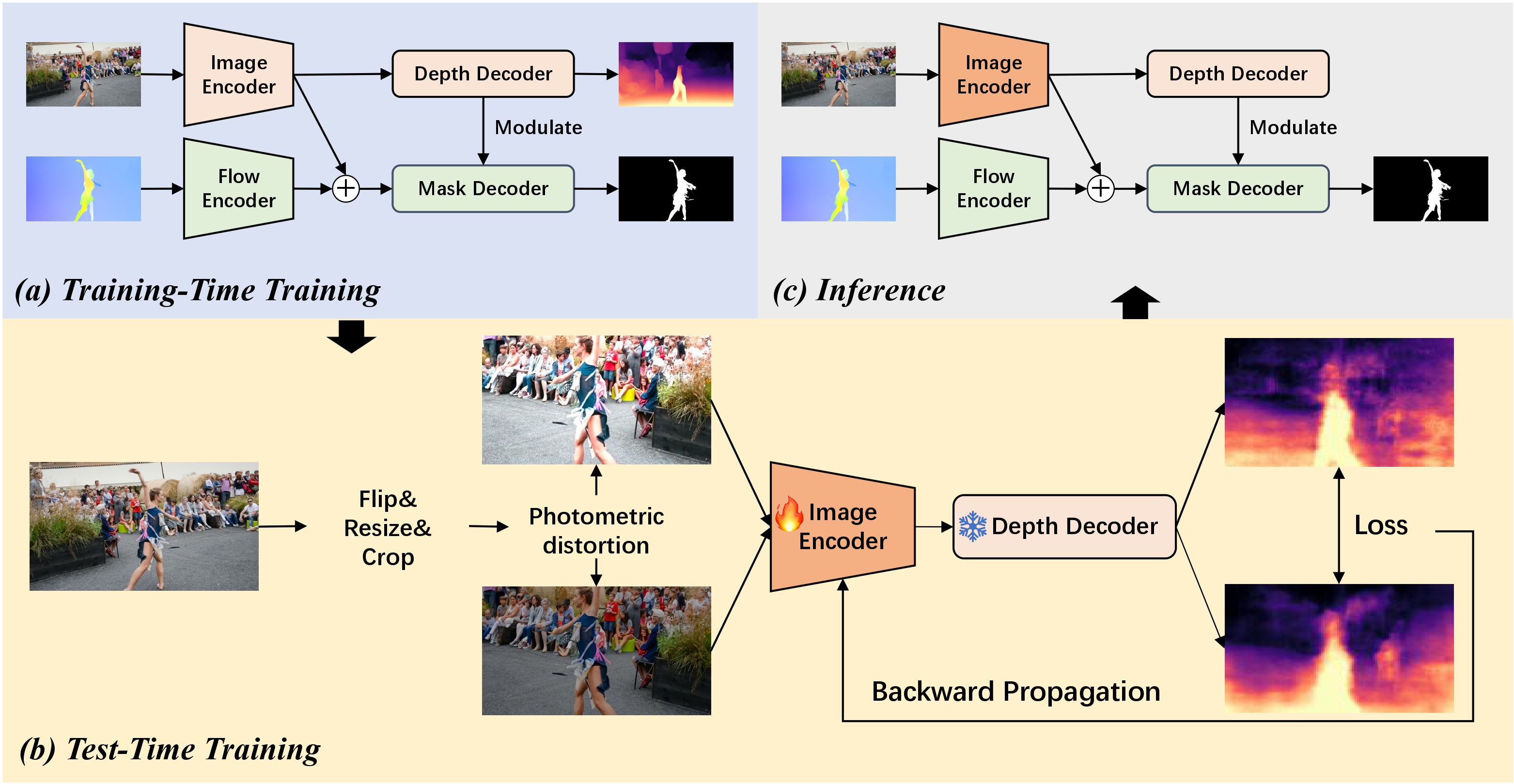

title={Depth-aware Test-Time Training for Zero-shot Video Object Segmentation},

author={Weihuang Liu, Xi Shen, Haolun Li, Xiuli Bi, Bo Liu, Chi-Man Pun, Xiaodong Cun},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

year={2024}

}

This code borrows heavily from EVP, Swin, and SegFormer. We thank the authors for sharing their wonderful code.