Geometry Processing – Smoothing

To get started: Fork this repository then issue

git clone --recursive http://github.com/[username]/geometry-processing-smoothing.git

Installation, Layout, and Compilation

See introduction.

Execution

Once built, you can execute the assignment from inside the build/ by running

on a given mesh with given scalar field (in

dmat format).

./smoothing [path to mesh.obj] [path to data.dmat]

or to load a mesh with phony noisy data use:

./smoothing [path to mesh.obj] n

or to load a mesh with smooth z-values as data (for mesh smoothing only):

./smoothing [path to mesh.obj]

Background

In this assignment we will explore how to smooth a data signal defined over a curved surface. The data signal may be a scalar field defined on a static surface: for example, noisy temperatures on the surface of an airplane. Smoothing in this context can be understood as data denoising:

The signal could also be the geometric positions of the surface itself. In this context, smoothing acts also affects the underlying geometry of the domain. We can understand this operation as surface fairing:

Flow-based formulation

In both cases, the underlying mathematics of both operations will be very

similar. If we think of the signal as undergoing a flow toward a smooth

solution over some phony notion of "time", then the governing partial

differential equation we will start with sets the change in signal value

\[ \frac{∂ u}{∂ t} = λ ∆ u, \]

where the scalar parameter

When the signal is the surface geometry, we call this a geometric flow.

There are various ways to motivate this choice of flow for data-/geometry-smoothing. Let us consider one way that will introduce the Laplacian as a form of local averaging.

Given a noisy signal

\[ u(\x) = \frac{1}{|B(\x))|} ∫_{B(\x)} f(\z) ;d\z, \]

If the ball

\[ u^{t+δt}(\x) = \frac{1}{|B(\x))|} ∫_{B(\x)} u^t(\z) ;d\z. \]

Subtracting the current value

\[ \frac{∂ u}{∂ t} = λ \frac{1}{|B(\x))|} ∫_{B(\x)} (u(\z)-u(\x)) ;d\z. \]

For harmonic functions,

\[ \frac{∂ u}{∂ t} = \lim_{|B(\x)| → 0} λ \frac{1}{|B(\x))|} ∫_{B(\x)} (u(\z)-u(\x)) ;d\z = λ ∆ u. \]

Energy-based formulation

Alternatively, we can think of a single smoothing operation as the solution to

an energy minimization problem. If

\[ u^* = \argmin_u E_(u)

\argmin_u ½∫_\S ( \underbrace{(f-u)²}\text{data} + \underbrace{λ‖∇u‖²}\text{smoothness} );dA, \]

where again the scalar parameter

Calculus of variations

In the smooth setting, our energy

We are used to working with minimizing quadratic functions with respect to a discrete set of variables, where the minimum is obtained when the gradient of the energy with respect to the variables is zero.

In our case, the functional

\[

Φ(ε) = E(w+εv) = ½∫_\S ((f-w+εv)² + λ ‖∇w + ε∇v‖²) ;dA,

\]

where we observe that

Since

\[ \begin{align} 0 & = \left.\frac{∂Φ}{∂ε} \right|{ε = 0},\ & = \left.\frac{∂}{∂ε} ½∫\S ( (f-w-εv)² + λ ‖∇w + ε∇v‖²);dA, \right|{ε = 0} \ & = \left.\frac{∂}{∂ε} ½∫\S ( f^2 - 2wf - 2εfv +w²+2εvw +ε²v² + λ ‖∇w‖² + λ2ε∇v⋅∇w + λ ε²‖∇w‖²) ;dA \right|{ε = 0}\ & = \left.∫\S (-fv + vw +2εvw + λ∇v⋅∇w + λ ε‖∇w‖²) ;dA \right|{ε = 0}\ & = ∫\S (v(w-f) + λ∇v⋅∇w );dA. \end{align} \]

The choice of "test" function

\[ 0 = ∫_\S (v(w-f) + λ∇v⋅∇w) ;dA \quad ∀ v. \]

It is difficult to claim much about

\[

0 = ∫_\S (v(w-f) - λv∆w );dA \quad (+ \text{boundary term} )\quad ∀ v,

\]

where we choose to ignore the boundary terms (for now) or equivalently we

agree to work on closed surfaces

Since this equality must hold of any

\[

w(\x)-f(\x) = λ∆w(\x).

\]

The choice of

Because we invoke variations to arrive at this equation, we call the energy-based formulation a variational formulation.

Implicit smoothing iteration

Now we understand that the flow-based formulation and the variational formulation lead to the same system, let us concretely write out the implicit smoothing step.

Letting

\[

u^t(\x) = (\text{id}-λ∆)u^{t+1}(\x), \quad ∀ \x ∈ \S

\]

where

Discrete Laplacian

There are many ways to derive a discrete

approximation of the Laplacian

- finite volume method,

- "The solution of partial differential equations by means of electrical networks" [MacNeal 1949, pp. 68],

- "Discrete differential-geometry operators for triangulated 2-manifolds" [Meyer et al. 2002],

- Polygon mesh processing [Botsch et al. 2010],

- finite element

method,

- "Variational methods for the solution of problems of equilibrium and vibrations" [Courant 1943],

- Algorithms and Interfaces for Real-Time Deformation of 2D and 3D Shapes [Jacobson 2013, pp. 9]

- discrete exterior

calculus

- Discrete Exterior Calculus [Hirani 2003, pp. 69]

- Discrete Differential Geometry: An Applied Introduction [Crane 2013, pp. 71]

- gradient of surface area → mean curvature

flow

- "Computing Discrete Minimal Surfaces and Their Conjugates" [Pinkall & Polthier 1993]

All of these techniques will produce the same sparse Laplacian matrix

Finite element derivation of the discrete Laplacian

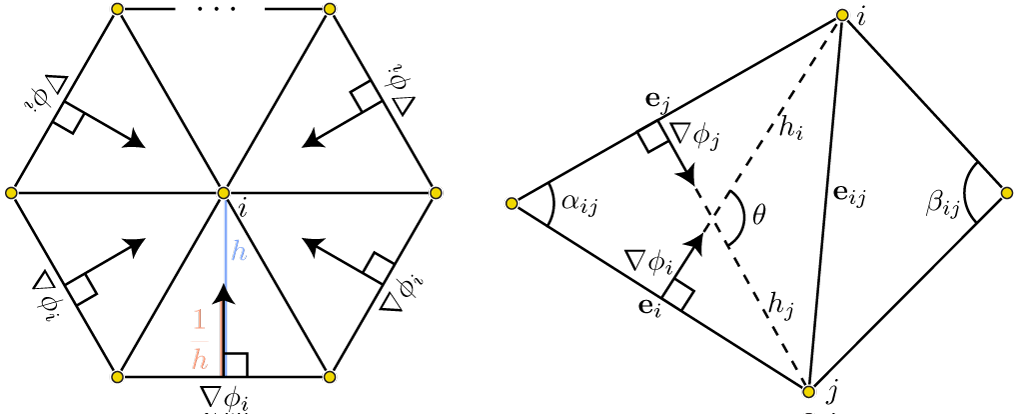

We want to approximate the Laplacian of a function

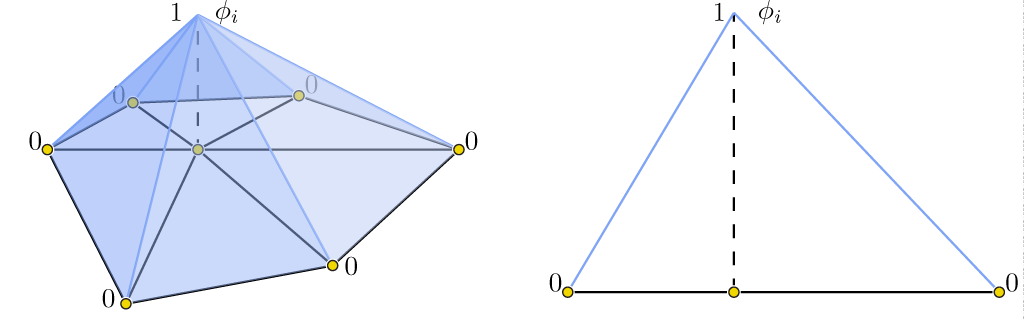

Any piecewise-linear function can be expressed as a sum of values at mesh

vertices times corresponding piecewise-linear basis functions (a.k.a hat

functions,

\[

\begin{align}

u(\x) &= ∑_{i=1}^n u_i φ_i(\x), \

φ(\x) &= \begin{cases}

1 & \text{if

By plugging this definition into our smoothness energy above, we have discrete energy that is quadratic in the values at each mesh vertex:

\[

\begin{align}

∫_\S ‖∇u(\x)‖² ;dA

&= ∫_\S \left|∇\left(∑_{i=1}^n u_i φ_i(\x)\right)\right|^2 ;dA \

&= ∫_\S \left(∑_{i=1}^n u_i ∇φ_i(\x)\right)⋅\left(∑_{i=1}^n u_i ∇φ_i(\x)\right) ;dA \

&= ∫_\S ∑_{i=1}^n ∑_{j=1}^n ∇φ_i⋅∇φ_j u_i u_j ;dA \

&= \u^\transpose \L \u, \quad \text{where } L_{ij} = ∫_\S ∇φ_i⋅∇φ_j ;dA.

\end{align}

\]

By defining

We first notice that

Now, consider two neighboring nodes

\[ ∇ φ_i ⋅ ∇ φ_j = |∇ φ_i| |∇ φ_j| \cos θ = \frac{|\e_j|}{2A}\frac{|\e_i|}{2A} \cos θ. \]

Now notice that the angle between

Combine the cosine and sine terms: \[ -\frac{|\e_j|}{2A} \frac{h_j}{2A} \cot α_{ij}. \]

Finally, since

Similarly, inside the other triangle

Recall that $φ_i$ and $φ_j$ are only both nonzero inside these two

triangles, $T_α$ and $T_β$ . So, since these constants are inside an

integral over area we may write:

\[

\int\limits_\S ∇ φ_i ⋅ ∇ φ_j ;dA =

\left.A∇ φ_i ⋅ ∇ φ_j \right|{T_α} + \left.B∇ φ_i ⋅ ∇ φ_j \right|{T_β}

-\frac{1}{2} \left( \cot α_{ij} + \cot β_{ij} \right). \]

Mass matrix

Treated as an operator (i.e., when used multiplied against a vector

\[

∫_\S (u-f)² ;dA

= ∫_\S ∑_{i=1}^n ∑_{j=1}^n φ_i⋅φ_j (u_i-f_i) (u_j-f_j) ;dA =

(\u-\f)^\transpose \M (\u-\f),

\]

where

This matrix

\[

M_{ij} =

\begin{cases}

⅓ ∑_{t=1}^m \begin{cases}

\text{Area}(t) & \text{if triangle

If we start directly with the continuous smoothing iteration equation, then we

have a point-wise equality. To fit in our integrated Laplacian

\[ \u^t = (\I - λ\M^{-1} \L)\u^{t+1}, \]

where

Instead, we could take the healthier view of requiring our smoothing iteration equation to hold in a locally integrated sense. In this case, we replace mass matrices on either side:

\[ \M \u^t = (\M - λ\L)\u^{t+1}. \]

Now the system matrix

Laplace Operator is Intrinsic

The discrete Laplacian operator and its accompanying mass matrix are

intrinsic operators in the sense that they only depend on lengths. In

practical terms, this means we do not need to know where vertices are

actually positioned in space (i.e.,

This also means that applying a transformation to a shape that does not change any lengths on the surface (e.g., bending a sheet of paper) will have no affect on the Laplacian.

Data denoising

For the data denoising application, our geometry of the domain is not changing

only the scalar function living upon it. We can build our discrete Laplacian

Geometric smoothing

For geometric smoothing, the Laplacian operator (both

\[ \M^{t+1} \V^t = (\M^{t+1} - λ\L^{t+1})\V^{t+1}. \]

However, if we assume that small changes in

\[ \M^{t} \V^t = (\M^{t} - λ\L^{t}) \V^{t+1}. \]

Why did my mesh disappear?

Repeated application of geometric smoothing may cause the mesh to "disappear". Actually the updated vertex values are being set to NaNs due to degenerate numerics. We are rebuilding the discrete Laplacian at every new iteration, regardless of the "quality" of the mesh's triangles. In particular, if a triangle tends to become skinnier and skinnier during smoothing, what will happen to the cotangents of its angles?

In "Can Mean-Curvature Flow Be Made Non-Singular?", Kazhdan et al. derive a new

type of geometric flow that is stable (so long as the mesh at time

Tasks

Learn an alternative derivation of cotangent Laplacian

The "cotangent Laplacian" by far the most important tool in geometry processing. It comes up everywhere. It is important to understand where it comes from and be able to derive it (in one way or another).

The background section above contains a FEM derivation of the discrete "cotangnet Laplacian". For this (unmarked) task, read and understand one of the other derivations listed above.

Hint: The finite-volume method used in [Botsch et al. 2010] is perhaps the most accessible alternative.

White list

igl::doubleareaigl::edge_lengths

Black list

igl::cotmatrix_entriesigl::cotmatrixigl::massmatrix- Trig functions

sin,cos,tanetc. (e.g., from#include <cmath>) See background notes about "intrinisic"-ness

src/cotmatrix.cpp

Construct the "cotangent Laplacian" for a mesh with edge lengths l. Each

entry in the output sparse, symmetric matrix L is given by:

\[

L_{ij} = \begin{cases}

½ \cot{α_{ij}} + ½ \cot{β_{ij}} & \text{if edge

Hint: Review the law of sines and law of cosines and Heron's ancient formula to derive a formula for the cotangent of each triangle angle that only uses edge lengths.

src/massmatrix.cpp

Construct the diagonal(ized) mass matrix M for a mesh with given face indices

in F and edge lengths l.

src/smooth.cpp

Given a mesh (V,F) and data specified per-vertex (G), smooth this data

using a single implicit Laplacian smoothing step.

This data could be a scalar field on the surface and smoothing corresponds to data denoising.

Or the data could be the vector field of the surface's own geometry. This corresponds to geometric smoothing.