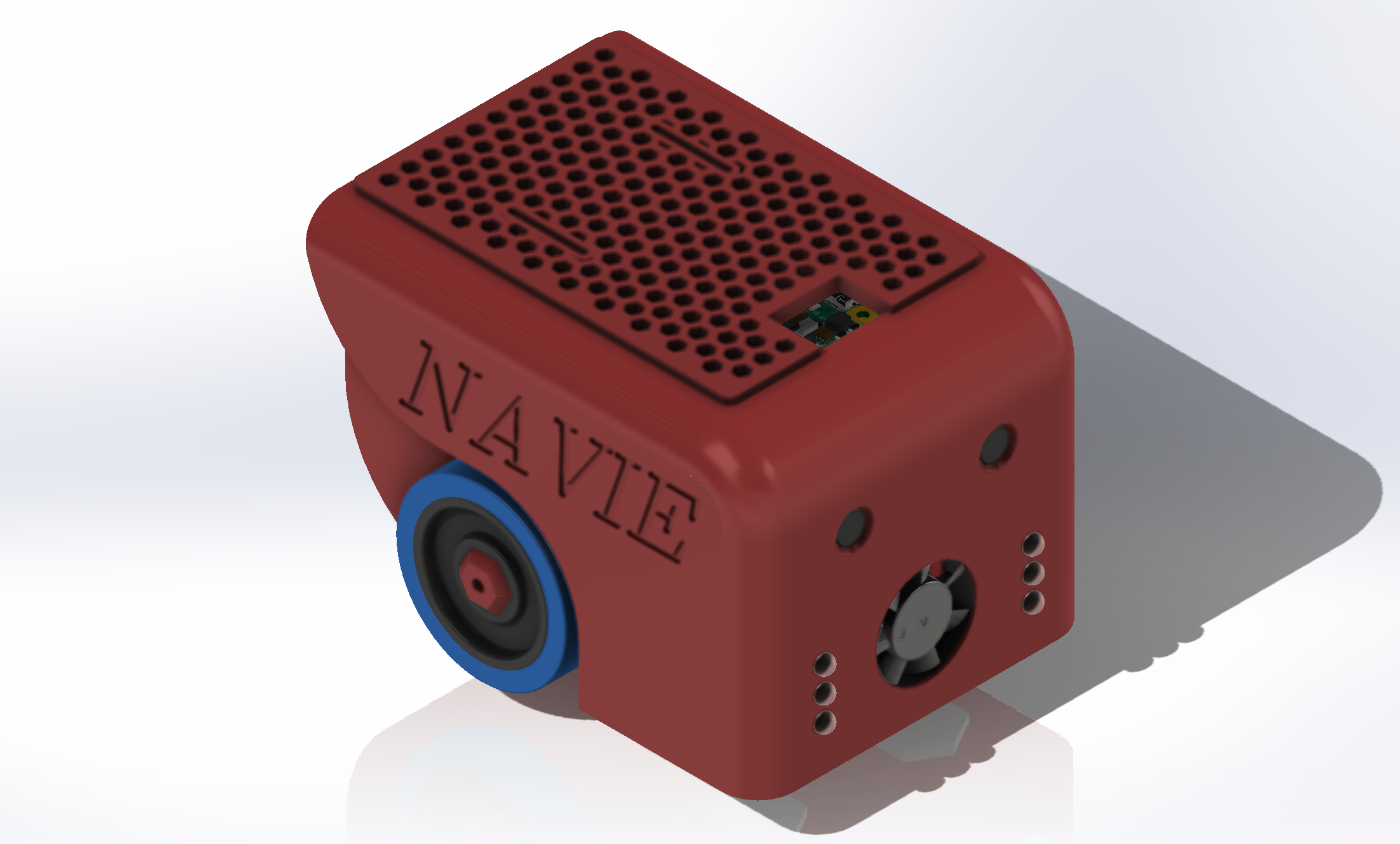

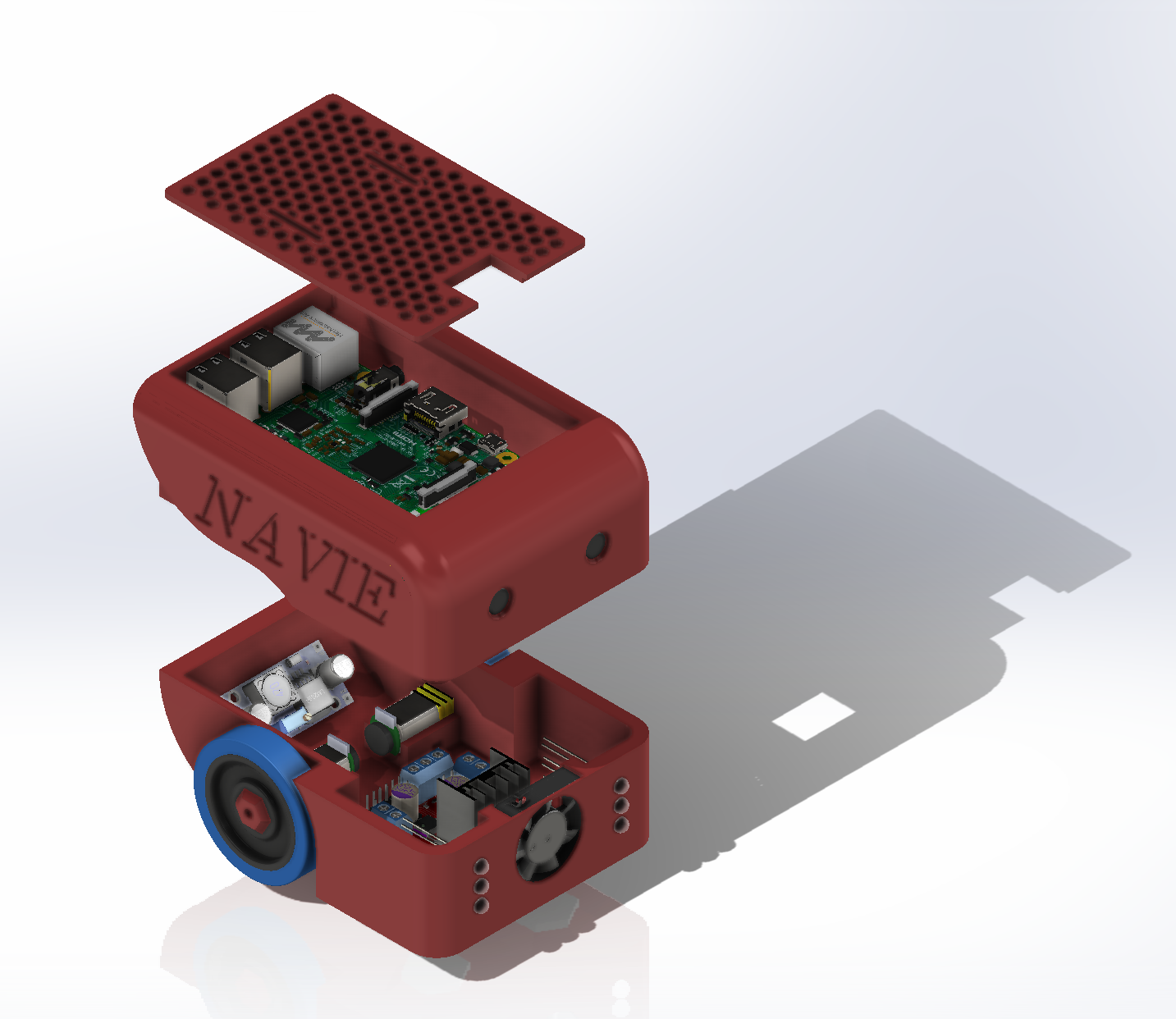

A little robot that navigates entirely on its own. Hence the name, Navie.

- Onboard navigation processing

- Onboard vision processing

- Extremely low upfront cost (<$100)

- Stereo camera for navigation

- Brushless motors as the drive system

- FOC on brushless motors

- Custom motor driver circuit

- Really small (fits in the palm of a hand)

- Integrated rechargeable battery

- Wi-Fi connectivity

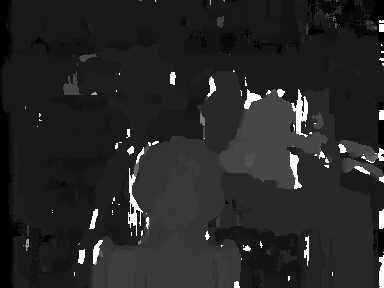

Depth processing is done using a block-matching algorithm where each pixel is calculated individually, and neighbors are only processed for sub-pixel calculations. In the future, I plan to implement pyramiding and already have the Gaussian resizing function completed.
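As a rough illustration of per-pixel block matching with sub-pixel refinement, here is a minimal sketch. It is not the project's `block_match()`: the function names, the sum-of-absolute-differences cost, and the parabola fit over the neighboring disparity costs are all assumptions chosen for the example, and the images are assumed to be rectified 8-bit grayscale buffers.

```c
#include <limits.h>
#include <stdlib.h>

/* Sum of absolute differences between a block centered at (x, y) in the left
 * image and the block shifted d pixels to the left in the right image.
 * (Hypothetical helper, not taken from the project source.) */
static long block_sad(const unsigned char *left, const unsigned char *right,
                      int width, int x, int y, int d, int half)
{
    long sad = 0;
    for (int dy = -half; dy <= half; dy++) {
        for (int dx = -half; dx <= half; dx++) {
            int idx = (y + dy) * width + (x + dx);
            sad += labs((long)left[idx] - (long)right[idx - d]);
        }
    }
    return sad;
}

/* Disparity of a single pixel. The caller must keep the block inside both
 * images: half <= y < height - half and half + max_disp <= x < width - half. */
float pixel_disparity(const unsigned char *left, const unsigned char *right,
                      int width, int x, int y, int max_disp, int half)
{
    long best_cost = LONG_MAX;
    int best_d = 0;

    /* Integer search: keep the disparity with the lowest block cost. */
    for (int d = 0; d <= max_disp; d++) {
        long cost = block_sad(left, right, width, x, y, d, half);
        if (cost < best_cost) {
            best_cost = cost;
            best_d = d;
        }
    }

    /* No neighbor on one side: skip the sub-pixel step. */
    if (best_d == 0 || best_d == max_disp)
        return (float)best_d;

    /* Sub-pixel step: fit a parabola through the costs at best_d - 1,
     * best_d, and best_d + 1, and return the position of its minimum. */
    long c0 = block_sad(left, right, width, x, y, best_d - 1, half);
    long c2 = block_sad(left, right, width, x, y, best_d + 1, half);
    long denom = c0 - 2 * best_cost + c2;
    if (denom <= 0)
        return (float)best_d;
    return (float)best_d + 0.5f * (float)(c0 - c2) / (float)denom;
}
```

Calling `pixel_disparity()` once per pixel yields a disparity map. The parabola fit only looks at the two costs next to the winning disparity, so the sub-pixel step adds two extra block comparisons per pixel.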

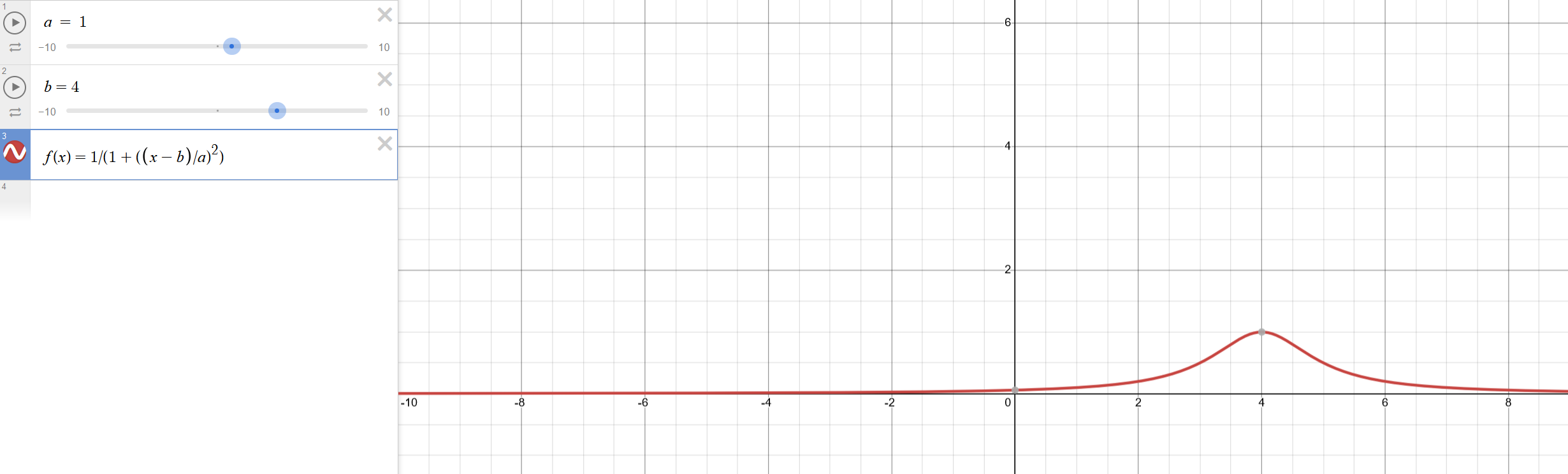

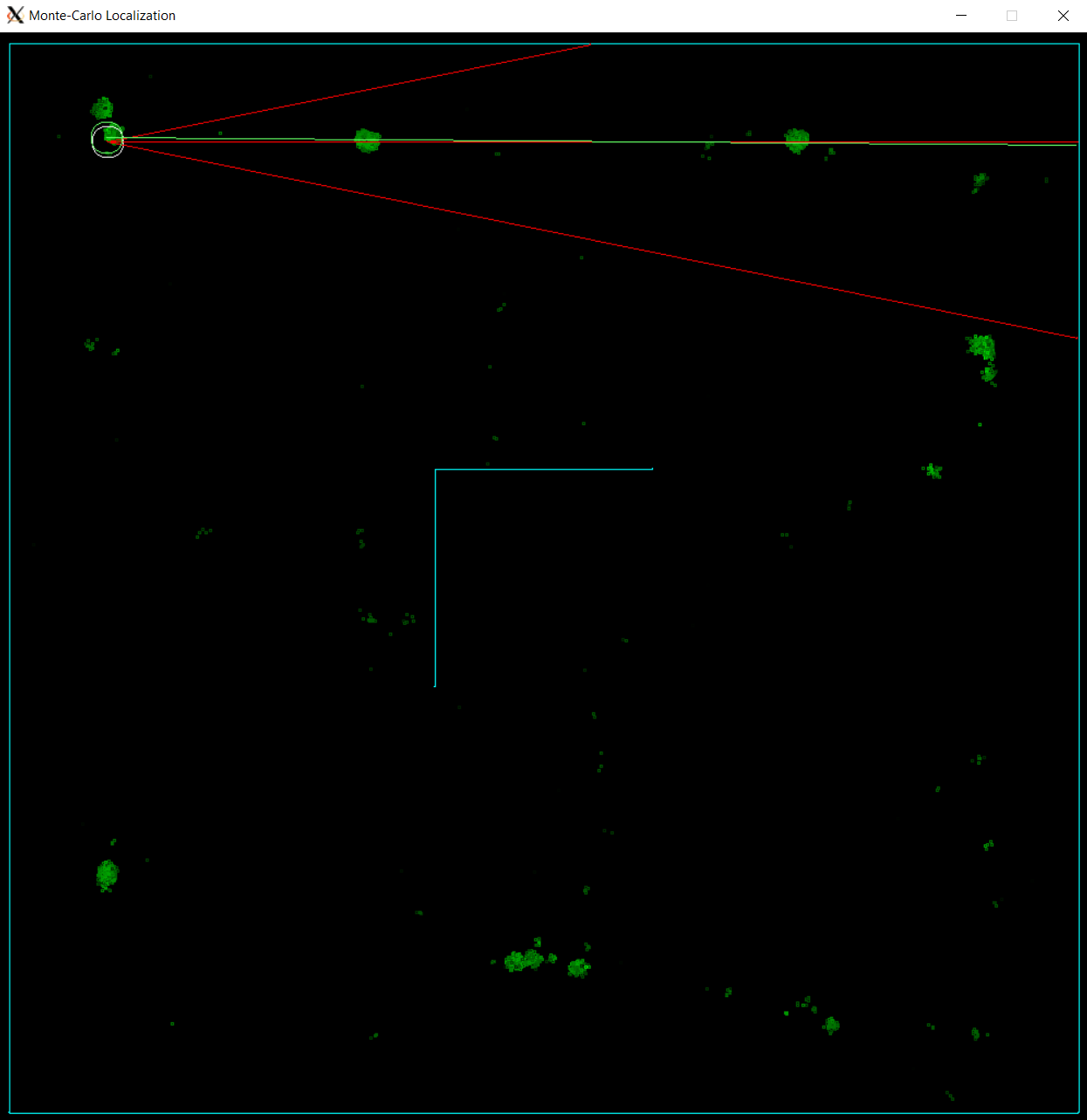

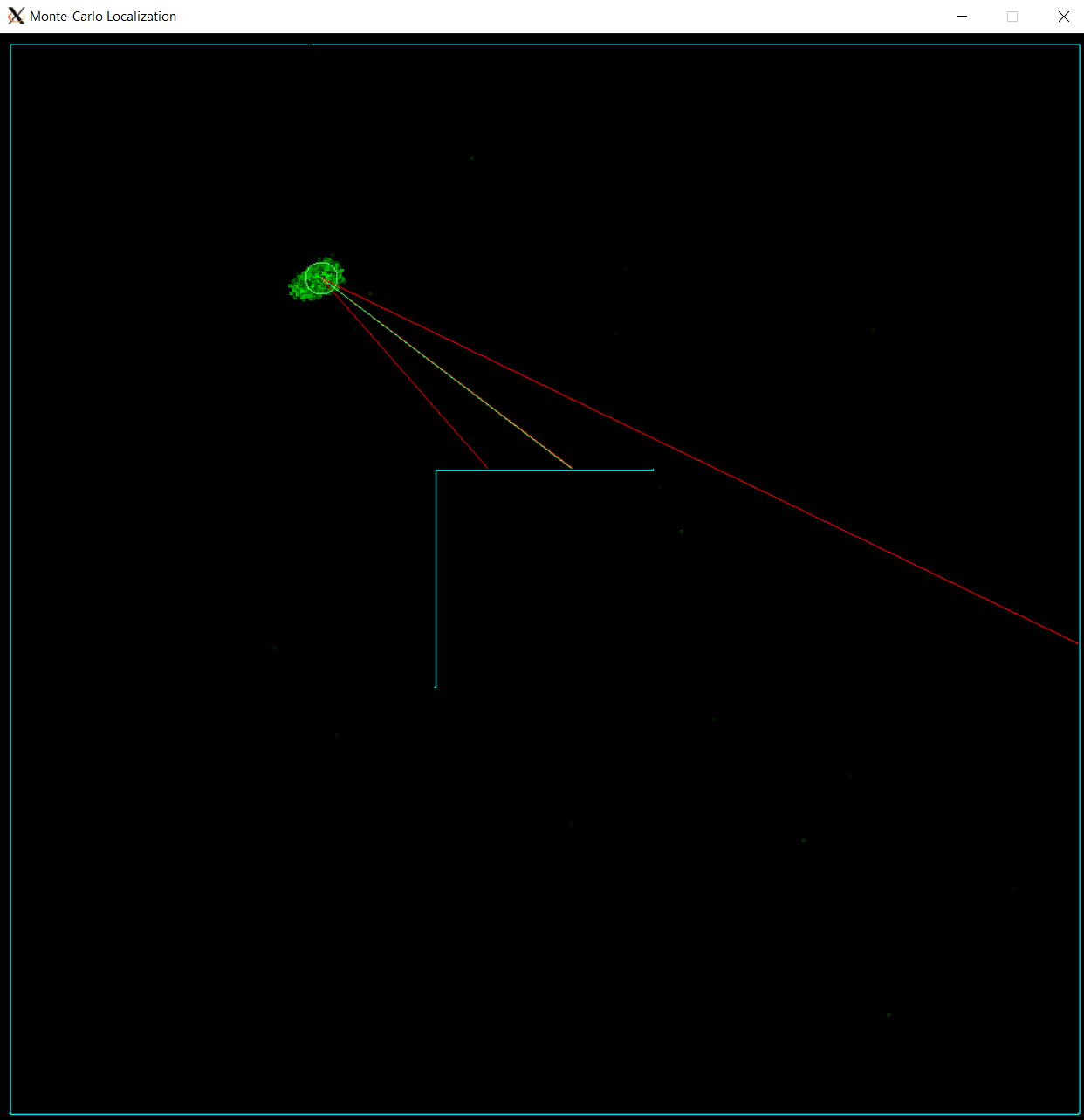

Localization is currently a simple particle filter, with Gaussian noise generated by this function. Currently, there is no past knowledge within each particle, but there are plans to update this in the future.

The algorithm outputs a "best guess" position on the map that the path planner then uses to attempt to route the robot to the goal location.
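The two pieces described above, Gaussian noise on the particles and a single "best guess" output, could look roughly like the sketch below. Everything here is an assumption made for illustration: the `Particle` layout, the function names, the choice of the highest-weight particle as the best guess, and the noise generator, which approximates a normal distribution by summing 12 uniform samples rather than reproducing the project's own function.

```c
#include <stdlib.h>

/* Hypothetical particle layout: a 2D position plus an importance weight. */
typedef struct {
    double x;
    double y;
    double weight;
} Particle;

/* Approximate sample from N(mean, stddev^2). The sum of 12 uniform [0, 1)
 * samples has mean 6 and variance 1, so (sum - 6) is roughly standard normal. */
double gaussian_noise(double mean, double stddev)
{
    double sum = 0.0;
    for (int i = 0; i < 12; i++)
        sum += (double)rand() / ((double)RAND_MAX + 1.0);
    return mean + stddev * (sum - 6.0);
}

/* Move every particle by the commanded step, each with its own noise. */
void motion_update(Particle *p, int n, double dx, double dy, double stddev)
{
    for (int i = 0; i < n; i++) {
        p[i].x += dx + gaussian_noise(0.0, stddev);
        p[i].y += dy + gaussian_noise(0.0, stddev);
    }
}

/* Index of the highest-weight particle, or -1 if there are no particles. */
int best_guess(const Particle *p, int n)
{
    int best = -1;
    for (int i = 0; i < n; i++) {
        if (best < 0 || p[i].weight > p[best].weight)
            best = i;
    }
    return best;
}
```

Picking the single heaviest particle is the simplest option; a weighted mean of the particles is another, though on a symmetric map it can land between two clusters.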

The path planning implementation is designed to take a single path with (x_1, y_1) as its start point and (x_2, y_2) as its end point, and recursively split it using an A* approach to generate a valid path. Currently, the path-splitting is buggy, so it's been removed. Below is a demonstration of the localization and path planning working together to get a robot (white circle with red lines) to the goal position (end of white line) using only the lengths of the 3 sensors and the map.

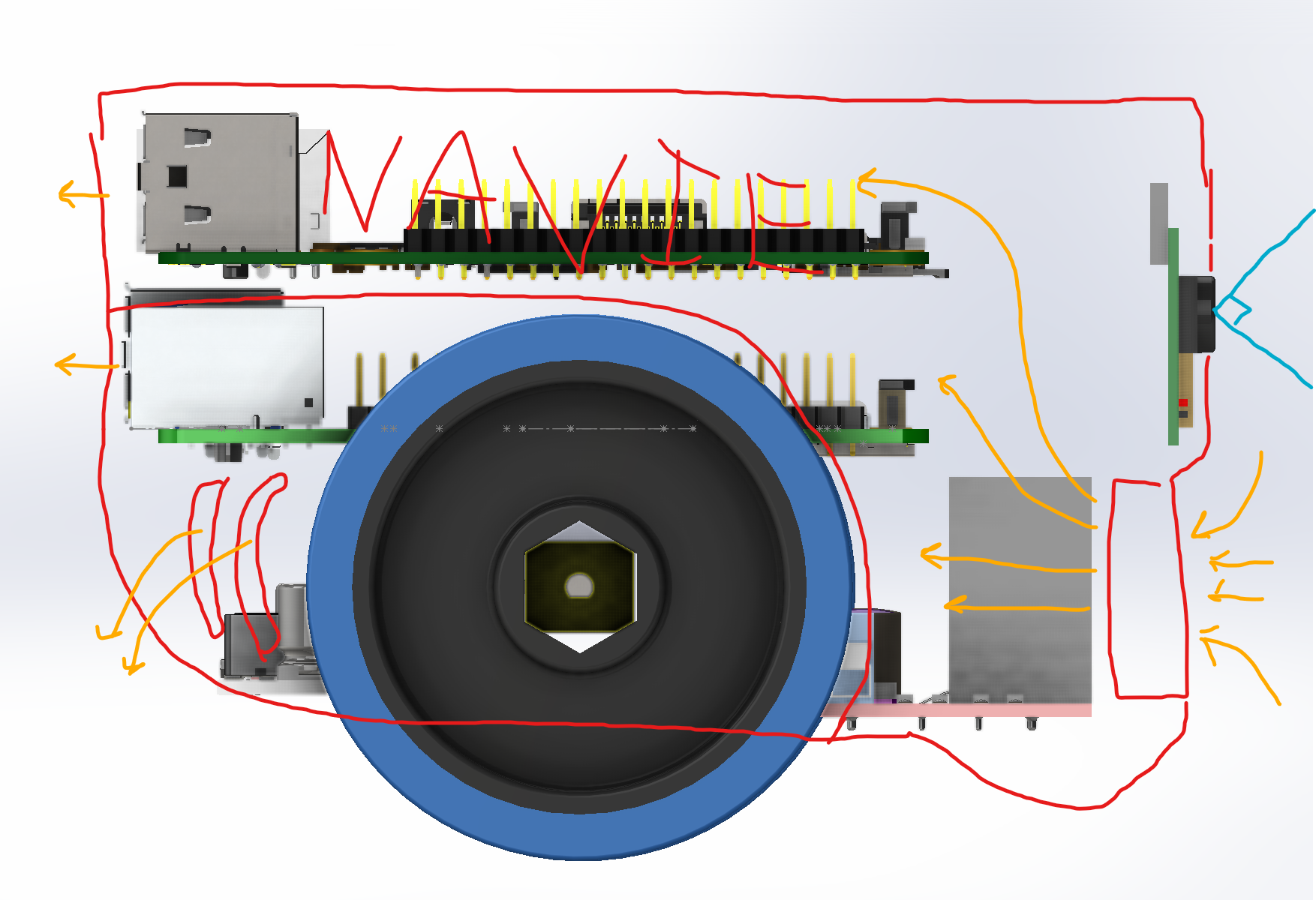

You might notice that the green circle/line (the best guess robot) is not correct at first. This is because the map is highly symmetric, so there are lots of valid positions at first.

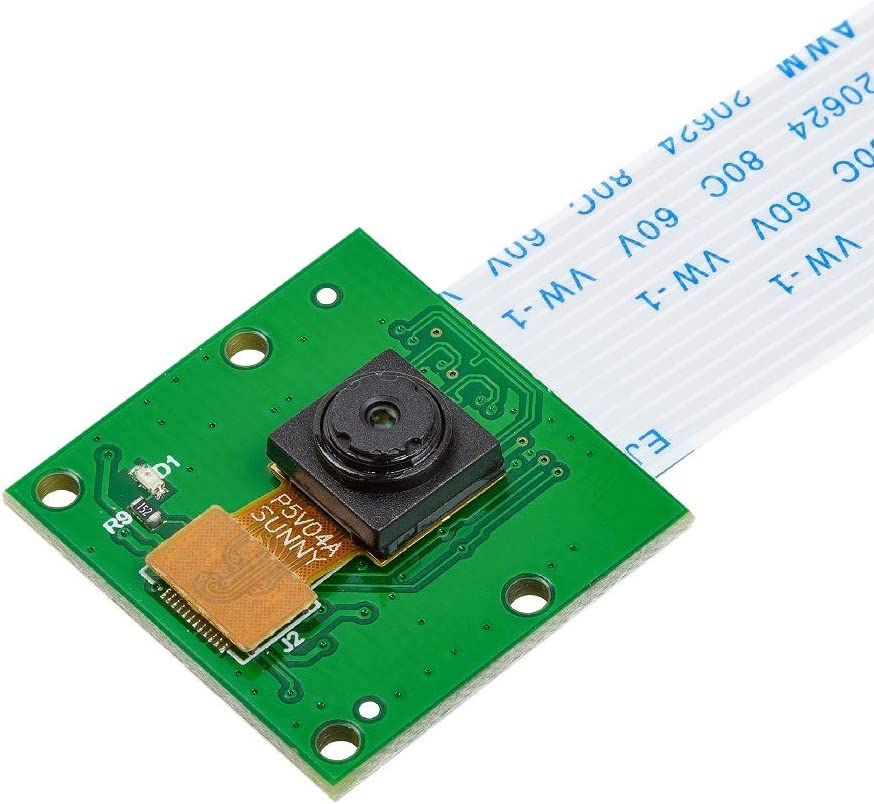

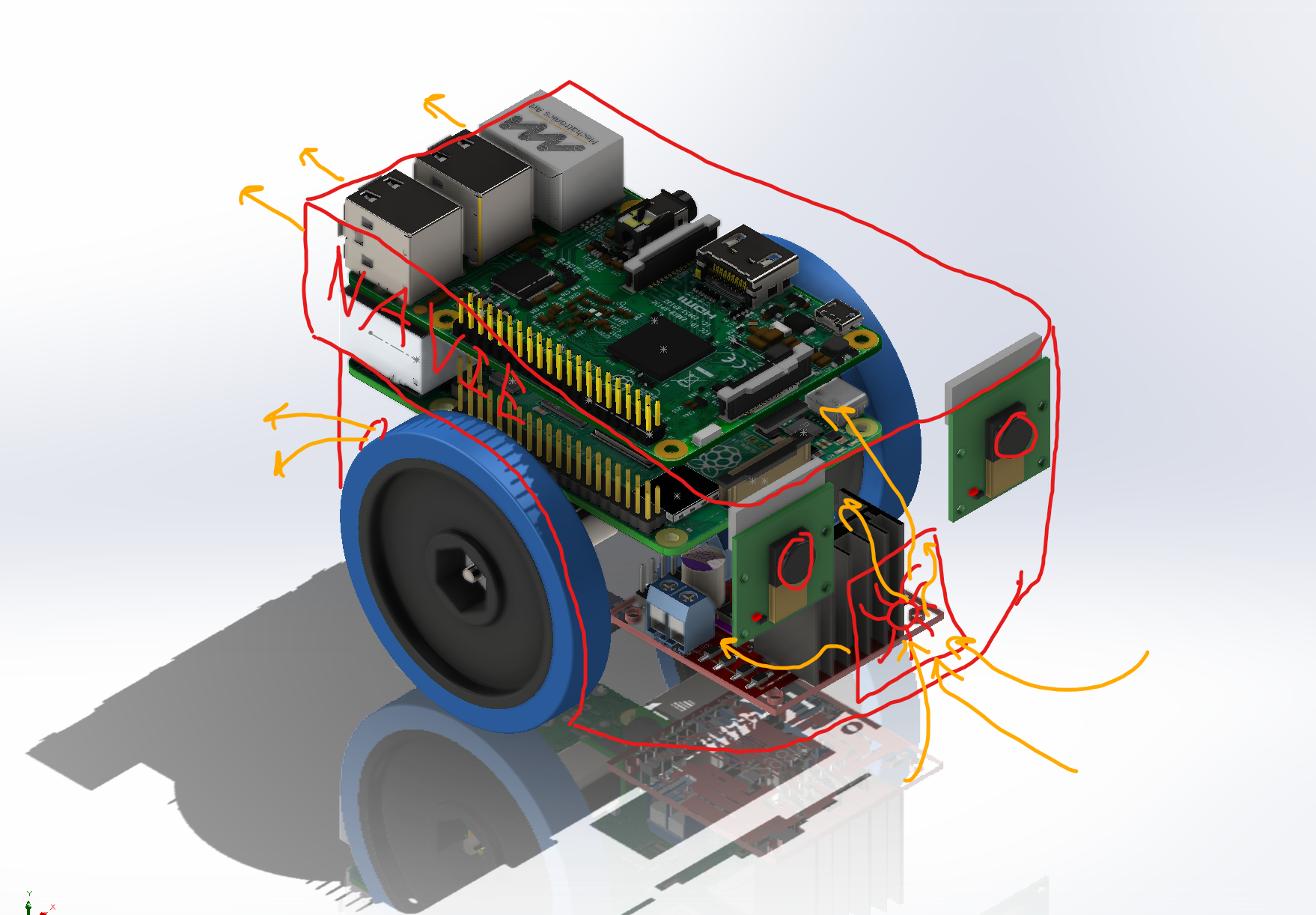

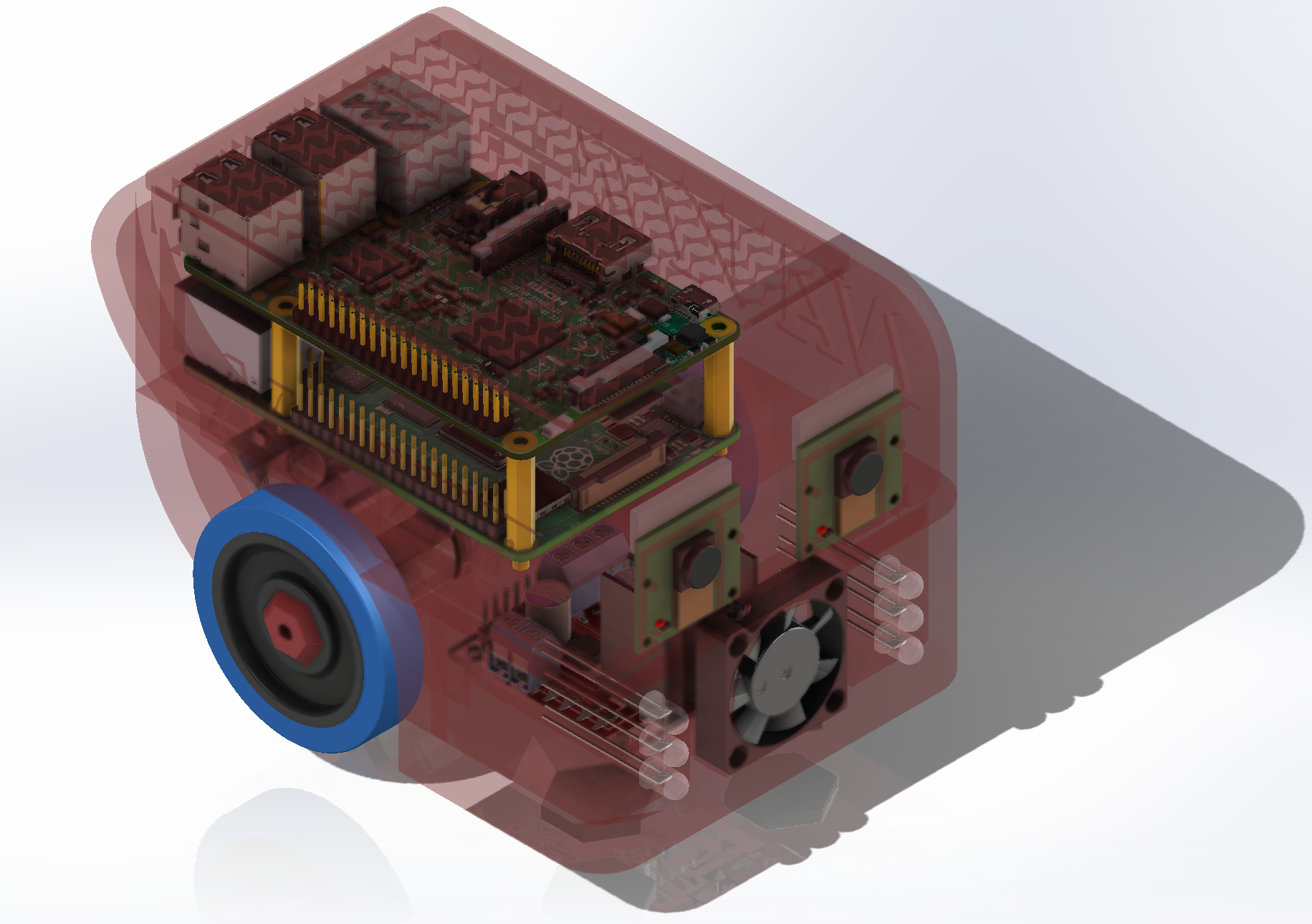

Ideally, this will eventually be a fully custom processor. However, I've benchmarked the processing on a Raspberry Pi 4B, and a full cycle should run in under a second without any additional optimizations. Since 1 second was my initial target when I started this project, this is good enough for now. Due to my choice of camera, though, I'll have to run two Pis: the cameras plug into the Pi's camera port, and I don't want to pay for a camera multiplexer. So, the plan is for the Pi 4 to transfer the image to the Pi 3, which will run the depth processing. The Pi 3 will then output sensor vectors to the Pi 4, which will run the particle filter and the motors.

Why these cameras? 1: They are cheap. I managed to find a set of 2 of these on Amazon for $9. 2: They are high performing, promising 2592 x 1944 still images, 1080p video, and up to 90 fps at 640x480. TODO What more do I need?

What do I need in a motor? Encoder feedback, decent build quality, and low size and weight. I wanted to get these from ServoCity, but I found what looks to be a knockoff on Amazon for half the price. TODO We'll see if I get what I paid for.

Cheap, reliable, and most importantly cheap.

https://banebots.com/banebots-wheel-2-3-8-x-0-4-1-2-hex-mount-50a-black-blue/

TODO write this section

- Ubuntu running under WSL with VcXsrv for test processes

- Non-STL libraries

  - Simple Direct Media Layer (SDL 2)

    `<SDL2/SDL.h>`. Used to write pixels to the screen. Chosen for its strong support, ease of use, and cross-platform support.

    `sudo apt install libsdl2-dev`

  - NCurses

    `<ncurses.h>`. Used to get key inputs from the user to drive the robot in manual mode.

    `sudo apt install libncurses5-dev libncursesw5-dev`

  - Termios

    `<termios.h>`. Used to set the terminal to non-canonical mode for easier driving. Seems to come pre-installed with Linux (TODO: need to check).

  - bcm2835

    `<bcm2835.h>`. Used to control the bcm2835 chip on the Raspberry Pi that handles GPIO. This is our GPIO library.

    `wget http://www.airspayce.com/mikem/bcm2835/bcm2835-1.71.tar.gz`

    `tar zxvf bcm2835-1.71.tar.gz`

    `cd bcm2835-1.71`

    `./configure`

    `make`

    `sudo make check`

    `sudo make install`
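For the Termios item above, switching the terminal to non-canonical mode might look like the following sketch. The function names are hypothetical, and turning off `ECHO` alongside `ICANON` is an assumption about what makes manual driving comfortable, not something confirmed by the project source.

```c
#include <termios.h>
#include <unistd.h>

/* Settings captured before the change so they can be restored on exit. */
static struct termios saved_termios;

/* Return a copy of t with canonical (line-buffered) input and echo disabled.
 * VMIN = 1 and VTIME = 0 make read() return as soon as one byte arrives. */
struct termios make_noncanonical(struct termios t)
{
    t.c_lflag &= ~(tcflag_t)(ICANON | ECHO);
    t.c_cc[VMIN] = 1;
    t.c_cc[VTIME] = 0;
    return t;
}

/* Apply non-canonical mode to stdin. Returns 0 on success, -1 on failure
 * (for example, when stdin is not a terminal). */
int enable_drive_mode(void)
{
    if (tcgetattr(STDIN_FILENO, &saved_termios) == -1)
        return -1;
    struct termios raw = make_noncanonical(saved_termios);
    return tcsetattr(STDIN_FILENO, TCSANOW, &raw);
}

/* Put the terminal back the way enable_drive_mode() found it. */
int restore_terminal(void)
{
    return tcsetattr(STDIN_FILENO, TCSANOW, &saved_termios);
}
```

With this in place, each key press reaches `read(STDIN_FILENO, ...)` immediately instead of waiting for Enter. Calling `restore_terminal()` before exiting avoids leaving the shell without echo.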

`gcc -g main.c -o main.o`

`` gcc main.c -o main.o `sdl2-config --cflags --libs` -lm -O3 ``

`gcc -o main main.c -lm -lbcm2835`

`gcc main.c -o main.o`

- Confidence rejection

- Filter output image

- Rectify images (not using Middlebury dataset)

- Particle filter often finds the wrong node cluster at first.

- Particle filter does not take into account recent history.

- Path planner

- Flood on loss of confidence

- Consider installing a laser pointer to aid in depth perception of featureless walls. (structured light)

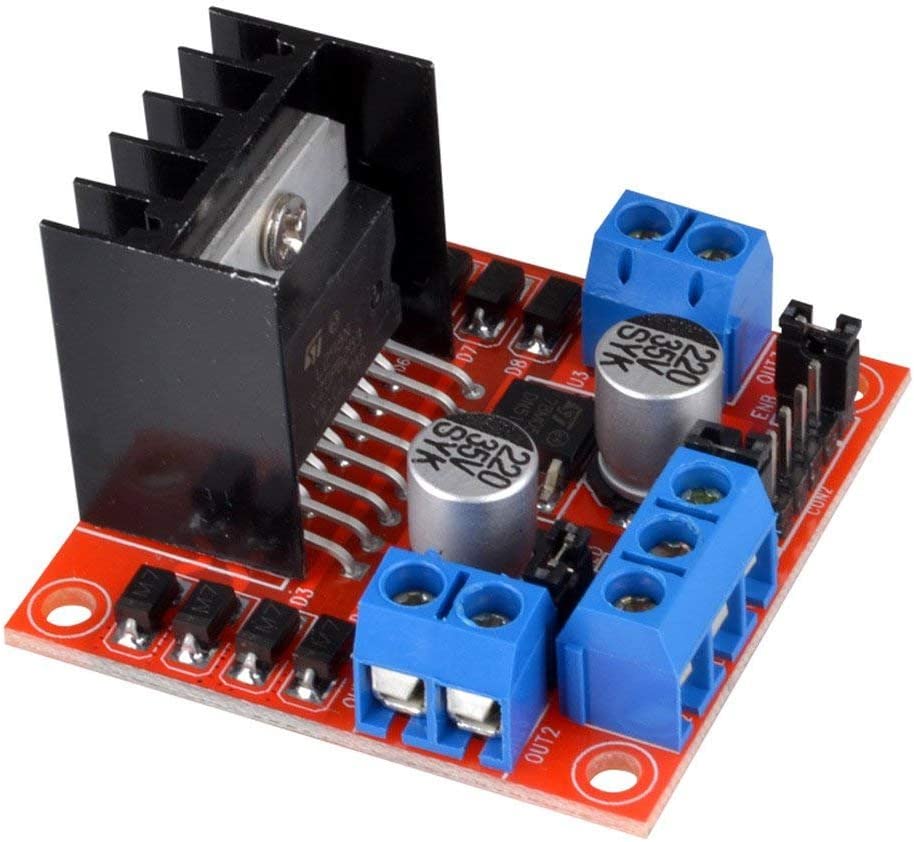

- Move zip ties back

- Motor driver needs to be filed for fit (too tight)

- Motor driver alleged inefficiencies

- Motor driver size

- Wheel hub D shaft is not tight enough. Radius is good, increase length of D-line

- Camera CAD is incorrect

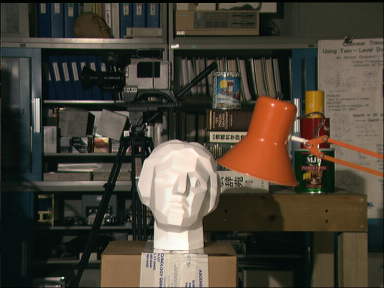

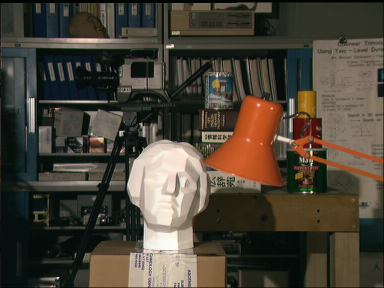

Execution time for depth_processing\tsukuba\scene1.row3.col1.ppm and depth_processing\tsukuba\scene1.row3.col2.ppm. All measurements are single-threaded to make comparisons to future hardware more apt.

287/288 - 100%

block_match() took 90.131191 seconds to execute

287/288 - 100%

block_match() took 87.297683 seconds to execute

287/288 - 100%

block_match() took 21.699990 seconds to execute

287/288 - 100%

block_match() took 21.609720 seconds to execute

287/288 - 100%

block_match() took 3.112148 seconds to execute

block_match() took 0.682072 seconds to execute

block_match() took 0.682072 seconds to execute

processing (not including graphics) took 0.027040 seconds to execute.

Execution time is ~0.015s per frame, including path planning and particle filtering with 5000 particles.

Software Version: 2d94655dcabb89866f78500f899f6fc5ea158938

processing (not including graphics) took 0.522824 seconds to execute

Software Version: 2d94655dcabb89866f78500f899f6fc5ea158938

processing (not including graphics) took 0.190935 seconds to execute

These are the sources that I used to inform my decisions on this project, and that I think might be helpful to someone attempting something similar. I've made an effort to provide a general explanation of each source.

- Stereo Vision: Depth Estimation between object and camera - Apar Garg

  - Generalist beginner explanation of depth processing using block matching, and some of the math behind determining the depth of each pixel.

  - Includes some source code in Python

  - https://medium.com/analytics-vidhya/distance-estimation-cf2f2fd709d8

- Middlebury Stereo Datasets

  - Great resource for image pairs to test depth processing. Ground truth images are sometimes included.

  - https://vision.middlebury.edu/stereo/data/

- Depth Estimation: Basics and Intuition - Daryl Tan

  - Overview of the state of depth processing in CS, including stereo and monocular techniques.

  - Great for understanding the options available for depth processing.

  - https://towardsdatascience.com/depth-estimation-1-basics-and-intuition-86f2c9538cd1

Background (Research paper): https://citeseerx.ist.psu.edu/document?repid=rep1&type=pdf&doi=32aedb3d4e52b879de9a7f28ee0ecee997003271

Background: https://ww2.mathworks.cn/help/visionhdl/ug/stereoscopic-disparity.html

TODO + Source: https://docs.opencv.org/3.4/d3/d14/tutorial_ximgproc_disparity_filtering.html

Dataset: http://sintel.is.tue.mpg.de/depth

Background: https://www.cs.cmu.edu/~16385/s17/Slides/13.2_Stereo_Matching.pdf

Background: http://mccormickml.com/2014/01/10/stereo-vision-tutorial-part-i/

TODO: https://developer.nvidia.com/how-to-cuda-c-cpp

Background: https://dsp.stackexchange.com/questions/75899/appropriate-gaussian-filter-parameters-when-resizing-image

Background (Research paper): https://www.ri.cmu.edu/pub_files/pub1/dellaert_frank_1999_2/dellaert_frank_1999_2.pdf

Background + Source: https://fjp.at/posts/localization/mcl/

Background + Source: https://ros-developer.com/2019/04/10/parcticle-filter-explained-with-python-code-from-scratch/

Background (REALLY GOOD): https://www.usna.edu/Users/cs/taylor/courses/si475/notes/slam.pdf

Example: https://www.youtube.com/watch?v=m3L8OfbTXH0

Background (REALLY GOOD): https://www.youtube.com/watch?v=3Yl2aq28LFQ

Background (Research paper): https://research.google.com/pubs/archive/45466.pdf

Background (REALLY GOOD): https://cs.gmu.edu/~kosecka/cs685/cs685-icp.pdf

Background (Research paper): https://arxiv.org/pdf/2007.07627

Background (Research paper): https://www.researchgate.net/figure/Hybrid-algorithm-ideology-ICP-step-by-step-comes-to-local-minima-After-local-minima_fig4_281412803

https://towardsdatascience.com/optimization-techniques-simulated-annealing-d6a4785a1de7

https://resources.mpi-inf.mpg.de/deformableShapeMatching/EG2011_Tutorial/slides/2.1%20Rigid%20ICP.pdf

https://www.visiondummy.com/2014/04/geometric-interpretation-covariance-matrix/

https://www.youtube.com/watch?v=cOUTpqlX-Xs

https://cs.fit.edu/~dmitra/SciComp/Resources/singular-value-decomposition-fast-track-tutorial.pdf

https://iosoft.blog/2020/07/16/raspberry-pi-smi/

https://forums.raspberrypi.com/viewtopic.php?t=228727

https://raspberrypi.stackexchange.com/questions/130529/how-fast-are-c-python-libraries

https://forums.raspberrypi.com/viewtopic.php?t=244031

Note: the peripheral base address for the Raspberry Pi 4 is 0xFE000000.

https://raspberrypi.stackexchange.com/questions/124985/using-motor-encoders-with-raspberry-pi

Background: https://www.raspberrypi.com/documentation/computers/camera_software.html#getting-started

Source: https://stackoverflow.com/questions/41440245/reading-camera-image-using-raspistill-from-c-program

Server Stream: `libcamera-vid -t 0 --inline --listen -o tcp://0.0.0.0:8000`

Server Stream 60fps: `libcamera-vid -t 0 --inline --listen -o tcp://0.0.0.0:8000 --level 4.2 --framerate 120 --width 1280 --height 720 --denoise cdn_off`

Client: `ffplay tcp://10.0.0.73:8000 -vf "setpts=N/30" -fflags nobuffer -flags low_delay -framedrop`

Client (image flipped horizontally and vertically): `ffplay tcp://10.0.0.73:8000 -vf "hflip,vflip" -flags low_delay -framedrop`

Contributions are always welcome. If you want to contribute to the project, please create a pull request.

This project is not currently licensed, but I will look into adding a license at a later date.