This is the official implementation of the following paper:

GLBench: A Comprehensive Benchmark for Graphs with Large Language Models [Paper]

Yuhan Li, Peisong Wang, Xiao Zhu, Aochuan Chen, Haiyun Jiang, Deng Cai, Victor Wai Kin Chan, Jia Li

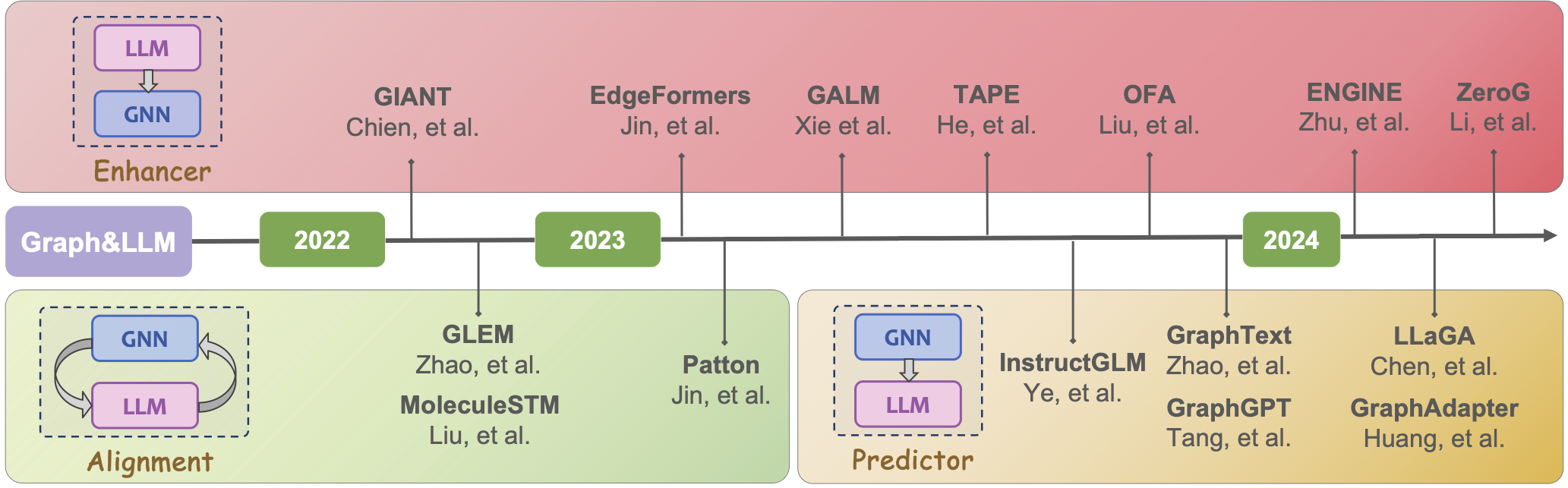

Trend of Graph&LLM.

Before you begin, ensure that you have Anaconda or Miniconda installed on your system. This guide assumes that you have a CUDA-enabled GPU. After creating your conda environment (we recommend python==3.10), run

pip install -r requirements.txt

to install the required Python packages.
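Before launching longer runs, it can help to confirm that the environment matches the prerequisites above. The following is a minimal sketch, not part of the repository: the helper names `python_matches` and `nvidia_smi_on_path` are hypothetical, and finding `nvidia-smi` on the PATH is only a rough proxy for a usable CUDA GPU.

```python
import shutil
import sys

# Recommended interpreter version, taken from the python==3.10 note above.
RECOMMENDED = (3, 10)


def python_matches(version_info=None, recommended=RECOMMENDED):
    """Return True when the interpreter's major.minor equals the recommended one."""
    info = sys.version_info if version_info is None else version_info
    return tuple(info[:2]) == tuple(recommended)


def nvidia_smi_on_path(which=shutil.which):
    """Return True when the nvidia-smi CLI can be found (a rough GPU proxy only)."""
    return which("nvidia-smi") is not None


if __name__ == "__main__":
    print("Python 3.10:", python_matches())
    print("nvidia-smi found:", nvidia_smi_on_path())
```

A `False` on either line does not necessarily mean the benchmark will fail; it only flags a difference from the setup this guide assumes.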

All datasets in GLBench are available at this link.

Please place them in the datasets folder.

Benchmark the Classical GNNs (grid-search hyperparameters)

cd models/gnn

bash run.sh

Benchmark the LLMs in supervised settings (Sent-BERT, BERT, RoBERTa)

cd models/llm/llm_supervised

bash roberta_search.sh

Benchmark the LLMs in zero-shot settings (gpt-4o, gpt-3.5-turbo, llama3-70b, deepseek-chat)

cd models/llm/llm_zeroshot

python inference.py --model gpt-4o --data cora
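To cover several models in one go, the single command above can be wrapped in a small driver. This is a sketch under assumptions: only `--model gpt-4o --data cora` is confirmed by this README, so passing the other listed model names to `--model` is assumed to work the same way, and `build_commands` and `run_all` are hypothetical helpers.

```python
import subprocess
import sys

# Model names as listed for the zero-shot setting in this README.
# Assumption: inference.py accepts each of them via --model.
MODELS = ["gpt-4o", "gpt-3.5-turbo", "llama3-70b", "deepseek-chat"]


def build_commands(models, datasets):
    """Return one inference.py command (as an argument list) per model/dataset pair."""
    return [
        [sys.executable, "inference.py", "--model", model, "--data", data]
        for model in models
        for data in datasets
    ]


def run_all(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            # check=True stops the sweep on the first failing run.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Dry run by default, so nothing is sent to any API.
    run_all(build_commands(MODELS, ["cora"]), dry_run=True)
```

Run it from `models/llm/llm_zeroshot` and switch to `dry_run=False` once the printed commands look right.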

- GIANT

Because of package conflicts and version constraints, we recommend using Docker to run GIANT. The Dockerfile is located at

models/enhancer/giant-xrt/dockerfile

After starting the Docker container, run

cd models/enhancer/giant-xrt/

bash run_all.sh

- TAPE

cd models/enhancer/TAPE/

bash run.sh

- OFA

cd models/enhancer/OneForAll/

bash run.sh

- ENGINE

- InstructGLM

- GraphText

Because of package conflicts and version constraints, we recommend using Docker to run GraphText. The Dockerfile is located at

models/predictor/GraphText/dockerfile

After starting the Docker container, run

cd models/predictor/GraphText

bash run.sh

- GraphAdapter

cd models/predictor/GraphAdapter

bash run.sh

- LLaGA

- GLEM

cd models/alignment/GLEM

bash run.sh

- Patton

bash run_pretrain.sh

bash nc_class_train.sh

bash nc_class_test.sh

We also provide separate scripts for different datasets.
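The three Patton scripts above are meant to run in sequence (pretraining, then training, then testing), so a later stage should not start if an earlier one fails. A minimal sketch of such a runner follows; `run_stages` is a hypothetical helper, and it assumes the scripts sit in the current working directory.

```python
import subprocess

# Script names in the order given above.
PATTON_STAGES = ["run_pretrain.sh", "nc_class_train.sh", "nc_class_test.sh"]


def run_stages(stages, runner=subprocess.run):
    """Run each script with bash, in order, and stop at the first failure.

    Returns the list of scripts that completed successfully.
    """
    completed = []
    for script in stages:
        result = runner(["bash", script])
        if result.returncode != 0:
            raise RuntimeError(f"{script} exited with code {result.returncode}")
        completed.append(script)
    return completed
```

Calling `run_stages(PATTON_STAGES)` from the Patton directory runs the full pipeline; the `runner` parameter exists only so the ordering logic can be exercised without launching the real scripts.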

- Zero-shot

Benchmark the LLMs (LLaMA3, GPT-3.5-turbo, GPT-4o, DeepSeek-chat)

cd models/llm

You can use your own API key for OpenAI.
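One common way to supply the key without writing it into a script is an environment variable. The sketch below assumes the variable name `OPENAI_API_KEY`, which the OpenAI Python client reads by default; how the scripts in this repository actually load the key is not stated here, so treat `load_api_key` as a hypothetical helper rather than the repository's mechanism.

```python
import os


def load_api_key(env_var="OPENAI_API_KEY", environ=None):
    """Return the API key from the environment, or raise if it is missing.

    Assumption: the key is exported under env_var before the run starts.
    """
    environ = os.environ if environ is None else environ
    key = environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set {env_var} before running the zero-shot benchmark.")
    return key
```

Failing early with a clear message avoids discovering a missing key only after a dataset has been loaded and the first request is sent.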

- OFA

cd models/enhancer/OneForAll/

bash run_zeroshot.sh

- ZeroG

cd models/enhancer/ZeroG/

bash run.sh

- GraphGPT

cd models/predictor/GraphGPT

bash ./scripts/eval_script/graphgpt_eval.sh

🔥 A Survey of Graph Meets Large Language Model: Progress and Future Directions (IJCAI'24)

🔥 ZeroG: Investigating Cross-dataset Zero-shot Transferability in Graphs (KDD'24)

We thank the authors of all the works we cite for their solid work and clear code organization! The original versions of the GraphLLM methods are listed below:

Alignment:

GLEM:

- (2022.10) [ICLR' 2023] Learning on Large-scale Text-attributed Graphs via Variational Inference [Paper | Code]

Patton:

Enhancer:

ENGINE:

- (2024.01) [IJCAI' 2024] Efficient Tuning and Inference for Large Language Models on Textual Graphs [Paper]

GIANT:

- (2022.03) [ICLR' 2022] Node Feature Extraction by Self-Supervised Multi-scale Neighborhood Prediction [Paper | Code]

OFA:

- (2023.09) [ICLR' 2024] One for All: Towards Training One Graph Model for All Classification Tasks [Paper | Code]

TAPE:

- (2023.05) [ICLR' 2024] Harnessing Explanations: LLM-to-LM Interpreter for Enhanced Text-Attributed Graph Representation Learning [Paper | Code]

ZeroG:

- (2024.02) [KDD' 2024] ZeroG: Investigating Cross-dataset Zero-shot Transferability in Graphs [Paper | Code]

Predictor:

GraphAdapter:

- (2024.02) [WWW' 2024] Can GNN be Good Adapter for LLMs? [Paper]

GraphGPT:

GraphText:

InstructGLM:

LLaGA:

$CODE_DIR
├── datasets
└── models
    ├── alignment
    │   ├── GLEM
    │   └── Patton
    ├── enhancer
    │   ├── ENGINE
    │   ├── giant-xrt
    │   ├── OneForAll
    │   ├── TAPE
    │   └── ZeroG
    ├── gnn
    ├── llm
    │   ├── deepseek-chat
    │   ├── gpt-3.5-turbo
    │   ├── gpt-4o
    │   └── llama3-70b
    └── predictor
        ├── GraphAdapter
        ├── GraphGPT
        ├── GraphText
        ├── InstructGLM
        └── LLaGA
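After cloning the code and downloading the datasets, a quick check that the layout above is in place can catch a misplaced folder before any run. This is a minimal sketch: `missing_dirs` is a hypothetical helper, and the expected paths are copied from the tree above, which may not list every subfolder the repository contains.

```python
from pathlib import Path

# Directories taken from the tree above, relative to $CODE_DIR.
EXPECTED_DIRS = [
    "datasets",
    "models/alignment/GLEM",
    "models/alignment/Patton",
    "models/enhancer/ENGINE",
    "models/enhancer/giant-xrt",
    "models/enhancer/OneForAll",
    "models/enhancer/TAPE",
    "models/enhancer/ZeroG",
    "models/gnn",
    "models/llm",
    "models/predictor/GraphAdapter",
    "models/predictor/GraphGPT",
    "models/predictor/GraphText",
    "models/predictor/InstructGLM",
    "models/predictor/LLaGA",
]


def missing_dirs(code_dir, expected=EXPECTED_DIRS):
    """Return the expected directories that do not exist under code_dir."""
    root = Path(code_dir)
    return [rel for rel in expected if not (root / rel).is_dir()]
```

For example, `missing_dirs(".")` run from the repository root returns an empty list when every listed folder is present, and otherwise names the ones that are absent.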