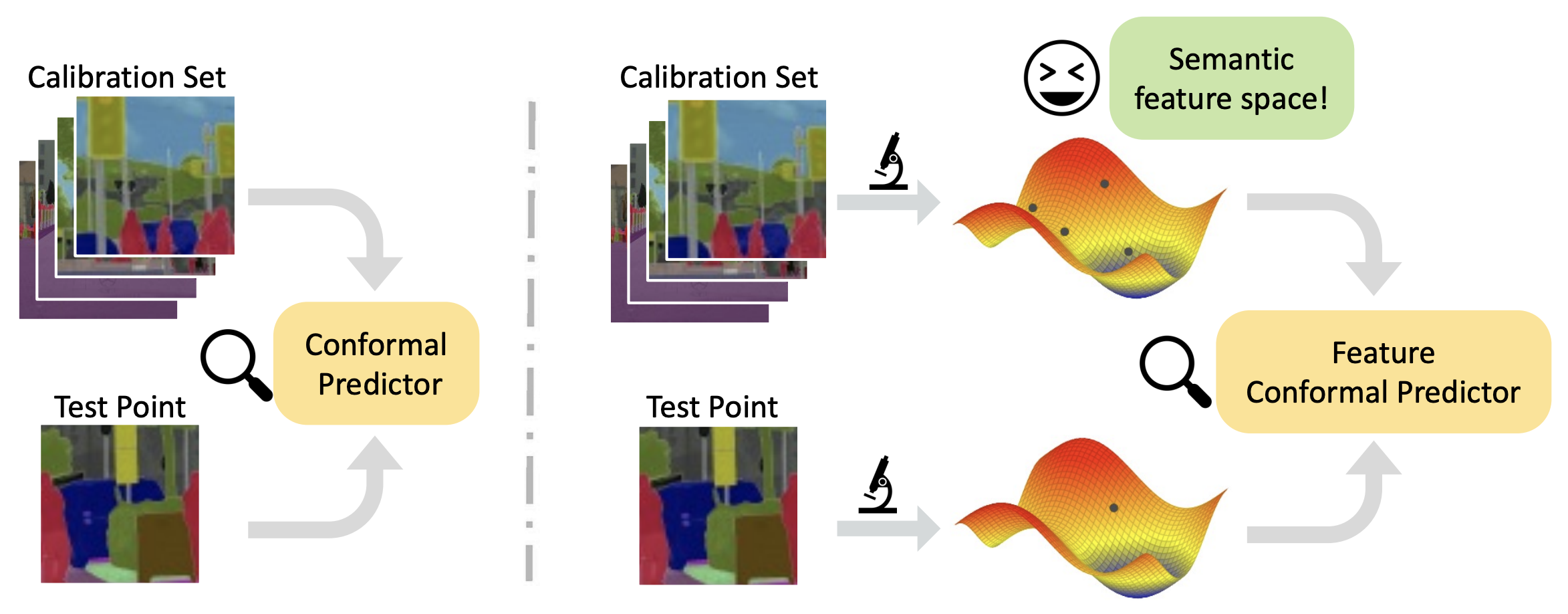

Predictive Inference with Feature Conformal Prediction (Accepted by ICLR 2023)

Jiaye Teng*, Chuan Wen*, Dinghuai Zhang*, Yoshua Bengio, Yang Gao, Yang Yuan

We use Python 3.7; the remaining dependencies can be installed with:

pip install -r requirements.txt

The 1-dim datasets include Community, Facebook1, Facebook2, Meps19, Meps20, Meps21, Star, Blog, Bio and Bike, which have been placed in ./datasets/.

The results of FeatureCP on these datasets can be obtained by the following commands:

python main.py --data com --no-resume --seed 0 1 2 3 4

python main.py --data fb1 --no-resume --seed 0 1 2 3 4

python main.py --data fb2 --no-resume --seed 0 1 2 3 4

python main.py --data meps19 --no-resume --seed 0 1 2 3 4

python main.py --data meps20 --no-resume --seed 0 1 2 3 4

python main.py --data meps21 --no-resume --seed 0 1 2 3 4

python main.py --data star --no-resume --seed 0 1 2 3 4

python main.py --data blog --no-resume --seed 0 1 2 3 4

python main.py --data bio --no-resume --feat_lr 0.001 --seed 0 1 2 3 4

python main.py --data bike --no-resume --feat_lr 0.001 --seed 0 1 2 3 4

python main.py --data x100-y10-reg --no-resume --feat_lr 0.001 --feat_step 80 --seed 0 1 2 3 4
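The commands above differ only in the dataset name and a few extra flags, so they can be generated from a small table. The following is a minimal sketch of that idea, not part of the repository: the names `EXTRA_FLAGS`, `SEEDS` and `build_command` are hypothetical, while the flag values are copied from the commands listed above. It only prints the commands (a dry run).

```python
# Hypothetical helper, not shipped with the repository.
# Extra flags per dataset, copied from the commands listed in this README.
EXTRA_FLAGS = {
    "com": [],
    "fb1": [],
    "fb2": [],
    "meps19": [],
    "meps20": [],
    "meps21": [],
    "star": [],
    "blog": [],
    "bio": ["--feat_lr", "0.001"],
    "bike": ["--feat_lr", "0.001"],
    "x100-y10-reg": ["--feat_lr", "0.001", "--feat_step", "80"],
}

SEEDS = [0, 1, 2, 3, 4]


def build_command(data, seeds=SEEDS):
    """Return the argument list for one main.py run on the given dataset."""
    cmd = ["python", "main.py", "--data", data, "--no-resume"]
    cmd += EXTRA_FLAGS[data]
    cmd += ["--seed"] + [str(s) for s in seeds]
    return cmd


if __name__ == "__main__":
    # Dry run: print each command instead of executing it.
    for name in EXTRA_FLAGS:
        print(" ".join(build_command(name)))
```

To actually launch the runs, each list returned by `build_command` could be passed to `subprocess.run(cmd, check=True)` from the repository root.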

Cityscapes is a commonly-used semantic segmentation dataset. Please download it from the official website.

The FCN model must first be trained on Cityscapes using the codebase in ./FCN-Trainer/.

We also provide the checkpoint trained by us in this link.

Either train the FCN yourself or download our checkpoint, then move the checkpoint into ./ckpt/cityscapes/.

Note: As discussed in Section 5.1 and Appendix B.1 in our paper, we transform the original pixel-wise classification problem into a high-dimensional pixel-wise regression problem. Specifically, we convert the label space from output = torch.exp(-torch.exp(output)).
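To make the expression in the note concrete, here is a small scalar sketch of the map exp(-exp(z)) and its mathematical inverse log(-log(y)). It uses the standard `math` module rather than `torch` so it runs without extra dependencies. The function names are hypothetical, and treating log(-log(y)) as the regression target is an assumption on our part; see Appendix B.1 of the paper for the exact definition.

```python
import math


def to_unit_interval(z):
    """Map a real-valued output z into (0, 1) via exp(-exp(z))."""
    return math.exp(-math.exp(z))


def to_real_line(y):
    """Inverse of to_unit_interval: log(-log(y)) for y strictly in (0, 1)."""
    if not 0.0 < y < 1.0:
        raise ValueError("y must lie strictly between 0 and 1")
    return math.log(-math.log(y))


if __name__ == "__main__":
    for z in (-2.0, 0.0, 1.5):
        y = to_unit_interval(z)
        # The round trip should recover z up to floating-point error.
        print(z, y, to_real_line(y))
```

Note that the map is decreasing: a larger real-valued output corresponds to a smaller value in (0, 1).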

The command to execute the Cityscapes experiment is:

export CITYSCAPES_PATH='your path to the cityscapes'

python main_fcn.py --device 0 --data cityscapes --dataset-dir $CITYSCAPES_PATH --batch_size 20 --feat_step 10 --feat_lr 0.1 --workers 10 --seed 0 1 2 3 4

If you want to save the visualization of the estimated length, as in Figure 3, add --visualize to the command.
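For scripted runs, the Cityscapes command can be assembled in Python after checking that CITYSCAPES_PATH is set. This is only a sketch under stated assumptions: `dataset_dir_from_env` and `build_cityscapes_command` are hypothetical helpers that do not exist in the repository, and the flag values are copied from the command above.

```python
import os


def dataset_dir_from_env(env=None):
    """Read CITYSCAPES_PATH from the environment, failing early if it is unset."""
    env = os.environ if env is None else env
    path = env.get("CITYSCAPES_PATH", "")
    if not path:
        raise RuntimeError("CITYSCAPES_PATH is not set")
    return path


def build_cityscapes_command(dataset_dir, device=0, seeds=(0, 1, 2, 3, 4),
                             visualize=False):
    """Return the argument list for one main_fcn.py run."""
    cmd = [
        "python", "main_fcn.py",
        "--device", str(device),
        "--data", "cityscapes",
        "--dataset-dir", dataset_dir,
        "--batch_size", "20",
        "--feat_step", "10",
        "--feat_lr", "0.1",
        "--workers", "10",
        "--seed",
    ] + [str(s) for s in seeds]
    if visualize:
        # Optional flag described in this README for saving visualizations.
        cmd.append("--visualize")
    return cmd
```

A typical use would be `subprocess.run(build_cityscapes_command(dataset_dir_from_env(), visualize=True), check=True)`, run from the repository root.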

If you find our work helpful, please cite our paper:

@inproceedings{teng2023predictive,
  title={Predictive Inference with Feature Conformal Prediction},
  author={Jiaye Teng and Chuan Wen and Dinghuai Zhang and Yoshua Bengio and Yang Gao and Yang Yuan},
  booktitle={The Eleventh International Conference on Learning Representations},
  year={2023}
}