The Edge TPU comes with great samples.

However, the samples cover only image classification (slim) and object detection (Object Detection API), and they do not show how to build and train your own model.

This sample shows how to take your own model from training through conversion to an Edge TPU model.

The model uses TensorFlow's MNIST Network sample (fully_connected_feed).

- Coral USB Accelerator (the Dev Board may also work)

- TensorFlow (for training only)

- Edge TPU Python library (Get started with the USB Accelerator)

The training sample is a Jupyter notebook.

This sample is based on the TensorFlow tutorial (MNIST fully_connected_feed.py).

Open train/MNIST_fully_connected_feed.ipynb and Run All.

When training is complete, the model files are generated in the following directory:

train

+ logs

| + GraphDef

+ checkpoint

+ model.ckpt-xxxx.data-00000-of-00001

+ model.ckpt-xxxx.index

+ model.ckpt-xxxx.meta

+ mnist_fully_connected_feed_graph.pb

model.ckpt is a checkpoint file.

"xxxx" is the number of training steps at which the checkpoint was saved.

With the default settings, the maximum is 4999.
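
As a small illustration of how the step number can be read back from these file names, the sketch below picks the checkpoint prefix with the highest step. The helper name latest_checkpoint_prefix and the example file list are hypothetical and not part of this repository; if TensorFlow is available, tf.train.latest_checkpoint is the usual way to get the same answer.

```python
import re

# Hypothetical helper, not part of this repository: it looks only at
# file names of the form "model.ckpt-<step>.index".
_INDEX_PATTERN = re.compile(r"^(model\.ckpt-(\d+))\.index$")


def latest_checkpoint_prefix(file_names):
    """Return (prefix, step) for the highest step, or None if nothing matches."""
    best = None
    for name in file_names:
        match = _INDEX_PATTERN.match(name)
        if match is None:
            continue
        prefix, step = match.group(1), int(match.group(2))
        if best is None or step > best[1]:
            best = (prefix, step)
    return best


# Example file names following the layout above (the step values are illustrative).
names = [
    "checkpoint",
    "model.ckpt-999.index",
    "model.ckpt-4999.data-00000-of-00001",
    "model.ckpt-4999.index",
    "model.ckpt-4999.meta",
    "mnist_fully_connected_feed_graph.pb",
]
print(latest_checkpoint_prefix(names))
```

In practice the list would come from something like os.listdir on the logs directory.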

mnist_fully_connected_feed_graph.pb is a GraphDef file.

The GraphDef directory contains the structure of the model.

You can inspect it with TensorBoard.

(Change into that directory and run tensorboard --logdir=./)

"Quantization-aware training" is required to generate an Edge TPU model.

See Quantization-aware training for details.
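
To give a feel for what quantization-aware training simulates, the following minimal sketch rounds a float value to one of 256 levels inside a given range and converts it back to a float, which is the "fake quantization" idea applied in the forward pass during training. It is an illustration in plain Python with a hypothetical function name; it is not the TensorFlow implementation and not the code used in the notebook.

```python
def fake_quantize(value, range_min, range_max, num_bits=8):
    """Quantize value to 2**num_bits levels in [range_min, range_max], then dequantize."""
    steps = 2 ** num_bits - 1                      # 255 steps for 8 bits
    scale = (range_max - range_min) / steps        # width of one step
    clamped = min(max(value, range_min), range_max)
    quantized = round((clamped - range_min) / scale)  # integer level, 0..steps
    return range_min + quantized * scale


# The result differs from the input by at most half a step (inside the range).
original = 0.1234
simulated = fake_quantize(original, 0.0, 1.0)
print(original, simulated, abs(original - simulated))
```

Training with this rounding error already present lets the weights adapt to it, so accuracy is less likely to drop when the model is later converted to 8-bit.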

Freezing the graph converts the checkpoint variables into Const ops in a standalone GraphDef file.

Use the freeze_graph command.

The freeze_mnist_fully_connected_feed_graph.pb file is generated in the logs directory.

$ freeze_graph \

--input_graph=./logs/mnist_fully_connected_feed_graph.pb \

--input_checkpoint=./logs/model.ckpt-4999 \

--input_binary=true \

--output_graph=./logs/freeze_mnist_fully_connected_feed_graph.pb \

--output_node_names=softmax_tensor

Generate a TF-Lite model using the tflite_convert command.

The output_tflite_graph.tflite file is generated in the logs directory.

$ tflite_convert \

--output_file=./logs/output_tflite_graph.tflite \

--graph_def_file=./logs/freeze_mnist_fully_connected_feed_graph.pb \

--inference_type=QUANTIZED_UINT8 \

--input_arrays=input_tensor \

--output_arrays=softmax_tensor \

--mean_values=0 \

--std_dev_values=255 \

--input_shapes="1,784"
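
The --mean_values and --std_dev_values flags describe how the uint8 input relates to the float input the model was trained on: real_value = (quantized_value - mean_value) / std_dev_value. The sketch below works through that arithmetic for the values used above; the function names are hypothetical, and it assumes the model was trained on pixel values scaled to the range 0.0 to 1.0.

```python
def dequantize_input(quantized_value, mean_value=0.0, std_dev_value=255.0):
    """Map a uint8 input value to the float value the model was trained on."""
    return (quantized_value - mean_value) / std_dev_value


def quantize_input(real_value, mean_value=0.0, std_dev_value=255.0):
    """Inverse mapping: float input to the nearest uint8 value, clamped to 0..255."""
    quantized = round(real_value * std_dev_value + mean_value)
    return min(max(quantized, 0), 255)


# With mean 0 and std_dev 255, raw pixel values 0..255 cover 0.0..1.0.
for pixel in (0, 51, 255):
    print(pixel, dequantize_input(pixel))
```

This is why raw 8-bit MNIST pixels can be fed to the converted model without further scaling, under the assumption stated above.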

Compile the TensorFlow Lite model for compatibility with the Edge TPU.

See Edge TPU online compiler for details.

First, copy the Edge TPU model to the classify directory.

The directory structure is as follows.

classify

+ classify_mnist.py

+ mnist_data.py

+ xxxx_edgetpu.tflite

Note: "xxxx_edgetpu.tflite" is the Edge TPU model. This repository includes "mnist_tflite_edgetpu.tflite".

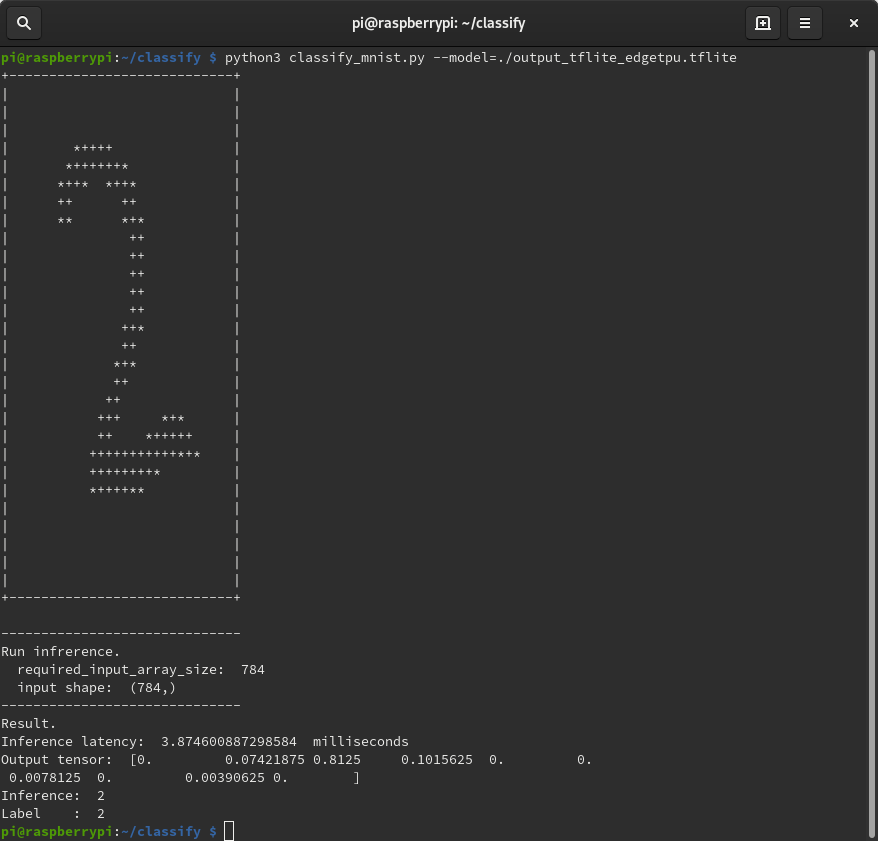

Then, classify MNIST data:

$ python3 classify_mnist.py --model=./mnist_tflite_edgetpu.tflite

The classification result is displayed on the console, together with the image, as follows.

"Inference latency:" and "Output tensor:" are the return values of the RunInference method of edgetpu.basic.basic_engine.BasicEngine.

(See the Python API documentation for details.)

"Inference" is the index of the largest value in "Output tensor", that is, the predicted digit.

"Label" is the correct label.

Note: Full command-line options:

$ python3 classify_mnist.py -h
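
To make the console fields described above concrete, here is a minimal sketch of how "Inference" can be derived from the output tensor and compared with "Label". The function name interpret_result is hypothetical and is not taken from classify_mnist.py; it assumes the output tensor holds one score per digit, 0 to 9, and the sample values are made up for illustration.

```python
def interpret_result(latency_ms, output_tensor, label):
    """Summarize one (latency, output tensor) pair, as returned by RunInference."""
    scores = list(output_tensor)
    # "Inference" is the index of the highest score, i.e. the predicted digit.
    inference = max(range(len(scores)), key=lambda i: scores[i])
    return {
        "latency_ms": latency_ms,
        "inference": inference,
        "label": label,
        "correct": inference == label,
    }


# Made-up values for illustration only, not real measurements.
example_scores = [0.0, 0.0, 0.01, 0.0, 0.0, 0.0, 0.0, 0.98, 0.0, 0.01]
result = interpret_result(0.5, example_scores, label=7)
print(result)
```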

If you want to use the TF-Lite API, take a look at the notebook sample "Classify MNIST using TF-Lite Model".

For more information, please refer to my blog.

(Coming soon. Sorry, Japanese only.)