The primary objective of this project is to develop and implement a Long Short-Term Memory (LSTM) deep learning model for quantitative trading. This model aims to leverage historical and real-time financial data to predict stock prices accurately, thereby enabling the execution of profitable trading strategies. The project will focus on integrating multiple data sources, cleaning and processing the data, training the LSTM model, and deploying it in a real-time trading environment.

1. Create Virtual Environment (Python 3.8)

To create a new virtual environment, run the following commands in a terminal opened in the project directory:

- In Windows, create the environment with:

python -m venv venv

- Then activate it with:

venv\Scripts\activate

- In macOS or Linux, create the environment with:

python3 -m venv venv

- Then activate it with:

source venv/bin/activate

Before running the project, make sure its virtual environment is activated in the project directory:

- In Windows:

venv\Scripts\activate

- In macOS or Linux:

source venv/bin/activate
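To confirm that the interpreter in use really belongs to an activated environment, a small check like the following can help. It is a minimal sketch that relies only on the standard library; the helper name `in_virtualenv` is hypothetical and not part of this project.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the running interpreter belongs to a venv."""
    # Inside a venv, sys.prefix points at the environment directory while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("virtual environment active:", in_virtualenv())
    print("interpreter prefix:", sys.prefix)
```

If the first line prints `False`, the activation command for your platform has not been run in the current terminal session.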

2. Install Dependencies

Install the dependencies needed for the project with either of the following commands:

pip install NolanMQuantTradingEnvSetUp

or

pip install -r requirements.txt

Setup guides for the other tools used by the project:

- Spark: https://medium.com/@ansabiqbal/setting-up-apache-spark-pyspark-on-windows-11-machine-e16b7382624a

- Kafka: https://github.com/NolanMM/Notebook-Practice-NolanM/blob/master/Set Up Tool For Data/Windows11/Kafka/Set Up Kafka Instruction.ipynb

- PostgreSQL: https://medium.com/@Abhisek_Sahu/installing-postgresql-on-windows-for-sql-practices-671212203e9b

- PyTorch: https://pytorch.org/get-started/locally/
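After installation, one way to see which Python packages are importable from the active environment is sketched below. The helper `check_modules` is hypothetical, and the import names in the example (`torch`, `pyspark`, `streamlit`) are assumptions based on the tools listed here, not a copy of the project's requirements.txt.

```python
from importlib.util import find_spec
from typing import Dict, Iterable


def check_modules(names: Iterable[str]) -> Dict[str, bool]:
    """Map each top-level module name to whether it can be imported."""
    # find_spec returns None for a top-level module that is not installed,
    # and it does not actually import the module.
    return {name: find_spec(name) is not None for name in names}


if __name__ == "__main__":
    # Assumed import names; adjust them to match requirements.txt.
    for name, available in check_modules(["torch", "pyspark", "streamlit"]).items():
        status = "ok" if available else "missing"
        print(f"{name}: {status}")
```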

Details

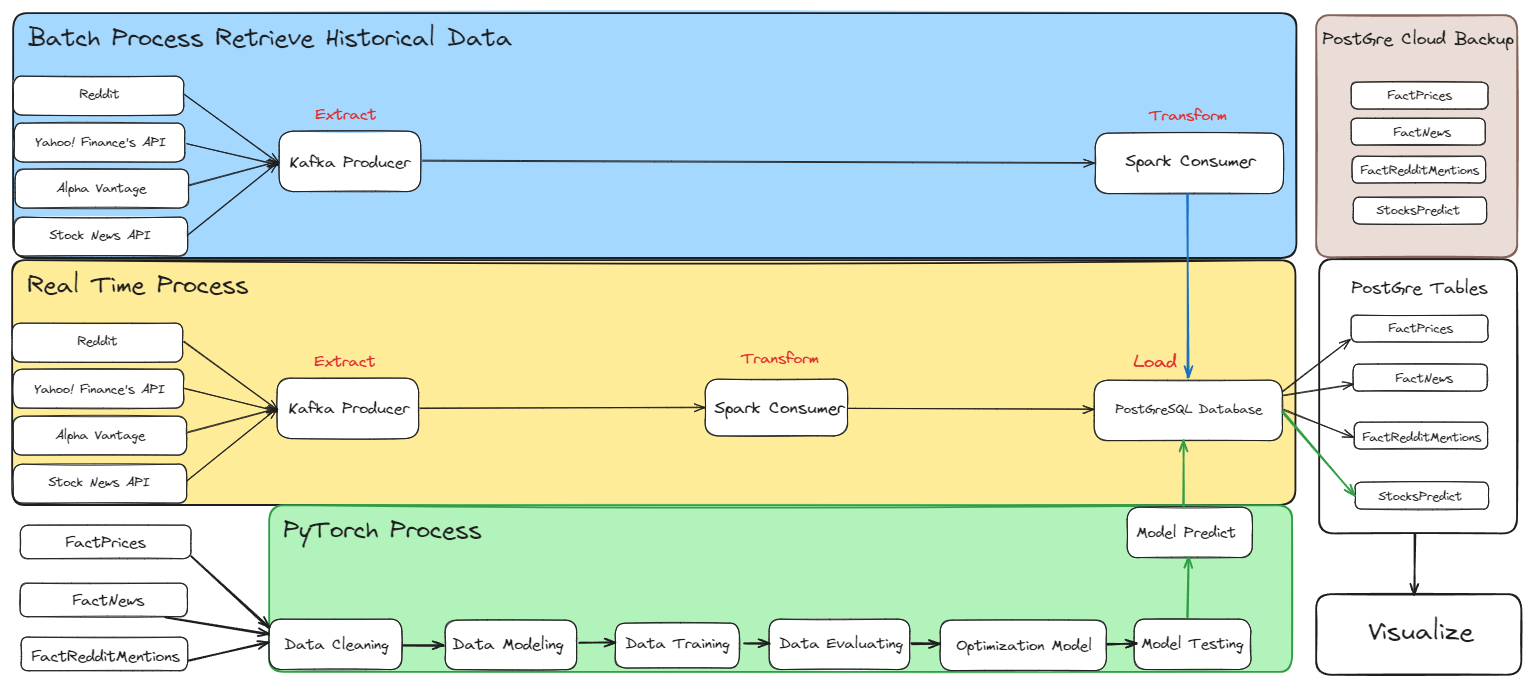

- Reddit: For extracting sentiment data related to stock mentions.

- Yahoo Finance API: For obtaining historical and real-time stock prices and financial data.

- Alpha Vantage: For additional financial data and technical indicators.

- Stock News API: For news articles and headlines related to stocks.

- Kafka: Used as a producer to extract data from various sources.

- Apache Spark: Used for data transformation and processing, both in batch and real-time.

- PostgreSQL Database: Centralized storage for processed data including stock prices, news, Reddit mentions, and predictions.

- PyTorch: For building, training, and evaluating the LSTM deep learning model.

- Streamlit: To visualize model predictions, trading performance, and generate interactive reports.

- PostgreSQL Cloud Backup: For regular data and model backups to ensure data integrity and availability.

- System Monitoring: Continuous monitoring and maintenance of the system to handle issues and improve performance.
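Before an LSTM can be trained on price history, the series is typically cut into fixed-length input sequences, each paired with the value that follows it. The sketch below illustrates that step in plain Python. It is an assumption about how such a pipeline could prepare data, not the project's actual preprocessing; the function name `make_windows` and the `lookback` parameter are hypothetical.

```python
from typing import List, Sequence, Tuple


def make_windows(
    closes: Sequence[float], lookback: int
) -> Tuple[List[List[float]], List[float]]:
    """Split a price series into (input window, next value) training pairs."""
    if lookback < 1:
        raise ValueError("lookback must be at least 1")
    inputs: List[List[float]] = []
    targets: List[float] = []
    # Each window holds `lookback` consecutive prices; the target is the
    # price immediately after the window.
    for start in range(len(closes) - lookback):
        inputs.append(list(closes[start:start + lookback]))
        targets.append(closes[start + lookback])
    return inputs, targets


if __name__ == "__main__":
    # Placeholder values, not real market data.
    windows, next_values = make_windows([1.0, 2.0, 3.0, 4.0, 5.0], lookback=3)
    print(windows)      # [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0]]
    print(next_values)  # [4.0, 5.0]
```

The resulting lists can be converted to tensors of shape (samples, lookback, features) before being passed to a sequence model.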

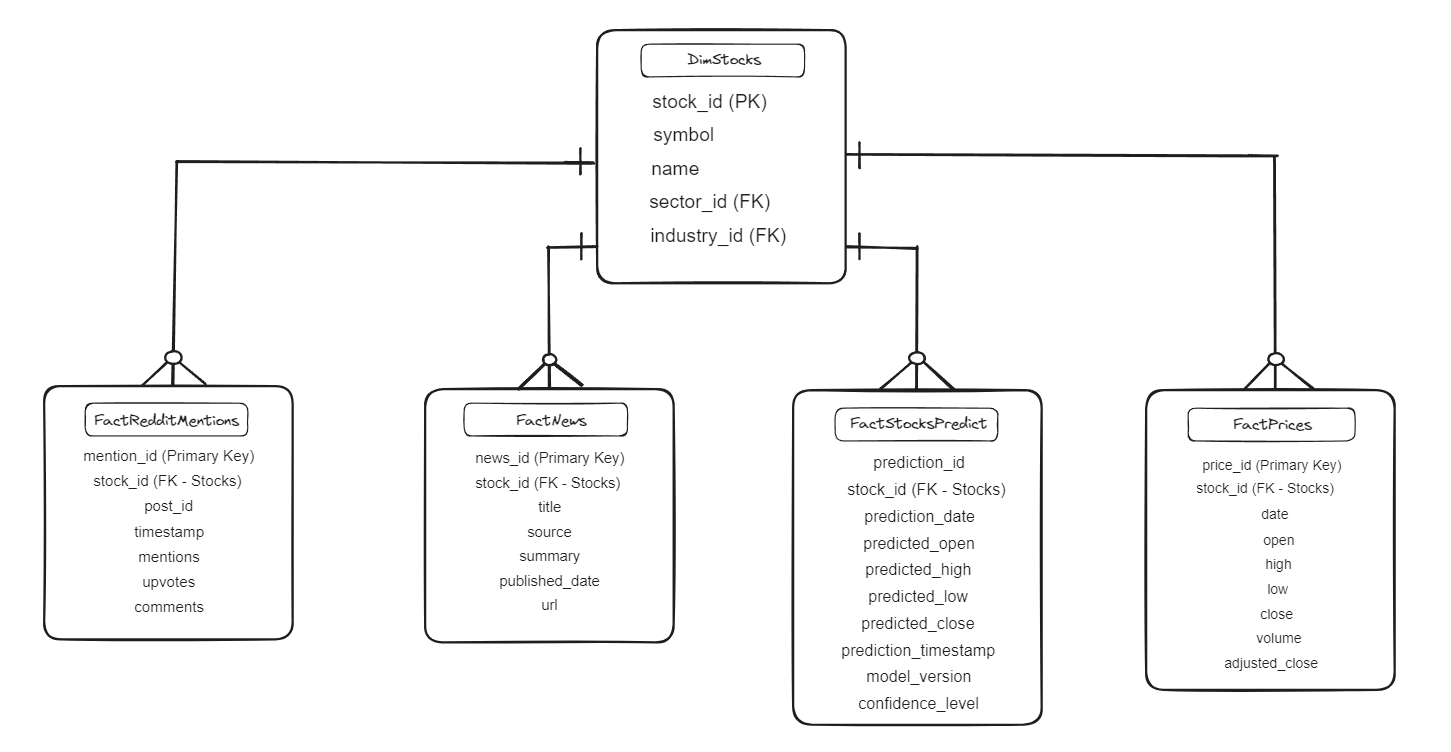

The database follows a star schema design and includes the following tables:

- DimStocks: The central dimension table, containing information about each stock. It includes the following fields:

  - stock_id (PK): Primary Key, a unique identifier for each stock.

  - symbol: The stock ticker symbol.

  - name: The full name of the stock.

  - sector_id (FK): Foreign Key, links to the sector dimension.

  - industry_id (FK): Foreign Key, links to the industry dimension.

- FactPrices: Contains detailed pricing information for each stock.

  - price_id (Primary Key): Unique identifier for each price entry.

  - stock_id (FK - Stocks): Foreign Key, links to the central stock table.

  - date: The date of the price record.

  - open, high, low, close: The opening, highest, lowest, and closing prices of the stock.

  - volume: The trading volume of the stock.

  - adjusted_close: The adjusted closing price of the stock.

- FactNews: Contains news articles and headlines related to stocks.

  - news_id (Primary Key): Unique identifier for each news entry.

  - stock_id (FK - Stocks): Foreign Key, links to the central stock table.

  - title: The title of the news article.

  - source: The source of the news article.

  - summary: A summary of the news article.

  - published_date: The publication date of the news article.

  - url: The URL link to the full news article.

- FactRedditMentions: Contains data from Reddit mentions related to stocks.

  - mention_id (Primary Key): Unique identifier for each Reddit mention.

  - stock_id (FK - Stocks): Foreign Key, links to the central stock table.

  - post_id: The ID of the Reddit post.

  - timestamp: The timestamp of the Reddit mention.

  - mentions: The number of times the stock is mentioned in the post.

  - upvotes: The number of upvotes the Reddit post received.

  - comments: The number of comments on the Reddit post.

- FactStocksPredict: Contains predicted stock prices generated by the LSTM model.

  - prediction_id (Primary Key): Unique identifier for each prediction entry.

  - stock_id (FK - Stocks): Foreign Key, links to the central stock table.

  - prediction_date: The date of the prediction.

  - predicted_open, predicted_high, predicted_low, predicted_close: Predicted opening, highest, lowest, and closing prices of the stock.

  - prediction_timestamp: The timestamp when the prediction was made.

  - model_version: The version of the model used for the prediction.

  - confidence_level: The confidence level of the prediction.

- Central Table: The DimStocks dimension table is at the center, and every fact table (FactPrices, FactNews, FactRedditMentions, FactStocksPredict) is connected to it through its stock_id foreign key.
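The tables described above could be declared roughly as follows. This is a minimal sketch, not the project's actual DDL: it uses Python's built-in `sqlite3` module instead of PostgreSQL so that it runs without a database server, every column type is an assumption, and the helpers `create_schema` and `latest_close` as well as the `DEMO` sample row are hypothetical.

```python
import sqlite3
from typing import Optional

# Column names come from the table descriptions; every SQL type is an assumption.
SCHEMA = """
CREATE TABLE DimStocks (
    stock_id INTEGER PRIMARY KEY,
    symbol TEXT NOT NULL,
    name TEXT,
    sector_id INTEGER,    -- would reference a sector dimension (not described here)
    industry_id INTEGER   -- would reference an industry dimension (not described here)
);
CREATE TABLE FactPrices (
    price_id INTEGER PRIMARY KEY,
    stock_id INTEGER NOT NULL REFERENCES DimStocks(stock_id),
    date TEXT NOT NULL,
    open REAL, high REAL, low REAL, close REAL,
    volume INTEGER,
    adjusted_close REAL
);
CREATE TABLE FactNews (
    news_id INTEGER PRIMARY KEY,
    stock_id INTEGER NOT NULL REFERENCES DimStocks(stock_id),
    title TEXT, source TEXT, summary TEXT,
    published_date TEXT,
    url TEXT
);
CREATE TABLE FactRedditMentions (
    mention_id INTEGER PRIMARY KEY,
    stock_id INTEGER NOT NULL REFERENCES DimStocks(stock_id),
    post_id TEXT,
    timestamp TEXT,
    mentions INTEGER, upvotes INTEGER, comments INTEGER
);
CREATE TABLE FactStocksPredict (
    prediction_id INTEGER PRIMARY KEY,
    stock_id INTEGER NOT NULL REFERENCES DimStocks(stock_id),
    prediction_date TEXT,
    predicted_open REAL, predicted_high REAL,
    predicted_low REAL, predicted_close REAL,
    prediction_timestamp TEXT,
    model_version TEXT,
    confidence_level REAL
);
"""


def create_schema(conn: sqlite3.Connection) -> None:
    """Create all five tables and turn on foreign-key enforcement."""
    # SQLite ignores foreign keys unless this pragma is enabled per connection.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)


def latest_close(conn: sqlite3.Connection, symbol: str) -> Optional[float]:
    """Return the most recent closing price for a ticker, or None."""
    row = conn.execute(
        """
        SELECT p.close
        FROM FactPrices AS p
        JOIN DimStocks AS s ON s.stock_id = p.stock_id
        WHERE s.symbol = ?
        ORDER BY p.date DESC
        LIMIT 1
        """,
        (symbol,),
    ).fetchone()
    return None if row is None else row[0]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_schema(conn)
    # Placeholder rows for illustration only, not real market data.
    conn.execute(
        "INSERT INTO DimStocks (stock_id, symbol, name) VALUES (1, 'DEMO', 'Demo Corp')"
    )
    conn.execute(
        "INSERT INTO FactPrices (stock_id, date, close) VALUES (1, '2024-01-02', 10.5)"
    )
    print(latest_close(conn, "DEMO"))
```

Because every fact table carries a `stock_id` foreign key, any query for one ticker follows the same pattern: join the fact table to DimStocks and filter on `symbol`.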