This is an unofficial PyTorch implementation of DocParser.

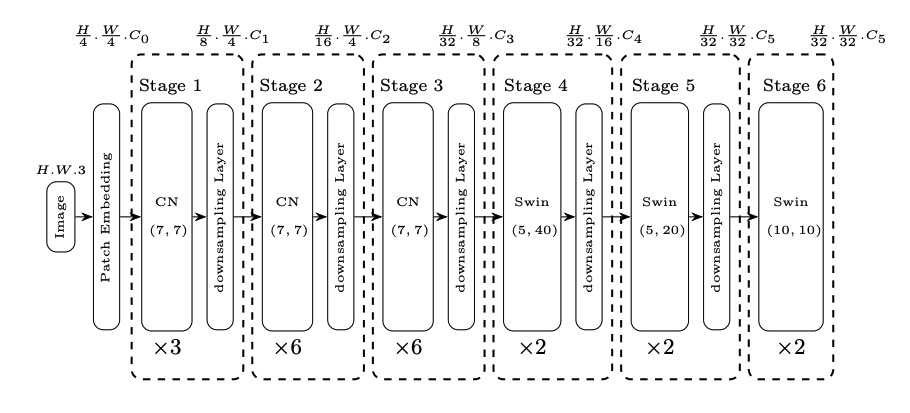

The architecture of DocParser's Encoder

- Sep 1st: released the ConvNeXt weights here. Please note that these weights were trained with a CTC head on an OCR task and can only be used to initialize the ConvNeXt part of DocParser during pretraining. They are NOT intended for fine-tuning on any downstream task.

- July 15th: updated the training scripts for the Masked Document Reading task and the model architecture.

`pip install -r requirements.txt`

The dataset should be processed into the following format:

    {
      "filepath": "path/to/image/folder", // path to image folder
      "filename": "file_name", // file name
      "extract_info": {
        "ocr_info": [
          {
            "chunk": "text1"
          },
          {
            "chunk": "text2"
          },
          {
            "chunk": "text3"
          }
        ]
      } // a list of ocr info of filepath/filename
    }

You can start training from train/train_experiment.py, or by running:

`python train/train_experiment.py --config_file config/base.yaml`

The training script also supports DDP through huggingface/accelerate:

`accelerate launch train/train_experiment.py --config_file config/base.yaml --use_accelerate True`

The training script currently implements only the Masked Document Reading step described in the paper. The decoder weights, tokenizer, and processor are borrowed from naver-clova-ix/donut-base.
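For orientation, the borrowed Donut components can be loaded with the Hugging Face `transformers` library roughly as follows. This is a minimal sketch, not the loading code used in this repository: `load_donut_components` is a hypothetical helper name, and the training script may wire these pieces together differently.

```python
def load_donut_components(checkpoint: str = "naver-clova-ix/donut-base"):
    """Return (processor, tokenizer, decoder) from a Donut checkpoint.

    Hypothetical helper for illustration; not part of this repository.
    """
    # Imported lazily so that defining this helper does not require
    # transformers to be installed.
    from transformers import DonutProcessor, VisionEncoderDecoderModel

    # The processor bundles the image processor and the tokenizer.
    processor = DonutProcessor.from_pretrained(checkpoint)
    # Donut is published as an encoder-decoder model; only its decoder
    # is of interest here.
    donut = VisionEncoderDecoderModel.from_pretrained(checkpoint)
    return processor, processor.tokenizer, donut.decoder
```

Calling the helper downloads the checkpoint from the Hugging Face Hub on first use, so it needs network access and enough disk space for the weights.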

Unfortunately, no DocParser pretraining weights are publicly available, and simply borrowing weights from Donut-base does not benefit DocParser on any downstream task. I am currently working on pretraining DocParser with the two-stage tasks described in the paper. Once both pretraining tasks are complete and the resulting model performs well, I intend to make it publicly available on the Hugging Face Hub.
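To make the dataset format described earlier concrete, here is a small sketch that builds one record and checks its layout. The helper names `make_record` and `validate_record` are hypothetical and not part of this repository. How records are stored on disk (one file per record, a list, or JSON lines) is not specified here, so the sketch only handles a single record in memory.

```python
import json


def make_record(image_dir: str, file_name: str, chunks: list[str]) -> dict:
    """Build one dataset record in the layout shown in this README."""
    return {
        "filepath": image_dir,   # path to the image folder
        "filename": file_name,   # image file name inside that folder
        "extract_info": {
            # one {"chunk": text} object per OCR text chunk
            "ocr_info": [{"chunk": text} for text in chunks],
        },
    }


def validate_record(record: dict) -> None:
    """Raise ValueError if a record does not match the expected layout."""
    for key in ("filepath", "filename", "extract_info"):
        if key not in record:
            raise ValueError(f"missing key: {key}")
    extract_info = record["extract_info"]
    if not isinstance(extract_info, dict):
        raise ValueError("extract_info must be an object")
    ocr_info = extract_info.get("ocr_info")
    if not isinstance(ocr_info, list):
        raise ValueError("extract_info.ocr_info must be a list")
    for i, item in enumerate(ocr_info):
        if not isinstance(item, dict) or not isinstance(item.get("chunk"), str):
            raise ValueError(f"ocr_info[{i}] needs a string 'chunk' field")


record = make_record("path/to/image/folder", "file_name", ["text1", "text2", "text3"])
validate_record(record)
print(json.dumps(record, indent=2))
```

Note that `json.dumps` emits strict JSON, so the `//` comments shown in the format description are explanatory only and do not appear in the output.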