Based on kiwenlau's repo, rebuilt with Hadoop 3.3.6.

docker pull danchoi2001/hadoop:1.0

git clone https://github.com/TianHuijun/hadoop-cluster-docker.git

docker network create --driver=bridge hadoop

cd hadoop-cluster-docker

bash ./start-container.sh

output:

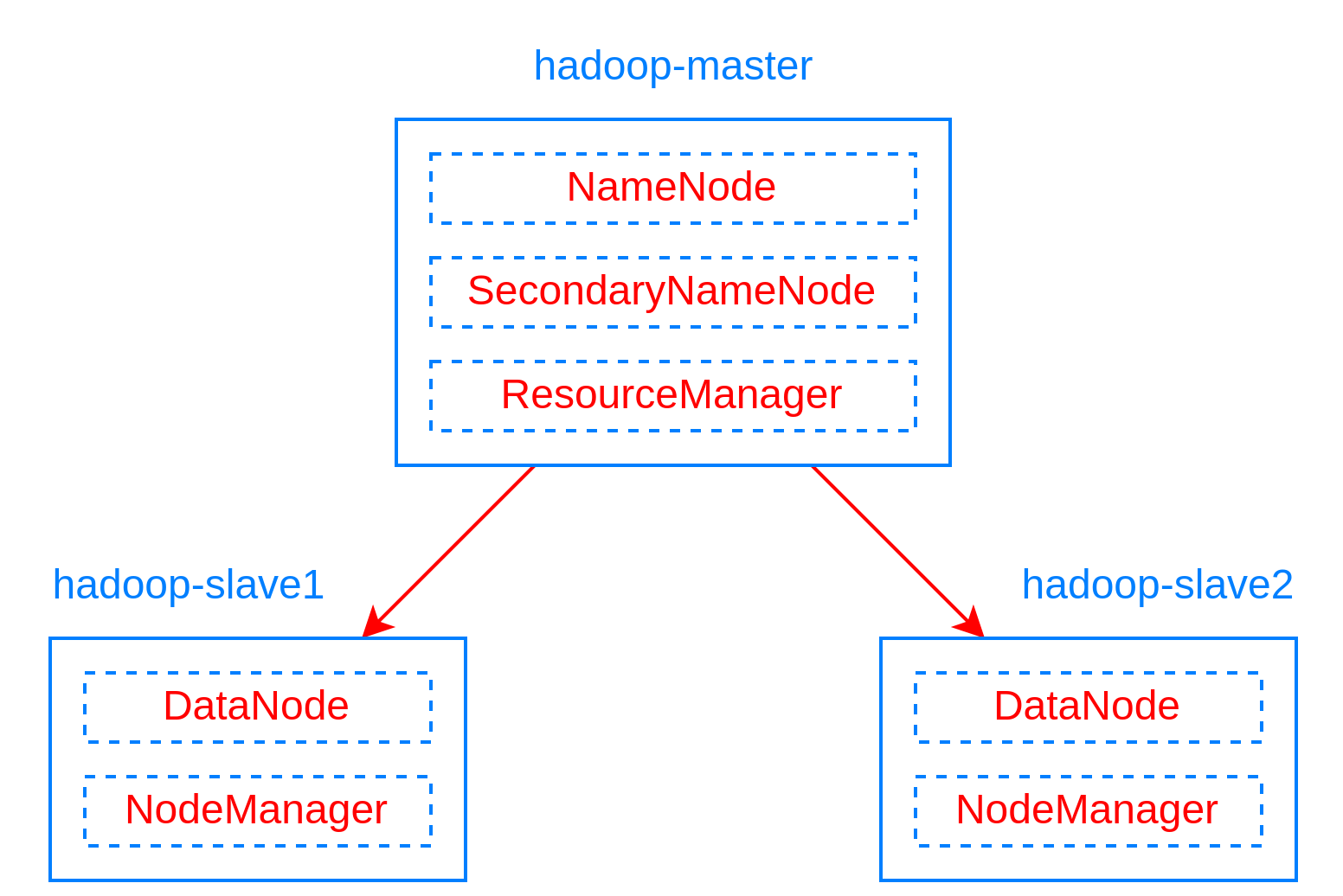

start hadoop-master container...

start hadoop-slave1 container...

start hadoop-slave2 container...

root@hadoop-master:~#

- this starts 3 containers: 1 master and 2 slaves

- you will land in the /root directory of the hadoop-master container

bash ./start-hadoop.sh

bash ./run-wordcount.sh

output:

input file1.txt:

Hello Hadoop

input file2.txt:

Hello Docker

wordcount output:

Docker 1

Hadoop 1

Hello 2
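To make the expected result concrete, the same count can be reproduced locally without Hadoop. This is only a sketch of what a word count computes, not the contents of run-wordcount.sh; the function name `wordcount` is made up for illustration.

```shell
# Hypothetical helper (not part of the repo):
# count whitespace-separated words read from stdin.
wordcount() {
  awk '{ for (i = 1; i <= NF; i++) count[$i]++ }
       END { for (w in count) print w, count[w] }' | LC_ALL=C sort
}

# Same two input lines as file1.txt and file2.txt above.
printf 'Hello Hadoop\nHello Docker\n' | wordcount
```

If the output of run-wordcount.sh differs from this local result, the cluster is probably not fully started yet.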

Do steps 1~3 as in section A.

bash ./resize-cluster.sh 5

- specify a parameter greater than 1 (2, 3, ...)

- this script just rebuilds the hadoop image with a different slaves file, which specifies the names of all slave nodes
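As a rough illustration of what "a different slaves file" means, the sketch below prints the slave names for a cluster of N nodes. It assumes that N counts the master too (so N = 5 gives 4 slaves) and that slaves are named hadoop-slave1, hadoop-slave2, ... as in the start-container.sh output above. The function name `gen_slaves` is hypothetical and the real resize-cluster.sh may differ.

```shell
# Hypothetical helper: print one slave hostname per line for an N-node cluster.
# Assumption: N includes the master, so there are N-1 slaves.
gen_slaves() {
  n="$1"
  i=1
  while [ "$i" -lt "$n" ]; do
    echo "hadoop-slave$i"
    i=$((i + 1))
  done
}

# Example: the list for a 5-node cluster (hadoop-slave1 .. hadoop-slave4).
gen_slaves 5
```

Redirecting this output into the slaves file before rebuilding the image would give the same kind of node list that the script generates.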

bash ./start-container.sh 5

- use the same parameter as in step 2

Do steps 5~6 as in section A.

bash ./start-container.sh <number_of_nodes>

Run docker network inspect on the network (e.g. hadoop) to find the IP the hadoop interfaces are published on. Access these interfaces with the following URLs:

- Namenode: http://<dockerhadoop_IP_address>:9870/dfshealth.html#tab-overview

- Datanode: http://<dockerhadoop_IP_address>:9864/

- Nodemanager: http://<dockerhadoop_IP_address>:8042/node

- Resource manager: http://<dockerhadoop_IP_address>:8088/
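The lookup above can be scripted. The following is a minimal sketch, assuming the network is named hadoop as created earlier; the function names `hadoop_container_ips` and `hadoop_ui_urls` are made up for illustration. `docker network inspect` reports addresses with a subnet suffix (for example 172.18.0.2/16), so the second function strips it before building the URLs.

```shell
# Hypothetical helper: list "<container name> <IPv4 address>" for each
# container attached to the given network (default: hadoop).
hadoop_container_ips() {
  docker network inspect \
    -f '{{range .Containers}}{{.Name}} {{.IPv4Address}}{{"\n"}}{{end}}' \
    "${1:-hadoop}"
}

# Hypothetical helper: print the four web UI URLs for one address.
# Accepts either "172.18.0.2" or "172.18.0.2/16".
hadoop_ui_urls() {
  ip="${1%/*}"   # drop the "/16"-style subnet suffix if present
  echo "Namenode: http://${ip}:9870/dfshealth.html#tab-overview"
  echo "Datanode: http://${ip}:9864/"
  echo "Nodemanager: http://${ip}:8042/node"
  echo "Resource manager: http://${ip}:8088/"
}
```

For example, run `hadoop_container_ips` to see the addresses, then pass one of them to `hadoop_ui_urls`. Not every interface is served by every container, so some of the printed URLs may not respond for a given address.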

- Theory and setup:

- Configs hadoop 3.2.4:

- Sources:

- https://github.com/big-data-europe/docker-hadoop (if you are using this repo, you may encounter "Namenode is in safe mode", solved here)
