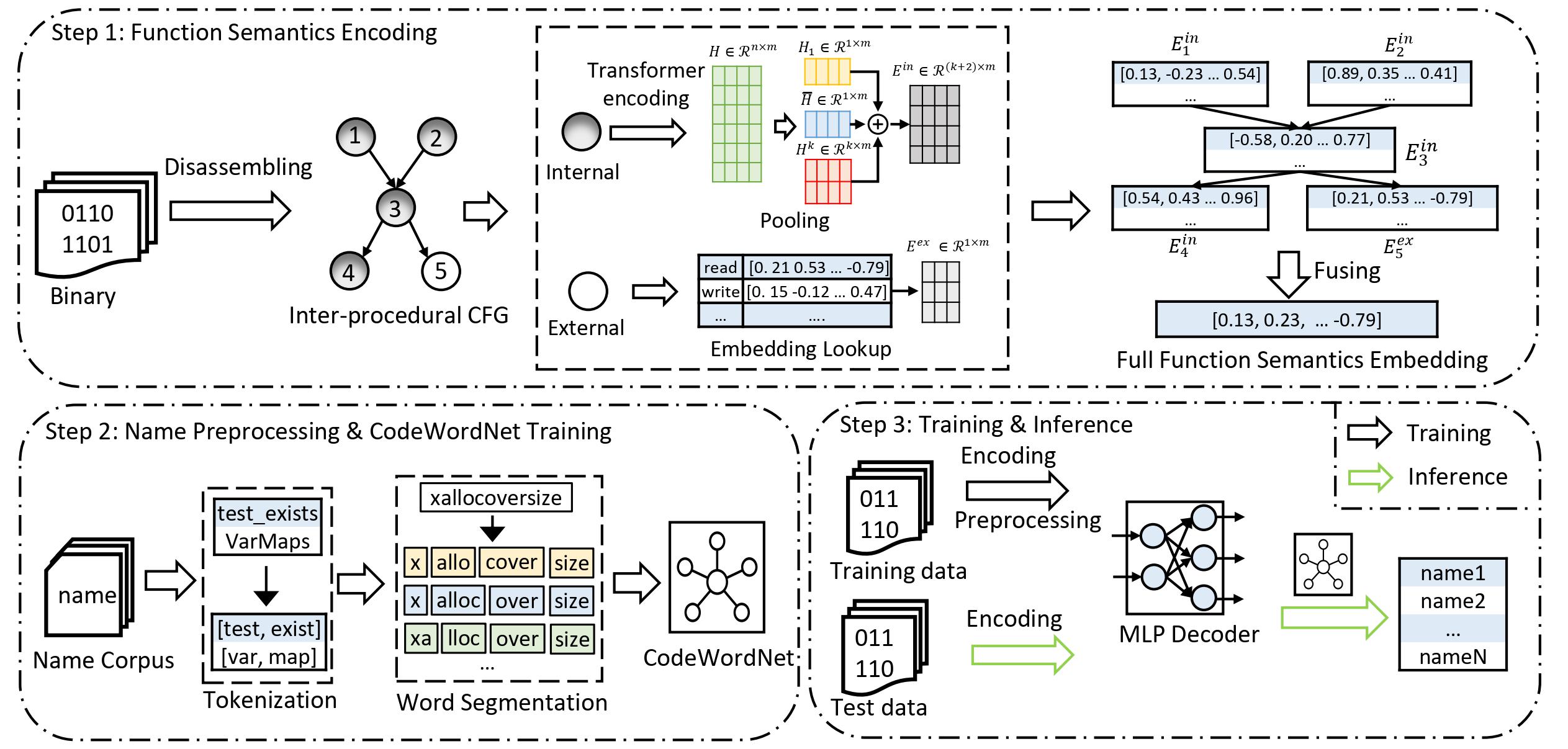

SymLM is a framework for predicting function names in stripped binaries through context-sensitive, execution-aware code embeddings. It uses a novel neural architecture that learns comprehensive function semantics by jointly modeling the execution behaviors of the calling context and the instructions via a fusing encoder. The workflow of SymLM is shown in the image above.

We implemented SymLM using Ghidra (for binary parsing and ICFG construction), the open-source microtrace-based pretrained model from Trex (for transformer encoding), NLTK and SentencePiece (for function name preprocessing), and Gensim (for CodeWordNet). We built the other components of SymLM with PyTorch and fairseq. For more details, please refer to our paper.
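SymLM predicts function names as sequences of words, and the preprocessing step relies on NLTK and SentencePiece. To give a rough idea of the very first step, turning a raw symbol into lowercase words, here is a minimal regex-based splitter. It is a simplified sketch, not SymLM's pipeline: it performs no NLTK-based normalization and no SentencePiece subword tokenization, and the name `split_function_name` is hypothetical.

```python
import re
from typing import List

# Matches acronyms (e.g. "HTTP" in "HTTPServer"), capitalized or lowercase
# words, remaining uppercase runs, and digit runs.
WORD = re.compile(r"[A-Z]+(?=[A-Z][a-z]|\d|\b)|[A-Z]?[a-z]+|[A-Z]+|\d+")


def split_function_name(name: str) -> List[str]:
    """Split a symbol such as 'getFileSize' or '__libc_start_main' into lowercase words.

    Simplified illustration only; not the NLTK/SentencePiece pipeline used by SymLM.
    """
    words: List[str] = []
    # First split on underscores and other non-word characters (snake_case, dots).
    for part in re.split(r"[_\W]+", name.strip("_")):
        # Then split camelCase / PascalCase boundaries and digit runs.
        words.extend(WORD.findall(part))
    return [w.lower() for w in words]


if __name__ == "__main__":
    for symbol in ("getFileSize", "__libc_start_main", "HTTPServer_init"):
        print(symbol, "->", split_function_name(symbol))
```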

To set up the environment, we suggest using conda to install all necessary packages. Conda installation instructions can be found here. The following setup assumes Conda is installed and running on a Linux system (though Windows should work too).

First, create the conda environment,

conda create -n symlm python=3.8 numpy scipy scikit-learn

and activate the conda environment:

conda activate symlm

Then, install the latest PyTorch. This assumes you have a GPU and CUDA installed; check the CUDA version with nvcc -V. Assuming you have CUDA 11.3 installed, you can install PyTorch with the following command:

conda install pytorch torchvision torchaudio cudatoolkit=11.3 -c pytorch -c nvidia

If the CUDA toolkit hasn't been installed in your environment, refer to the CUDA Toolkit Archive for installation instructions. The PyTorch installation commands for other CUDA versions can be found here.
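The conda command above hard-codes CUDA 11.3. As a convenience, a small helper can read the release number from the output of nvcc -V (which contains a line such as "Cuda compilation tools, release 11.3, V11.3.109") and build the corresponding command. This is only a sketch: it assumes the pytorch channel publishes a cudatoolkit build for your release, which is not the case for every CUDA version, so check the PyTorch installation page before running the printed command. The helper names are hypothetical.

```python
import re
import subprocess
from typing import Optional


def parse_cuda_release(nvcc_output: str) -> Optional[str]:
    """Extract the CUDA release (e.g. '11.3') from `nvcc -V` output, or None."""
    match = re.search(r"release (\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


def conda_pytorch_command(cuda_release: str) -> str:
    """Build the conda install command from this README for a given CUDA release."""
    return (
        "conda install pytorch torchvision torchaudio "
        f"cudatoolkit={cuda_release} -c pytorch -c nvidia"
    )


if __name__ == "__main__":
    try:
        out = subprocess.run(
            ["nvcc", "-V"], capture_output=True, text=True, check=True
        ).stdout
    except (FileNotFoundError, subprocess.CalledProcessError):
        print("nvcc not found; install the CUDA toolkit first.")
    else:
        release = parse_cuda_release(out)
        print(conda_pytorch_command(release) if release else "Could not parse nvcc output.")
```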

Next, clone the SymLM repository, enter it, and install it:

git clone git@github.com:OSUSecLab/SymLM.git

cd SymLM

pip install --editable .

Finally, install the remaining packages:

pip install -r requirements.txt

For efficient processing of large datasets, please install PyArrow:

pip install pyarrow
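After these steps, a quick sanity check is to confirm that the packages mentioned in this README can be found by Python. The module list below is an assumption based on the packages named here (scikit-learn is imported as sklearn), not the full contents of requirements.txt; apex, whose optional installation is described next, is listed as optional together with PyArrow. `importlib.util.find_spec` locates a module without importing it.

```python
import importlib.util
from typing import Dict, Iterable

# Assumed import names for packages mentioned in this README.
REQUIRED = ["numpy", "scipy", "sklearn", "torch", "nltk", "sentencepiece", "gensim"]
OPTIONAL = ["pyarrow", "apex"]


def check_modules(names: Iterable[str]) -> Dict[str, bool]:
    """Return {module_name: found?} without actually importing the modules."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for group, names in (("required", REQUIRED), ("optional", OPTIONAL)):
        for name, found in check_modules(names).items():
            print(f"[{group}] {name}: {'ok' if found else 'MISSING'}")
```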

For faster training, install NVIDIA's apex library:

git clone https://github.com/NVIDIA/apex

cd apex

pip install -v --no-cache-dir --global-option="--cpp_ext" --global-option="--cuda_ext" \
--global-option="--deprecated_fused_adam" --global-option="--xentropy" \
--global-option="--fast_multihead_attn" ./

We provide a sample x64 dataset under the dataset_generation/dataset_sample directory and its binarization result under the data_bin directory.

For more details on how these datasets are generated from binaries, please refer to the README under dataset_generation/.

The complete dataset, covering multiple architectures (i.e., x86, x64, ARM, and MIPS) and optimization levels (i.e., O0, O1, O2, and O3), can be found HERE.

To train the model, first download the pretrained model and put it under the checkpoints/pretrained directory. The pretrained model was obtained from Trex in December 2021; for the most recent versions, please refer to this repo. Because the Trex authors keep updating the pretrained model, there may be incompatibilities between our current implementation and the latest pretrained model.
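Before launching training, it can help to verify that a checkpoint is actually in place. The sketch below assumes the downloaded Trex model is a fairseq-style file ending in .pt; the exact filename is not given in this README, so the check only looks for any *.pt file, and the helper name is hypothetical.

```python
from pathlib import Path
from typing import List


def find_pretrained_checkpoints(directory: str = "checkpoints/pretrained") -> List[Path]:
    """List candidate checkpoint files (assumed '*.pt') under the pretrained-model directory."""
    path = Path(directory)
    if not path.is_dir():
        return []
    return sorted(path.glob("*.pt"))


if __name__ == "__main__":
    found = find_pretrained_checkpoints()
    if found:
        print("Found:", ", ".join(str(p) for p in found))
    else:
        print("No *.pt file under checkpoints/pretrained; download the pretrained model first.")
```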

The script for training the model is training_evaluation/train.sh, in which you have to set the following parameters:

CHECKPOINT_PATH=checkpoints/train # path to save checkpoints

TOTAL_UPDATES=20000 # Total number of training steps

WARMUP_UPDATES=100 # Warmup the learning rate over this many updates

PEAK_LR=1e-5 # Peak learning rate, adjust as needed

TOKENS_PER_SAMPLE=512 # Max sequence length

MAX_POSITIONS=512 # Num. positional embeddings (usually same as above)

MAX_SENTENCES=4 # Number of sequences per batch (batch size)

NUM_CLASSES=3069 # Vocabulary size of internal function name words, plus one for <unk> token (OOV words)

NUM_EXTERNAL=948 # Vocabulary size of external function names

NUM_CALLs=1 # Number of callers/internal callees/external callees per batch (batch size)

ENCODER_EMB_DIM=768 # Embedding dimension for encoder

ENCODER_LAYERS=8 # Number of encoder layers

ENCODER_ATTENTION_HEADS=12 # Number of attention heads for the encoder

TOTAL_EPOCHs=25 # Total number of training epochs

EXTERNAL_EMB="embedding" # External callee embedding methods, options: (one_hot, embedding)

DATASET_PATH="data_bin" # Path to the binarized dataset

Values of the above parameters have been set for the sample binarized dataset under the data_bin folder. Note that NUM_CLASSES and NUM_EXTERNAL are specific to the dataset. To get their values, run the script training_evaluation/get_vocabulary_size.py. For example, you can get the values for our sample dataset with the following command:

$ python training_evaluation/get_vocabulary_size.py --internal_vocabulary_path dataset_generation/vocabulary/label/dict.txt --external_vocabulary_path dataset_generation/vocabulary/external_label/dict.txt

NUM_CLASSES=3069

NUM_EXTERNAL=948

To predict function names for test binaries, run the training_evaluation/prediction.sh script, in which you need to set the following parameters:

CHECKPOINT_PATH=checkpoints/train # Directory containing the trained checkpoints

NUM_CLASSES=3069 # Vocabulary size of internal function name words, plus one for <unk> token (OOV words)

NUM_EXTERNAL=948 # Vocabulary size of external function names

NUM_CALLs=1 # Number of callers/internal callees/external callees per batch (batch size)

MAX_SENTENCEs=8 # Number of sequences per batch (batch size)

EXTERNAL_EMB="embedding" # External callee embedding methods, options: (one_hot, embedding)

DATASET_PATH="data_bin" # Path to the binarized dataset

RESULT_FILE="training_evaluation/prediction_evaluation/prediction_result.txt" # File to save the prediction results

EVALUATION_FILE="training_evaluation/prediction_evaluation/evaluation_input.txt" # File to save the evaluation input

Values of the above parameters have been set for the sample binarized dataset under the data_bin folder. The predicted names are saved in $RESULT_FILE. Note that the input for evaluation is also generated by the training_evaluation/prediction.sh script and stored in $EVALUATION_FILE, which contains the ground truth, the predictions, and the probability of each predicted function name word.
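Since train.sh and prediction.sh each define their own copies of several parameters, a mismatch (for example, a different NUM_EXTERNAL after regenerating the vocabulary) is easy to introduce. The sketch below parses simple KEY=VALUE lines from both scripts and reports disagreements. Which keys must agree is our assumption (the vocabulary sizes, embedding method, dataset, and checkpoint directory), and the parser only handles plain assignments like those shown above, not general shell syntax. The helper names are hypothetical.

```python
import re
from pathlib import Path
from typing import Dict

# Matches plain shell assignments such as:  NUM_CLASSES=3069 # comment
ASSIGNMENT = re.compile(r'^\s*([A-Za-z_][A-Za-z0-9_]*)=("[^"]*"|\S*)')

# Keys assumed to need identical values in training and prediction.
SHARED = ("NUM_CLASSES", "NUM_EXTERNAL", "EXTERNAL_EMB", "DATASET_PATH", "CHECKPOINT_PATH")


def parse_shell_vars(text: str) -> Dict[str, str]:
    """Collect KEY=VALUE assignments, dropping surrounding quotes and trailing comments."""
    values: Dict[str, str] = {}
    for line in text.splitlines():
        match = ASSIGNMENT.match(line)
        if match:
            values[match.group(1)] = match.group(2).strip('"')
    return values


def mismatches(train_sh: str, predict_sh: str) -> Dict[str, tuple]:
    """Return {key: (train value, prediction value)} for shared keys that differ."""
    train, predict = parse_shell_vars(train_sh), parse_shell_vars(predict_sh)
    return {k: (train.get(k), predict.get(k)) for k in SHARED if train.get(k) != predict.get(k)}


if __name__ == "__main__":
    train_path = Path("training_evaluation/train.sh")
    predict_path = Path("training_evaluation/prediction.sh")
    if train_path.is_file() and predict_path.is_file():
        print(mismatches(train_path.read_text(), predict_path.read_text()) or "Shared parameters match.")
```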

To evaluate the trained model in terms of precision, recall, and F1 score, run the training_evaluation/evaluation.py script. For example, for the prediction results generated for the sample binarized dataset under data_bin, run:

$ python training_evaluation/evaluation.py --evaluation-input training_evaluation/prediction_evaluation/evaluation_input.txt

Probability Threshold = 0.3, Precision: 0.4968238162277238, Recall: 0.7111646309994839, F1: 0.584978319536674

To address the noisy nature of natural language, we generate distributed representations of function name words and calculate their semantic distance to identify synonyms with the help of CodeWordNet. We provide the script CodeWordNet/train_models.py for training CodeWordNet models, and the trained models can be downloaded here.
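To make the metrics above concrete, here is a sketch of word-level evaluation that keeps predicted words whose probability reaches a threshold (0.3, as in the sample output) and treats a predicted word as correct if it equals a ground-truth word or is close enough to one in embedding space. This is an illustration under our own assumptions, not the logic of evaluation.py or CodeWordNet: the micro-averaging, the matching rule, the toy vectors, and the 0.8 similarity threshold are all hypothetical. With trained Gensim word vectors, `KeyedVectors.similarity(w1, w2)` returns the cosine similarity and could replace the `cosine` helper. As a consistency check, the reported F1 is the harmonic mean of the reported precision and recall.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]
# One function: (ground-truth words, [(predicted word, probability), ...])
Sample = Tuple[List[str], List[Tuple[str, float]]]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two word vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def is_match(pred: str, truth: str, vectors: Dict[str, Vector], sim_threshold: float) -> bool:
    """Exact match, or both words embedded and similar enough to count as synonyms."""
    if pred == truth:
        return True
    if pred in vectors and truth in vectors:
        return cosine(vectors[pred], vectors[truth]) >= sim_threshold
    return False


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def evaluate(samples: List[Sample], vectors: Dict[str, Vector],
             prob_threshold: float = 0.3, sim_threshold: float = 0.8) -> Tuple[float, float, float]:
    """Micro-averaged word-level precision, recall, and F1 over all functions."""
    correct_pred = total_pred = covered_truth = total_truth = 0
    for truth, predictions in samples:
        # Keep only predicted words whose probability reaches the threshold.
        kept = [word for word, prob in predictions if prob >= prob_threshold]
        total_pred += len(kept)
        total_truth += len(truth)
        # A kept prediction is correct if it matches any ground-truth word ...
        correct_pred += sum(any(is_match(w, t, vectors, sim_threshold) for t in truth) for w in kept)
        # ... and a ground-truth word is recovered if any kept prediction matches it.
        covered_truth += sum(any(is_match(w, t, vectors, sim_threshold) for w in kept) for t in truth)
    precision = correct_pred / total_pred if total_pred else 0.0
    recall = covered_truth / total_truth if total_truth else 0.0
    return precision, recall, f1_score(precision, recall)


if __name__ == "__main__":
    # Toy 2-D vectors, purely illustrative.
    toy_vectors = {"del": [1.0, 0.0], "remove": [0.9, 0.1], "size": [0.0, 1.0]}
    toy_samples = [(["remove", "file"], [("del", 0.9), ("size", 0.5), ("file", 0.1)])]
    print(evaluate(toy_samples, toy_vectors))
```

In the toy example, "del" counts as a synonym of "remove", "size" matches nothing, and "file" falls below the probability threshold, so precision, recall, and F1 are all 0.5.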

If you find SymLM useful, please consider citing our paper:

@inproceedings{jin2022symlm,

title={SymLM: Predicting Function Names in Stripped Binaries via Context-Sensitive Execution-Aware Code Embeddings},

author={Jin, Xin and Pei, Kexin and Won, Jun Yeon and Lin, Zhiqiang},

booktitle={Proceedings of the 2022 ACM SIGSAC Conference on Computer and Communications Security},

pages={1631--1645},

year={2022}

}

Here is a list of common problems you might encounter.