A music streaming startup, Sparkify, has grown their user base and song database even more and want to move their data warehouse to a data lake. Their data resides in S3, in a directory of JSON logs on user activity on the app, as well as a directory with JSON metadata on the songs in their app.

As their data engineer, you are tasked with building an ETL pipeline that extracts their data from S3, processes them using Spark, and loads the data back into S3 as a set of dimensional tables. This will allow their analytics team to continue finding insights in what songs their users are listening to.

You'll be able to test your database and ETL pipeline by running queries given to you by the analytics team from Sparkify and compare your results with their expected results.

In this project, we will build a data lake on AWS S3 and build an ETL pipeline for a data lake hosted on S3. The data is loaded from S3 and processed into analytics tables using Spark and the processed data is loaded back into S3 in the form of parquet files.

- python

- AWS

Songs dataset is a subset of Million Song Dataset. Each file in the dataset is in JSON format and contains meta-data about a song and the artist of that song. The dataset is hosted at S3 bucket s3://udacity-dend/song_data.

Sample Record :

{"num_songs": 1, "artist_id": "ARJIE2Y1187B994AB7", "artist_latitude": null, "artist_longitude": null, "artist_location": "", "artist_name": "Line Renaud", "song_id": "SOUPIRU12A6D4FA1E1", "title": "Der Kleine Dompfaff", "duration": 152.92036, "year": 0}

Logs dataset is generated by Event Simulator. These log files in JSON format simulate activity logs from a music streaming application based on specified configurations. The dataset is hosted at S3 bucket s3://udacity-dend/log_data.

Sample Record :

{"artist": null, "auth": "Logged In", "firstName": "Walter", "gender": "M", "itemInSession": 0, "lastName": "Frye", "length": null, "level": "free", "location": "San Francisco-Oakland-Hayward, CA", "method": "GET","page": "Home", "registration": 1540919166796.0, "sessionId": 38, "song": null, "status": 200, "ts": 1541105830796, "userAgent": "\"Mozilla\/5.0 (Macintosh; Intel Mac OS X 10_9_4) AppleWebKit\/537.36 (KHTML, like Gecko) Chrome\/36.0.1985.143 Safari\/537.36\"", "userId": "39"}

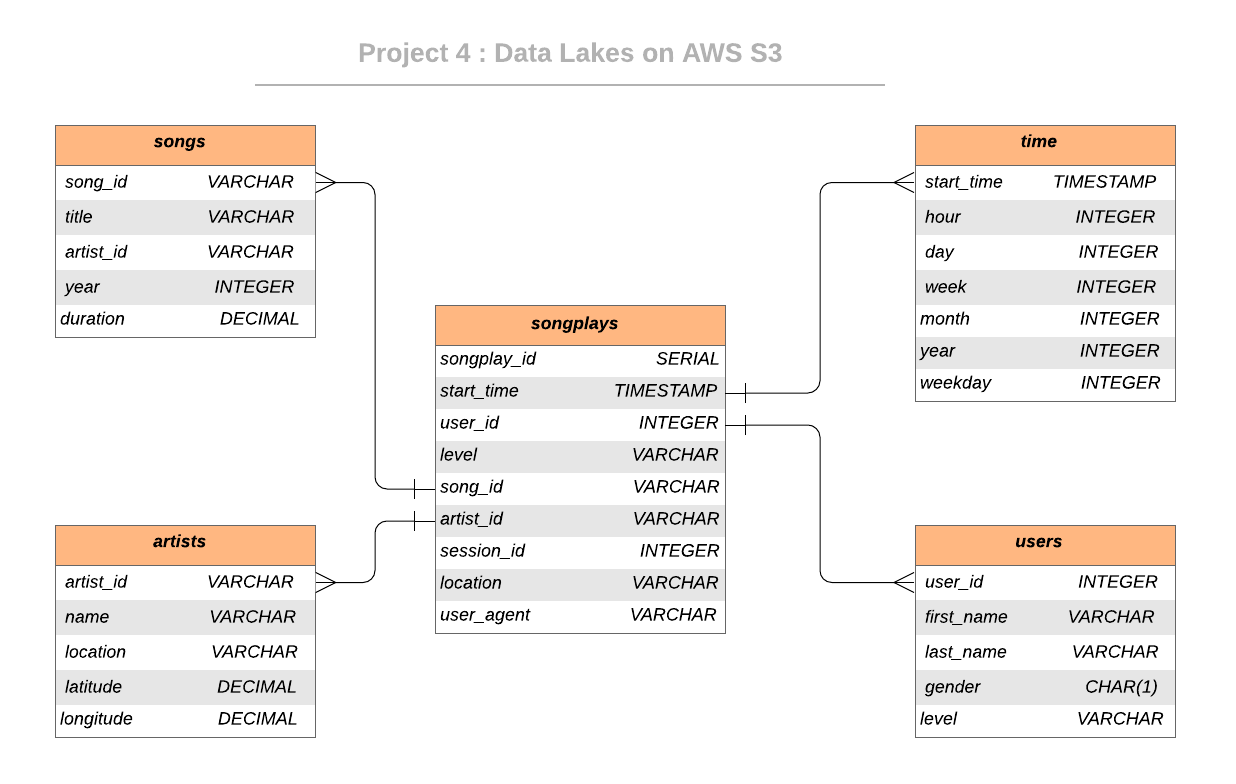

There's one fact table containing all the facts for all the events (user actions), and four dimensions tables, containing associated information such as user name, artist name, song meta-data among others.

The data stored on S3 buckets is extracted and processed using Spark, and is then inserted into the fact and dimensional tables. This tables are stored back to S3 in parquet files, organized for optimal performance. An entity relationship diagram (ERD) of the data model is given below.

Files in this repository:

| File / Folder | Description |

|---|---|

| data | Folder at the root of the project, where smaller version of data are stored |

| images | Folder at the root of the project, where images are stored |

| etl.py | Loads and processes the data from S3 and stores them back to S3 |

| dl.cfg | Sample configuration file for AWS |

| README | Readme file |

Clone the repository into a local machine using

git clone https://github.com/ObinnaIheanachor/Data-Lake-ProjectThese are prerequisites to run the program.

-

= python 3.7

- AWS account

- AWS EMR (preferred, but not necessary)

Follow these steps to replicate this project.

-

Edit the

dl.cfgconfiguration file and fill in the AWS Access Key and Secret Key fields -

Run ETL process by

python3 etl.py

This executes the commands to load data from S3, process the data using Spark and write the processed data back to S3 as parquet files.

-

The stored files can be explored in AWS S3.