WORK IN PROGRESS

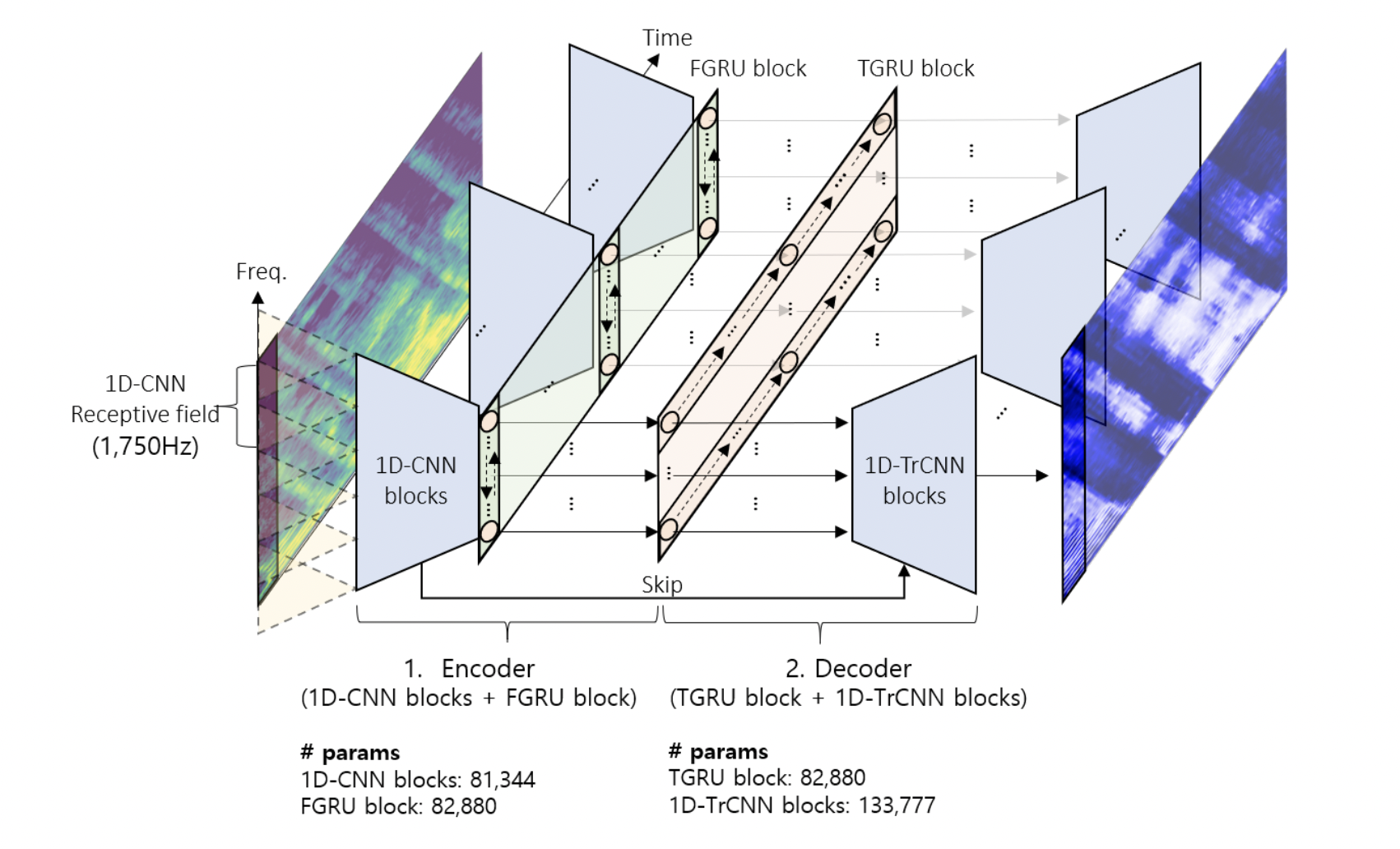

Unofficial PyTorch implementation of "Real-Time Denoising and Dereverberation with Tiny Recurrent U-Net". Tiny Recurrent U-Net (TRU-Net) is a lightweight model for online inference that matches the performance of state-of-the-art models as of 23 June 2021. The quantized version of TRU-Net is 362 kilobytes (~300k parameters), small enough to be deployed on edge devices. In addition, a new masking method called the phase-aware β-sigmoid mask lets this small model perform denoising and dereverberation simultaneously.

Create and activate a virtual environment, then install the dependencies:

```bash
pip install -r requirements.txt
```

- The code uses the Microsoft DNS Challenge 2020 dataset. The dataset, pre-processing code, and instructions for generating training data can be found at this link. We assume the dataset is stored under `./dns`. Before generating clean/noisy data pairs, change the following parameters in their `noisyspeech_synthesizer.cfg` file to match the paper's configuration:

```ini
total_hours: 300
snr_lower: -5
snr_upper: 25
total_snrlevels: 30
```
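Editing the file by hand is enough, but the change can also be scripted. The sketch below rewrites `key: value` lines in place and keeps comments and ordering intact (Python's `configparser` drops comments when it writes a file). It assumes the cfg stores these settings as plain `key: value` lines; the names `override_cfg` and `PAPER_SETTINGS` and the cfg path are illustrative and not part of this repository or the DNS scripts.

```python
import re
from pathlib import Path

# Values from the paper's setup, as listed in this README.
PAPER_SETTINGS = {"total_hours": 300, "snr_lower": -5, "snr_upper": 25, "total_snrlevels": 30}

# Matches "key: value" (or "key = value") lines; section headers and '#' comments never match.
_KEY_LINE = re.compile(r"^(\s*)([A-Za-z_]\w*)(\s*[:=]\s*)(.*?)(\r?\n?)$")


def override_cfg(text, overrides):
    """Return `text` with the values of the given keys replaced, keeping everything else.

    Only the first occurrence of each key is replaced.
    """
    remaining = dict(overrides)
    lines = text.splitlines(keepends=True)
    for i, line in enumerate(lines):
        m = _KEY_LINE.match(line)
        if m and m.group(2) in remaining:
            indent, key, sep, _old_value, eol = m.groups()
            lines[i] = f"{indent}{key}{sep}{remaining.pop(key)}{eol}"
    if remaining:
        raise KeyError(f"keys not found in cfg: {sorted(remaining)}")
    return "".join(lines)


if __name__ == "__main__":
    # Hypothetical location: adjust to wherever the DNS scripts and cfg live.
    cfg = Path("noisyspeech_synthesizer.cfg")
    if cfg.exists():
        cfg.write_text(override_cfg(cfg.read_text(), PAPER_SETTINGS))
```

Raising `KeyError` for keys that were never found makes a typo or a renamed setting fail loudly instead of silently leaving the default in place.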

Generate training data:

```bash
python noisyspeech_synthesizer_singleprocess.py
```
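As a rough illustration of what `snr_lower`, `snr_upper` and `total_snrlevels` control, the sketch below scales a noise clip so that the clean-to-noise power ratio equals a target SNR in dB. This is a generic NumPy sketch, not the DNS synthesizer's code, which may additionally normalize levels and handle clipping. The evenly spaced SNR grid in `SNR_LEVELS` is an assumption about how the levels are chosen.

```python
import numpy as np

# Assumption: 30 evenly spaced SNR levels between snr_lower and snr_upper (in dB).
SNR_LEVELS = np.linspace(-5, 25, 30)


def mix_at_snr(clean, noise, snr_db, eps=1e-12):
    """Scale `noise` so that 10*log10(P_clean / P_noise) == snr_db, then add it to `clean`."""
    clean = np.asarray(clean, dtype=np.float64)
    noise = np.asarray(noise, dtype=np.float64)
    if clean.shape != noise.shape:
        raise ValueError("clean and noise must have the same shape")
    clean_power = np.mean(clean ** 2)
    noise_power = np.mean(noise ** 2) + eps  # eps guards against silent noise clips
    # Solve clean_power / (gain**2 * noise_power) = 10**(snr_db / 10) for gain.
    gain = np.sqrt(clean_power / (noise_power * 10.0 ** (snr_db / 10.0)))
    scaled_noise = gain * noise
    return clean + scaled_noise, scaled_noise


def sample_snr(rng):
    """Draw one SNR level (dB) uniformly from the assumed grid."""
    return float(rng.choice(SNR_LEVELS))
```

Returning the scaled noise alongside the mixture mirrors the three-folder layout (clean, noisy, noise) that the generated dataset uses.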

We now assume the dataset folder has the following structure:

Training set:

```
.../dns/dataset/clean/fileid_{0..49999}.wav
.../dns/dataset/noisy/fileid_{0..49999}.wav
.../dns/dataset/noise/fileid_{0..49999}.wav
```
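Before training, it can help to check that every file id is present in all three folders. The helpers below (`index_by_fileid`, `match_triplets`, `scan_dataset`) are hypothetical and not part of this repository. Ids are parsed from the `fileid_N.wav` suffix, so names with an extra prefix also match; the default root `./dns/dataset` is an assumption based on the layout above.

```python
import re
from pathlib import Path

SPLITS = ("clean", "noisy", "noise")
FILEID = re.compile(r"fileid_(\d+)\.wav$")


def index_by_fileid(paths):
    """Map the integer N in '...fileid_N.wav' to its path; other files are ignored."""
    index = {}
    for p in map(Path, paths):
        m = FILEID.search(p.name)
        if m:
            index[int(m.group(1))] = p
    return index


def match_triplets(indexes):
    """Return (clean, noisy, noise) triplets that share a file id, plus ids missing from some split."""
    id_sets = [set(indexes[s]) for s in SPLITS]
    common = set.intersection(*id_sets)
    incomplete = set.union(*id_sets) - common
    triplets = [tuple(indexes[s][i] for s in SPLITS) for i in sorted(common)]
    return triplets, sorted(incomplete)


def scan_dataset(root="./dns/dataset"):
    """Index each split folder under `root` and pair files by id."""
    root = Path(root)
    return match_triplets({s: index_by_fileid((root / s).glob("*.wav")) for s in SPLITS})
```

For the layout above, `scan_dataset()` would be expected to return 50,000 triplets and an empty list of incomplete ids.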

The `tiny.json` file follows the paper's configuration and hyperparameters. To train with a different set of hyperparameters, create a new `.json` file in the `config` directory or modify the parameters in the existing file. We recommend leaving the network hyperparameters untouched if you intend to replicate the model size faithfully. To start training, run:
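One way to try other hyperparameters without editing `tiny.json` is to derive a new config from it with only selected training keys overridden. This is a minimal sketch; the key names used below (`network_config`, `train_config`, `learning_rate`) are placeholders, so check `tiny.json` for the real ones.

```python
import json
from pathlib import Path


def deep_update(base, overrides, protected=()):
    """Return a copy of `base` with `overrides` merged in recursively.

    Top-level keys listed in `protected` may not be overridden, e.g. to keep
    the network hyperparameters (and therefore the model size) unchanged.
    """
    clash = set(protected) & set(overrides)
    if clash:
        raise ValueError(f"refusing to override protected keys: {sorted(clash)}")
    merged = dict(base)  # shallow copy; nested dicts are rebuilt below, never mutated
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_update(merged[key], value)
        else:
            merged[key] = value
    return merged


def make_variant(src, dst, overrides, protected=()):
    """Write a new JSON config at `dst` derived from the config at `src`."""
    config = json.loads(Path(src).read_text())
    Path(dst).write_text(json.dumps(deep_update(config, overrides, protected), indent=4))
```

For example, `make_variant("config/tiny.json", "config/tiny_lr.json", {"train_config": {"learning_rate": 5e-4}}, protected=("network_config",))` would write a variant that can then be passed to the training script with `-c config/tiny_lr.json`.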

```bash
python3 distributed.py -c config/tiny.json
```

The model receives input of shape `(time steps, 4, frequency bins)`, where dimension 1 is a channel-wise concatenation of, in order, the log-magnitude spectrogram, the PCEN spectrogram, and the real and imaginary parts of the demodulated phase. To reduce GPU memory usage, our code reconstructs time-domain audio from these features to compute the multi-resolution STFT loss, instead of loading pairs of audio files onto the GPU.
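The NumPy sketch below builds a `(T, 4, F)` feature tensor in that channel order and shows why it is enough to recover the signal: the log-magnitude and demodulated-phase channels together determine the complex STFT, which an inverse STFT turns back into audio. Several details are assumptions rather than this repository's settings: the FFT size and hop (512 / 128), the Hann window, PCEN applied to the linear magnitude with common default parameters, and "demodulated phase" taken to mean the STFT phase minus the linear advance `2*pi*k*t*hop/n_fft` of frame `t` at bin `k`. All function names are hypothetical.

```python
import numpy as np


def stft(x, n_fft=512, hop=128):
    """Framed real FFT with a Hann window; returns shape (T, n_fft // 2 + 1)."""
    x = np.asarray(x, dtype=np.float64)
    n_frames = 1 + (len(x) - n_fft) // hop  # requires len(x) >= n_fft
    idx = np.arange(n_fft)[None, :] + hop * np.arange(n_frames)[:, None]
    return np.fft.rfft(x[idx] * np.hanning(n_fft), axis=-1)


def pcen(E, s=0.025, alpha=0.98, delta=2.0, r=0.5, eps=1e-6):
    """Per-channel energy normalization along time (axis 0) with a first-order IIR smoother."""
    M = np.empty_like(E)
    M[0] = E[0]  # assumption: smoother initialized with the first frame
    for t in range(1, E.shape[0]):
        M[t] = (1 - s) * M[t - 1] + s * E[t]
    return (E / (eps + M) ** alpha + delta) ** r - delta ** r


def phase_ramp(n_frames, n_bins, n_fft, hop):
    """Expected phase advance of bin k after t hops: 2*pi*k*t*hop/n_fft."""
    t = np.arange(n_frames)[:, None]
    k = np.arange(n_bins)[None, :]
    return 2 * np.pi * k * t * hop / n_fft


def trunet_features(x, n_fft=512, hop=128, eps=1e-8):
    """Return (features of shape (T, 4, F), complex STFT of shape (T, F))."""
    X = stft(x, n_fft, hop)
    mag = np.abs(X)
    psi = np.angle(X) - phase_ramp(*X.shape, n_fft, hop)  # demodulated phase
    channels = [np.log(mag + eps), pcen(mag), np.cos(psi), np.sin(psi)]
    return np.stack(channels, axis=1), X


def features_to_stft(feats, n_fft=512, hop=128, eps=1e-8):
    """Invert channels 0, 2 and 3 back to the complex STFT (PCEN is not needed)."""
    n_frames, _, n_bins = feats.shape
    mag = np.exp(feats[:, 0]) - eps
    psi = np.arctan2(feats[:, 3], feats[:, 2])
    return mag * np.exp(1j * (psi + phase_ramp(n_frames, n_bins, n_fft, hop)))
```

Removing the per-frame phase ramp makes the phase of a stationary component roughly constant over time, which is presumably easier for a network to model than the raw, rapidly wrapping STFT phase.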

TODO

TODO

To export the model in ONNX format, run the script below with the paths filled in:

```bash
python onnx.py -c 'PATH_TO_JSON_CONFIG' -i 'PATH_TO_TRAINED_MODEL_CKPTs' -o 'ONNX_EXPORT_PATH'
```