This project attempts to identify a pattern within President Trump's tweets where he deviates from current major events such as the COVID-19 pandemic and the presidential impeachment. We plan to compare the topics of Trump's tweets to major news events as well as Twitter trends at the time. Our aim is to analyze how the subjects he chooses to focus on deviate from current events. We will also create visualizations to demonstrate how frequently the content of his tweets deviates from the focus of mainstream media.

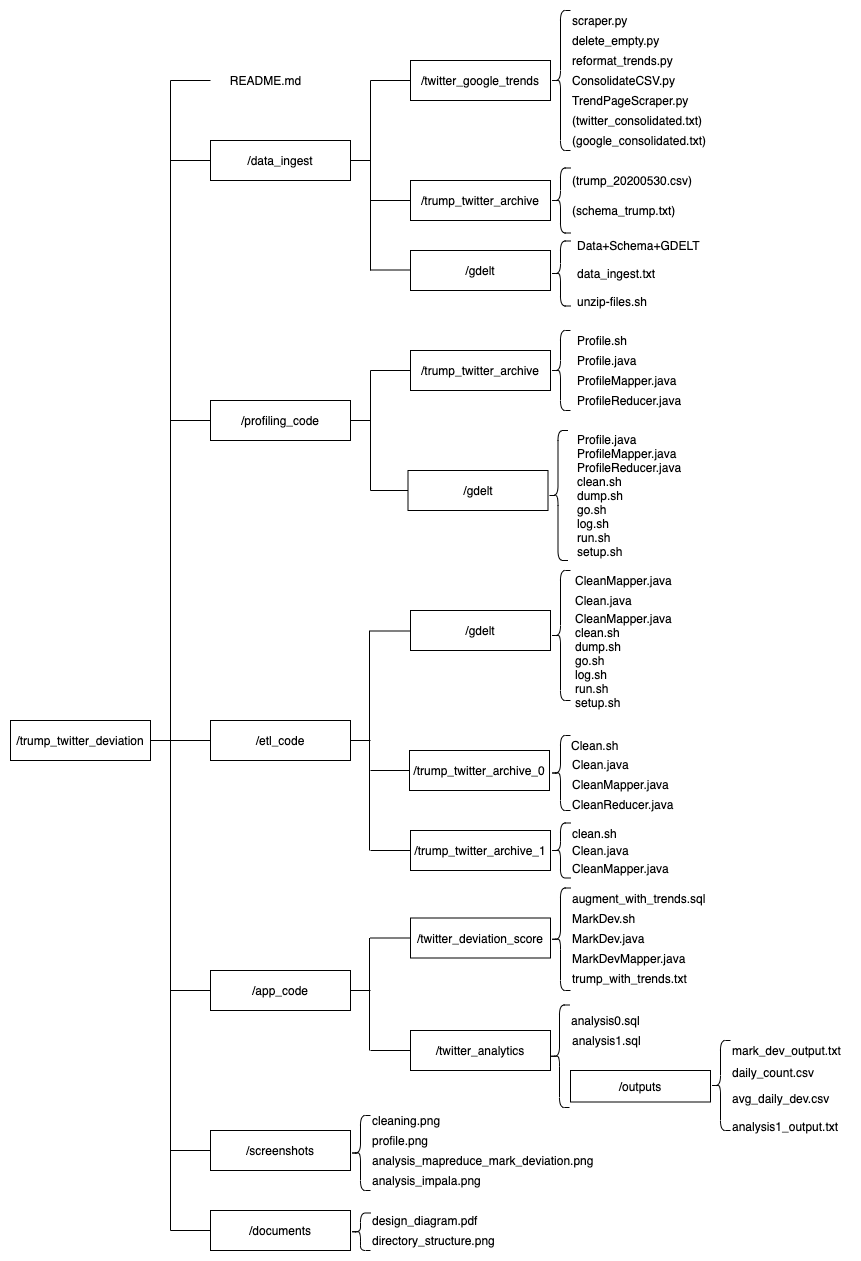

The GDELT dataset download steps are in `TrumpTwitterDeviation/data_ingest/gdelt/data_ingest.txt`.

In the same directory, `Data+Schema+GDELT` describes the schema, and `unzip-files.sh` is used to unzip the files into DUMBO.

The ETL code can be found at `TrumpTwitterDeviation/etl_code/gdelt/`. `go.sh` runs the other scripts in the directory, which set up the input/output directories and then run the ETL code to clean the GDELT dataset. Go through the `*.sh` files to make sure the input/output directories are correct for your setup, since they depend on how you set up your files in HDFS.

- `clean.sh`: cleans up the previous run's output directory
- `dump.sh`: prints (`cat`) one of the part files for this run
- `go.sh`: runs all the other `*.sh` files in this directory except `log.sh`
- `log.sh`: opens the log for the run
- `run.sh`: executes the ETL code
- `setup.sh`: sets up the output directory in HDFS for the current run

The profiling code can be found at `TrumpTwitterDeviation/profiling_code/gdelt/`. `go.sh` runs the other scripts in the directory, which set up the input/output directories and then run the profiling code on the GDELT dataset. Go through the `*.sh` files to make sure the input/output directories are correct for your setup, since they depend on how you set up your files in HDFS.

- `clean.sh`: cleans up the previous run's output directory
- `dump.sh`: prints (`cat`) one of the part files for this run
- `go.sh`: runs all the other `*.sh` files in this directory except `log.sh`
- `log.sh`: opens the log for the run
- `run.sh`: executes the profiling code
- `setup.sh`: sets up the output directory in HDFS for the current run

To crawl the Twitter and Google trends data:

1. Navigate to `/data_ingest/twitter_google_trends`.
2. Execute the following to crawl Twitter trends and Google trends (this may take a while):

   `python scraper.py`

   A new directory named `trends_data` should be created.
3. Execute the following to remove empty files in the crawled data:

   `python delete_empty.py ./trends_data/google`

   `python delete_empty.py ./trends_data/twitter`
4. Execute the following to consolidate the crawled data into one single file (one for Twitter and another for Google). Please make sure `trends_data` stays in the same directory as `reformat_trends.py`.

   `python reformat_trends.py`
5. The output files of step 4, `twitter_consolidated.txt` and `google_consolidated.txt`, are placed in the current directory. `twitter_consolidated.txt` is used as one of the data sources for this project. We refer to `twitter_consolidated.txt` as "TWITTER_TRENDS" in this document for convenience.
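
The cleanup and consolidation steps above can be pictured with the following minimal sketch. It is not the code in `delete_empty.py` or `reformat_trends.py`; the function names and the tab-separated output layout are hypothetical, chosen only to illustrate the idea of dropping zero-byte files and merging the rest into a single file.

```python
from pathlib import Path


def delete_empty_files(directory):
    """Delete zero-byte files directly inside `directory`; return their names."""
    removed = []
    for path in sorted(Path(directory).iterdir()):
        if path.is_file() and path.stat().st_size == 0:
            path.unlink()
            removed.append(path.name)
    return removed


def consolidate(directory, output_file):
    """Merge every remaining file into `output_file`; return the record count.

    Hypothetical layout: each output line is "<source file name>\t<line>".
    `output_file` should live outside `directory` so it is not re-read.
    """
    count = 0
    with open(output_file, "w", encoding="utf-8") as out:
        for path in sorted(Path(directory).iterdir()):
            if not path.is_file():
                continue
            for line in path.read_text(encoding="utf-8").splitlines():
                if line.strip():  # ignore blank lines inside a crawl file
                    out.write(f"{path.name}\t{line.strip()}\n")
                    count += 1
    return count
```

Keeping the source file name on every line preserves which crawl each trend came from, which matters if the files are named by date.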

The Trump Twitter Archive dataset is available for download in cleaned CSV format (`/data_ingest/trump_twitter_archive/trump_20200530.csv`). The file is small enough to be transferred with the `scp` command.

In the same directory, `schema_trump.txt` describes the dataset.

Please move this file to HDFS using the `hdfs dfs -put` command. We refer to this file as "TRUMP_COMPLETE" and the path to this file as "/path/to/TRUMP_COMPLETE" in this document for convenience.
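
If you prefer to script the upload, a minimal sketch along these lines may help. The helper names are hypothetical, and `/path/to/TRUMP_COMPLETE` is the placeholder used in this document, not a real location; the only external command involved is `hdfs dfs -put <local file> <destination>`.

```python
import subprocess


def hdfs_put_command(local_path, hdfs_destination):
    """Build the argument list for `hdfs dfs -put <local> <destination>`."""
    return ["hdfs", "dfs", "-put", local_path, hdfs_destination]


def upload(local_path, hdfs_destination):
    """Run the upload; raises CalledProcessError if the command fails."""
    subprocess.run(hdfs_put_command(local_path, hdfs_destination), check=True)


# Example (requires a configured Hadoop client on the machine):
# upload("trump_20200530.csv", "/path/to/TRUMP_COMPLETE")
```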

To profile the dataset:

1. Navigate to `/profiling_code/trump_twitter_archive/`. There should be 4 files in the directory: `Profile.java`, `Profile.sh`, `ProfileMapper.java`, and `ProfileReducer.java`.
2. Open `Profile.sh` in your favorite editor. Change the output directory of the `hdfs dfs -mkdir` command to the desired destination (line 10).
3. Change the destination of the `hdfs dfs -put` command to the desired location (line 11). Please also make sure the input path (the path to `trump_20200530.csv`) is correct.
4. Modify the input and output arguments in line 13 (`hadoop jar Profile.jar ...`) according to the previous steps.
5. Execute the bash script:

   `./Profile.sh`
6. The output should be in the output directory specified in step 4 (line 13).

Data Cleaning 0:

1. Navigate to `/etl_code/trump_twitter_archive_0/`. There should be 4 files in the directory: `Clean.java`, `Clean.sh`, `CleanMapper.java`, and `CleanReducer.java`.
2. Make the same modifications to `Clean.sh` as the ones made to `Profile.sh` in the previous section.
3. Execute the bash script:

   `./Clean.sh`
4. The output should be in the output directory specified in step 2 (line 13).
5. Note: This MapReduce program consolidates all the tweet data for a day into one line. It was later decided that the output of this cleaning process would not be used in the analytics.
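
The per-day consolidation described in the note can be illustrated with a small Python sketch of the map and reduce steps. This is not the Java code in `Clean.java`; the `(timestamp, text)` record shape and the timestamp format are assumptions made for the example (the real column layout is described in `schema_trump.txt`).

```python
from collections import defaultdict


def map_tweet(record):
    """Map step: turn one (timestamp, text) record into a (date, text) pair.

    Assumes a timestamp such as "2020-05-30 14:03:11".
    """
    timestamp, text = record
    return timestamp.split(" ")[0], text


def reduce_by_day(pairs):
    """Reduce step: join all tweet texts that share a date into one line."""
    grouped = defaultdict(list)
    for date, text in pairs:
        grouped[date].append(text)
    return [f"{date}\t{' '.join(texts)}" for date, texts in sorted(grouped.items())]


records = [
    ("2020-05-30 14:03:11", "first"),
    ("2020-05-29 09:00:00", "earlier"),
    ("2020-05-30 18:20:45", "second"),
]
lines = reduce_by_day(map_tweet(r) for r in records)
```

Here `lines` holds one entry per day, with both tweets from 2020-05-30 merged into a single line.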

Data Cleaning 1:

1. Navigate to `/etl_code/trump_twitter_archive_1/`. There should be 3 files in the directory: `Clean.java`, `Clean.sh`, and `CleanMapper.java`.
2. Follow the same procedure as in the previous section, "Data Cleaning 0", to execute this MapReduce job.
3. The output file has the format "tweet_id data keywords" and contains the keywords of each tweet.
4. We refer to the output of this job as "TRUMP_KEYWORDS" for convenience.

To compute the deviation marks:

1. Move "TWITTER_TRENDS" and "TRUMP_KEYWORDS" to HDFS using the `hdfs dfs -put` command. We refer to the paths to these files as "/path/to/TWITTER_TRENDS" and "/path/to/TRUMP_KEYWORDS" for convenience.
2. Navigate to `/app_code/twitter_deviation_score`. There should be a file named `augment_with_trends.sql`.
3. Change the path in line 5 to "/path/to/TRUMP_KEYWORDS", the path in line 13 to "/path/to/TWITTER_TRENDS", and the path in line 26 to "/path/to/TRUMP_COMPLETE".
4. Start the Impala shell with the command

   `impala-shell -B -o trump_with_trends.txt --output_delimiter=';'`

   so that the output of the query in line 24 is written to `trump_with_trends.txt`.
5. Move `trump_with_trends.txt` to HDFS using the `hdfs dfs -put` command.
6. Modify `MarkDev.sh` in this directory so that on line 9 the input file to the MapReduce job is `trump_with_trends.txt` and the job writes to the desired output location. We refer to the output as "mark_dev_output.txt".
7. "mark_dev_output.txt" has the format "tweet_id date deviate_mark". If a tweet is determined to deviate from Twitter trends, deviate_mark is set to 1. Otherwise it is set to 0.
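
To make the meaning of deviate_mark concrete, here is a minimal sketch of one possible marking rule. The actual rule lives in the MapReduce job run by `MarkDev.sh` and may differ; the rule below (a tweet deviates when none of its keywords matches any trend for that day, ignoring case and a leading `#`) is an assumption for illustration, and the function names are hypothetical.

```python
def normalize(term):
    """Lower-case a term and strip a leading '#' so hashtags match plain words."""
    return term.lower().lstrip("#")


def deviate_mark(tweet_keywords, day_trends):
    """Return 1 if the tweet shares no term with that day's trends, else 0.

    Assumed rule (not taken from the project code): exact match after
    normalization between any tweet keyword and any trend.
    """
    trends = {normalize(t) for t in day_trends}
    overlap = any(normalize(k) in trends for k in tweet_keywords)
    return 0 if overlap else 1
```

For example, `deviate_mark(["Impeachment", "senate"], ["#impeachment", "Super Bowl"])` gives 0 under this rule because "impeachment" matches a trend, while a tweet whose only keyword is "golf" on a day whose trends are all about COVID-19 gives 1.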

To run the analytics:

1. Navigate to `/app_code/twitter_analytics`.
2. Modify the input location in `analysis0` so that it uses the correct path to "mark_dev_output.txt" in line 6, and change `use zw1400;` to the correct database name.
3. Execute `analysis0` and `analysis1` using the commands

   `impala-shell -i compute-1-1 -f ./analysis0.sql -B -o analysis0.txt`

   `impala-shell -i compute-1-1 -f ./analysis1.sql -B -o analysis1.txt`
4. The table information is included either in comments in the `.sql` files or in the printed messages.
5. The results are included in `/app_code/twitter_analytics/outputs`.
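
As a rough illustration of the kind of summary that can be derived from "mark_dev_output.txt", the sketch below computes the share of deviating tweets per month. It does not reproduce the queries in `analysis0.sql` or `analysis1.sql`; it assumes whitespace-separated fields in the order "tweet_id date deviate_mark" and a date that starts with `YYYY-MM`, both of which should be checked against the real file.

```python
from collections import defaultdict


def monthly_deviation_rate(lines):
    """Return {"YYYY-MM": fraction of tweets with deviate_mark == 1}."""
    totals = defaultdict(int)
    deviating = defaultdict(int)
    for line in lines:
        fields = line.split()
        if len(fields) != 3:
            continue  # skip blank or malformed lines
        _tweet_id, date, mark = fields
        month = date[:7]  # assumes the date begins with YYYY-MM
        totals[month] += 1
        deviating[month] += int(mark)
    return {month: deviating[month] / totals[month] for month in sorted(totals)}


sample = ["1 2020-03-01 1", "2 2020-03-02 0", "3 2020-04-01 1", ""]
rates = monthly_deviation_rate(sample)
```

With the sample lines above, half of the March tweets and all of the April tweets are marked as deviating.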