This project has two parts. In the first part, you will run a regression, identify the 10% of points with the largest residual errors, remove those outliers from the dataset, and refit the regression, following the strategy Sebastian suggested in the lesson videos.

In the second part, you will get acquainted with some of the outliers in the Enron finance data and learn whether and how to remove them.

Sebastian described an algorithm for improving a regression, which you will implement in this project and work through in the next few quizzes. In summary:

1. Fit the regression on all training points.
2. Discard the 10% of points that have the largest errors between the actual y values and the regression-predicted y values.
3. Refit on the remaining points.
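The three steps can be sketched in plain Python as below. This is a minimal illustration, assuming a single feature and an ordinary least-squares line; `fit_line` and `fit_trim_refit` are hypothetical helper names, not part of the project's starter code, which uses sklearn's `LinearRegression` instead.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def fit_trim_refit(xs, ys, drop_fraction=0.1):
    """Fit, drop the points with the largest absolute residuals, then refit."""
    slope, intercept = fit_line(xs, ys)
    residuals = [abs(y - (slope * x + intercept)) for x, y in zip(xs, ys)]
    # indices ordered from smallest to largest residual
    order = sorted(range(len(xs)), key=lambda i: residuals[i])
    n_keep = len(xs) - int(len(xs) * drop_fraction)
    keep = order[:n_keep]
    return fit_line([xs[i] for i in keep], [ys[i] for i in keep])
```

For example, with 50 points on the line y = 6.25x and 5 of them pushed far upward, the first fit's slope is pulled well above 6.25, while `fit_trim_refit` drops exactly those 5 points and recovers a slope of 6.25.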

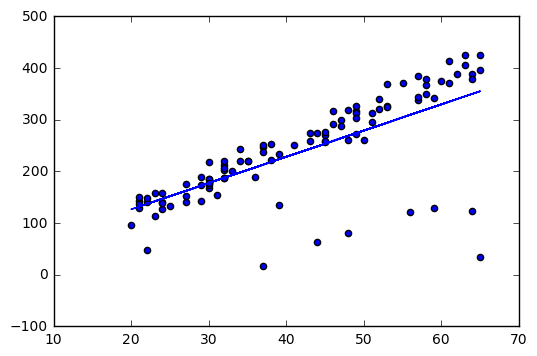

Start by running the starter code (outliers/outlier_removal_regression.py) and visualizing the points. A few outliers should clearly pop out. Fit a linear regression in which net worth is the target and the feature used to predict it is a person’s age (remember to train on the training data!).

The “correct” slope for the main body of data points is 6.25 (we know this because we used this value to generate the data); what slope does your regression have?
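For a single feature, ordinary least squares (the method behind `LinearRegression`) has the standard closed-form slope and intercept below, written here with $x_i$ for the training ages and $y_i$ for the training net worths. Because the fit minimizes squared residuals, a few points far from the main body of data can move $\hat{\beta}_1$ noticeably away from the 6.25 used to generate the data.

$$
\hat{\beta}_1 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2},
\qquad
\hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x}
$$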

(Download all the files as a zip archive from GitHub. Don't use “Save link as”, because that saves the files as HTML pages, which the code cannot read.)

```python
#!/usr/bin/python

import pickle

import matplotlib.pyplot as plt
import numpy
from sklearn import linear_model
# sklearn.cross_validation was removed in newer scikit-learn versions
from sklearn.model_selection import train_test_split

from outlier_cleaner import outlierCleaner

### load up some practice data with outliers in it
ages = pickle.load(open("practice_outliers_ages.pkl", "rb"))
net_worths = pickle.load(open("practice_outliers_net_worths.pkl", "rb"))

### ages and net_worths need to be reshaped into 2D numpy arrays
### second argument of reshape command is a tuple of integers: (n_rows, n_columns)
### by convention, n_rows is the number of data points
### and n_columns is the number of features
ages = numpy.reshape(numpy.array(ages), (len(ages), 1))
net_worths = numpy.reshape(numpy.array(net_worths), (len(net_worths), 1))

ages_train, ages_test, net_worths_train, net_worths_test = train_test_split(
    ages, net_worths, test_size=0.1, random_state=42)

### fill in a regression here! Name the regression object reg so that
### the plotting code below works, and you can see what your regression looks like
reg = linear_model.LinearRegression()
reg.fit(ages_train, net_worths_train)
```

```python
>>> reg.coef_
array([[ 5.07793064]])
```

The fitted slope is about 5.08, well below the “correct” value of 6.25.

What is the score you get when using your regression to make predictions with the test data?

```python
>>> reg.score(ages_test, net_worths_test)
0.87826247883513975
```
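For a regressor, `score()` returns the coefficient of determination, R² = 1 - SS_res / SS_tot. A minimal plain-Python sketch of that computation, assuming flat lists of actual and predicted values (`r_squared` is a hypothetical helper, not an sklearn function):

```python
def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_actual = sum(actual) / len(actual)
    # residual sum of squares: how far predictions are from the truth
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    # total sum of squares: how far the truth is from its own mean
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot
```

A score of 1.0 is a perfect fit, 0.0 is no better than always predicting the mean, and the score can go negative when predictions are worse than that, which can happen on test data.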

In outliers/outlier_cleaner.py, you will find the skeleton for a function called outlierCleaner() that you will fill in with a cleaning algorithm. It takes three arguments: predictions is a list of predicted targets that come from your regression, ages is the list of ages in the training set, and net_worths is the actual value of the net worths in the training set. There should be 90 elements in each of these lists (because the training set has 90 points in it). Your job is to return a list called cleaned_data that has only 81 elements in it, which are the 81 training points where the predictions and the actual values (net_worths) have the smallest errors (90 * 0.9 = 81). The format of cleaned_data should be a list of tuples, where each tuple has the form (age, net_worth, error).
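Instead of sorting the whole list, the same contract (keep the 90% of points with the smallest errors, as `(age, net_worth, error)` tuples) can also be met with the standard library's `heapq.nsmallest`. This sketch assumes flat sequences of numbers; the starter code actually passes 90 x 1 numpy arrays, which you would flatten first, e.g. with `.ravel()`. `clean_outliers` is a hypothetical name, distinct from the project's `outlierCleaner()`.

```python
import heapq


def clean_outliers(predictions, ages, net_worths, keep_fraction=0.9):
    """Return (age, net_worth, error) tuples for the points with the smallest errors."""
    triples = [
        (age, net_worth, abs(net_worth - prediction))
        for prediction, age, net_worth in zip(predictions, ages, net_worths)
    ]
    n_keep = int(round(len(triples) * keep_fraction))
    # nsmallest returns the n_keep tuples with the smallest error (index 2),
    # ordered from smallest to largest error
    return heapq.nsmallest(n_keep, triples, key=lambda t: t[2])
```

With 90 input points this keeps 81 tuples, matching the 90 * 0.9 = 81 count above.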

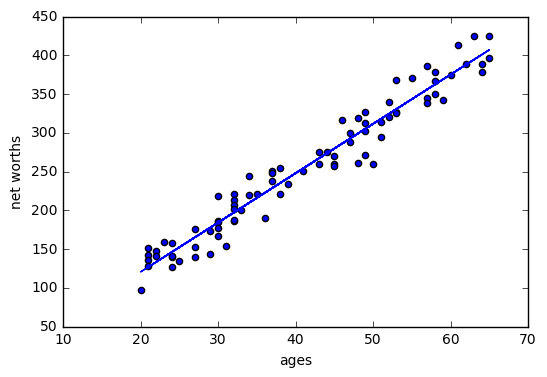

Once this cleaning function is working, the regression result should change. What is the new slope? Is it closer to the “correct” result of 6.25?

The function goes in outliers/outlier_cleaner.py:

```python
from operator import itemgetter


def outlierCleaner(predictions, ages, net_worths):
    """
        Clean away the 10% of points that have the largest
        residual errors (difference between the prediction
        and the actual net worth).

        Return a list of tuples named cleaned_data where
        each tuple is of the form (age, net_worth, error).
    """
    tuple_list = []
    for i in range(len(ages)):
        # the inputs are n x 1 arrays, so take the single element out of each row
        age = ages[i][0]
        net_worth = net_worths[i][0]
        error = abs(net_worth - predictions[i][0])
        tuple_list.append((age, net_worth, error))

    # sort by error, largest first, then drop the first 10% of the list
    tuple_list.sort(key=itemgetter(2), reverse=True)
    cleaned_data = tuple_list[len(ages) // 10:]

    return cleaned_data
```

The rest of outlier_removal_regression.py plots the first fit, calls outlierCleaner() on the training predictions, and refits on the cleaned points:

```python
try:
    plt.plot(ages, reg.predict(ages), color="blue")
except NameError:
    pass
plt.scatter(ages, net_worths)
plt.show()

### identify and remove the most outlier-y points
cleaned_data = []
try:
    predictions = reg.predict(ages_train)
    cleaned_data = outlierCleaner(predictions, ages_train, net_worths_train)
except NameError:
    print("your regression object doesn't exist, or isn't named reg")
    print("can't make predictions to use in identifying outliers")

### only run this code if cleaned_data is returning data
if len(cleaned_data) > 0:
    ages, net_worths, errors = zip(*cleaned_data)
    ages = numpy.reshape(numpy.array(ages), (len(ages), 1))
    net_worths = numpy.reshape(numpy.array(net_worths), (len(net_worths), 1))

    ### refit your cleaned data!
    try:
        reg.fit(ages, net_worths)
        plt.plot(ages, reg.predict(ages), color="blue")
    except NameError:
        print("you don't seem to have regression imported/created,")
        print("   or else your regression object isn't named reg")
        print("   either way, only draw the scatter plot of the cleaned data")
    plt.scatter(ages, net_worths)
    plt.xlabel("ages")
    plt.ylabel("net worths")
    plt.show()
else:
    print("outlierCleaner() is returning an empty list, no refitting to be done")
```

```python
>>> reg.coef_
array([[ 6.36859481]])
```

The new slope, about 6.37, is much closer to the “correct” value of 6.25.

What’s the new score when you use the regression to make predictions on the test set?

```python
>>> reg.score(ages_test, net_worths_test)
0.98318945568560678
```

The test score rises from about 0.878 to about 0.983.

### draw the scatterplot, with color-coded training and testing points

import matplotlib.pyplot as plt

for feature, target in zip(feature_test, target_test): # zip function takes iterables

plt.scatter( feature, target, color= 'r' )

for feature, target in zip(feature_train, target_train):

plt.scatter( feature, target, color= 'b' )

### labels for the legend

plt.scatter(feature_test[0], target_test[0], color= 'r', label="test")

plt.scatter(feature_test[0], target_test[0], color= 'b', label="train")

### draw the regression line, once it's coded

try:

plt.plot( feature_test, reg.predict(feature_test), color = 'black' )

except NameError:

pass

plt.xlabel(features_list[0])

plt.ylabel(features_list[1])

plt.legend()

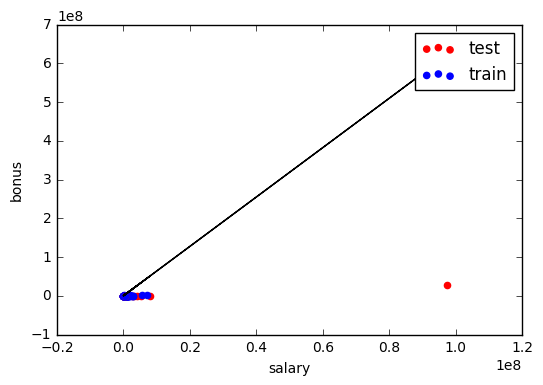

plt.show()In the mini-project for the regressions lesson, you used a regression to predict the bonuses for Enron employees. As you saw, even a single outlier can make a big difference on the regression result. There was something we didn’t tell you, though, which was that the dataset we had you use in that project had already been cleaned of some significant outliers. Identifying and cleaning away outliers is something you should always think about when looking at a dataset for the first time, and now you’ll get some hands-on experience with the Enron data.

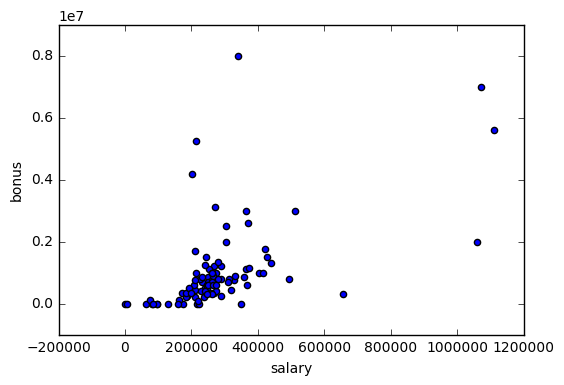

You can find the starter code in outliers/enron_outliers.py, which reads in the data (in dictionary form) and converts it into a sklearn-ready numpy array. Since there are two features being extracted from the dictionary (“salary” and “bonus”), the resulting numpy array will be of dimension N x 2, where N is the number of data points and 2 is the number of features. This is perfect input for a scatterplot; we’ll use the matplotlib.pyplot module to make that plot. (We’ve been using pyplot for all the visualizations in this course.) Add these lines to the bottom of the script to make your scatterplot:

```python
for point in data:
    salary = point[0]
    bonus = point[1]
    matplotlib.pyplot.scatter(salary, bonus)

matplotlib.pyplot.xlabel("salary")
matplotlib.pyplot.ylabel("bonus")
matplotlib.pyplot.show()
```
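`featureFormat()` lives in the course's tools/feature_format.py and has its own options for handling missing values and zeros. The sketch below is not that function; it is a hypothetical, simplified stand-in (`dict_to_rows`) that shows the basic idea of turning the person-keyed dictionary into an N x 2 list of `[salary, bonus]` rows, assuming missing values are stored as the string `'NaN'` (as in this dataset) and simply skipping any person with a missing value.

```python
def dict_to_rows(data_dict, features):
    """Hypothetical simplified stand-in for featureFormat()."""
    rows = []
    for name in sorted(data_dict):
        values = [data_dict[name][feature] for feature in features]
        # skip people with any missing value ('NaN' strings in this dataset)
        if "NaN" in values:
            continue
        rows.append([float(v) for v in values])
    return rows
```

Each row holds one person's values in the order given by `features`, so `row[0]` is the salary and `row[1]` the bonus, just like `point[0]` and `point[1]` in the plotting loop.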

```python
#!/usr/bin/python

import pickle
import sys

import matplotlib.pyplot

sys.path.append("../tools/")
from feature_format import featureFormat, targetFeatureSplit

### read in data dictionary, convert to numpy array
data_dict = pickle.load(open("../final_project/final_project_dataset.pkl", "rb"))
features = ["salary", "bonus"]
data = featureFormat(data_dict, features, remove_any_zeroes=True)

### your code below
for point in data:
    salary = point[0]
    bonus = point[1]
    matplotlib.pyplot.scatter(salary, bonus)

matplotlib.pyplot.xlabel("salary")
matplotlib.pyplot.ylabel("bonus")
matplotlib.pyplot.show()
```

As you can see, visualization is one of the most powerful tools for finding outliers!

There’s one outlier that should pop out to you immediately. Now the question is to identify its source. We found the original data source to be very helpful for this identification; you can find that PDF in final_project/enron61702insiderpay.pdf. What’s the name of the dictionary key of this data point? (e.g. if this is Ken Lay, the answer would be “LAY KENNETH L”).

From the PDF, this outlier is the “TOTAL” row of the spreadsheet, so its dictionary key is 'TOTAL'.
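Besides reading the PDF, you can ask the dictionary directly which key holds the extreme value. A minimal sketch, assuming `data_dict` has the structure used in this project (person name mapped to a feature dictionary, with `'NaN'` strings for missing values); `largest_by` is a hypothetical helper:

```python
def largest_by(data_dict, feature):
    """Return the key whose `feature` value is largest, ignoring 'NaN' entries."""
    candidates = [key for key in data_dict if data_dict[key][feature] != "NaN"]
    return max(candidates, key=lambda key: data_dict[key][feature])
```

Calling `largest_by(data_dict, "salary")` before removing anything points you at the key of the highest-salary entry, which you can then cross-check against the PDF.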

Does this outlier seem like a data point that we should include when running machine learning on this dataset? Or should we remove it?

We should remove it: it is a spreadsheet summary row, not a person.

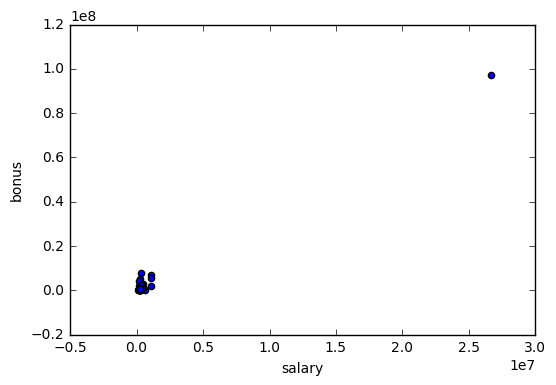

A quick way to remove a key-value pair from a dictionary is `dictionary.pop(key, 0)`. Write a line like this (you’ll have to modify the dictionary and key names, of course) and remove the outlier before calling featureFormat(). Then rerun the code so your scatterplot no longer shows this outlier. Are all the outliers gone?
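`dict.pop(key, default)` removes the key and returns its value; the second argument is returned instead of raising `KeyError` when the key is absent, so the line is safe to run more than once. A small demonstration on a toy dictionary (made-up values, not the Enron data):

```python
records = {"TOTAL": {"salary": 1000}, "PERSON A": {"salary": 10}}

removed = records.pop("TOTAL", 0)  # removes the entry and returns its value
again = records.pop("TOTAL", 0)    # the key is gone now, so the default 0 comes back
```

After these two lines, `removed` is `{"salary": 1000}`, `again` is `0`, and only `"PERSON A"` remains in `records`.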

```python
>>> data_dict['TOTAL']
{'bonus': 97343619,
 'deferral_payments': 32083396,
 'deferred_income': -27992891,
 'director_fees': 1398517,
 'email_address': 'NaN',
 'exercised_stock_options': 311764000,
 'expenses': 5235198,
 'from_messages': 'NaN',
 'from_poi_to_this_person': 'NaN',
 'from_this_person_to_poi': 'NaN',
 'loan_advances': 83925000,
 'long_term_incentive': 48521928,
 'other': 42667589,
 'poi': False,
 'restricted_stock': 130322299,
 'restricted_stock_deferred': -7576788,
 'salary': 26704229,
 'shared_receipt_with_poi': 'NaN',
 'to_messages': 'NaN',
 'total_payments': 309886585,
 'total_stock_value': 434509511}
>>> data_dict.pop('TOTAL', 0)  # removes the key; the REPL echoes the same record shown above
>>> len(data_dict)
145
```

```python
# re-run the code after removing the outlier 'TOTAL'
# (the pop must happen before featureFormat() is called)
data_dict.pop("TOTAL", 0)

features = ["salary", "bonus"]
data = featureFormat(data_dict, features)

for point in data:
    salary = point[0]
    bonus = point[1]
    matplotlib.pyplot.scatter(salary, bonus)

matplotlib.pyplot.xlabel("salary")
matplotlib.pyplot.ylabel("bonus")
matplotlib.pyplot.show()
```

There are still some outliers. We see four more, although none of them is nearly as far out as the last example (the “TOTAL” line).

We would argue that there are four more outliers to investigate; let's look at a couple of them. Two people made bonuses of at least 5 million dollars and salaries of over 1 million dollars; in other words, they made out like bandits. What are the names associated with those points?

```python
names = []
for name in data_dict:
    bonus = data_dict[name]["bonus"]
    salary = data_dict[name]["salary"]
    # be careful with missing values: data_dict still holds 'NaN' strings,
    # so rule those out before comparing against numbers
    if bonus != "NaN" and salary != "NaN" and bonus > 5000000 and salary > 1000000:
        names.append(name)

print(names)
# ['LAY KENNETH L', 'SKILLING JEFFREY K']
```

Would you guess that these are typos or weird spreadsheet lines that we should remove, or that there’s a meaningful reason why these points are different? (In other words, should they be removed before we, say, try to build a POI identifier?)

No. They should not be removed, because POIs may have financial feature values that are very different from everyone else's.

They're two of Enron's biggest bosses, and definitely people of interest.