This repository contains the data and benchmark code of the following paper:

EgoExoLearn: A Dataset for Bridging Asynchronous Ego- and Exo-centric View of Procedural Activities in Real World

Yifei Huang, Guo Chen, Jilan Xu, Mingfang Zhang, Lijin Yang, Baoqi Pei, Hongjie Zhang, Lu Dong, Yali Wang, Limin Wang, Yu Qiao

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024

Presented by OpenGVLab in Shanghai AI Lab

- [2024/08] We have also released the test set annotations. Please do not use them for training.
- [2024/08] The original-size videos can now be found at the huggingface repo.
- [2024/05] Our paper with supplementary material can be found here.
- [2024/03] Part of the EgoExoLearn raw annotations released.
- [2024/03] EgoExoLearn paper released.
- [2024/03] Annotation and code for the cross-view association and cross-view referenced skill assessment benchmarks are released.
- [2024/03] EgoExoLearn code and data initially released.

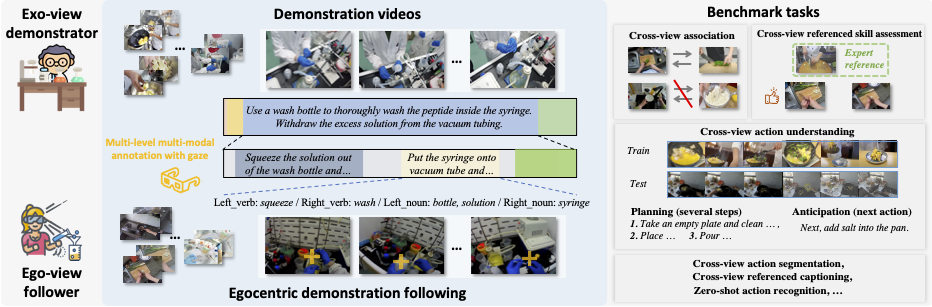

We propose EgoExoLearn, a dataset that emulates the human demonstration-following process, in which individuals record egocentric videos as they execute tasks guided by exocentric-view demonstration videos. Focusing on potential applications in daily assistance and professional support, EgoExoLearn contains 120 hours of egocentric and demonstration video data captured in daily life scenarios and specialized laboratories. Along with the videos, we record high-quality gaze data and provide detailed multimodal annotations, forming a playground for modeling the human ability to bridge asynchronous procedural actions from different viewpoints.

Please visit each subfolder for code and annotations. More updates coming soon.

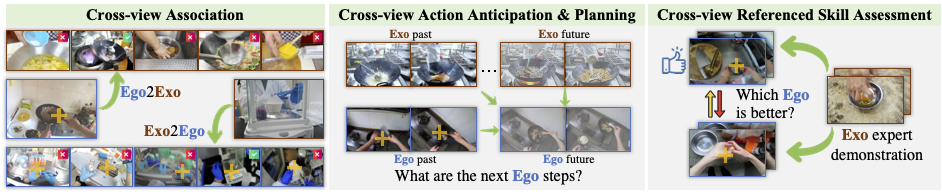

We design benchmarks for 1) cross-view association, 2) cross-view action understanding (action segmentation, action anticipation, action planning), 3) cross-view referenced skill assessment, and 4) cross-view referenced video captioning. Each benchmark is carefully defined, annotated, and supported by baseline implementations. We also take a first step toward exploring the role of gaze in these tasks. We hope our dataset can serve as a resource for future work on bridging asynchronous procedural actions across ego- and exo-centric perspectives, thereby inspiring the design of AI agents adept at learning from real-world human demonstrations and mapping the procedural actions into robot-centric views.

CLIP features of gaze cropped videos

I3D RGB features of gaze cropped videos

Code: tm1g

(fullsize videos only)

If you find our repo useful for your research, please consider citing our paper:

@InProceedings{huang2024egoexolearn,

title={EgoExoLearn: A Dataset for Bridging Asynchronous Ego- and Exo-centric View of Procedural Activities in Real World},

author={Huang, Yifei and Chen, Guo and Xu, Jilan and Zhang, Mingfang and Yang, Lijin and Pei, Baoqi and Zhang, Hongjie and Lu, Dong and Wang, Yali and Wang, Limin and Qiao, Yu},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

year={2024}

}

Led by Shanghai AI Laboratory, Nanjing University and Shenzhen Institute of Advanced Technology, this project is jointly accomplished by talented researchers from multiple institutes including The University of Tokyo, Fudan University, Zhejiang University, and University of Science and Technology of China.

📬 Primary contact: Yifei Huang ( hyf at iis.u-tokyo.ac.jp )