Ghost in the Minecraft: Generally Capable Agents for Open-World Environments via Large Language Models with Text-based Knowledge and Memory

Minecraft, the world's best-selling game, has sold over 238 million copies and has had more than 140 million peak monthly active users. Within the game, hundreds of millions of players have experienced a digital second life by surviving, exploring, and creating in a world that closely resembles the human world in many respects. Minecraft thus acts as a microcosm of the real world. Developing an automated agent that can master all technical challenges in Minecraft is akin to creating an artificial intelligence capable of autonomously learning and mastering the full range of real-world technology.

Ghost in the Minecraft (GITM) is a novel framework that integrates Large Language Models (LLMs) with text-based knowledge and memory, aiming to create Generally Capable Agents in Minecraft. GITM features the following characteristics:

-

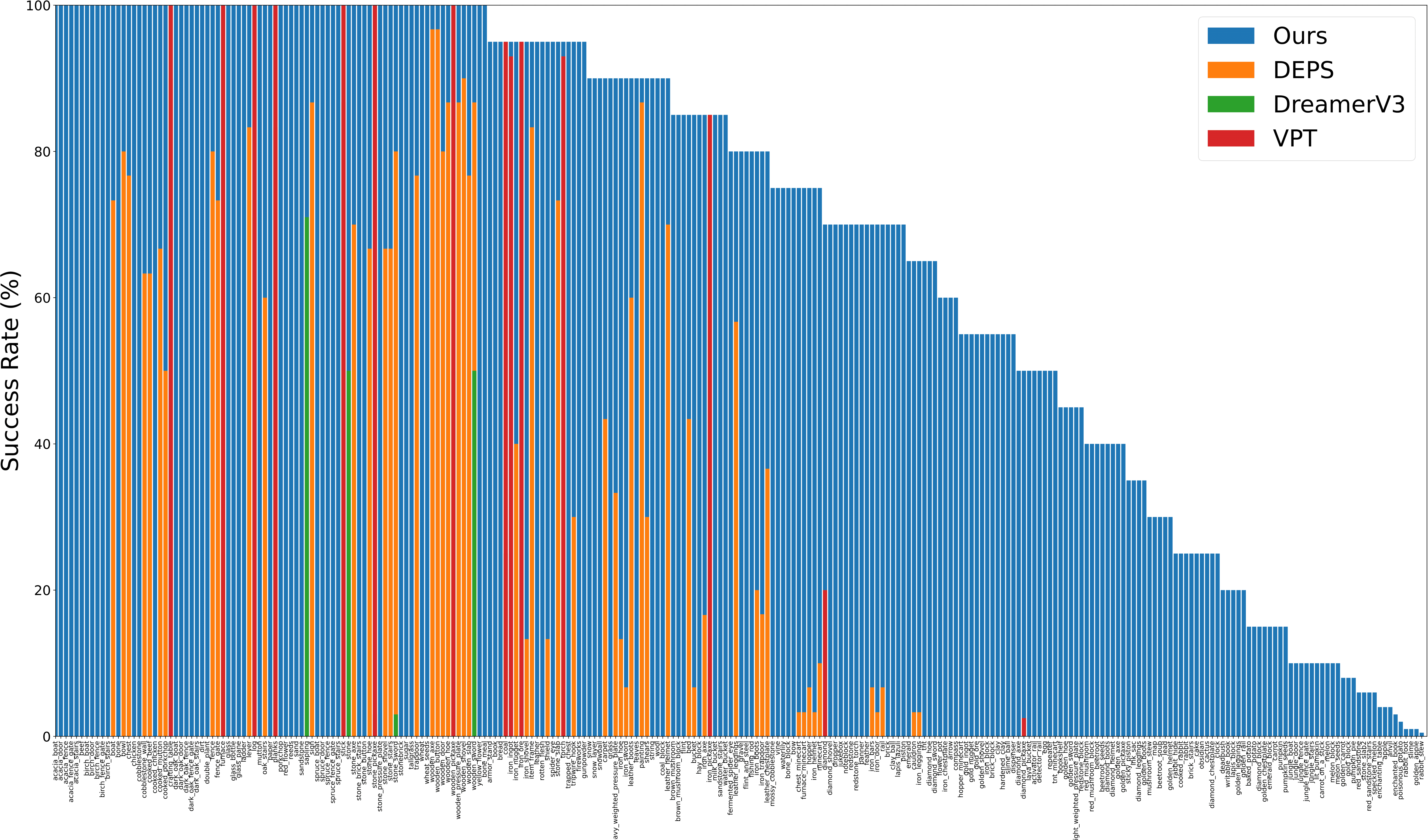

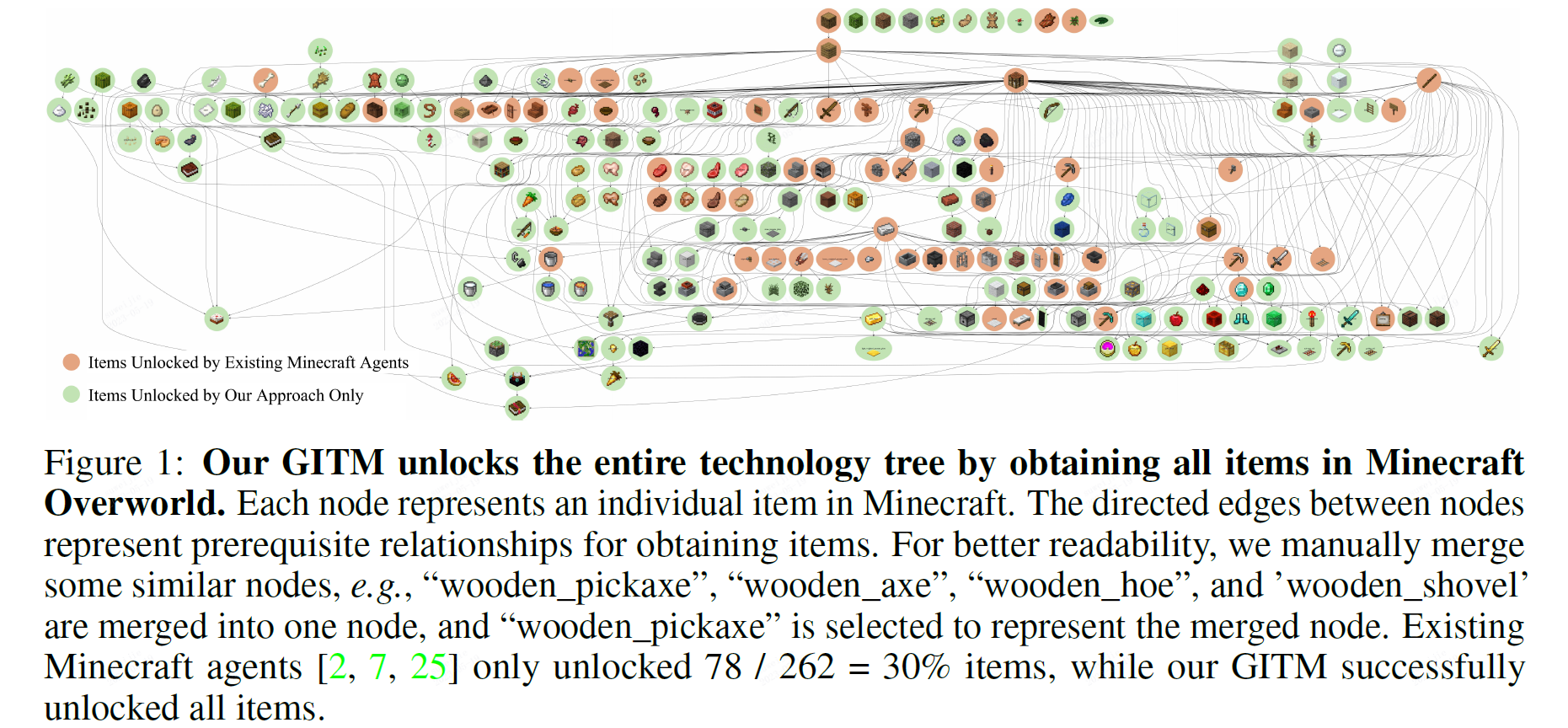

Broad task coverage. All previous agents combined can only achieve 30% completion rate of all items in the Minecraft Overworld technology tree, while GITM is able to unlock 100% of them.

-

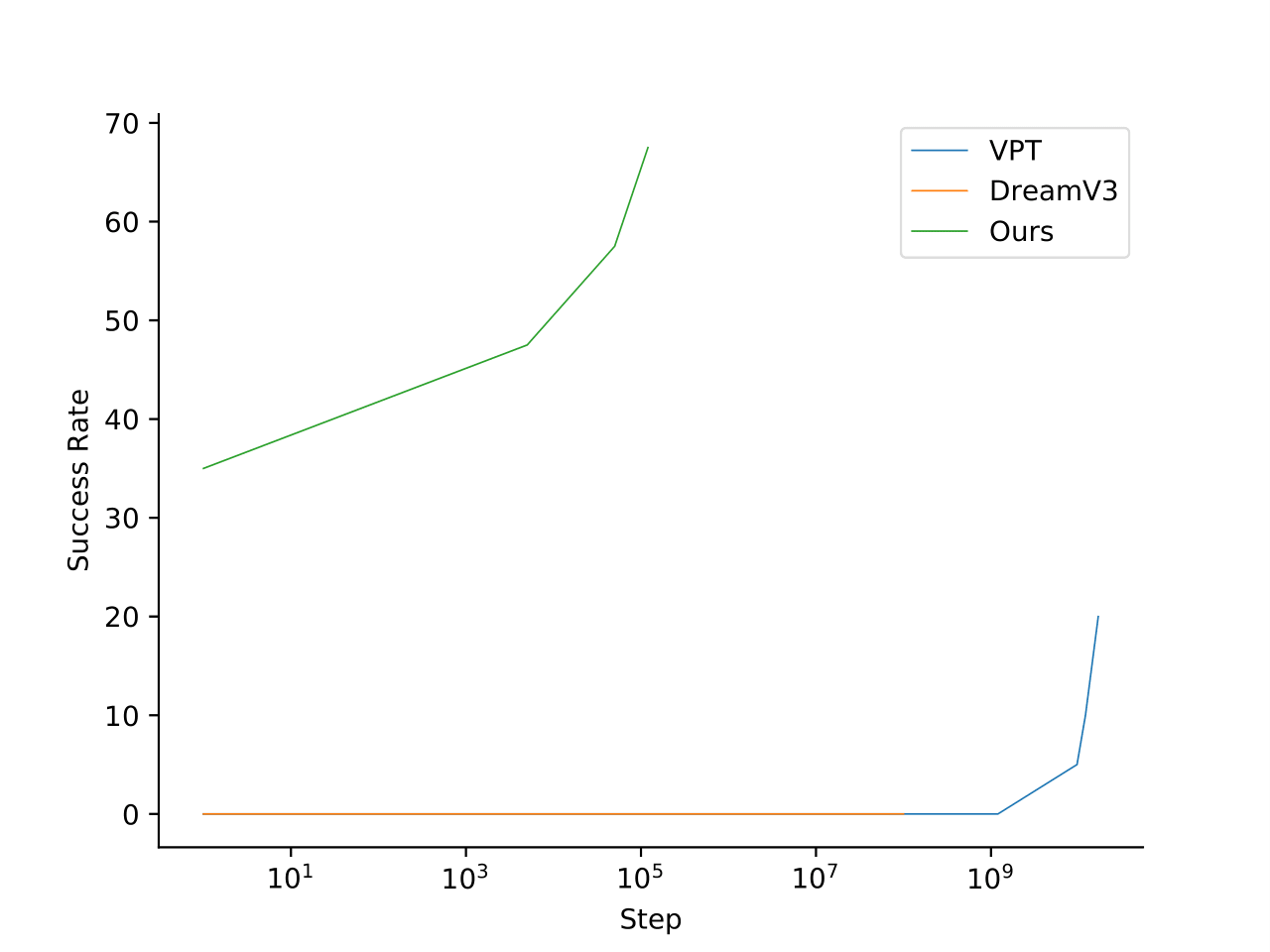

High success rate. GITM achieves 67.5% success rate on the "ObtainDiamond" task, improving the SOTA (OpenAI's VPT) by +47.5%.

- Excellent training efficiency. OpenAI's VPT requires 6,480 GPU days of training and DeepMind's DreamerV3 requires 17 GPU days, while GITM needs no GPUs and can be trained in 2 days on a single CPU node with 32 CPU cores.

This research shows the potential of LLMs in developing capable agents for handling long-horizon, complex tasks and adapting to uncertainties in open-world environments.

GITM can handle various biomes, environments, and day and night scenes, and can even deal with monster encounters with ease.

Due to GitHub's file size limit, the videos are played at 2x speed, and the ore-finding segments are played at 10x speed.

Obtain Enchanted Book

enchanted_book.mp4

The enchanted book is the ultimate creation in the technology tree of Minecraft Overworld.

Watch high-definition video on YouTube.

Obtain Diamond

diamond.mp4

Watch high-definition video on YouTube.

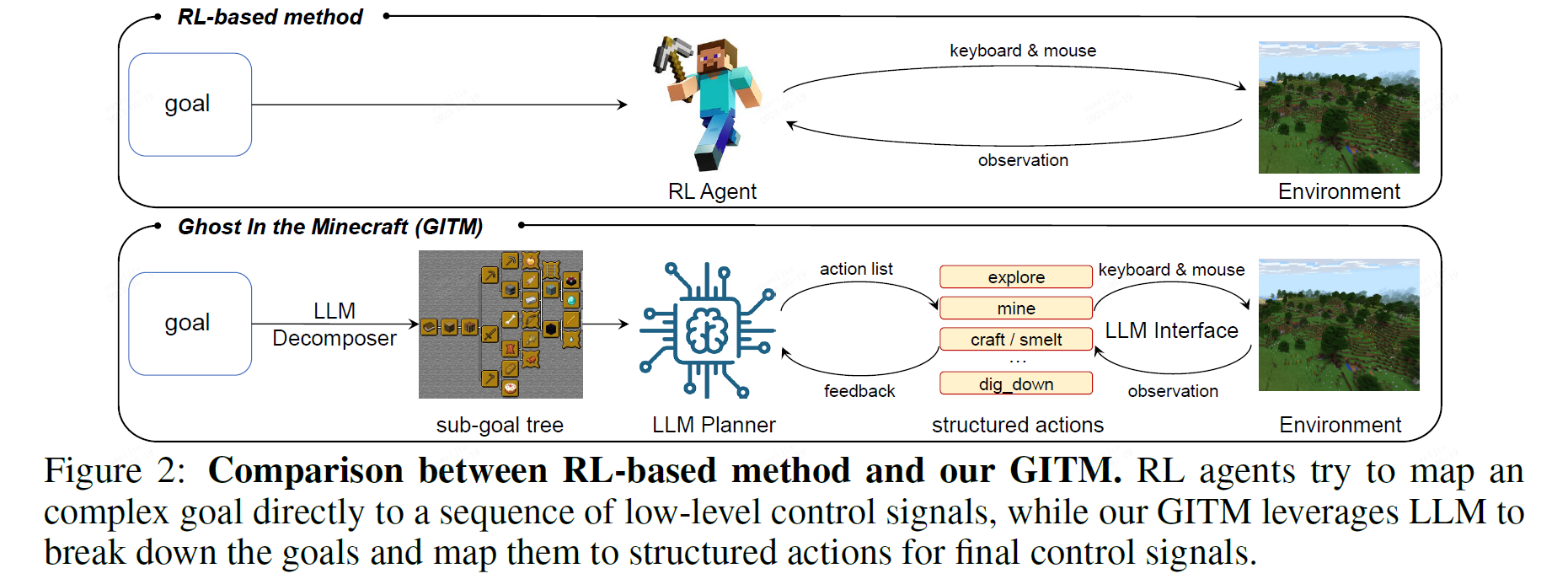

The biggest dilemma of previous RL-based agents is how to map an extremely long-horizon and complex goal to a sequence of lowest-level keyboard/mouse operations. To address this challenge, we propose our framework Ghost in the Minecraft (GITM), which uses Large Language Model (LLM)-based agents as a new paradigm. Instead of mapping goals directly to operations as RL agents do, our LLM-based agents take a hierarchical approach: they first decompose the goal into sub-goals, then into structured actions, and finally into keyboard/mouse operations.
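The three-level hierarchy described above can be pictured as nested data. The following is a minimal sketch, not GITM's actual code: the goal, the sub-goals, the action names (`explore`, `mine`, `craft`), and the operation strings are all illustrative assumptions chosen only to show how a plan expands level by level.

```python
from dataclasses import dataclass, field

# All names below (goal, sub-goals, action names, operation strings) are
# illustrative assumptions, not GITM's actual vocabulary.


@dataclass
class StructuredAction:
    name: str                                  # hypothetical action name
    args: dict
    operations: list = field(default_factory=list)  # keyboard/mouse level


@dataclass
class SubGoal:
    target: str
    actions: list = field(default_factory=list)


@dataclass
class Goal:
    target: str
    sub_goals: list = field(default_factory=list)


def flatten(goal: Goal) -> list:
    """Expand the three-level plan into the flat operation stream that a
    direct-mapping agent would have to produce in one step."""
    return [
        op
        for sub_goal in goal.sub_goals
        for action in sub_goal.actions
        for op in action.operations
    ]


goal = Goal("wooden_pickaxe", [
    SubGoal("log", [
        StructuredAction("explore", {"object": "tree"}, ["key:W", "mouse:look"]),
        StructuredAction("mine", {"object": "log"}, ["mouse:left_hold"]),
    ]),
    SubGoal("planks", [
        StructuredAction("craft", {"object": "planks"}, ["key:E", "mouse:left_click"]),
    ]),
])

operations = flatten(goal)
print(operations)
```

Each level only needs to reason about the level directly below it, which is the point of the hierarchy: the flat list returned by `flatten` is what a direct mapping would have to get right all at once.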

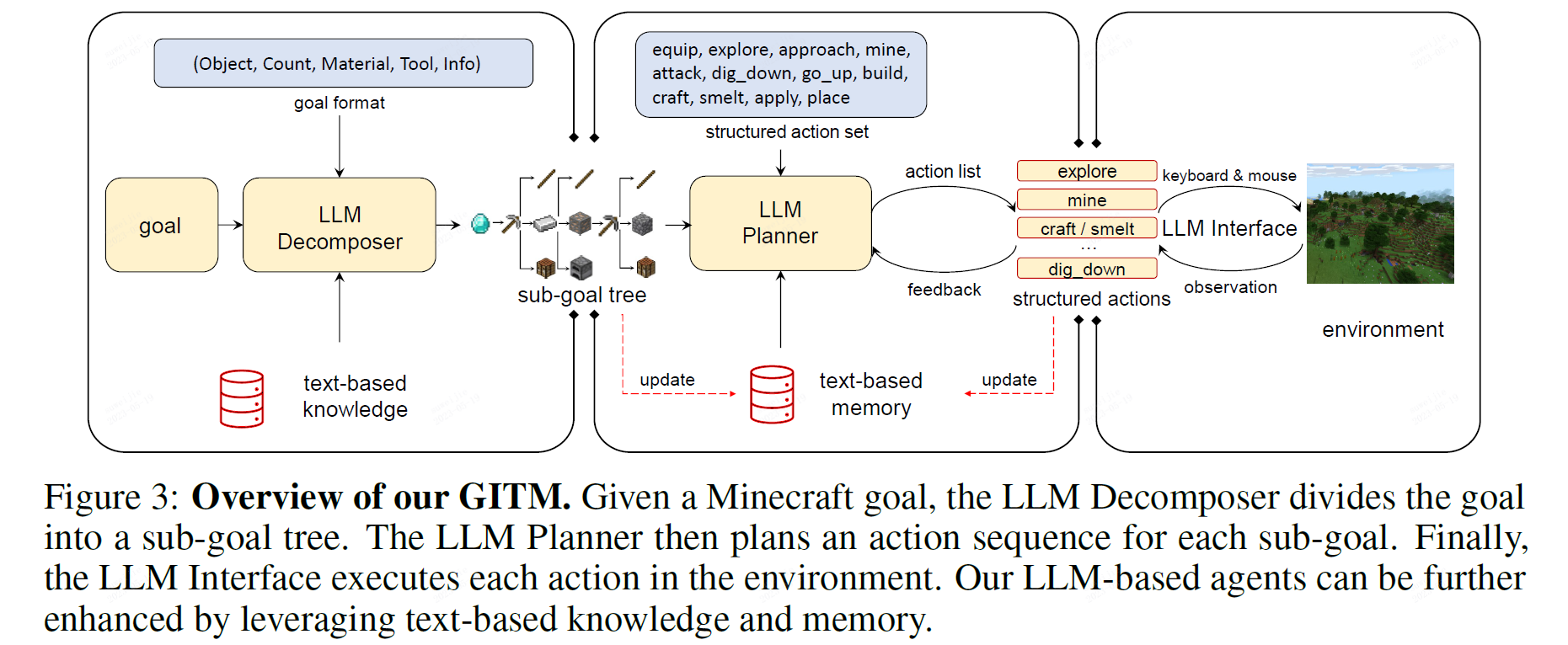

The proposed LLM-based agent consists of an LLM Decomposer, an LLM Planner, and an LLM Interface, which are responsible for decomposing goals into sub-goals, planning structured actions, and executing keyboard/mouse operations, respectively.

- LLM Decomposer first decomposes a goal in Minecraft into a series of well-defined sub-goals according to the text-based knowledge collected from the Internet.

- LLM Planner then plans a sequence of structured actions for each sub-goal. LLM Planner also records and summarizes successful action lists into a text-based memory to enhance future planning.

- LLM Interface executes the structured actions to interact with the environment by processing raw keyboard/mouse input and receiving raw observations.
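How the three components hand work to each other can be sketched as follows. This is a simplified illustration under stated assumptions, not the project's implementation: the class and method names, the prompt wording, and the canned `fake_llm` responses are all hypothetical, and the memory here simply stores the latest successful action list per sub-goal rather than an LLM-written summary.

```python
from typing import Callable, Dict, List

LLM = Callable[[str], str]  # prompt in, text out; stand-in for a real model


def parse_list(text: str) -> List[str]:
    """Split a comma-separated LLM reply into clean items."""
    return [item.strip() for item in text.split(",") if item.strip()]


class Decomposer:
    def __init__(self, llm: LLM, knowledge: Dict[str, str]):
        self.llm = llm
        self.knowledge = knowledge  # text-based knowledge, keyed by goal

    def decompose(self, goal: str) -> List[str]:
        hint = self.knowledge.get(goal, "")
        return parse_list(self.llm(f"Knowledge: {hint}\nList sub-goals for: {goal}"))


class Planner:
    def __init__(self, llm: LLM):
        self.llm = llm
        self.memory: Dict[str, str] = {}  # text-based memory of successes

    def plan(self, sub_goal: str) -> List[str]:
        reference = self.memory.get(sub_goal, "none")
        return parse_list(
            self.llm(f"Reference plan: {reference}\nPlan actions for: {sub_goal}")
        )

    def record_success(self, sub_goal: str, actions: List[str]) -> None:
        self.memory[sub_goal] = ", ".join(actions)


class Interface:
    def execute(self, action: str) -> bool:
        # A real interface would emit keyboard/mouse operations and read
        # observations; this stub always reports success.
        return True


def run(goal: str, decomposer: Decomposer, planner: Planner,
        interface: Interface) -> List[str]:
    executed: List[str] = []
    for sub_goal in decomposer.decompose(goal):
        actions = planner.plan(sub_goal)
        if all(interface.execute(action) for action in actions):
            planner.record_success(sub_goal, actions)
        executed.extend(actions)
    return executed


def fake_llm(prompt: str) -> str:
    """Canned replies so the sketch runs without a model."""
    if "List sub-goals" in prompt:
        return "log, planks"
    if prompt.endswith("log"):
        return "explore tree, mine log"
    return "craft planks"


planner = Planner(fake_llm)
knowledge = {"wooden_pickaxe": "planks are crafted from logs"}
executed = run("wooden_pickaxe", Decomposer(fake_llm, knowledge), planner, Interface())
print(executed)
print(planner.memory)
```

After one run, `planner.memory` holds a reference plan for each sub-goal, so a later call to `plan` for the same sub-goal would include that plan in its prompt.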

GITM achieves non-zero success rates for all items, which indicates a strong collecting capability, while all previous methods combined can only complete 30% of these items.

GITM only requires a single CPU node with 32 cores for training. Compared with 6,480 GPU days of OpenAI's VPT and 17 GPU days of DeepMind's DreamerV3, GITM improves the efficiency by at least 10,000 times.

If you find this project useful in your research, please consider citing:

@article{zhu2023ghost,
  title={Ghost in the Minecraft: Generally Capable Agents for Open-World Environments via Large Language Models with Text-based Knowledge and Memory},
  author={Zhu, Xizhou and Chen, Yuntao and Tian, Hao and Tao, Chenxin and Su, Weijie and Yang, Chenyu and Huang, Gao and Li, Bin and Lu, Lewei and Wang, Xiaogang and Qiao, Yu and Zhang, Zhaoxiang and Dai, Jifeng},
  journal={arXiv preprint arXiv:2305.17144},
  year={2023}
}